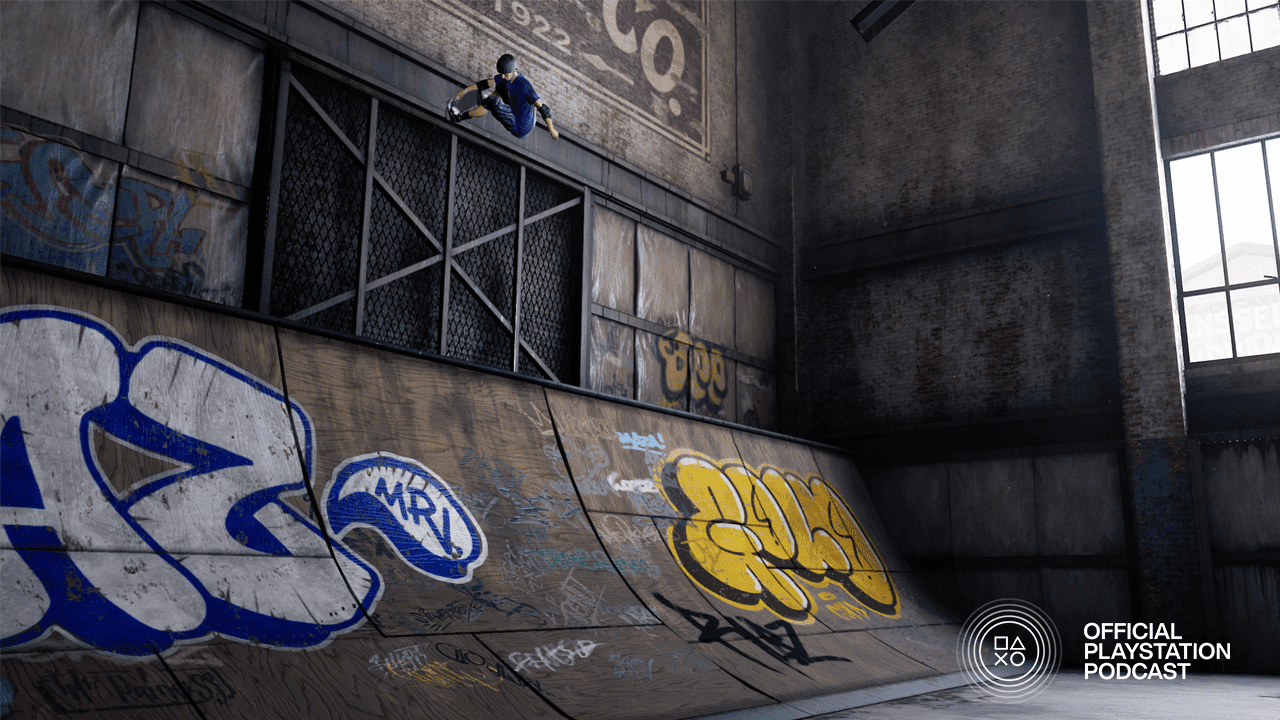

From Friday, August 14, at 5 p.m. CEST, the doors to the Warehouse Demo open for skaters around the globe who have digitally pre-ordered Tony Hawk’s Pro Skater 1 + 2, letting them start skating ahead of the full release on September 4.

It’s time to bring Tony back to the Warehouse. Here’s everything you need to know about this demo:

What’s in the demo?

In this taste of the game ahead of the full release, you skate as Tony Hawk in the level where the series began: the Warehouse from the original Tony Hawk’s Pro Skater.

In this demo you take part in a classic, no-frills Single Session. You have two minutes to rack up as many points as you can while exploring the Warehouse; a combo that is still running when the time limit expires carries the session into overtime.

Want another go? Just select the corresponding button! There is no limit on the number of sessions.

After each Single Session you will see a summary of your final score, your longest combo, and other individual stats about your skating in that session.

Pull off every trick

Tony Hawk’s Pro Skater 1 + 2 (and this demo) includes more than the tricks from the two original games: Vicarious Visions has also brought in additional moves from the series’ sequels for you to use.

These are the notable tricks you can use in Tony Hawk’s Pro Skater 1 + 2:

Manual – While the skater and board are still on solid ground, quickly pressing up and then down (forward and back) makes the skater lean onto the back of the board so that only two wheels touch the ground. You have to keep balancing this stance with the same inputs; otherwise the two front wheels hit the ground and end the Manual, or the skater falls backward and bails.

Nose Manual – Similar to the Manual, except that the input is down first and then up, so that only the two front wheels of the board touch the ground.

Flatland Tricks – If you press the Grind, Flip, or Grab button during a Manual or Nose Manual, the skater performs tricks such as the Casper and the Pogo.

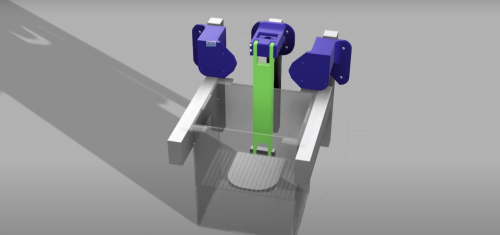

Revert – “The Revert became one of the most important pillars of the series, and we absolutely wanted to have it in from the start,” says Leo Zuniga, Lead Designer at Vicarious Visions. And you can rest assured that we have packed the Revert into Tony Hawk’s Pro Skater 1 + 2 and into this demo. When a skater performs a trick on a vert surface such as a halfpipe or bowl, pressing one (or both) of the switch stance buttons rotates the board 180°, adding to the current combo and potentially extending it further with a Manual or Nose Manual.

Spine Transfer – When another vert surface sits opposite the one the skater is on, for example two bowls or halfpipes side by side, pressing the same button combination as for the Revert while in the air lets you jump seamlessly from one surface to the other. The transfer is added directly to your current combo and may be the key to extra points from gaps.

Acid Drop – If a skater performs an Ollie, No Comply, Boneless, Nollie, or Fakie Ollie above a vert surface and presses the Revert button combination at the same time, they land in the transition. This is a great way to pick up speed, whether to start a combo after the Revert or to move into a Manual or Nose Manual.

Wall Ride – Seasoned skaters already know this one, but it bears repeating: if a skater jumps next to a vertical surface, such as a wall, and presses the Grind button, they ride along the wall on their board. This scores plenty of points and is a good way to start or extend a combo.

Wall Plant – If a skater jumps straight at a vertical surface and presses the button combination for a Boneless, they can push off the surface with one foot in the opposite direction and pick up more momentum instead of slamming straight into it.

Trick Transitions – By double-tapping the Grind, Grab, or Flip button, a skater can move from one trick into another. This works with almost any trick, and especially with grinds.

Tricks old and new

Alongside the other customizable features in this demo, such as those in the settings, you can fully customize and reassign Tony Hawk’s trick list yourself.

By default, Tony is ready to pull off the 900, the Kickflip McTwist, and the 5-0 Overturn Grind. However, two additional Special Trick slots are still open.

Want to have Tony do a Rowley Darkslide, the famous move of street skating icon Geoff Rowley? Or how about letting Tony try out some wild tricks from new skaters, such as Aori Nishimura’s “Hardflip Backside Nose Pick”? All of these and many, MANY more are available to fill the open slots or to replace Tony’s original Special Tricks.

Special Tricks are not the only thing you can customize freely: Grab, Flip, and Lip tricks can also be changed and reassigned however you like. So if you want to swap Tony’s Grab tricks so that he does a Cannonball instead of an Indy, you can.

Download information

Ready to experience it for yourself?

First, make sure you have digitally pre-ordered the game on the PlayStation Store. If you already have, you can download the Warehouse Demo from August 12 at 5 p.m. CEST. Go to your Library, where the demo will be ready to download. Once downloaded, the demo unlocks for play on August 14 at 5 p.m. CEST.

If you pre-order the game after the demo has been released, you will be prompted during checkout to download the demo.

Keep an eye out for more information on Tony Hawk’s Pro Skater 1 + 2 as the September 4 release draws closer.

Availability and launch date(s) of the Warehouse Demo are subject to change. Internet connection required.
