Maker Faire Rome, where everything started

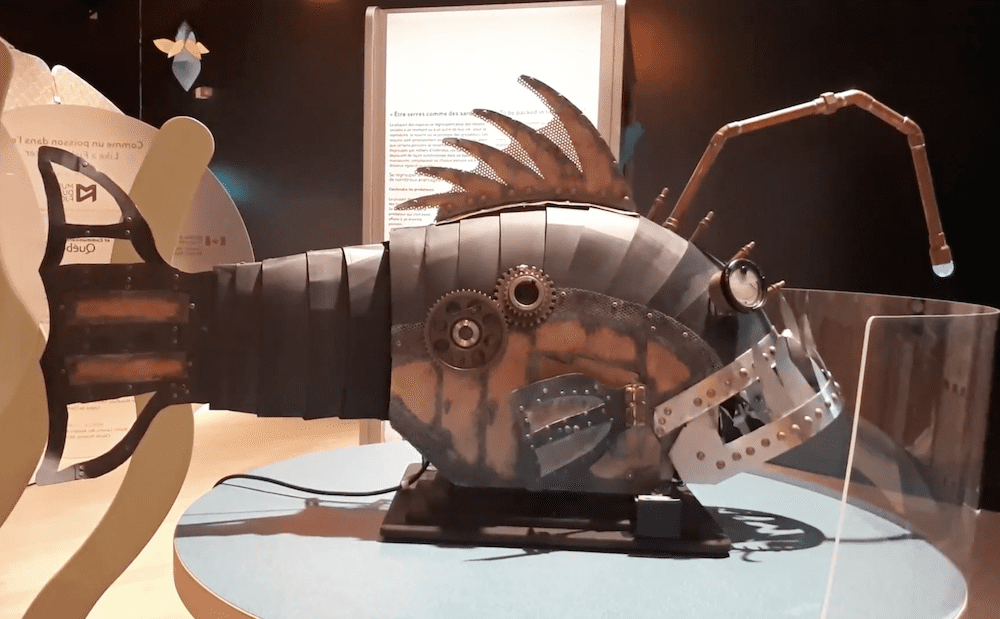

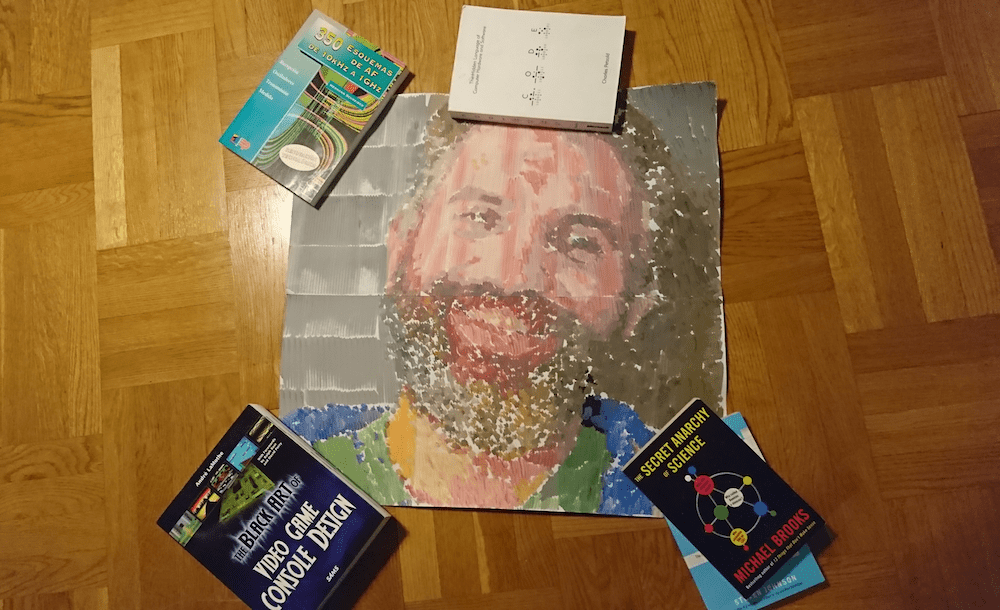

I participated in Maker Faire Rome back in December 2017. I came with the rest of the Arduino crew to spend two days talking to other makers at the show, checking out projects in the field of education and… getting a portrait painted. Seriously, though, I hadn’t planned to have a painting of my beard made at Maker Faire; it just happened. I was walking around with Marcus, one of the guys running the Arduino Education web infrastructure, when I saw my own picture on a computer screen at a nearby booth. We went closer to satisfy my curiosity, and then came the surprise: there was a robot painting my portrait!

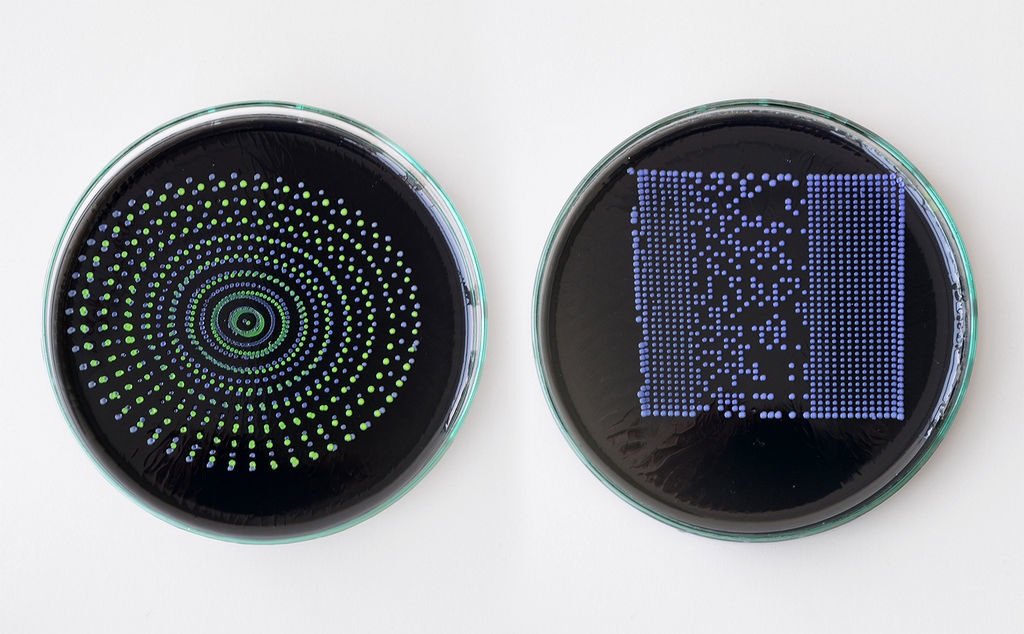

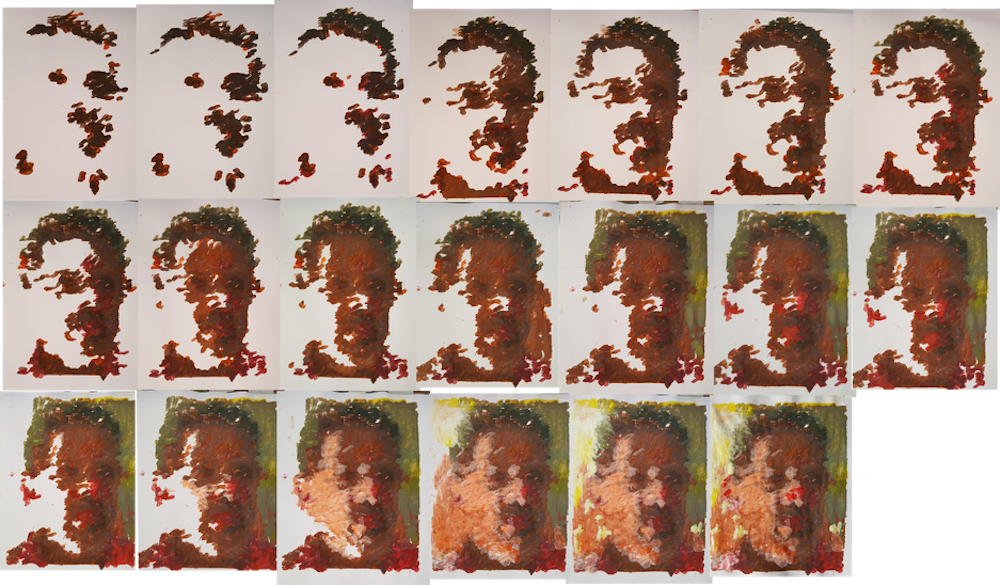

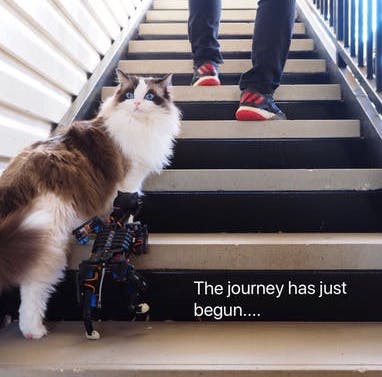

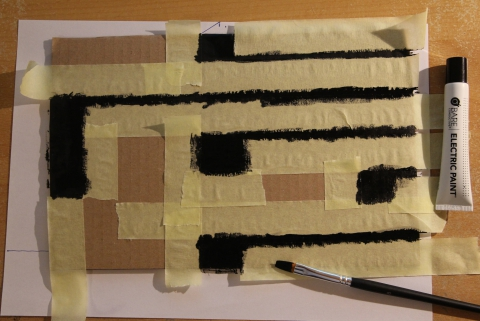

Making this portrait was not exactly quick: the robot goes back every couple of brush strokes to pick up more paint, and the colors are created by dipping the brush into small containers. Imagine a CNC machine of sorts moving on the X-Y plane and making a small movement with the brush to leave a mark on the canvas. My portrait is made of several A4 pages glued together, as you can see in the picture. In total, it takes several hours to produce a portrait like this one.
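For the curious, here is a rough sketch of what that back-and-forth could look like as a list of machine steps. This is only my own illustration, not Jose's code: the function name `plan_moves`, the `("load", x, y)` and `("stroke", x, y)` step format, and the default of two strokes per load are all assumptions made for the example.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]
Step = Tuple[str, float, float]


def plan_moves(marks: Iterable[Point], paint_pot: Point,
               strokes_per_load: int = 2) -> List[Step]:
    """Turn a list of canvas marks into steps for a two-axis machine.

    Hypothetical step format: ("load", x, y) sends the brush to the
    paint container, ("stroke", x, y) makes one mark on the canvas.
    """
    steps: List[Step] = []
    strokes_left = 0  # the brush starts dry, so the first step is a load
    for x, y in marks:
        if strokes_left == 0:
            # Travel back to the container to pick up more paint.
            steps.append(("load", paint_pot[0], paint_pot[1]))
            strokes_left = strokes_per_load
        steps.append(("stroke", x, y))
        strokes_left -= 1
    return steps


if __name__ == "__main__":
    for step in plan_moves([(10, 10), (12, 10), (14, 10)], paint_pot=(0, 0)):
        print(step)
```

With a reload every two strokes, almost half of the steps are trips to the paint container, which gives a feeling for why a full portrait takes hours.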

You can see the first traces made by the machine while painting my portrait in the following video.

[youtube https://www.youtube.com/watch?v=-eHGRJ1dQ7I?feature=oembed&w=500&h=281]

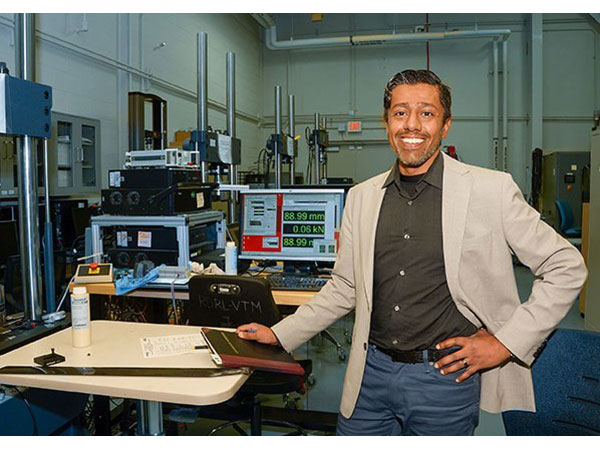

The painting robot was built by Jose Salatino, a painter turned roboticist who used to go around making portraits of famous musicians and giving the paintings away to them. He told me that this is how he started in the art world. At some point he wanted to bring together his life's passion and his hobby (electronics), so he got interested in painting robots (it seems there is a whole niche there I haven’t explored yet) and realized that very few people were really using a painter’s technique to mix the colors. That prompted him to learn more about machines in general, and machines that could paint in particular.

[Jose’s self portrait process, image courtesy of Jose Salatino]

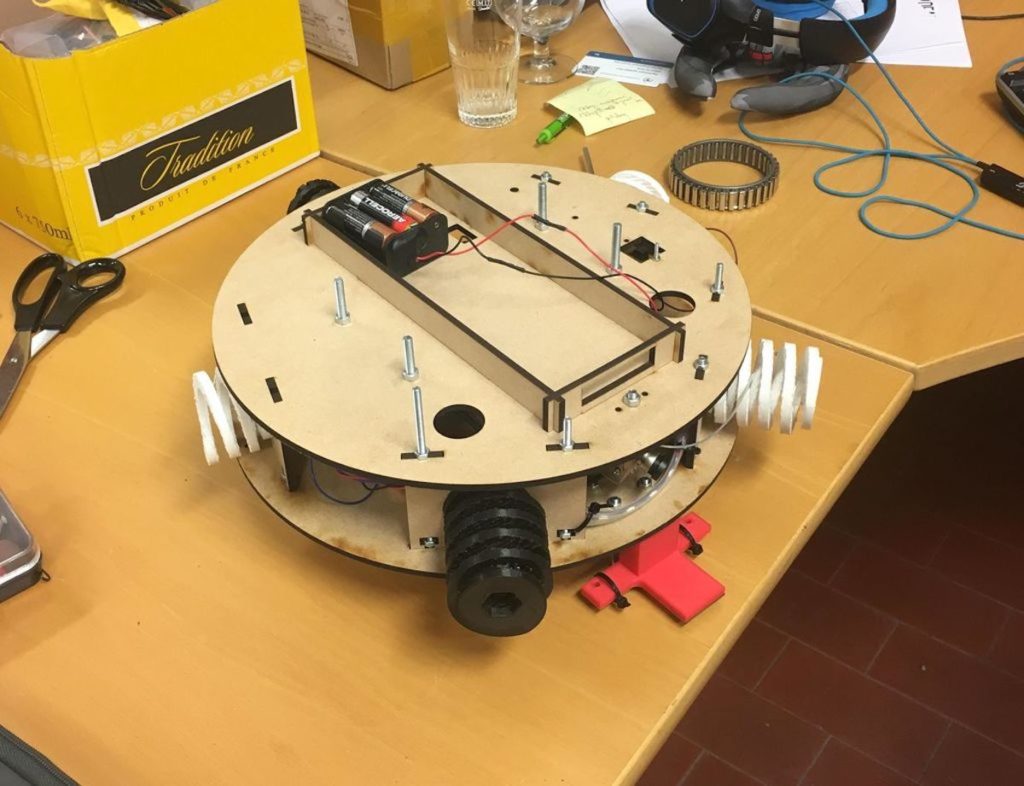

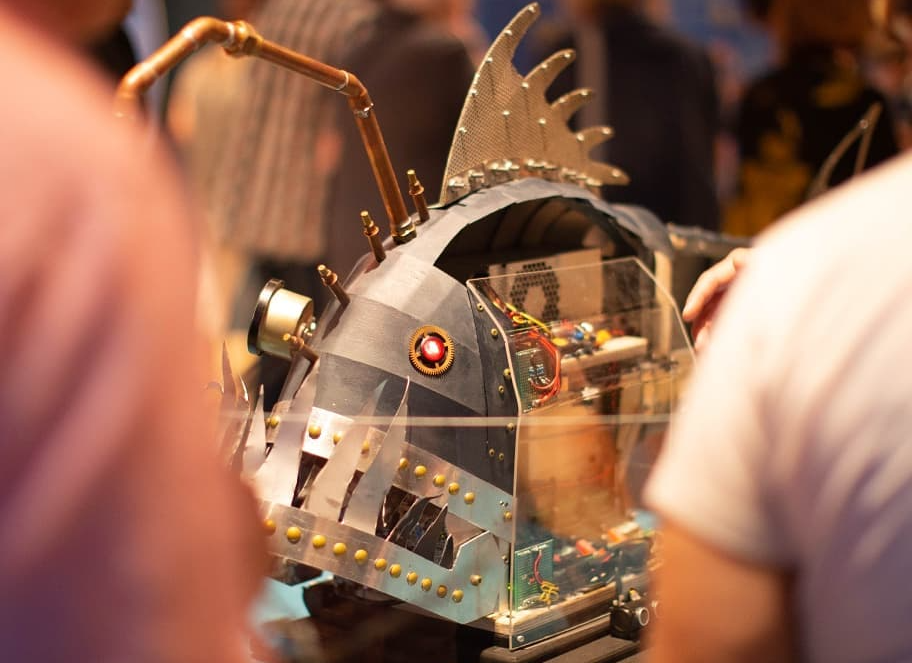

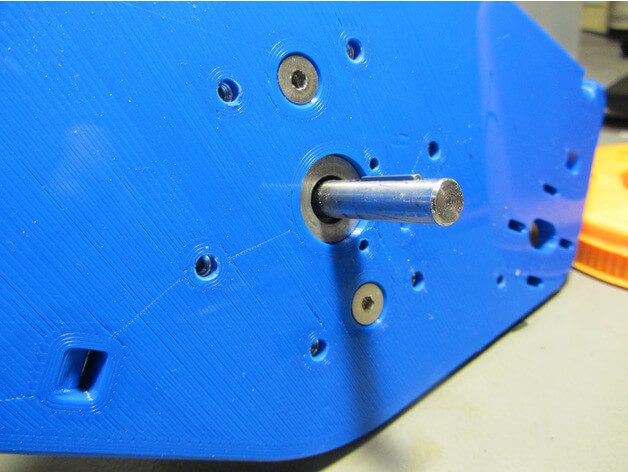

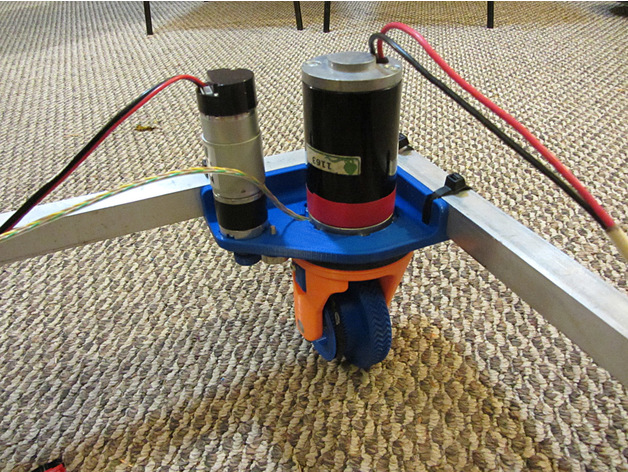

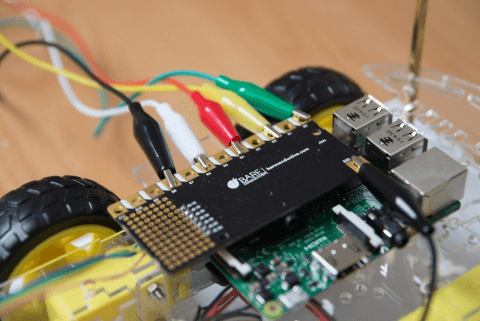

The machine itself

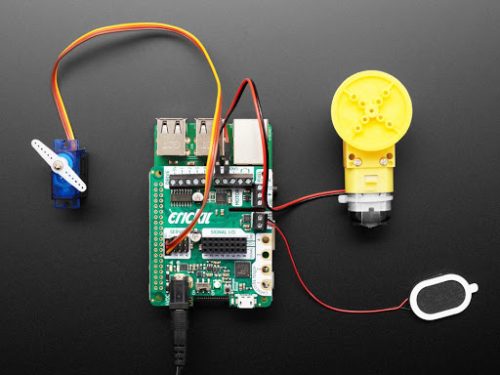

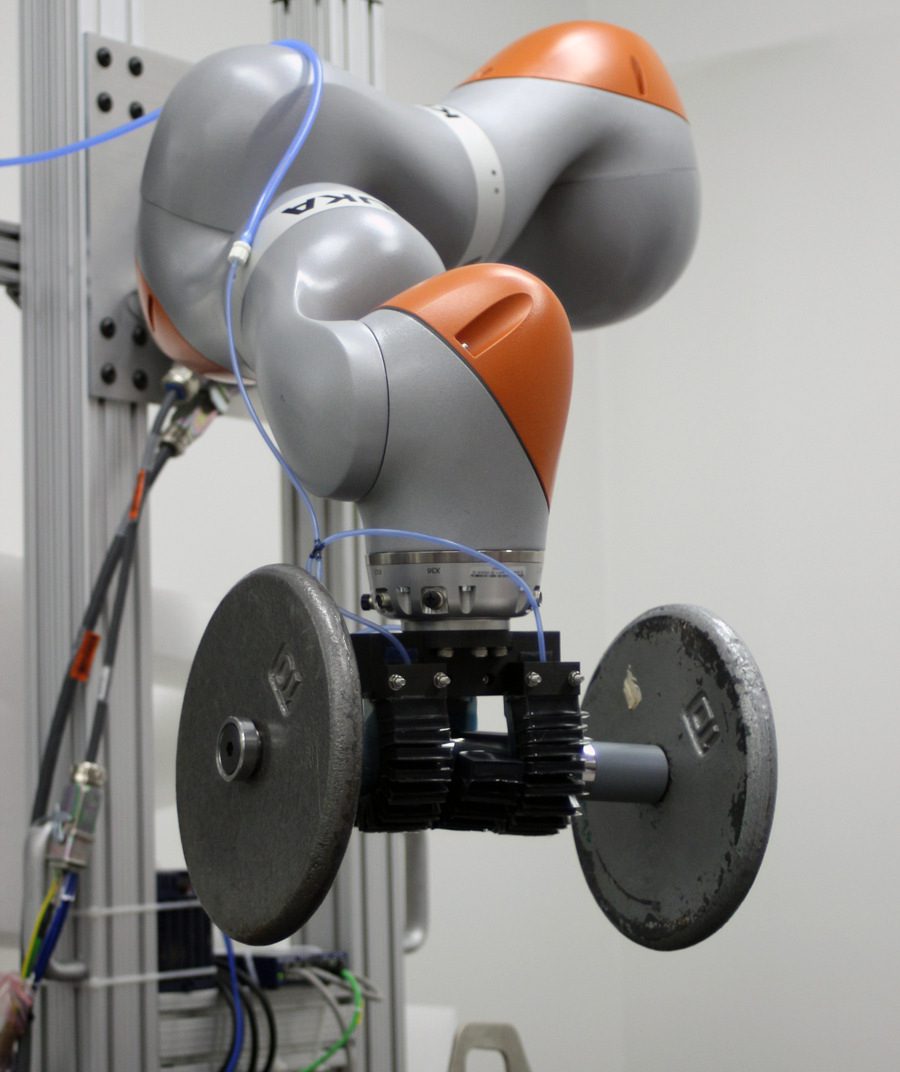

The painter robot, which I came to call Van Gogh because of its painting style, is a two-axis machine that can be programmed to move all over the canvas. The machine creates a color by first mixing basic pigments (blue, yellow, red) and then dipping the brush into one of a series of containers graded from white to black. This is, Jose told me, how he would mix the paint himself: first, dip into the containers of basic color (e.g. to make a bright green, the brush needs to dip once in blue and maybe three times in yellow); second, set the luminosity by dipping into a certain gray. When I asked whether the paint containers would get dirty this way, Jose replied that this is simply how painting goes for him: the colors get dirty on the palette, and you need to keep adding new color. That is when I realized I had been totally over-engineering the project in my head when I tried to imagine how I would do it. Check out the robot in action in the following video.

[youtube https://www.youtube.com/watch?v=oVwRXeMYCKI?feature=oembed&w=500&h=281]

Note the sponge used to clean the brush before reloading it with paint, yet another master move, in my opinion. You can read more about the machine by visiting the project’s write-up here.
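To make the dipping routine described above a bit more concrete, here is a minimal sketch of how a color could be turned into a sequence of brush stops. It is my own guess at the logic, not taken from Jose's machine: the function `dip_sequence`, the five gray containers, the `gray_N` labels, and the 0-to-1 luminosity scale are all assumptions for illustration.

```python
from typing import Dict, List

GRAY_POTS = 5  # assumed number of containers, from white (0) to black (4)


def dip_sequence(hue_dips: Dict[str, int], luminosity: float,
                 gray_pots: int = GRAY_POTS) -> List[str]:
    """Return the ordered list of stops the brush makes for one color.

    hue_dips maps a basic pigment to how many times to dip into it;
    luminosity goes from 0.0 (black) to 1.0 (white). Both conventions
    are hypothetical.
    """
    steps = ["sponge"]  # clean the brush before reloading it
    for pigment in ("blue", "yellow", "red"):
        steps.extend([pigment] * hue_dips.get(pigment, 0))
    # Pick the gray container closest to the requested luminosity.
    clamped = min(1.0, max(0.0, luminosity))
    gray_index = round((1.0 - clamped) * (gray_pots - 1))
    steps.append(f"gray_{gray_index}")
    return steps


if __name__ == "__main__":
    # Bright green: once in blue, three times in yellow, then a light gray.
    print(dip_sequence({"blue": 1, "yellow": 3}, luminosity=0.75))
```

Notice that nothing here tries to keep the containers clean or to track the exact color on the brush. That is the point Jose made: the palette gets dirty, and you just keep adding paint.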

The contest Jose is participating in

Jose has entered a robotics painting contest with the works made by his robot. One of the proposed pieces is actually my portrait. 🙂

The 2018 “3rd Annual” International Robotic Art Competition’s goal is to challenge teams to produce something visually beautiful with robotics – that is, to have a robot use physical brushes and paint to create an artwork.

Jose’s robot is all about brushes, as I already told you. And he is all in for the competition, for which he teamed up with his kids, who learned everything needed to make the robot paint as it does. The idea is that, if he wins the contest, 90% of the $100,000 USD prize will be donated to an NGO in the US. Are you interested in art? More specifically, are you into robotic art? Then visit the contest’s site, register, and vote for your favorite pieces. If you vote for Jose’s work, you can also help him choose an NGO to give the money to: Red Cross, Black Girls Code, Learn2Teach-Teach2Learn… As he lives in Barcelona, he doesn’t really know who he would give the prize to in the US. Jose is open to suggestions, but remember he needs your vote first!

Check the whole contest here and Jose’s entry here.

Read more about Jose

If you are interested in reading more about Jose’s project, his daughter, Flor, did a very nice interview and wrote a reflection on the role of the artist when a machine is making the work. This is something I bet many readers are wondering about by now: “if the machine paints it, who should be credited, the machine or the person who made the machine?” In my opinion, and since I am one of the models, I think we, the models giving away our image, should also be getting some credit, right? (Note: this last sentence was a joke!)
