Smart home technology has a ton of useful and fascinating use cases for humans, but what about our pets? For most of us, our furry friends are members of the family, and if we can make modifications to our home to help them, we do it.

The good news is that there are plenty of home automation tools you can use to make life easier and more fun for your pets, and many of these projects can be built with just a handful of starting materials and basic knowledge.

In this article, we’ll take a look at some of the ways smart homes can benefit pets, and explore some projects from the Arduino community.

Here are just a few of the ways smart home technology can improve your pets’ quality of life:

- Control the temperature through tools like automatic sensors and heating systems, ensuring the room is perfect for pets even when you’re not around

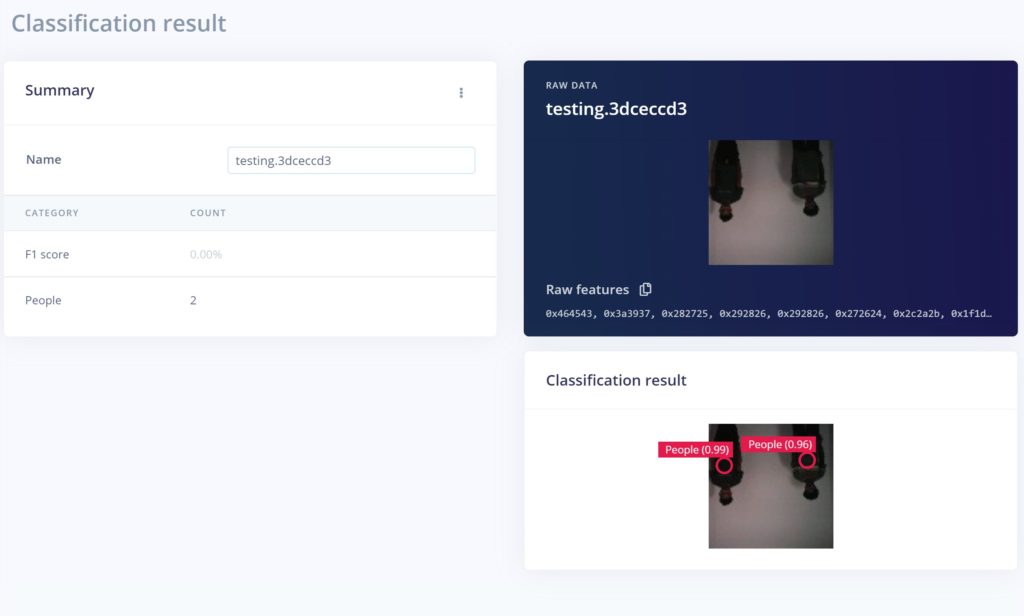

- Observe pets when you’re away, allowing you to quickly notice if they’re distressed or in trouble (or making trouble)

- Keep your pets fed by automatically filling their bowls at the right times

- Keep your pets entertained with robotic toys and activities

- Prevent theft with monitoring and tracking tools, alongside existing smart home security systems

Some examples of smart home pet tech

Now let’s take a look at some projects from the Arduino community geared toward making life easier for pets.

Remote pet feeder

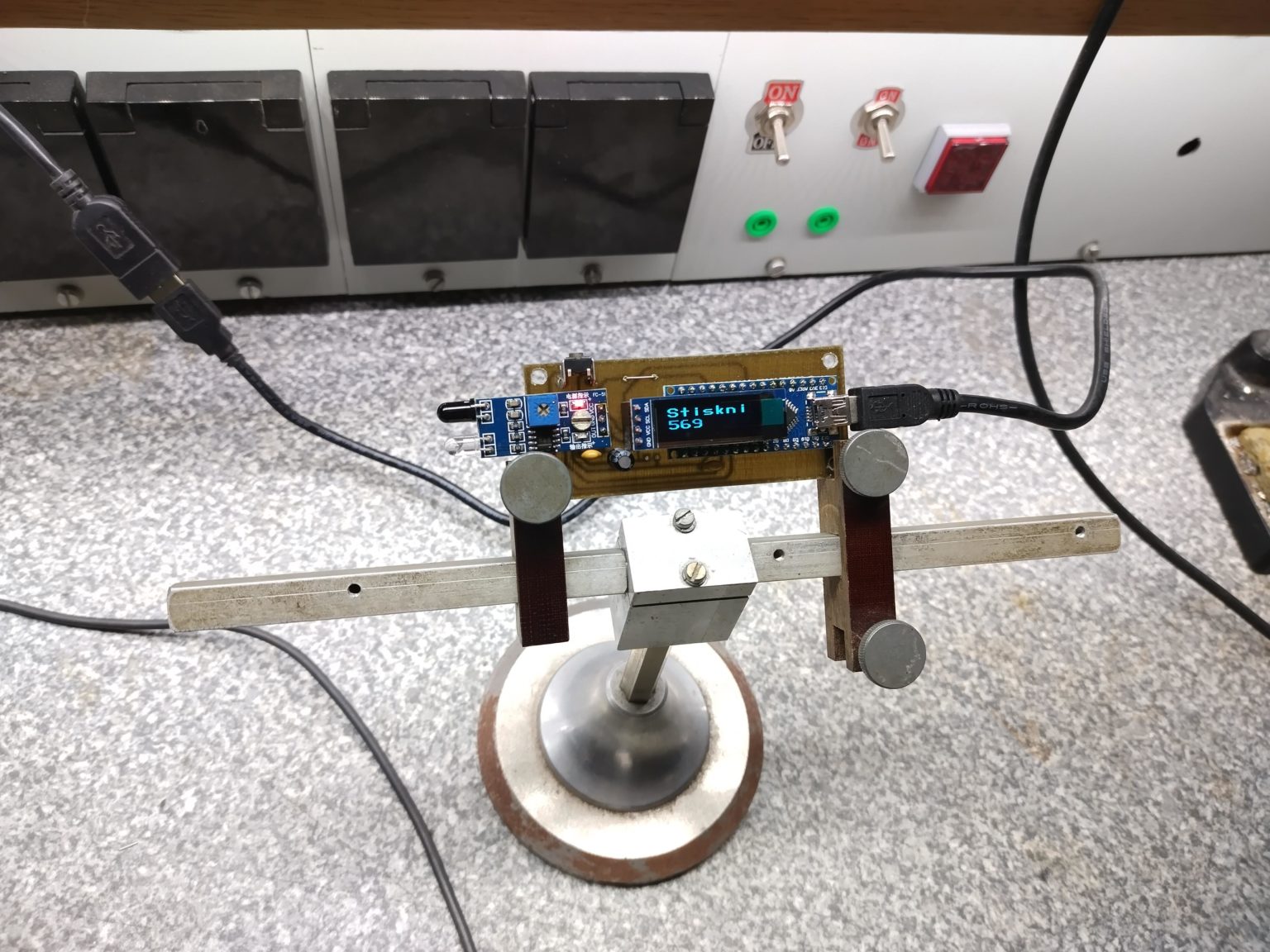

Community member Amal Mathew designed this project to make it possible to feed pets using a remote control. It’s pretty simple to get started: all you need is an Arduino Uno board (or similar), a plastic bottle, a servo motor, a TV remote, an IR receiver (TSOP1738), and a small piece of cardboard.

With just a few clicks of the remote, you can instruct the plastic bottle of food to release a certain amount to be enjoyed by your pet — without even leaving the sofa. Check out the full project here.
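To give a rough idea of the control logic behind a feeder like this, the sketch below maps remote-control button codes to how long the servo holds the bottle open. It is a minimal illustration under stated assumptions, not Amal Mathew's actual code: the button codes, the timings, and the names `Portion` and `portionForCommand` are all hypothetical, and the hardware side (reading the IR receiver, driving the servo) is left out so the logic can be compiled on its own.

```cpp
#include <cstdint>

// Hypothetical IR command codes. Real values depend on the remote and have
// to be read from the receiver first (for example by printing them over serial).
constexpr std::uint8_t kButtonSmall = 0x01;
constexpr std::uint8_t kButtonLarge = 0x02;

// How long to hold the dispenser open, in milliseconds.
// A duration of 0 means "do nothing".
struct Portion {
    std::uint16_t openMillis;
};

// Translate a decoded remote button into a portion size (assumed timings).
// Unknown buttons are ignored so stray IR signals never release food.
inline Portion portionForCommand(std::uint8_t command) {
    switch (command) {
        case kButtonSmall: return Portion{500};
        case kButtonLarge: return Portion{1500};
        default:           return Portion{0};
    }
}
```

On the board, the main loop would pass each decoded button code to `portionForCommand` and, when `openMillis` is non-zero, rotate the servo to its open position, wait that long, then rotate it back.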

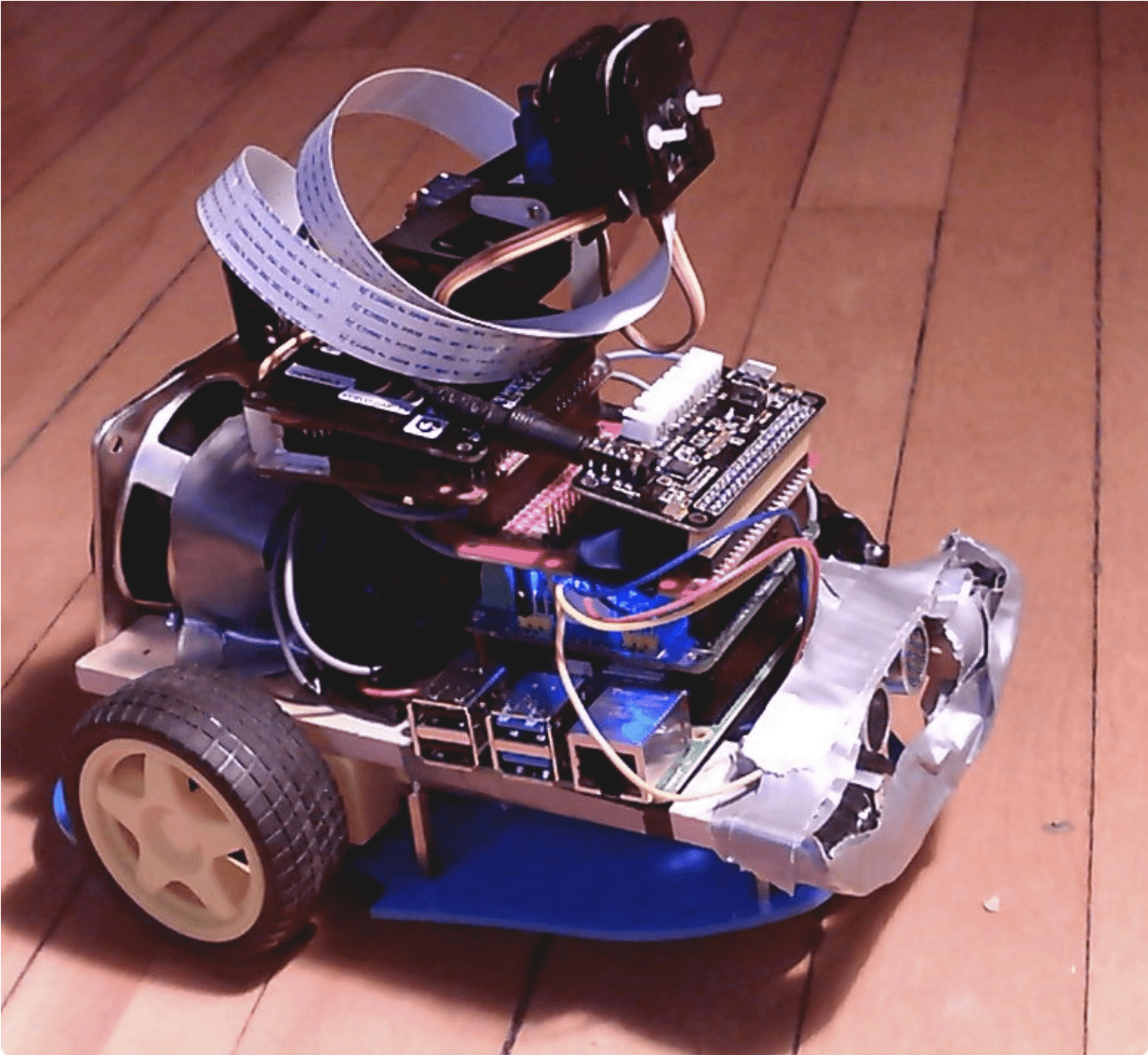

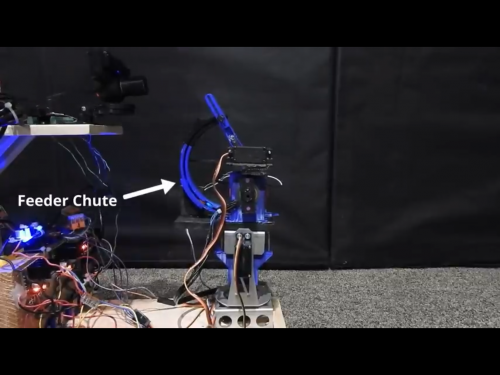

Pet entertainment centre

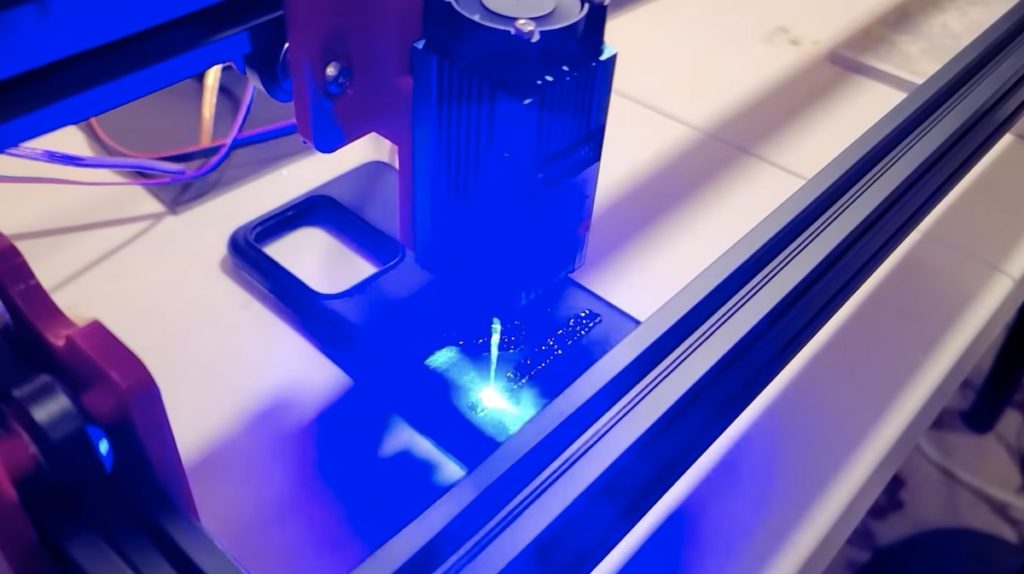

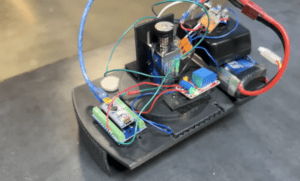

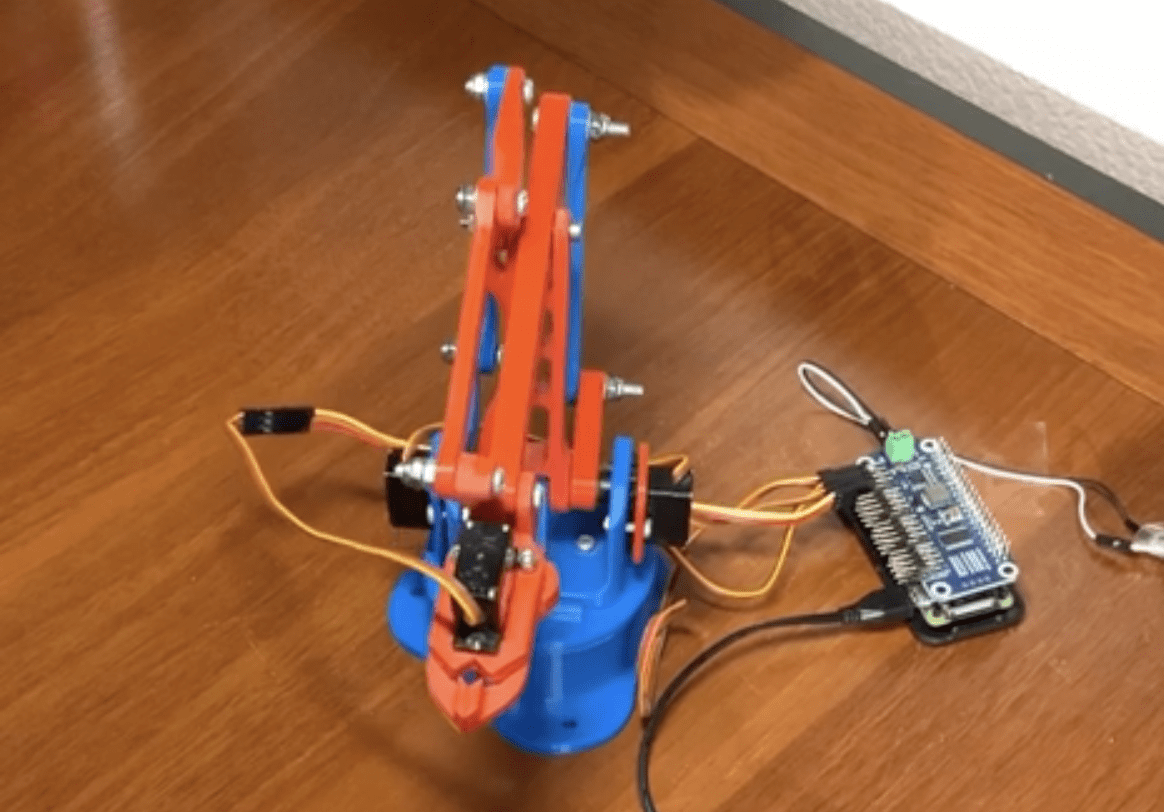

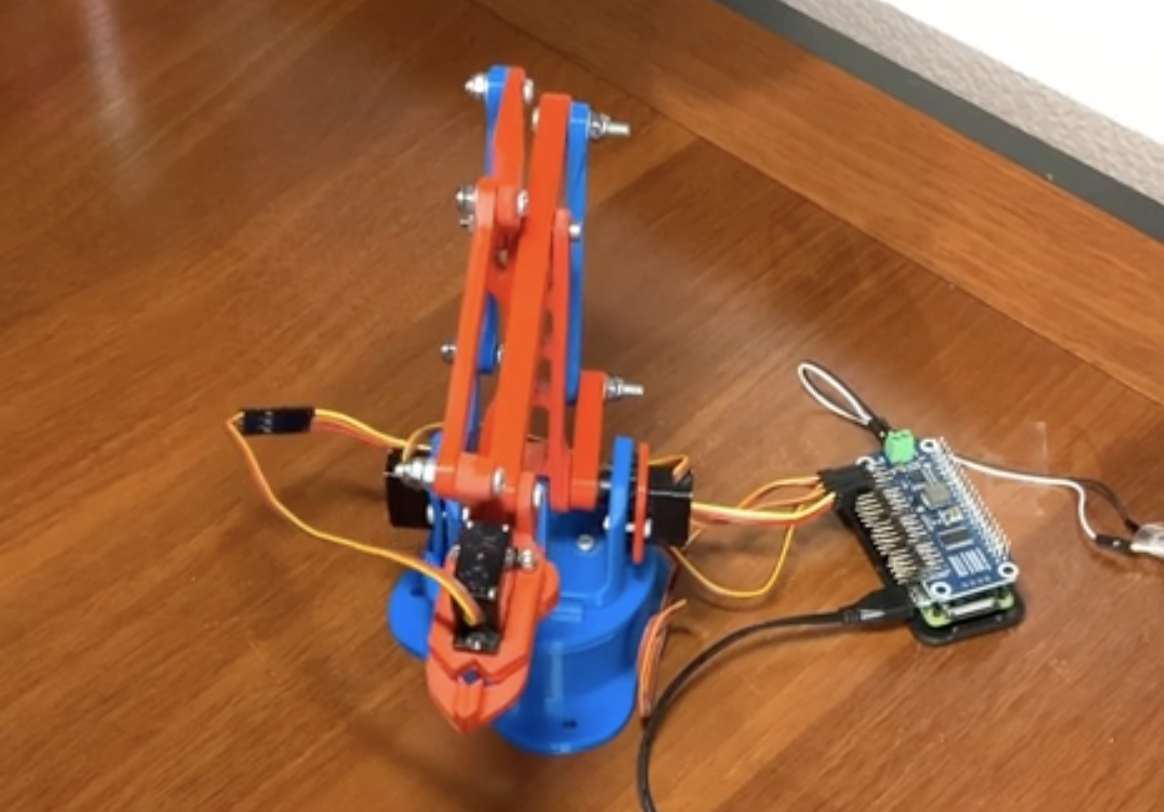

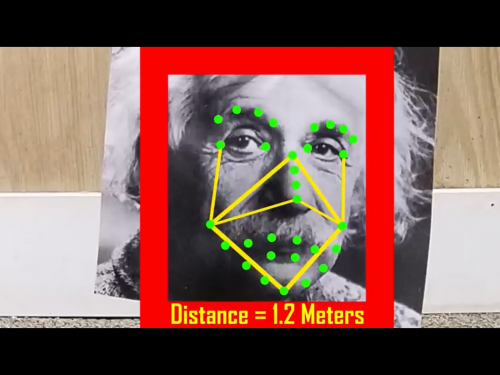

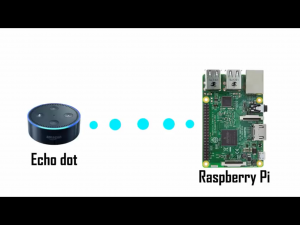

Vítor Barbosa was inspired by the Alexa and Arduino Smart Home challenge to build the pet entertainment centre along with two friends. As well as feeding pets, it keeps them entertained with a laser toy, although this is better suited to cats than dogs.

Every pet owner knows how useful it is to have a toy to keep your pets distracted when you need to focus on something else, and Vítor’s project uses smart home technology to build the perfect automated solution.

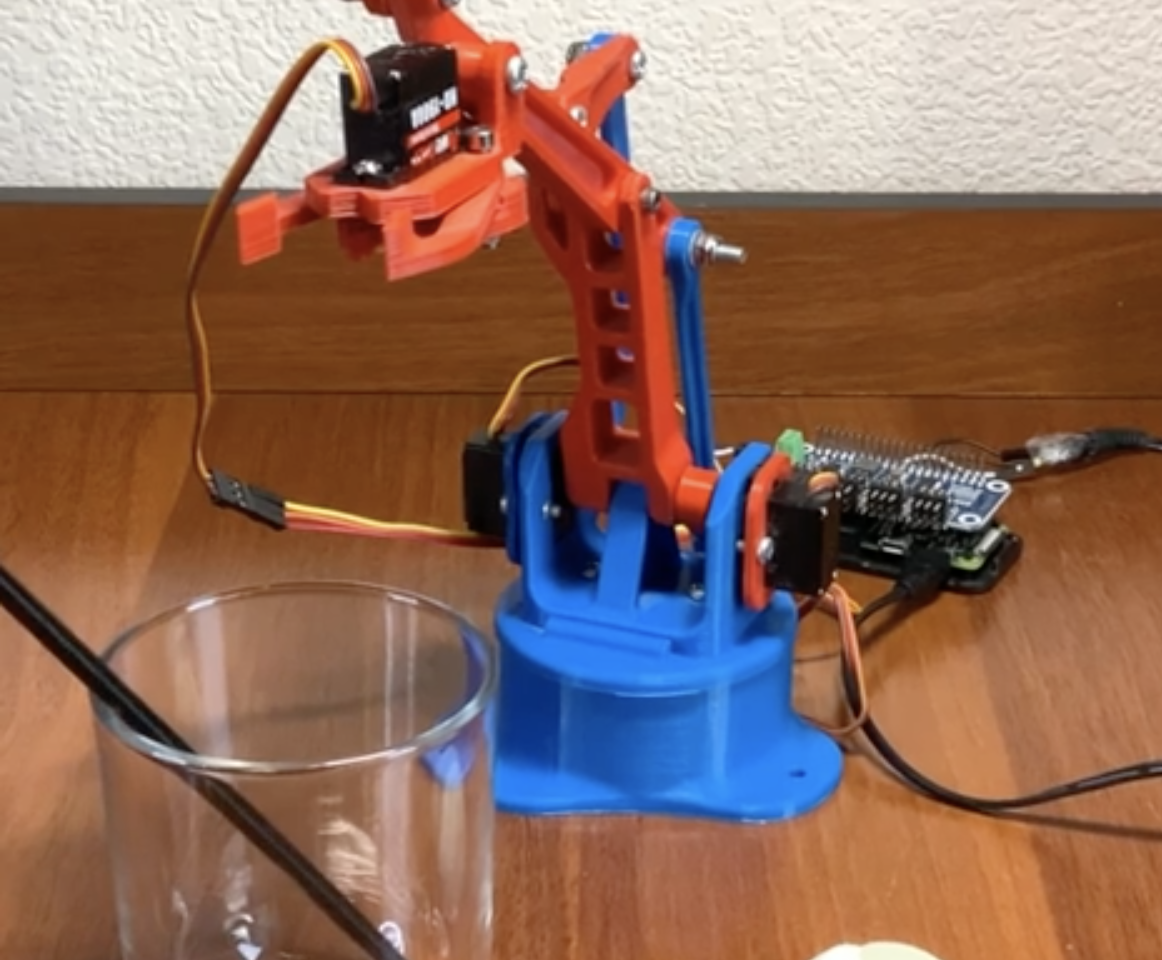

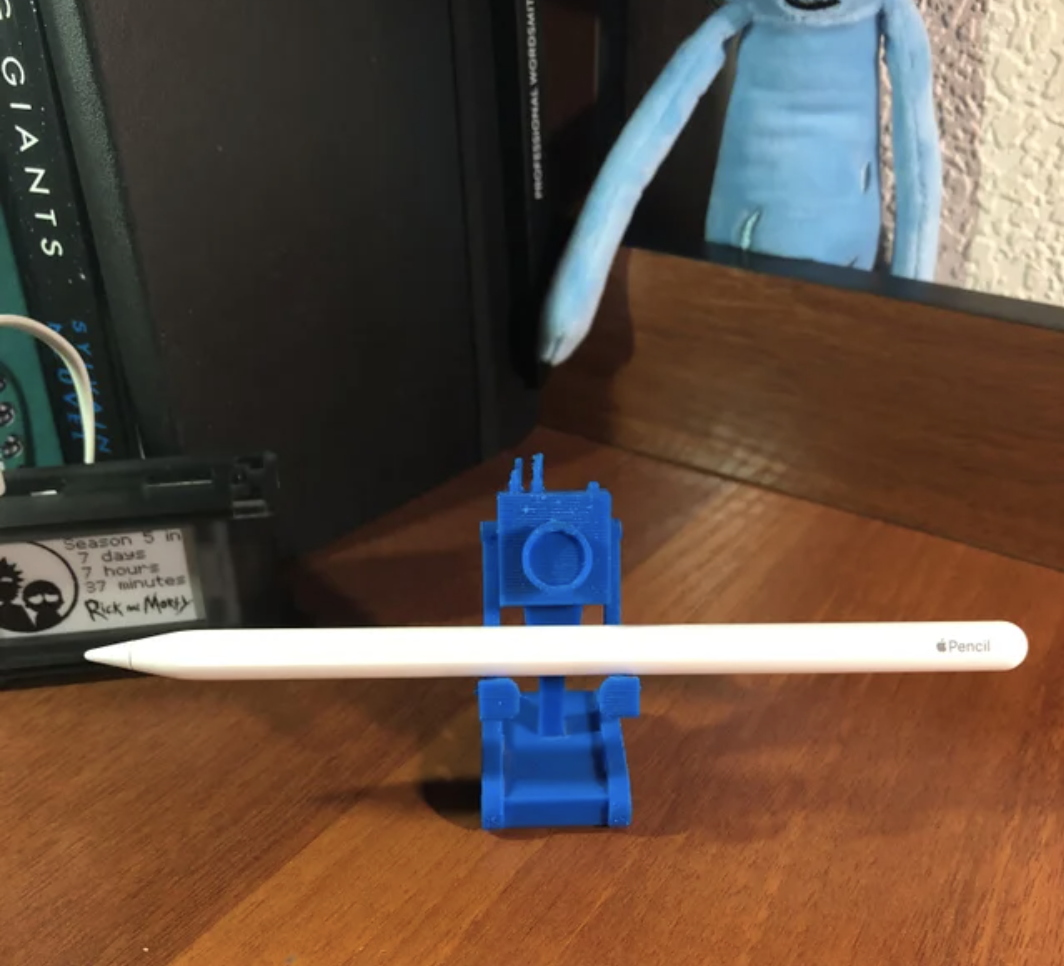

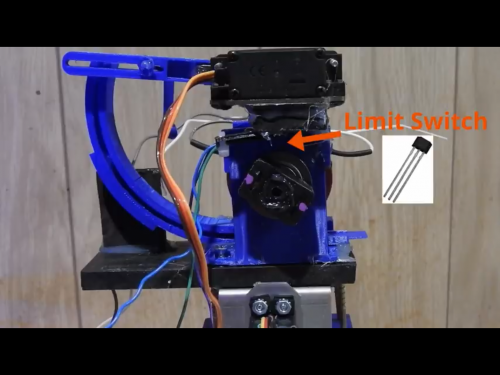

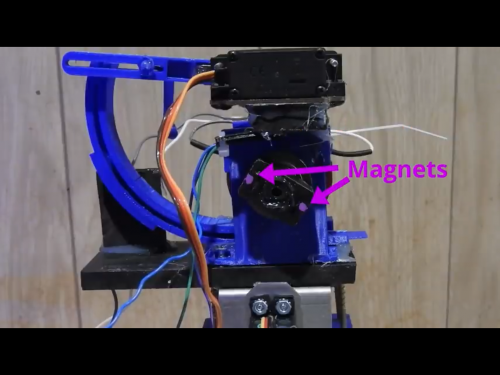

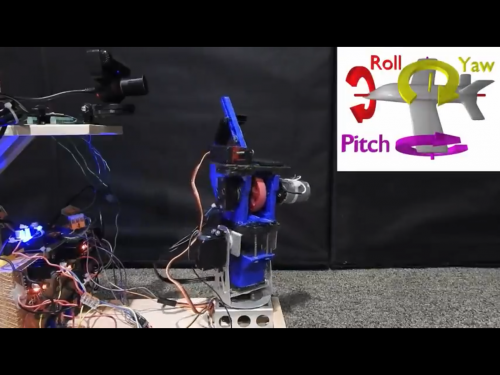

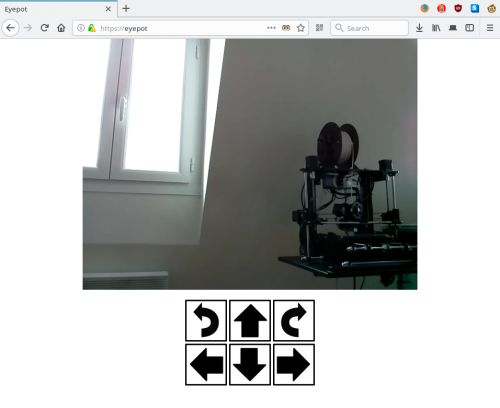

Pet feeder with 3D printed parts

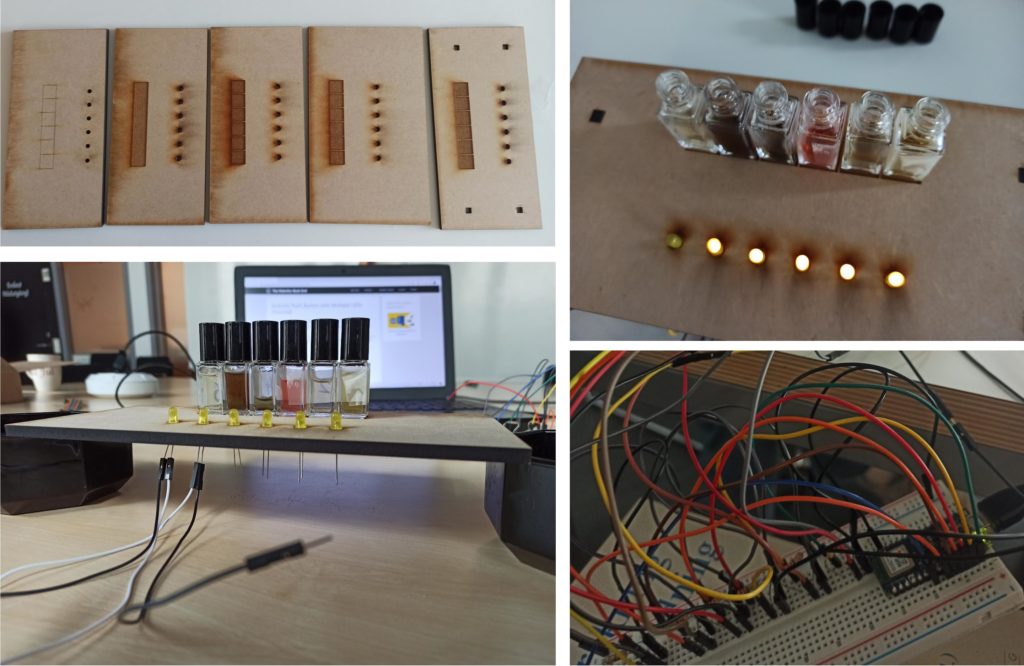

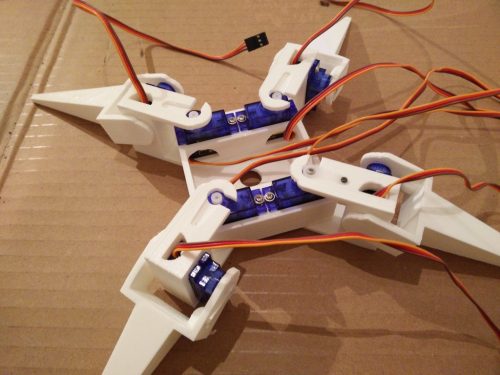

Before COVID-19, russo08 was working long, unpredictable hours and often ended up getting home late due to flooding and other disruptions. This made it tricky to feed his dog on time every day.

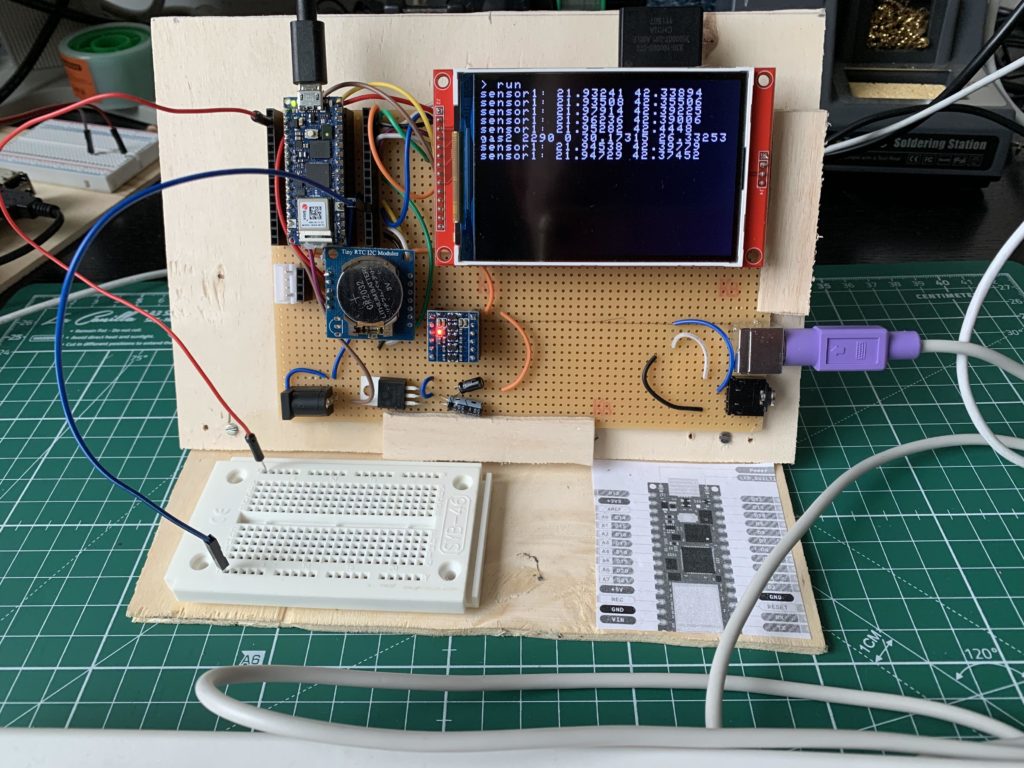

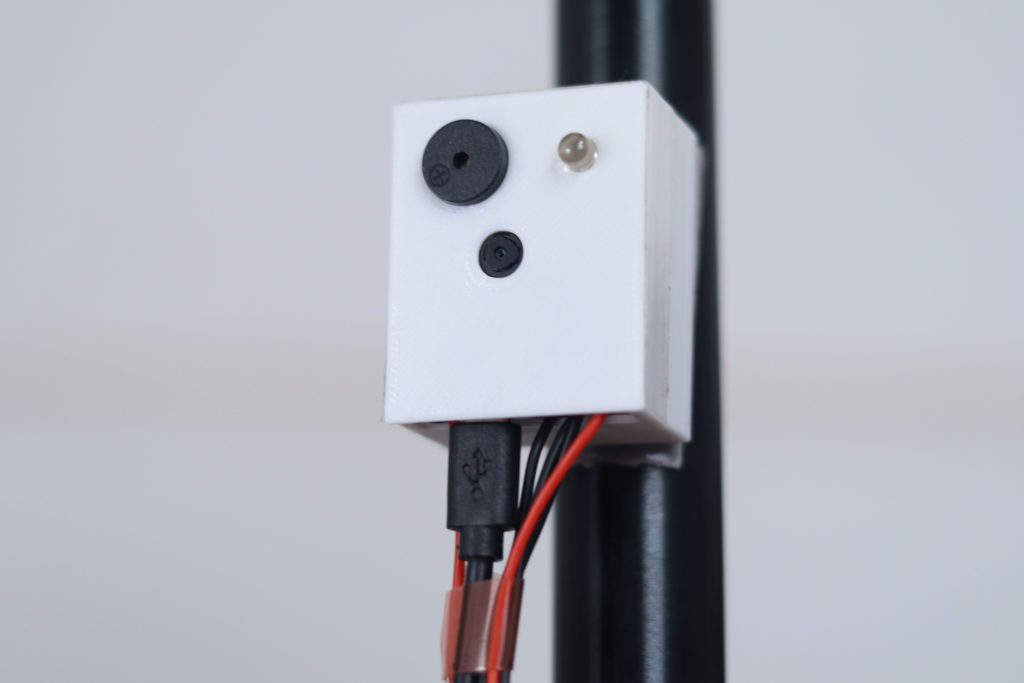

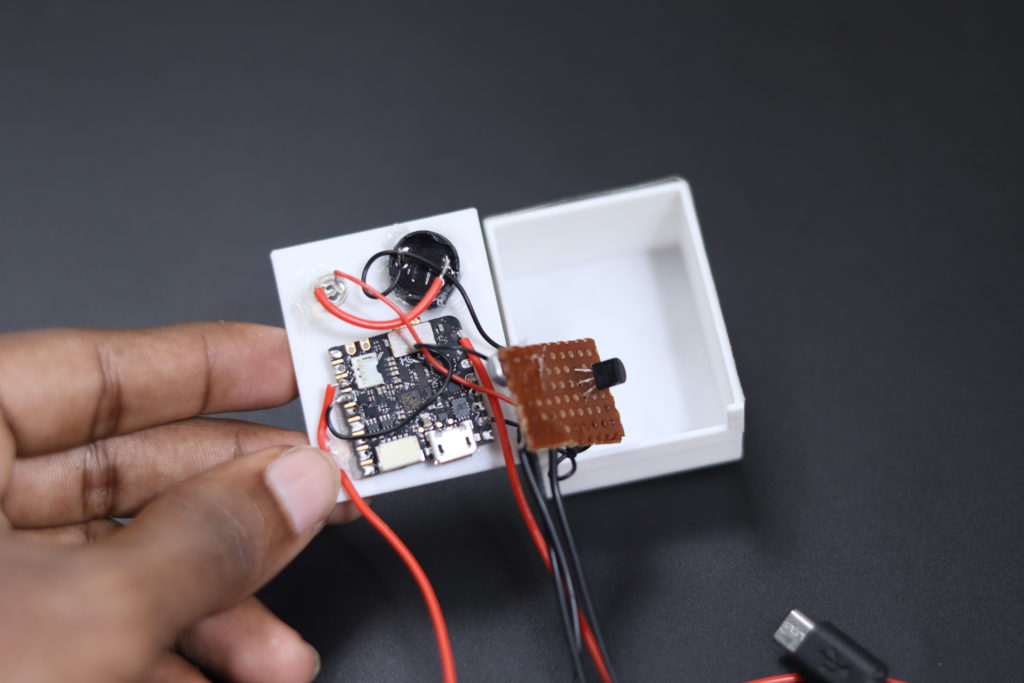

To ensure his pet was fed at the right times every day, russo08 decided to build an automated solution. He used an Arduino microcontroller and a handful of other components, including 3D printed parts, to build a custom dog feeder. Because of random power outages in the area, it was essential that the feeder could recover after losing power. It also needed a way to deal with food getting stuck in the dispensing mechanism.

Here’s the full list of features on russo08’s feeder:

- Two feedings per day

- Accurate timekeeping with real-time clock

- Manual time change of real-time clock

- Manual feeding option

- LED indication of Hall sensor and real-time clock failure

- Overview of feed times, current time, and feeding completions on the main screen

- Easy-to-navigate menu

- Power outage feeding resume (will feed when the power comes back on)

- Feeding times and completions are safely stored in EEPROM

- Servo “jiggle” in the event of food getting stuck while dispensing
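Two of these features, resuming a feeding after a power outage and jiggling the servo when food gets stuck, can be sketched in a few lines. The code below is one possible approach under stated assumptions, not russo08's actual implementation: the names `Feeding`, `feedingIsDue`, and `dispenseWithJiggle` are hypothetical, the sensor check and jiggle motion are passed in as callbacks instead of touching real hardware, and resetting the completion flags at midnight is left out for brevity.

```cpp
#include <cstdint>

// One scheduled feeding: the time of day it is due and whether it has
// already been dispensed today. On a real feeder the "done" flag would be
// kept in EEPROM so it survives a power cut.
struct Feeding {
    std::uint16_t dueMinuteOfDay;  // e.g. 7 * 60 for 07:00
    bool done;
};

// A feeding should run once its time has arrived (or passed) and it has not
// been completed yet. Comparing with ">=" instead of waiting for an exact
// clock match means a feeding missed during an outage is caught as soon as
// power returns and the real-time clock is read again.
inline bool feedingIsDue(const Feeding& feeding, std::uint16_t nowMinuteOfDay) {
    return !feeding.done && nowMinuteOfDay >= feeding.dueMinuteOfDay;
}

// Try to dispense; if the rotation is not confirmed, jiggle and try again.
// rotateCompleted: hypothetical callback that turns the dispenser and returns
//                  true when a sensor confirms the movement finished.
// jiggle:          hypothetical callback that wiggles the servo to free food.
// Returns false if the dispenser is still stuck after maxJiggles jiggles.
template <typename RotateFn, typename JiggleFn>
bool dispenseWithJiggle(RotateFn rotateCompleted, JiggleFn jiggle, int maxJiggles) {
    for (int attempt = 0; attempt <= maxJiggles; ++attempt) {
        if (rotateCompleted()) {
            return true;
        }
        if (attempt < maxJiggles) {
            jiggle();
        }
    }
    return false;
}
```

In a full sketch, the main loop would check `feedingIsDue` for each stored feeding, call `dispenseWithJiggle` when one is due, and mark the feeding as done only when it returns true. A false result is the kind of condition a failure LED could report.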

Improve your pet’s life with Arduino

Arduino’s solutions make it easier than ever to build your own smart home projects with relatively few starting materials and without the need to be a seasoned expert. Our community is filled with examples of DIY home automation projects that improved our makers’ lives in all kinds of ways.

When it comes to pets, Arduino’s technology can be used to build smart solutions like the ones in this article, making it easy to feed, water, protect, and care for our pets even when we aren’t physically present.


Find out more about how Arduino works and get started with your own projects by checking out the main website.

