
By following our step-by-step guide, you will learn how to display rainbow and heart images on the Sense HAT’s LED matrix, and how to show a custom scrolling message. If you don’t have a Sense HAT, you can still try out the code using the Sense HAT Emulator in Raspbian.


Why use a Sense HAT?

The Sense HAT sits on top of your Raspberry Pi and adds the ability to sense and report details about the world around it. It can measure temperature, humidity, and air pressure, for example, and it can detect orientation and movement with its gyroscope, accelerometer, and magnetometer. The Sense HAT can show readings on its 8×8 LED matrix, but you first need to tell it, using Python code, which data to read and what to display. The LED matrix can also be programmed to show simple images and text. In this tutorial we’ll look at how to control the LED matrix. Don’t worry if you don’t have a Sense HAT: you can use the Sense HAT Emulator and try out the code in Raspbian.

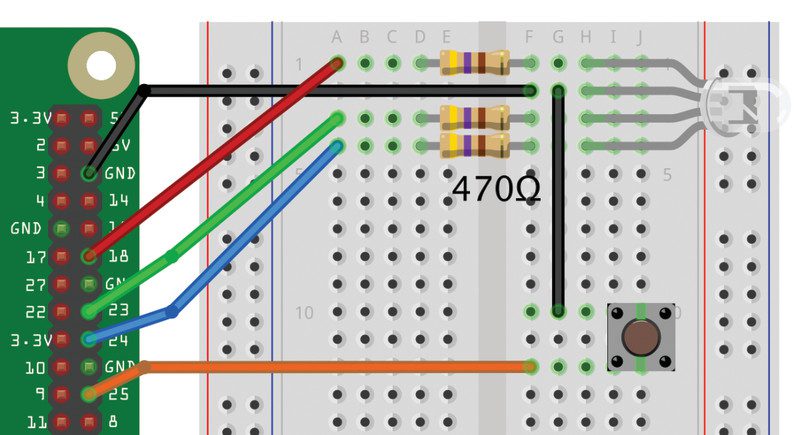

Attach the Sense HAT to Raspberry Pi

Shut down your Raspberry Pi (if it isn’t already off) before attaching the Sense HAT to it. Hold the Sense HAT above your Raspberry Pi and line up the yellow holes at each corner with the corresponding ones on Raspberry Pi; make sure the header on the Sense HAT lines up with the GPIO pins on Raspberry Pi. The white LED matrix should be at the opposite end of your Raspberry Pi from the USB ports. Gently push the Sense HAT onto Raspberry Pi’s GPIO pins and then screw the two boards together with the standoffs. Now power up your Raspberry Pi as usual.

Open Thonny IDE

We’re going to use a program called Thonny to instruct our Sense HAT and tell it what to do. When Raspbian loads, select Programming from the top-left raspberry menu, then choose Thonny Python IDE. Click the New icon to open a new, untitled window. First, our program needs to load the Sense HAT library and connect to the board. To do this, type these two lines of code into the Thonny window:

from sense_hat import SenseHat
sense = SenseHat()

Click the Save icon and name your file rainbow.py.

Say something

From now on, your program will use the Sense HAT whenever you type ‘sense’ followed by a ‘.’ and a command. Let’s get the Sense HAT to say hello to us. Add this line of code at the end of your program:

sense.show_message("Hello Rosie")

Of course, you can use your own name. Click Run and the letters should scroll across the LED display. If you get an error in the Shell at the bottom of the Thonny window, check your code carefully against the sense_hello.py listing. Every letter has to match.

sense_hello.py

from sense_hat import SenseHat

sense = SenseHat()
sense.show_message("Hello Rosie")

Choose your colours

We are going to get the Sense HAT to light up a rainbow and display a heart. We do this with the set_pixel() and set_pixels() functions.

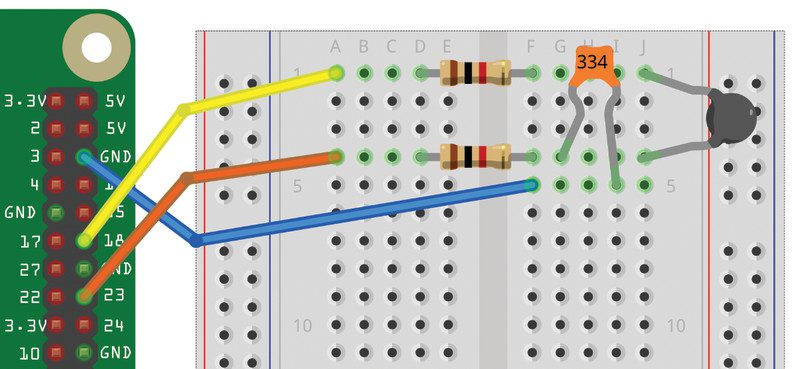

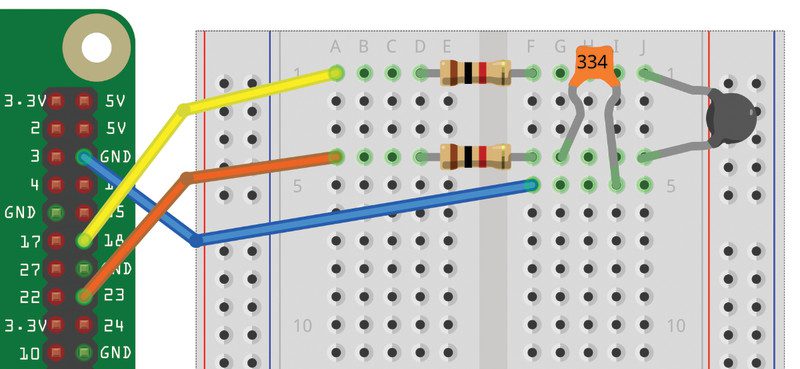

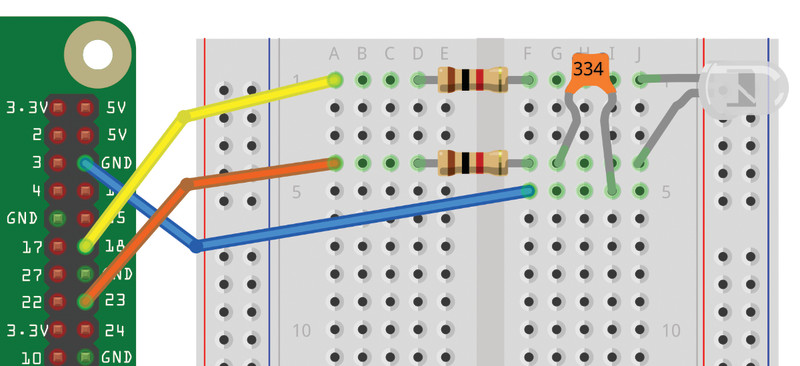

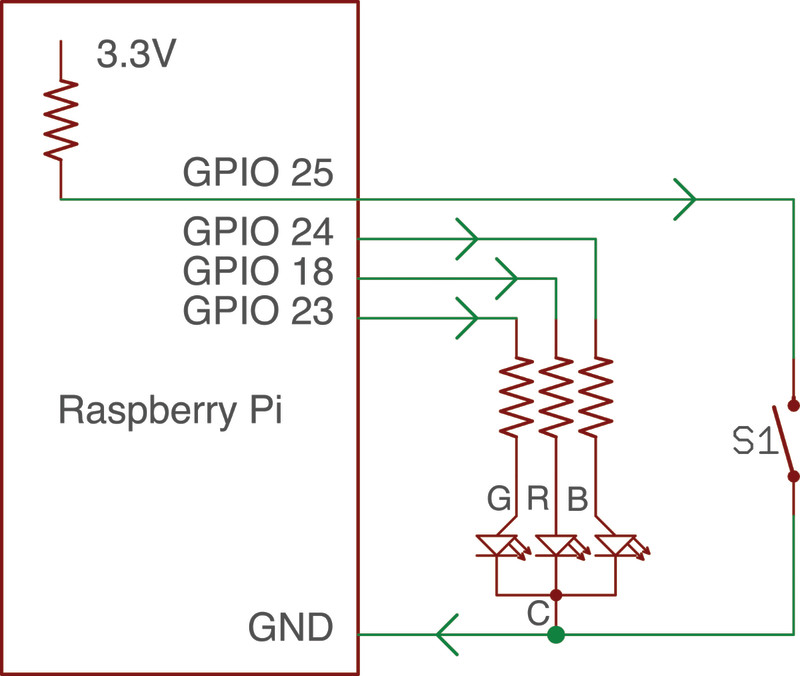

We need to tell set_pixel() which LED we want to light up, using x and y coordinates that match the axes of the Sense HAT’s 8×8 grid of LEDs (see Figure 1). We also need to tell set_pixel() the colour, using three numbers from 0 to 255 that give the RGB (red, green, blue) value for that LED.

We’re also going to start with sense.clear(), which turns off any LEDs that are currently lit. The 8×8 LED display is numbered 0 to 7 along both the x axis (left to right) and the y axis (top to bottom). Let’s make one LED on the right-hand edge red. Delete the show_message() line from your code and enter:

sense.clear()
sense.set_pixel(7, 4, 255, 0, 0)

The 7, 4 locates the pixel in the last column (x = 7) and the fifth row from the top (y = 4, because counting starts at 0). The RGB value for red is 255, 0, 0: that is 255 red, 0 green, and 0 blue.

Now let’s add a third line, to light up another pixel in blue:

sense.set_pixel(0, 2, 0, 0, 255)

Check your code against the sense_pixels.py listing. Click Run to see your two dots light up. To add more colours, repeat this step choosing different shades and specifying different locations on the LED matrix.

sense_pixels.py

from sense_hat import SenseHat

sense = SenseHat()
sense.clear()

sense.set_pixel(7, 4, 255, 0, 0)

sense.set_pixel(0, 2, 0, 0, 255)
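To add more colours, as suggested above, you can keep a list of dots and light them in a loop. The sketch below is hypothetical: check_dot() and draw_dots() are our own helpers, not part of the sense_hat library. It checks each dot against the rules described earlier (x and y from 0 to 7, three colour values from 0 to 255) before drawing anything. The drawing function is passed in as a parameter, so on a Raspberry Pi you would pass sense.set_pixel, which accepts an (R, G, B) tuple as its colour; here a stand-in that only records the calls lets the sketch run without a Sense HAT.

```python
def check_dot(x, y, colour):
    """Raise ValueError if (x, y) is off the 8x8 grid or colour is not three 0-255 values."""
    if not (0 <= x <= 7 and 0 <= y <= 7):
        raise ValueError(f"({x}, {y}) is off the grid: x and y must be 0-7")
    if len(colour) != 3 or not all(0 <= value <= 255 for value in colour):
        raise ValueError(f"{colour} is not a valid (red, green, blue) colour")


def draw_dots(set_pixel, dots):
    """Check every dot first, then call set_pixel(x, y, colour) for each one."""
    for x, y, colour in dots:
        check_dot(x, y, colour)
    for x, y, colour in dots:
        set_pixel(x, y, colour)


# The two dots from sense_pixels.py, plus a green one (an example choice).
dots = [
    (7, 4, (255, 0, 0)),   # red: last column, fifth row
    (0, 2, (0, 0, 255)),   # blue: first column, third row
    (3, 3, (0, 255, 0)),   # green: near the middle
]

# On a Raspberry Pi you would pass the real method: draw_dots(sense.set_pixel, dots)
# Here a plain function records the calls instead, so the sketch runs anywhere.
calls = []
draw_dots(lambda x, y, colour: calls.append((x, y, colour)), dots)
print(calls)
```

Checking every dot before drawing any of them means a typo such as x = 8 stops the program before the display is half-drawn.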

Colour variables

Typing out RGB values every time soon becomes tedious, so it is much easier to create a variable for each three-number colour value. You can then use the easy-to-remember variable name whenever you need that colour.

r = (255, 0, 0)      # red
o = (255, 128, 0)    # orange
y = (255, 255, 0)    # yellow
g = (0, 255, 0)      # green
c = (0, 255, 255)    # cyan
b = (0, 0, 255)      # blue
p = (255, 0, 255)    # purple
n = (255, 128, 128)  # pink
w = (255, 255, 255)  # white
k = (0, 0, 0)        # blank

Then you can just use each letter for a colour: ‘r’ for red, ‘p’ for purple, and so on. The # starts a comment: Python ignores everything after it on that line, so the words are only there to remind you which letter is which colour.

How to pick any colour

To find the values for any other colour, use the w3schools RGB Color Picker tool. Choose your colour and write down its three RGB numbers.
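If a colour picker gives you a hex code such as #ff8000 rather than three separate numbers, a small conversion turns it into an RGB tuple. hex_to_rgb() below is a hypothetical helper, not part of the Sense HAT library; it assumes a six-digit code, with or without the leading #.

```python
def hex_to_rgb(hex_code):
    """Turn a hex colour such as '#ff8000' into an (R, G, B) tuple of 0-255 values."""
    digits = hex_code.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hex digits, e.g. '#ff8000'")
    # Each pair of hex digits is one channel: red, then green, then blue.
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


o = hex_to_rgb("#ff8000")  # the same orange as o = (255, 128, 0)
print(o)                   # (255, 128, 0)
```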

Make a heart

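Now we’ve got our colours, let’s make a heart. The code in sense_heart.py draws a heart using ‘r’ for red LEDs and ‘k’ for blank ones. The heart list holds 64 colours, one for each LED, in order from left to right along each row, starting with the top row. This line of code then draws the heart: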

sense.set_pixels(heart)

sense_heart.py

from sense_hat import SenseHat

sense = SenseHat()

r = (255, 0, 0)      # red
o = (255, 128, 0)    # orange
y = (255, 255, 0)    # yellow
g = (0, 255, 0)      # green
c = (0, 255, 255)    # cyan
b = (0, 0, 255)      # blue
p = (255, 0, 255)    # purple
n = (255, 128, 128)  # pink
w = (255, 255, 255)  # white
k = (0, 0, 0)        # blank

heart = [
    k, r, r, k, k, r, r, k,
    r, r, r, r, r, r, r, r,
    r, r, r, r, r, r, r, r,
    r, r, r, r, r, r, r, r,
    r, r, r, r, r, r, r, r,
    k, r, r, r, r, r, r, k,
    k, k, r, r, r, r, k, k,
    k, k, k, r, r, k, k, k
]

sense.set_pixels(heart)

Check your code against the sense_heart.py listing. Click Run and you will see a heart.
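Typing 64 letters in a row makes it easy to lose your place. One alternative, sketched here under our own assumptions, is to draw the picture as eight strings of eight characters and convert it. picture_to_pixels() and pixel_index() are hypothetical helpers, not part of the sense_hat library. They assume the default rotation, where set_pixels() reads the list left to right along each row, starting with the top row, so LED (x, y) sits at position y * 8 + x.

```python
HEART_PICTURE = [
    ".RR..RR.",
    "RRRRRRRR",
    "RRRRRRRR",
    "RRRRRRRR",
    "RRRRRRRR",
    ".RRRRRR.",
    "..RRRR..",
    "...RR...",
]

PALETTE = {
    "R": (255, 0, 0),  # red
    ".": (0, 0, 0),    # blank
}


def picture_to_pixels(picture, palette):
    """Flatten an 8x8 text picture into the 64-item list that set_pixels() expects."""
    if len(picture) != 8 or any(len(row) != 8 for row in picture):
        raise ValueError("picture must be 8 rows of 8 characters")
    # Read left to right along each row, rows from top to bottom.
    return [palette[char] for row in picture for char in row]


def pixel_index(x, y):
    """Position of LED (x, y) in that 64-item list."""
    return y * 8 + x


heart = picture_to_pixels(HEART_PICTURE, PALETTE)
print(len(heart))                # 64
print(heart[pixel_index(0, 0)])  # top-left corner is blank: (0, 0, 0)
# On a Sense HAT: sense.set_pixels(heart)
```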

Draw a rainbow

Creating a rainbow is a little trickier. We could just draw out each row, as we did for the heart, but we want ours to build up row by row. For this we’ll need to light up each pixel in a row, then pause.

On the second line of your program (underneath from sense_hat import SenseHat), add this line of code:

from time import sleep

This gives us the sleep() function, which pauses our program. To add a three-second pause before new pixels are lit up, you would type:

sleep(3)

You can change the number of seconds by changing the number in brackets.
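The number doesn’t have to be whole: sleep() from Python’s standard time module also accepts decimals, which is handy when you want a faster animation. This short check times a half-second pause using time.monotonic(), another standard-library function; the exact figure printed will vary slightly from run to run.

```python
from time import monotonic, sleep

start = monotonic()
sleep(0.5)  # half a second; sleep() accepts decimals as well as whole numbers
elapsed = monotonic() - start
print(f"Paused for about {elapsed:.1f} seconds")
```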

Create blocks of colour

To turn an entire row on the LED matrix red, you could type:

for x in range(8):
    sense.set_pixel(x, 0, r)

Press TAB to indent the second line. This loops over each LED in the first row (x, 0), replacing x with the numbers 0–7.

We could repeat this to set the second row to yellow, with this command:

for x in range(8):
    sense.set_pixel(x, 1, y)

…and repeat this step until you have eight rows of different colours. But this is a bit clunky.

Make a rainbow on command

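We think it’s neater to put the colours in a list called rainbow (rainbow = [r, o, y, g, c, b, p, n], as in the sense_rainbow.py listing) and use two nested for loops: the outer loop steps through the rows (y) and the inner loop through the columns (x). Enter this code: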

for y in range(8):
    colour = rainbow[y]
    for x in range(8):
        sense.set_pixel(x, y, colour)
    sleep(1)

Take a look at the sense_rainbow.py listing to see how the code should look. Click Run and a rainbow will build up line by line.

sense_rainbow.py

from sense_hat import SenseHat

from time import sleep

sense = SenseHat()

r = (255, 0, 0)      # red
o = (255, 128, 0)    # orange
y = (255, 255, 0)    # yellow
g = (0, 255, 0)      # green
c = (0, 255, 255)    # cyan
b = (0, 0, 255)      # blue
p = (255, 0, 255)    # purple
n = (255, 128, 128)  # pink
w = (255, 255, 255)  # white
k = (0, 0, 0)        # blank

rainbow = [r, o, y, g, c, b, p, n]

sense.clear()

for y in range(8):
    colour = rainbow[y]
    for x in range(8):
        sense.set_pixel(x, y, colour)
    sleep(1)
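The nested loops light one row at a time so you can watch the rainbow build up. If you only want the finished rainbow, a list comprehension can produce all 64 colours in the order set_pixels() reads them (left to right, top row first) and draw them in a single call. This is an alternative sketch, not the approach used in the listing; rainbow_pixels is our own name.

```python
r = (255, 0, 0)      # red
o = (255, 128, 0)    # orange
y = (255, 255, 0)    # yellow
g = (0, 255, 0)      # green
c = (0, 255, 255)    # cyan
b = (0, 0, 255)      # blue
p = (255, 0, 255)    # purple
n = (255, 128, 128)  # pink

rainbow = [r, o, y, g, c, b, p, n]

# Repeat each row's colour eight times, top row first: the same
# left-to-right, top-to-bottom order that set_pixels() reads.
rainbow_pixels = [colour for colour in rainbow for _ in range(8)]

print(len(rainbow_pixels))  # 64
# On a Sense HAT this draws the whole rainbow at once, with no row-by-row build-up:
# sense.set_pixels(rainbow_pixels)
```

One thing to watch in sense_rainbow.py: the loop counter y reuses the name of the yellow colour variable, so after the loop has run, y holds a number rather than yellow. The listing still works because the rainbow list is built before the loop starts, but pick a different loop name (such as row) if you want to use y as yellow later on.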

Write a message

Writing messages in Python to show on your Sense HAT is really straightforward. You just need to decide on a few words to say and type them into a sense.show_message() command, as we did right at the start.

You can easily specify the text and background colours, too. Choose a background colour that contrasts with the text colour. To use your colours in a message, type sense.show_message("text here", then add text_colour = followed by the colour you chose for the text, a comma, and back_colour = followed by the colour you chose for the background, then close the bracket.

Since we have already defined several colours, we can refer to any of them by name in our code. If your program doesn’t define them yet, copy the colour variables from the sense_rainbow.py listing into your code.

For instance, type:

sense.show_message("THANK YOU NHS!", text_colour = w, back_colour = b)

Click Run and your rainbow, followed by your message, should appear on your Sense HAT’s display.
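Picking a contrasting background is partly a matter of taste, but a rough rule can help. The hypothetical contrasting_text_colour() below (our own helper, not part of the sense_hat library) uses a common weighted-brightness formula, 0.299 R + 0.587 G + 0.114 B, to choose white or black text for a given background. On the LED matrix, black simply means the LEDs are off, so black text shows up as unlit letters on a lit background.

```python
def contrasting_text_colour(back_colour):
    """Return white or black, whichever should stand out more against back_colour."""
    red, green, blue = back_colour
    # Weighted brightness: the eye is most sensitive to green and least to blue.
    brightness = 0.299 * red + 0.587 * green + 0.114 * blue
    return (0, 0, 0) if brightness > 127.5 else (255, 255, 255)


b = (0, 0, 255)    # blue
y = (255, 255, 0)  # yellow
print(contrasting_text_colour(b))  # (255, 255, 255): white text on blue
print(contrasting_text_colour(y))  # (0, 0, 0): black text on yellow
```

On a Sense HAT you might then write sense.show_message("THANK YOU NHS!", text_colour = contrasting_text_colour(b), back_colour = b).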

Bring it together

We’re now going to take the three things we have created – the heart, the rainbow, and the text message – and bring them together in an infinite loop. This will run forever (or at least until we click the Stop button).

while True:

All the code indented underneath the while True: line will replay as a loop until you press Stop. Inside it we will put our code for the heart, rainbow, and text message.

By now, you will be able to see the potential of making patterns to display on your Sense HAT. You can experiment by making the colours chase each other around the LED matrix and by altering how long each colour appears.

Enter all the code from rainbow.py and press Run to see the final message.

rainbow.py

from sense_hat import SenseHat

from time import sleep

sense = SenseHat()

r = (255, 0, 0)      # red
o = (255, 128, 0)    # orange
y = (255, 255, 0)    # yellow
g = (0, 255, 0)      # green
c = (0, 255, 255)    # cyan
b = (0, 0, 255)      # blue
p = (255, 0, 255)    # purple
n = (255, 128, 128)  # pink
w = (255, 255, 255)  # white
k = (0, 0, 0)        # blank

rainbow = [r, o, y, g, c, b, p, n]

heart = [
    k, r, r, k, k, r, r, k,
    r, r, r, r, r, r, r, r,
    r, r, r, r, r, r, r, r,
    r, r, r, r, r, r, r, r,
    r, r, r, r, r, r, r, r,
    k, r, r, r, r, r, r, k,
    k, k, r, r, r, r, k, k,
    k, k, k, r, r, k, k, k
]

while True:
    sense.clear()
    sense.set_pixels(heart)
    sleep(3)
    for y in range(8):
        colour = rainbow[y]
        for x in range(8):
            sense.set_pixel(x, y, colour)
        sleep(1)
    sleep(3)
    sense.show_message("THANK YOU NHS!", text_colour = w, back_colour = b)
    sleep(3)
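To try the experiment suggested earlier, making the colours chase each other, one approach is to shift the rainbow down one row at a time and wrap the bottom colour back round to the top. rainbow_frame() is a hypothetical helper of our own that builds each 64-pixel frame; the commented lines show how you might draw the frames with set_pixels() inside the while True: loop.

```python
r = (255, 0, 0)      # red
o = (255, 128, 0)    # orange
y = (255, 255, 0)    # yellow
g = (0, 255, 0)      # green
c = (0, 255, 255)    # cyan
b = (0, 0, 255)      # blue
p = (255, 0, 255)    # purple
n = (255, 128, 128)  # pink

rainbow = [r, o, y, g, c, b, p, n]


def rainbow_frame(step):
    """Return a 64-pixel frame with the rainbow rows shifted down by `step`, wrapping round."""
    # Row 0 takes the colour from `step` rows above it, so colours move down as step grows.
    rows = [rainbow[(row - step) % len(rainbow)] for row in range(8)]
    return [colour for colour in rows for _ in range(8)]


# Inside the while True: loop on a Sense HAT, for example:
#     for step in range(8):
#         sense.set_pixels(rainbow_frame(step))
#         sleep(0.2)
frame = rainbow_frame(1)
print(frame[0], frame[8])  # pink has moved to the top row, red down to the second
```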

Sense HAT Emulator

You can try out this tutorial using the Sense HAT Emulator in Raspbian. To install it, search for ‘sense’ in the Recommended Software tool. Note: If using this Emulator, you’ll need to replace from sense_hat with from sense_emu at the top of your Python code (but not if using the online Sense HAT emulator).
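If you want one file to work with either library without editing the import line, one option is to try each module in turn with Python’s standard importlib module. load_sense_hat_class() is a hypothetical helper, and it assumes both sense_hat and sense_emu provide a SenseHat class, as the note above implies. The sense_hat library may already be installed on Raspbian even without the board attached, so if you are using the emulator, put "sense_emu" first in the list.

```python
import importlib


def load_sense_hat_class(module_names=("sense_hat", "sense_emu")):
    """Return the SenseHat class from the first module that imports, or None if none do."""
    for module_name in module_names:
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            continue  # not installed here; try the next one
        return module.SenseHat
    return None


SenseHat = load_sense_hat_class()
if SenseHat is None:
    print("Neither sense_hat nor sense_emu is installed")
# Otherwise carry on exactly as before:
# sense = SenseHat()
```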