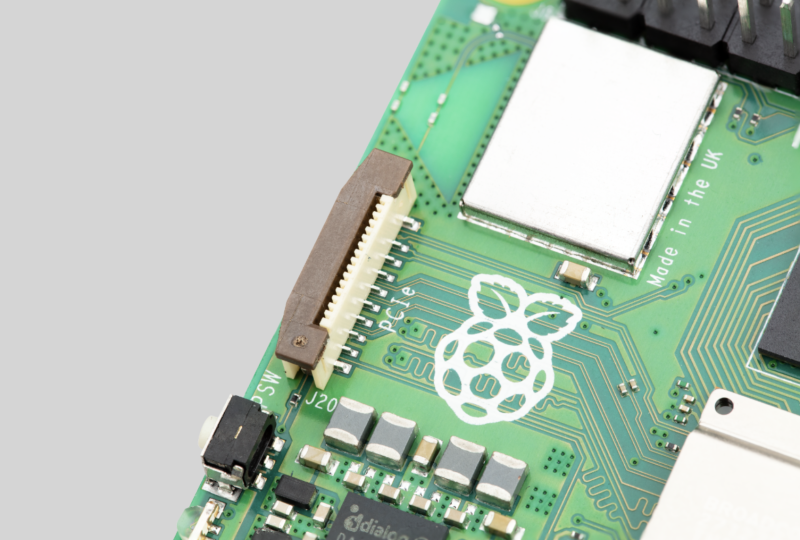

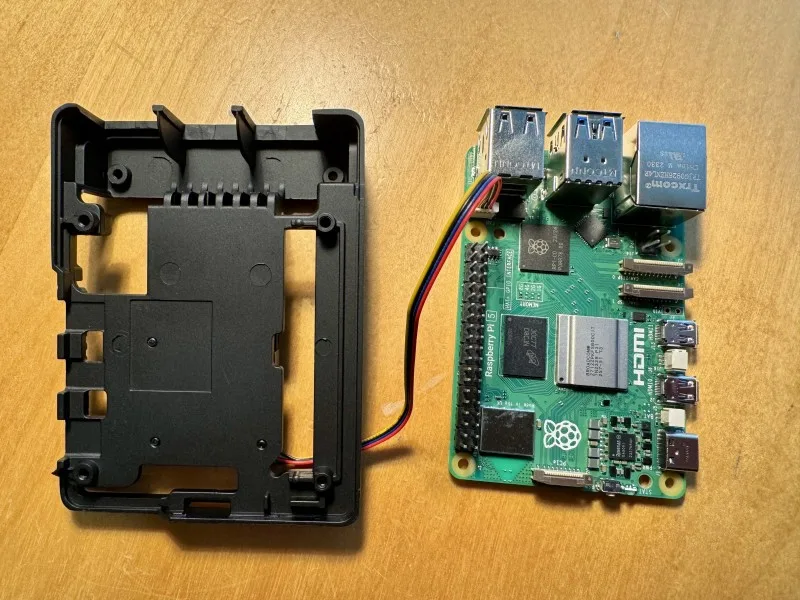

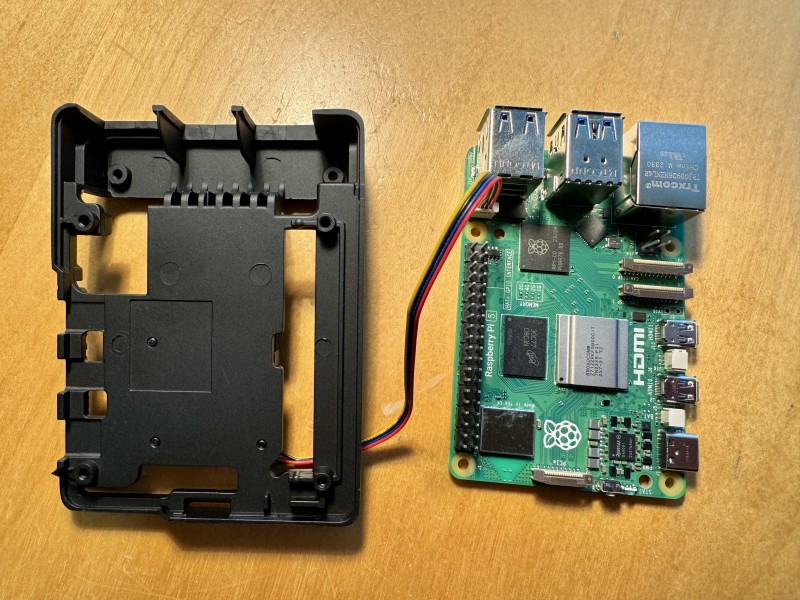

Once people had recovered from the shock of seeing both a power button and a real-time clock on a Raspberry Pi, one of the most commented-on features of the new Raspberry Pi 5 was the small, vertical, 16-way FFC (flexible flat cable) connector on the left-hand side of the board, which exposes a single-lane PCI Express interface.

PCIe of cake

Peripheral Component Interconnect Express (PCI Express or PCIe) is, as the name suggests, a board-level interconnect that allows high-speed data transfer between a processor chip (in our case BCM2712) and external peripherals such as NVMe SSDs, Ethernet cards, or more exotic things such as AI/ML accelerators.

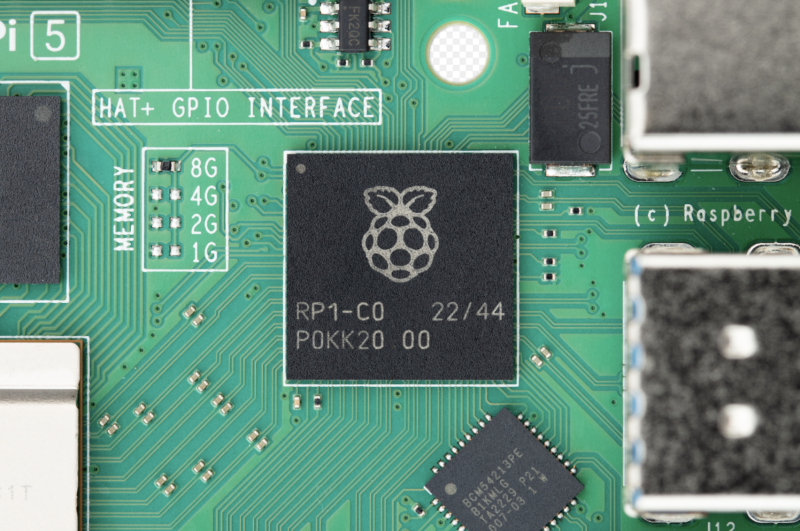

PCIe works by serialising data and sending it one bit at a time over each lane, which consists of one differential pair in each direction. Higher-capacity PCIe interfaces have more lanes (×2, ×4, ×8, ×16); on Raspberry Pi 5, BCM2712 is connected to our RP1 I/O controller via a ×4 interface, while the external connector exposes a single lane. Each lane runs at 5Gbit/s in each direction for PCIe 2.0 (the fastest mode that we officially support on Raspberry Pi 5); after 8b/10b coding overhead, this translates into a capacity of 4Gbit/s. Even taking into account other protocol overheads, you’re likely to see ~450MB/s to and from a good NVMe SSD. Pretty fast!
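The bandwidth figures above follow from simple arithmetic. Here is a minimal sketch that models only PCIe 2.0's 8b/10b line coding (8 data bits carried in every 10 bits on the wire). The helper name `pcie_payload_gbps` is hypothetical. Packet-level protocol overheads such as headers and flow control are deliberately left out; those are what bring real-world throughput down to roughly 450MB/s.

```python
# Worked arithmetic for PCIe link bandwidth (per direction).
# Assumption: only line-coding overhead is modelled; packet/protocol
# overheads such as headers and flow control are ignored.


def pcie_payload_gbps(lanes: int, gt_per_s: float,
                      data_bits: int, coded_bits: int) -> float:
    """Usable bit rate in Gbit/s after line coding (hypothetical helper)."""
    return lanes * gt_per_s * data_bits / coded_bits


# PCIe 2.0: 5 GT/s per lane, 8b/10b coding (8 data bits per 10 line bits).
x1_gbps = pcie_payload_gbps(lanes=1, gt_per_s=5.0, data_bits=8, coded_bits=10)
x4_gbps = pcie_payload_gbps(lanes=4, gt_per_s=5.0, data_bits=8, coded_bits=10)

# Convert the single-lane figure from Gbit/s to MB/s (1 byte = 8 bits).
x1_mbytes_per_s = x1_gbps * 1000 / 8

print(f"x1 link (external connector): {x1_gbps} Gbit/s = {x1_mbytes_per_s} MB/s")
print(f"x4 link (BCM2712 to RP1):     {x4_gbps} Gbit/s")
```

The resulting 500MB/s is the theoretical ceiling for a single PCIe 2.0 lane in one direction; the ~450MB/s quoted above is what remains once the other protocol overheads are paid.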

Alongside the data and clock channels, the PCIe specification requires some sideband signals like reset, clock request (which does double duty as a power state signal), and wakeup. Our 16-way connector provides all these signals. We also have two pins that allow us to control board power, and to ensure that an appropriately designed PCIe peripheral is automatically detected by the Raspberry Pi firmware.

Not an M.2

Why didn’t we add an M.2 connector to Raspberry Pi 5? The M.2 connector is large and relatively expensive, and it would require us to provide a 3.3V, 3A power supply. Together, these factors rule out offering it in the standard Raspberry Pi form factor.

Using a small, low-cost FFC connector allowed us to provide a PCIe interface without growing the board, or imposing the cost of an M.2 connector and its supporting power-supply circuitry on every Raspberry Pi user.

Specification the first

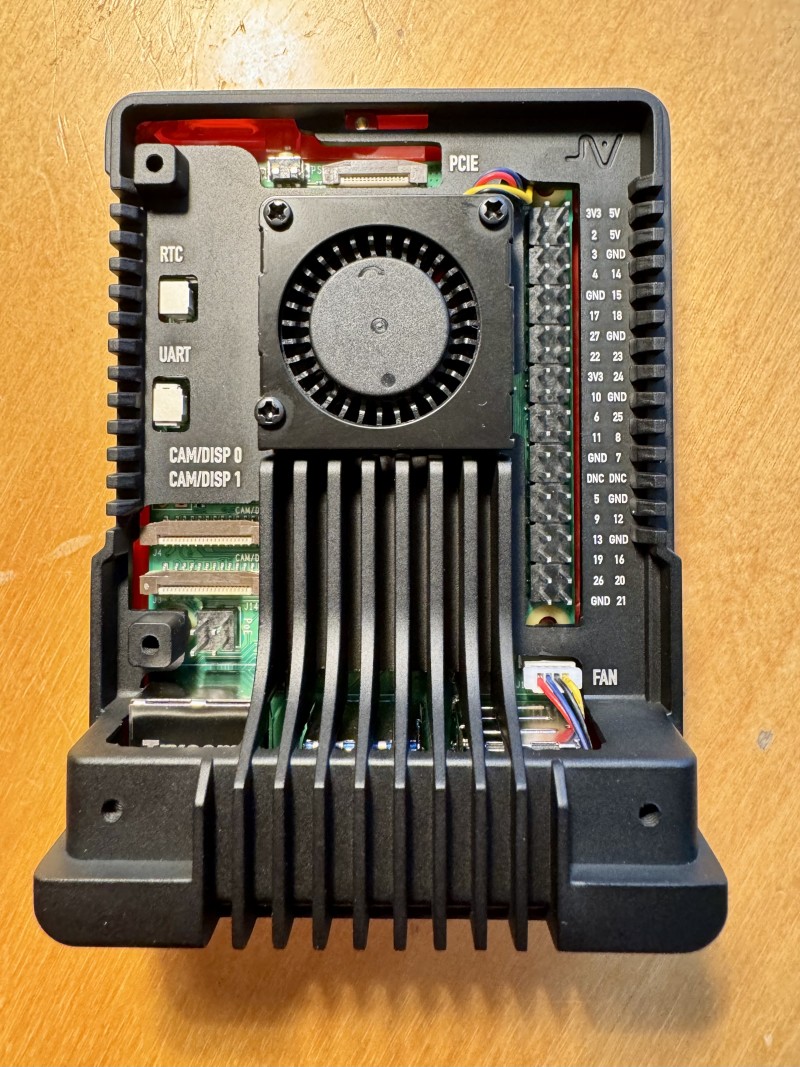

One thing we did not have ready at the time of the Raspberry Pi 5 launch was a specification for how to build peripherals that attach to the 16-way PCIe connector. The interaction of PCIe peripherals with Raspberry Pi power states and firmware required detailed consideration, and we wanted to test our own prototype product extensively to make sure everything was working exactly as expected.

Today, we’re releasing the first revision of that specification: “Raspberry Pi Connector for PCIe: A 16-way PCIe FFC Connector Specification”. Our own M.2 M Key HAT+ is in the final stage of prototyping, and will be launched early next year.

Specification the second

Those of you reading closely will have noticed that we’re calling our M.2 HAT a “HAT+”. If one new specification wasn’t enough for you, today we’re also releasing a preliminary version of the new Raspberry Pi HAT+ Specification.

The original HAT specification was written back in 2014, so it is long overdue for an update: a lot has changed since then. The new specification simplifies certain things, including the required EEPROM contents, pulls everything into one document in the new Raspberry Pi documentation style, and adds a few new features.

There’s still work to be done on this standard, and our EEPROM utilities haven’t yet been updated to generate the new style of EEPROM. So this release is very much for people who want to get a feel for how the HAT standard is changing.

We really wanted to get the HAT+ standard right, as it’s likely to be around for as long as the old HAT standard. One of the reasons for the delay in getting the PCIe connector standard published was our sense that PCIe boards that go on top, rather than boards that go beneath, should probably be HAT+ boards. Ours is going to be!

Standards for all!

If you want to discuss these specifications with the community, head over to the Raspberry Pi forums, where you’ll find a dedicated area to talk about HATs, HAT+ and other peripherals.

Watch this space for the new M.2 HAT+, and a final version of the HAT+ standard, which we’ll release alongside it in 2024.
