Tag: Edge Impulse

-

Have you heard? Nicla Voice is out at CES 2023!

Reading Time: 2 minutes

As announced at CES 2023 in Las Vegas, our tiny-form-factor family keeps growing: the 22.86 x 22.86 mm Nicla range now includes Nicla Voice, which makes it easy to implement always-on speech recognition on the edge. How? Let’s break it down. 1. The impressive sensor package. Nicla Voice comes with a…

-

Turning a K-Way jacket into an intelligent hike tracker with the Nicla Sense ME

Reading Time: 2 minutes

Going for a hike outdoors is a great way to relieve stress, do some exercise, and get closer to nature, but tracking your hikes can be a challenge. Our recent collaboration with K-Way led Zalmotek to develop a small wearable device that can be paired with a jacket to track walking speed, steps taken,…

-

Arduino and iconic outdoor brand K-Way, with the support of Edge Impulse, launch a call for developers

Reading Time: 3 minutes

Following the announcement of our K-Way collaboration during Maker Faire Rome, the competition for developers based on the Nicla Sense ME, with the support of Edge Impulse, officially opens today. Imagine what could happen if you could get your hands on the most iconic rain jacket, paired with a Nicla Sense, and…

-

Arduino and K-Way, with the support of Edge Impulse, team up for a new idea of smart clothing

Reading Time: 2 minutes

Imagine the possibilities generated by integrating advanced AI and powerful sensors into one of the most iconic outdoor jackets, with a heritage of more than 50 years. You could start sensing and interacting with your surroundings like never before. This is what we created here at Arduino: enclosing the Nicla…

-

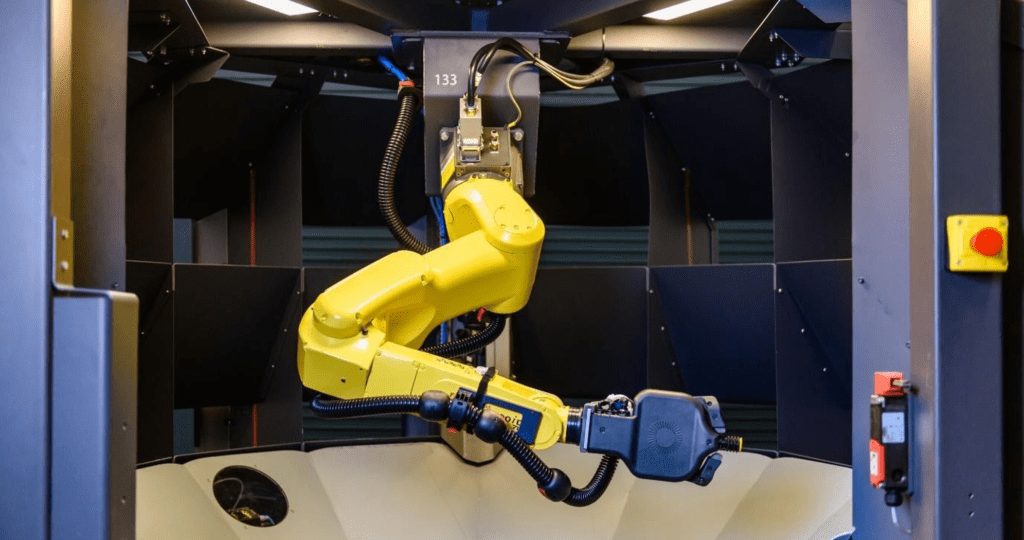

From embedded sensors to advanced intelligence: Driving Industry 4.0 innovation with TinyML

Reading Time: 5 minutes

Wevolver’s previous article about the Arduino Pro ecosystem outlined how embedded sensors play a key role in transforming machines and automation devices into Cyber Physical Production Systems (CPPS). Using CPPS, manufacturers and automation solution providers capture data from the shop floor and use it for optimizations in areas like production schedules,…

-

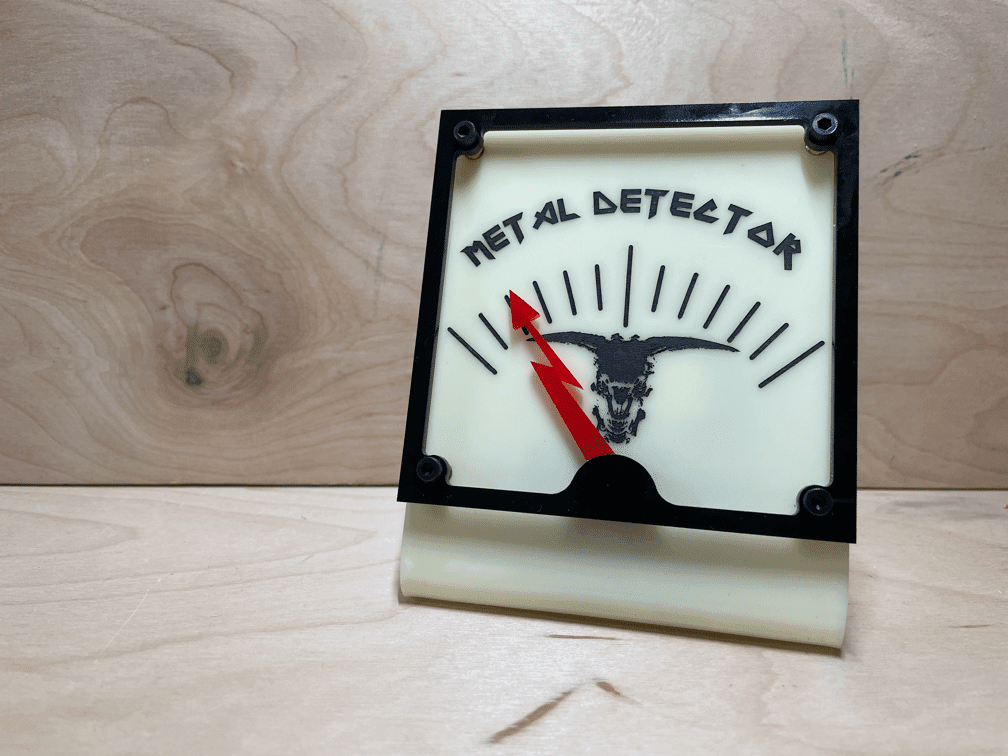

Instead of sensing the presence of metal, this tinyML device detects rock (music)

Reading Time: 2 minutes

Arduino Team, January 29th, 2022

After learning about the basics of embedded ML, industrial designer and educator Phil Caridi had the idea to build a metal detector. But rather than using a coil of wire to sense eddy currents, his device would use a microphone to determine if metal music is playing…

-

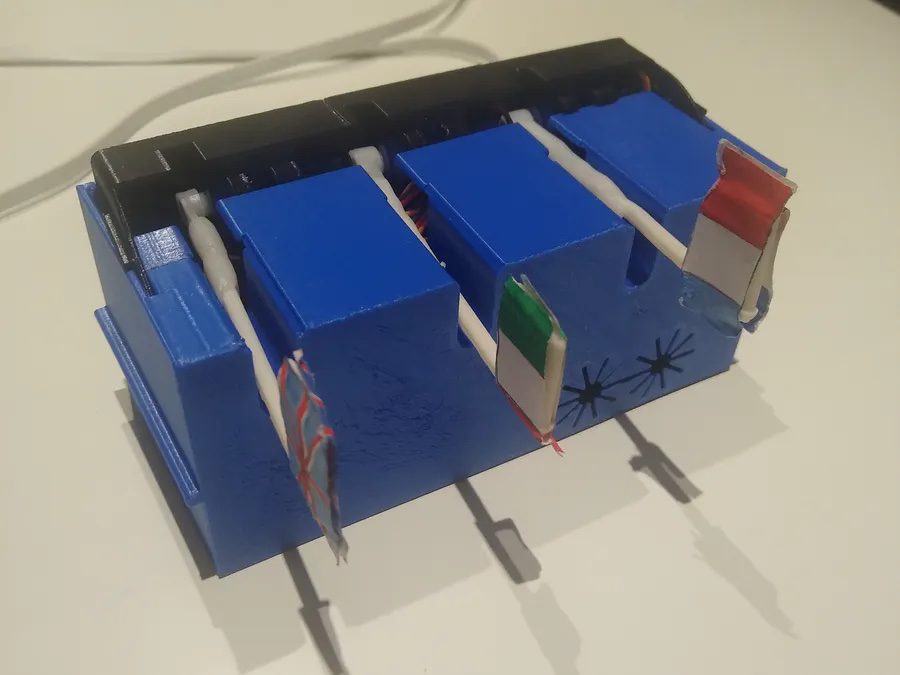

This Arduino device can detect which language is being spoken using tinyML

Reading Time: 2 minutes

Arduino Team, December 8th, 2021

Although smartphone users have been able to quickly translate spoken words into nearly any modern language for years now, this feat has been quite tough to accomplish on small, memory-constrained microcontrollers. In response to this challenge, Hackster.io user Enzo decided to create a proof-of-concept project that demonstrated…

-

This pocket-sized device uses tinyML to analyze a COVID-19 patient’s health conditions

Reading Time: 2 minutes

Arduino Team, June 21st, 2021

In light of the ongoing COVID-19 pandemic, being able to quickly determine a person’s current health status is very important. This is why Manivannan S wanted to build his very own COVID Patient Health Assessment Device that could take…

-

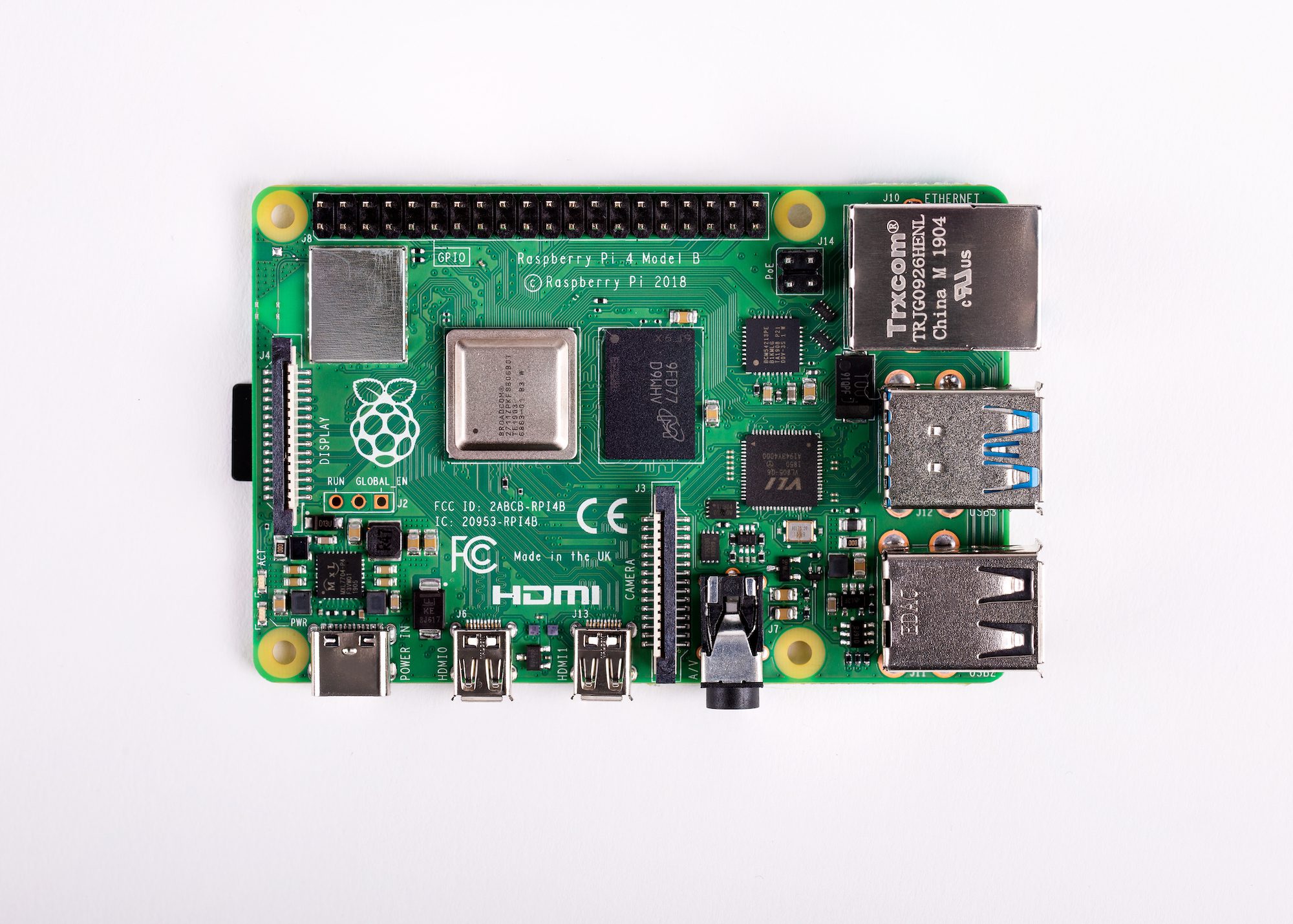

Edge Impulse and TinyML on Raspberry Pi

Reading Time: 8 minutes

Raspberry Pi is probably the most affordable way to get started with embedded machine learning. The inferencing performance we see with Raspberry Pi 4 is comparable to or better than some of the new accelerator hardware, but your overall hardware cost is just that much lower.

Raspberry Pi 4 Model B

However, training…

-

Edge Impulse makes TinyML available to millions of Arduino developers

Reading Time: 4 minutes

This post was written by Jan Jongboom and Dominic Pajak. Running machine learning (ML) on microcontrollers is one of the most exciting developments of recent years, allowing small battery-powered devices to detect complex motions, recognize sounds, or find anomalies in sensor data. To make building and deploying these models accessible to…