Tag: AI literacy

-

Teaching about AI explainability

Reading Time: 6 minutes

In the rapidly evolving digital landscape, students are increasingly interacting with AI-powered applications when listening to music, writing assignments, and shopping online. As educators, it’s our responsibility to equip them with the skills to critically evaluate these technologies. A key aspect of this is understanding ‘explainability’ in AI and machine learning (ML)…

-

AI isn’t just robots: How to talk to young children about AI

Reading Time: 5 minutes

Young children have a unique perspective on the world they live in. They often seem oblivious to what’s going on around them, but then they will ask a question that makes you realise they did get some insight from a news story or a conversation they overheard. This happened to me with…

-

Experience AI: Making AI relevant and accessible

Reading Time: 7 minutes

Google DeepMind’s Aimee Welch discusses our partnership on the Experience AI learning programme and why equal access to AI education is key. This article also appears in issue 22 of Hello World on teaching and AI. From AI chatbots to self-driving cars, artificial intelligence (AI) is here and rapidly transforming our world.…

-

AI literacy for teachers and students all over the world

Reading Time: 5 minutes

I am delighted to announce that the Raspberry Pi Foundation and Google DeepMind are building a global network of educational organisations to bring AI literacy to teachers and students all over the world, starting with Canada, Kenya, and Romania. Learners around the world will gain AI literacy skills through Experience AI. Experience…

-

The Experience AI Challenge: Make your own AI project

Reading Time: 4 minutes

We are pleased to announce a new AI-themed challenge for young people: the Experience AI Challenge invites and supports young people aged up to 18 to design and make their own AI applications. This is their chance to have a taste of getting creative with the powerful technology of machine learning. And…

-

Hello World #22 out now: Teaching and AI

Reading Time: 2 minutes

Recent developments in artificial intelligence are changing how the world sees computing and challenging computing educators to rethink their approach to teaching. In the brand-new issue of Hello World, out today for free, we tackle some big questions about AI and computing education. We also get practical with resources for your classroom.…

-

What does AI mean for computing education?

Reading Time: 9 minutes

It’s been less than a year since ChatGPT catapulted generative artificial intelligence (AI) into mainstream public consciousness, reigniting the debate about the role that these powerful new technologies will play in all of our futures. ‘Will AI save or destroy humanity?’ might seem like an extreme title for a podcast, particularly if…

-

Experience AI: Teach about AI, chatbots, and biology

Reading Time: 4 minutes

New artificial intelligence (AI) tools have had a profound impact on many areas of our lives in the past twelve months, including on education. Teachers and schools have been exploring how AI tools can transform their work, and how they can teach their learners about this rapidly developing technology. As enabling all…

-

How we’re learning to explain AI terms for young people and educators

Reading Time: 6 minutes

What do we talk about when we talk about artificial intelligence (AI)? It’s becoming a cliché to point out that, because the term “AI” is used to describe so many different things nowadays, it’s difficult to know straight away what anyone means when they say “AI”. However, it’s true that without a…

-

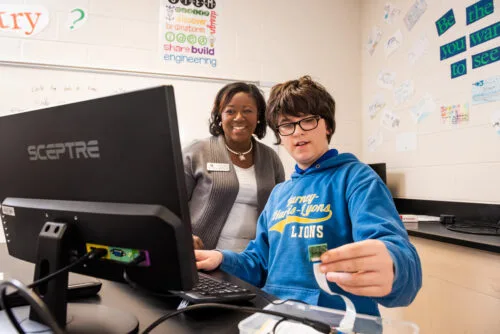

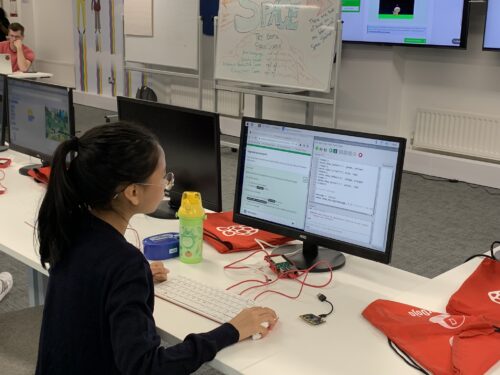

Experience AI: The excitement of AI in your classroom

Reading Time: 4 minutes

We are delighted to announce that we’ve launched Experience AI, our new learning programme to help educators to teach, inspire, and engage young people in the subject of artificial intelligence (AI) and machine learning (ML). Experience AI is a new educational programme that offers cutting-edge secondary school resources on AI and machine…

-

AI education resources: What do we teach young people?

Reading Time: 6 minutes

People have many different reasons to think that children and teenagers need to learn about artificial intelligence (AI) technologies. Whether it’s that AI impacts young people’s lives today, or that understanding these technologies may open up careers in their future, there is broad agreement that school-level education about AI is important.…

-

Experience AI with the Raspberry Pi Foundation and DeepMind

Reading Time: 3 minutes

I am delighted to announce a new collaboration between the Raspberry Pi Foundation and a leading AI company, DeepMind, to inspire the next generation of AI leaders. The Raspberry Pi Foundation’s mission is to enable young people to realise their full potential through the power of computing and digital technologies. Our vision…

-

AI literacy research: Children and families working together around smart devices

Reading Time: 6 minutes

Between September 2021 and March 2022, we’ve been partnering with The Alan Turing Institute to host a series of free research seminars about how to teach young people about AI and data science. In the final seminar of the series, we were excited to hear from Stefania Druga from the University of Washington,…