Kevin’s idea was to transcribe everything he said in real time and display it on a screen that could be worn as a badge. Since he works at Deepgram – which offers a deep learning speech recognition system – he already knew what software he was going to use. One of Kevin’s friends, Bevis, then suggested he pick up a Raspberry Pi Zero computer for the project. “I wanted to create a wearable and I needed the smallest and lightest option,” he explains.

Listening intently

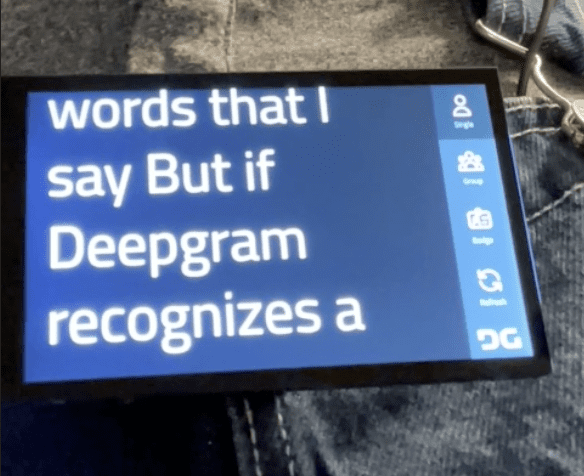

In essence, the project is a full-screen browser application. It runs on a Raspberry Pi Zero connected to a high-resolution HyperPixel 4.0 display, and the whole setup is powered by a battery pack. A generic USB lapel microphone is also plugged in; it is intended to sit on the wearer’s shoulder so that it picks up their speech.

From there, it was a case of incorporating the Deepgram API into a program. “At its core, the whole Deepgram feature is just twelve lines of code. It asks for the microphone, accesses raw data, opens a persistent WebSocket connection with Deepgram, and sends mic data every 250 milliseconds,” Kevin says. “It then logs the results when they come back. Every spoken word is transcribed onto the screen.”
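The steps Kevin lists can be sketched in TypeScript roughly as follows. This is not his twelve lines, only a minimal illustration under stated assumptions: the endpoint URL, the `token` subprotocol used to pass the API key, and the `channel.alternatives[0].transcript` response shape are assumptions about Deepgram’s live-streaming API, and the names `startTranscribing` and `extractTranscript` are invented for this example.

```typescript
// Assumed endpoint for Deepgram's live transcription API.
const DEEPGRAM_LISTEN_URL = "wss://api.deepgram.com/v1/listen";

// How often the recorder hands over a chunk of audio, as described in the article.
const CHUNK_INTERVAL_MS = 250;

// The part of a result message this sketch reads (assumed shape).
interface DeepgramResult {
  channel?: { alternatives?: { transcript?: string }[] };
}

// Pull the transcript text out of one raw WebSocket message, or "" if there is none.
function extractTranscript(rawMessage: string): string {
  const result = JSON.parse(rawMessage) as DeepgramResult;
  return result.channel?.alternatives?.[0]?.transcript ?? "";
}

// Browser-only: ask for the microphone, open a persistent socket,
// send audio every 250 ms, and report each transcript that comes back.
async function startTranscribing(
  apiKey: string,
  onTranscript: (text: string) => void
): Promise<void> {
  const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
  const recorder = new MediaRecorder(stream);

  // Passing the key as a subprotocol is an assumption about Deepgram's browser auth.
  const socket = new WebSocket(DEEPGRAM_LISTEN_URL, ["token", apiKey]);

  socket.onopen = () => {
    recorder.addEventListener("dataavailable", (event) => {
      // Only send real audio, and only while the connection is open.
      if (event.data.size > 0 && socket.readyState === WebSocket.OPEN) {
        socket.send(event.data);
      }
    });
    recorder.start(CHUNK_INTERVAL_MS);
  };

  socket.onmessage = (message) => {
    const transcript = extractTranscript(String(message.data));
    if (transcript) onTranscript(transcript);
  };
}
```

In a browser, calling `startTranscribing(key, (text) => console.log(text))` would log each transcript as it arrives, which matches the “logs the results” step in Kevin’s description.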

As a coder, Kevin had few problems. “The software development side of the project was perfectly within my wheelhouse,” he says. “But as the project grew in complexity, so did the software, from plain HTML and JavaScript to Vue.js, and finally implementing a back-end with Node.js to handle translation.”

Look who’s talking

The original intention was to transcribe only his own voice, but the project soon evolved. Since Deepgram detects everyone who is speaking (and can transcribe many languages), Kevin at first ensured that only the first voice would be displayed on the screen – this meant he could talk and know that only his words were being transcribed.

“But because the data was there, it was extremely simple to include a mode that would also show all of the user voices,” Kevin says. The spoken words of each person in range of the microphone are shown on the screen in a different colour. “A feature called ‘diarisation’ detects different speakers and returns data about who is speaking.”
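The two display modes can be illustrated with a short TypeScript sketch. It assumes that, with diarisation enabled, each transcribed word arrives with a numeric `speaker` label starting at 0; that field name, the colour palette, and the function names are assumptions made for this example rather than details from Kevin’s code.

```typescript
// One transcribed word with its speaker label (assumed shape of diarised output).
interface SpokenWord {
  word: string;
  speaker: number;
}

// A run of consecutive words from one speaker, ready to draw in a single colour.
interface SpeakerSegment {
  speaker: number;
  text: string;
  colour: string;
}

// Hypothetical palette; speakers beyond its length wrap around.
const SPEAKER_COLOURS = ["#ffffff", "#ffd166", "#06d6a0", "#ef476f"];

function colourForSpeaker(speaker: number): string {
  return SPEAKER_COLOURS[speaker % SPEAKER_COLOURS.length];
}

// Multi-voice mode: merge consecutive words by the same speaker into coloured segments.
function groupBySpeaker(words: SpokenWord[]): SpeakerSegment[] {
  const segments: SpeakerSegment[] = [];
  for (const { word, speaker } of words) {
    const last = segments[segments.length - 1];
    if (last && last.speaker === speaker) {
      last.text += " " + word;
    } else {
      segments.push({ speaker, text: word, colour: colourForSpeaker(speaker) });
    }
  }
  return segments;
}

// Single-voice mode: keep only one speaker's words (by default the first label, 0).
function onlySpeaker(words: SpokenWord[], speaker = 0): SpokenWord[] {
  return words.filter((w) => w.speaker === speaker);
}
```

Because both modes read the same word list, switching between them is only a matter of which function feeds the display, which fits Kevin’s remark that the extra mode was simple to add.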

After Kevin showcased the project on Twitter, one of the most common requests was for a translation option so that words could appear in a different language. “Deepgram doesn’t do this,” Kevin says. “So I found a lovely, simple translation API called iTranslate and, with very minimal extra work, I could take Deepgram transcripts and pass them on for translation.”
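The hand-off from transcript to translation might look something like the TypeScript sketch below. It deliberately does not reproduce the iTranslate API: the translator is passed in as a function, and `TranslateFn`, `translateTranscript`, and `fakeTranslator` are hypothetical names. Falling back to the original words when translation fails is a design choice of this example, not something the article describes.

```typescript
// A translator takes text and a target language code and resolves to translated text.
// In the real project this would wrap the translation API call on the Node.js back end.
type TranslateFn = (text: string, targetLanguage: string) => Promise<string>;

// Pass one transcript on for translation, skipping silence and surviving API errors.
async function translateTranscript(
  transcript: string,
  targetLanguage: string,
  translate: TranslateFn
): Promise<string> {
  const text = transcript.trim();
  if (text === "") return ""; // nothing was said, so skip the API call
  try {
    return await translate(text, targetLanguage);
  } catch {
    return text; // if translation fails, show the original words instead
  }
}

// Stand-in translator for illustration only: it tags the text instead of translating it.
const fakeTranslator: TranslateFn = async (text, targetLanguage) =>
  `[${targetLanguage}] ${text}`;
```

Calling `translateTranscript("Hello everyone", "de", fakeTranslator)` resolves to the tagged string; swapping in a function that calls a real translation service would leave the rest of the badge code unchanged.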

Finally, he included a mode which would turn the display into a simple conference badge. “It’s just a static screen containing personal information and this was also a community suggestion. It only needed a few lines of code and it didn’t need to interact with an API.” Even so, this project could well be an essential conference accessory.
