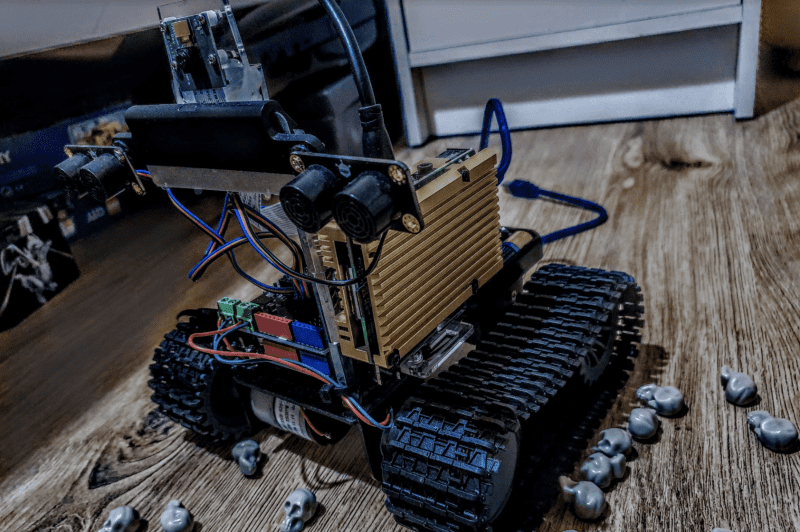

Fortunately for humankind today, Michael Darby’s DIY robot version of the HK Tank is a lot less deadly, firing beams of light from RGB LEDs. “I have always been a huge fan of the Terminator films and I’ve always wanted to recreate the robots from them,” he tells us. “The tracked chassis [a DFRobot Black Gladiator] was perfect for this – it’s not dangerous, but it may bump into someone. The lights on it are also kind of bright.”

Enemy detection

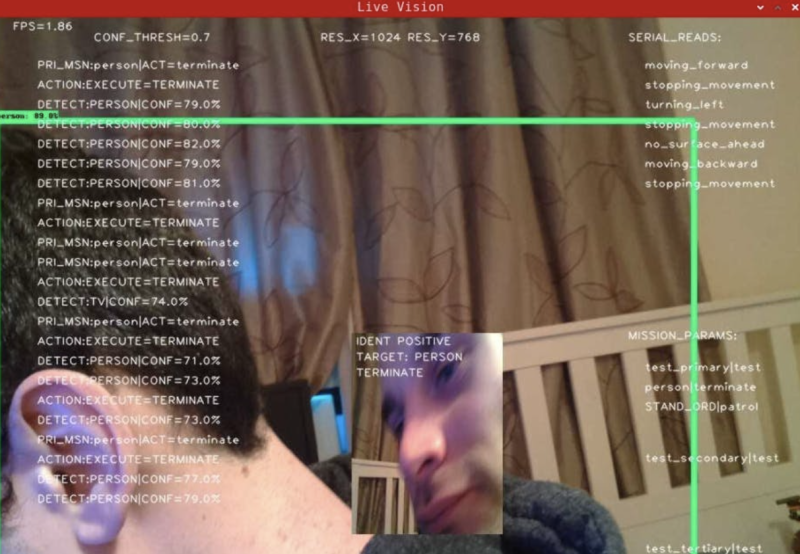

Not only does Michael’s robot look the part, it has numerous sensors to help it navigate, along with a Raspberry Pi Camera Module for spotting humans and objects. If a human is detected, ‘purple plasma’ – or rather, a flash of the RGB LEDs – is fired at them.

Mounted to one side, with a shiny gold heatsink, a Raspberry Pi 4 is the brain of the robot, handling the TensorFlow machine learning and AI functions. “It’s using a pre-trained model, so I think it has a good few thousand things at least it can detect,” says Michael. “In future I will look into getting it trained on more things.”

The robot’s Raspberry Pi also communicates with an Arduino which handles the low-level functions, such as controlling the two DC motors and reading no fewer than five ultrasonic sensors used for obstacle avoidance. “There are probably other sensors I could have used,” notes Michael, “but I thought these would be the easiest to implement. There are two on the front to make sure it doesn’t hit a wall (and also help with the aesthetic) and the others are all in place to prevent it from hitting things behind it, or driving off of the edge of things.”

Join the queue

With the project taking “a good few months” to develop, Michael says the most difficult part was sorting out the code – written in Python – and getting it to do what he wanted. “This project had some really new stuff I was trying, such as the event queue to allow asynchronous processing; this is where it really got complex.”

Rather than handling one function at a time, the code is based on an event queue. All functions run simultaneously in their own threads, dropping their results or requests into the central queue. This prevents conflicts, and events are handled in order of priority: “If there is a module that requires something to happen sooner, like some kind of priority action for moving to a location, or recognising a person, it will be handled before a lower-level action such as processing an object that isn’t a mission parameter.”
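To give a feel for the idea, here is a minimal sketch of a prioritised event queue using Python's standard `queue.PriorityQueue`. It is not Michael's code: the priority values, the `post`, `sensor_module`, and `drain` helpers, and the event names are all assumptions made for illustration.

```python
import itertools
import queue
import threading

# Hypothetical priority levels; a lower number is handled sooner.
PRIORITY_HIGH = 0   # e.g. a person was recognised
PRIORITY_LOW = 10   # e.g. an object that is not a mission parameter

events = queue.PriorityQueue()
_counter = itertools.count()  # tie-breaker: equal priorities stay in arrival order


def post(priority, name, payload=None):
    """Called from any module thread to drop an event into the central queue."""
    events.put((priority, next(_counter), name, payload))


def sensor_module(name, priority, readings):
    """Stand-in for a threaded module (camera, ultrasonic...) producing events."""
    for reading in readings:
        post(priority, name, reading)


def drain():
    """Handle everything currently queued, most urgent first."""
    handled = []
    while True:
        try:
            _priority, _seq, name, payload = events.get_nowait()
        except queue.Empty:
            return handled
        handled.append((name, payload))


if __name__ == "__main__":
    threads = [
        threading.Thread(target=sensor_module,
                         args=("object", PRIORITY_LOW, ["chair", "cup"])),
        threading.Thread(target=sensor_module,
                         args=("person", PRIORITY_HIGH, ["human"])),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(drain())  # the "person" event comes out ahead of the "object" events
```

On a real robot, the consumer would more likely sit in a loop blocking on `events.get()` rather than draining the queue once, but the ordering principle is the same.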

Mission parameters are stored in YAML files rather than hard-coded. “You can essentially give it commands via a YAML file that contains all of its standing orders and configurations,” explains Michael. “From here it can be set to identify certain things and react in a certain way, such as spot a human and light up the ‘plasma turret’ RGB LEDs.”
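The article does not show the actual file format, so the following is only a guess at how such standing orders might be looked up. The keys (`targets`, `default`), the action names, and the `reaction_for` helper are hypothetical; the dictionary stands in for what a YAML parser such as PyYAML's `yaml.safe_load` would return for the file shown in the comment.

```python
# Hypothetical standing orders, written as the dict a YAML parser would
# return for a file such as:
#
#   targets:
#     person: fire_plasma
#     cat: ignore
#   default: log
STANDING_ORDERS = {
    "targets": {"person": "fire_plasma", "cat": "ignore"},
    "default": "log",
}


def reaction_for(label, orders=STANDING_ORDERS):
    """Return the configured reaction for a detected label, or the default."""
    return orders["targets"].get(label, orders["default"])


if __name__ == "__main__":
    for label in ("person", "cat", "chair"):
        print(label, "->", reaction_for(label))
```

Keeping the mapping in a file rather than in code means the robot's behaviour can be changed by editing its orders, without touching the detection logic.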

While the robot has received positive feedback from the community, Michael is planning to make improvements, such as training the model on specific faces and “allowing for more complex patrol patterns and ‘missions’ and make it more of an autonomous bot.” Maybe it’s just as well it’s only firing light beams.
