Tag: TinyML

-

Training embedded audio classifiers for the Nicla Voice on synthetic datasets

Reading Time: 2 minutes

The task of gathering enough data to classify distinct sounds not captured in a larger, more robust dataset can be very time-consuming, at least until now. In his write-up, Shakhizat Nurgaliyev describes how he used an array of AI tools to automatically create a keyword spotting dataset without the need for speaking into a microphone. The…

-

Fall detection system with Nicla Sense ME

Reading Time: 4 minutes

The challenge

Personal safety is a growing concern in a variety of settings: from high-risk jobs where HSE managers must guarantee workers’ security to the increasingly common work and study choices that drive family and friends far apart, sometimes leading to more isolated lives. In all of these situations, having a system…

-

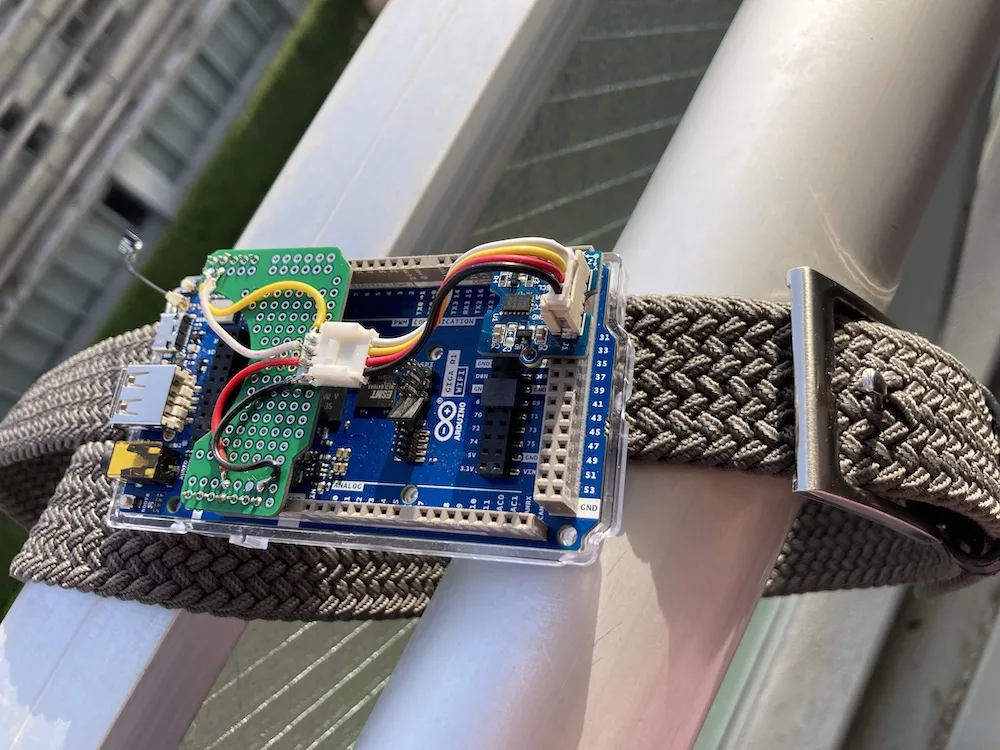

This GIGA R1 WiFi-powered wearable detects falls using a Transformer model

Reading Time: 2 minutes

For those aged 65 and over, falls can be one of the most serious health concerns they face, due to either lower mobility or decreasing overall coordination. Recognizing this issue, Naveen Kumar set out to produce a wearable fall-detecting device that aims to increase the speed at which this occurs by utilizing…

-

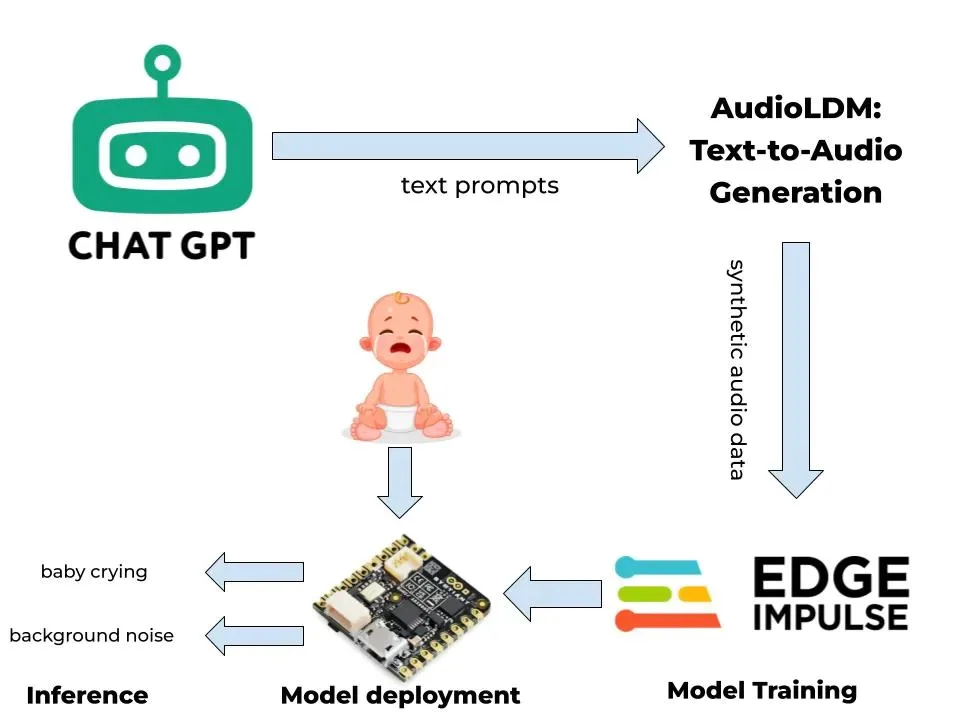

Detect a crying baby with tinyML and synthetic data

Reading Time: 2 minutes

When a baby cries, it is almost always because something is wrong, which could include, among other things, hunger, thirst, stomach pain, or too much noise. In his project, Shakhizat Nurgaliyev demonstrated how he was able to leverage ML tools to build a cry-detection system without the need for collecting real-world data himself.…

-

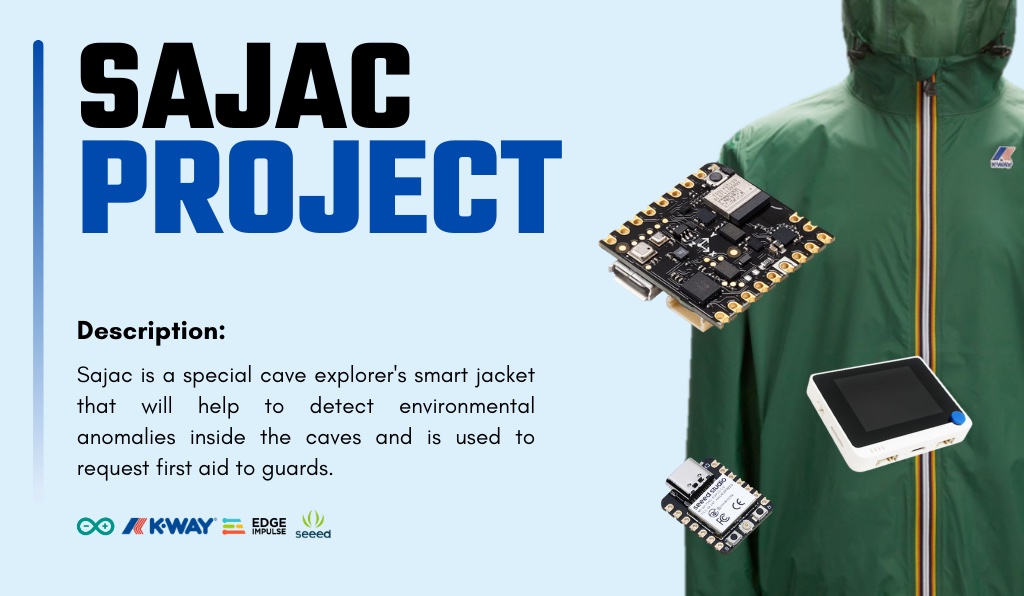

Cave exploration made safer with the Nicla Sense ME-powered Sajac Project

Reading Time: 2 minutes

The art of cave exploration, or spelunking, can get its practitioners far closer to nature and the land we inhabit, but it also comes with a host of potential dangers. Some include extreme environmental conditions, lack of oxygen/toxic gases, and simply having their path closed off due to rock falls. Seeing these…

-

Predicting when a fan fails by listening to it

Reading Time: 2 minutes

Embedded audio classification is a very powerful tool when it comes to predictive maintenance, as a wide variety of sounds can be distinguished as either normal or harmful several times per second automatically and reliably. To demonstrate how this pattern recognition could be incorporated into a commercial setting, Kevin Richmond created the Listen Up…

-

Using sensor fusion and tinyML to detect fires

Reading Time: 2 minutes

The damage and destruction caused by structure fires to both people and the property itself is immense, which is why accurate and reliable fire detection systems are a must-have. As Nekhil R. notes in his write-up, the current rule-based algorithms and simple sensor configurations can lead to reduced accuracy, thus showing a…

-

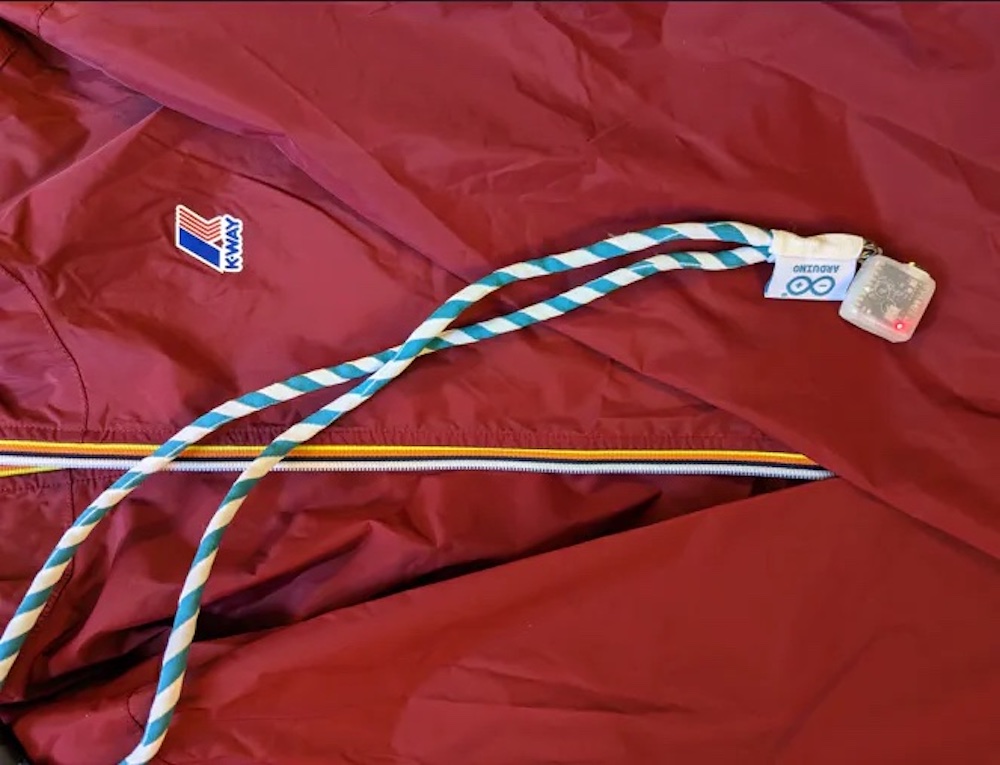

Add gesture recognition and environmental sensing to your hiking jacket with the Nicla Sense ME

Reading Time: 2 minutes

As part of our ongoing collaboration with K-Way, Justin Lutz set out to integrate intelligent electronics into one of the company’s iconic outdoor jackets. Due to the active lifestyle of the brand, Lutz chose to use the Arduino Nicla Sense ME board to detect gestures while hiking as well as monitor the barometric pressure…

-

This DIY Apple Pencil writes with gestures

Reading Time: 2 minutes

Released in 2015, the Apple Pencil is a technology-packed stylus that allows users to write on iPad screens with variations in pressure and angle, all while communicating with very low latencies. Nekhil Ravi and Shebin Jose Jacob of Coders Café were inspired by this piece of handheld tech to come up with their own…

-

Predicting potential motor failures just using sound

Reading Time: 2 minutes

Nearly every manufacturer uses a machine at some point in their process, and each of those machines is almost guaranteed to contain at least one motor. In order to maintain uptime and efficiency, these motors must always work correctly, as even a small breakdown can lead to disastrous effects. Predictive maintenance aims…

-

Detect vandalism using audio classification on the Nano 33 BLE Sense

Reading Time: 2 minutes

Having something broken into and/or destroyed is something most people hope to avoid altogether, or at least to catch the perpetrator in the act when it does occur. And as Nekhil R. notes in his project write-up, traditional deterrence/detection methods often fail, meaning that a newer type of solution was necessary.…

-

Turning a K-Way jacket into an intelligent hike tracker with the Nicla Sense ME

Reading Time: 2 minutes

Going for a hike outdoors is a great way to relieve stress, do some exercise, and get closer to nature, but tracking it can be a challenge. Our recent collaboration with K-Way led Zalmotek to develop a small wearable device that can be paired to a jacket to track walking speed, steps taken,…

-

This wearable cough monitor can help improve respiratory disease detection

Reading Time: 2 minutes

A large number of diseases involve coughing as one of their primary symptoms, but none are quite as concerning as chronic obstructive pulmonary disease (COPD), which causes airflow blockages and other breathing problems in those afflicted by it. Consistently monitoring the frequency and intensity of coughing is vital for tracking how well…

-

Preventing excessive water consumption with tinyML

Reading Time: 2 minutes

As the frequency and intensity of droughts around the world continue to increase, being able to reduce our water usage is vital for maintaining already strained freshwater resources. And according to the EPA, leaving a faucet running, whether intentionally or by accident, for just five minutes can consume over ten gallons of…

-

Spotting defects in solar panels with machine learning

Reading Time: 2 minutes

Large solar panel installations are vital for our future of energy production without the massive carbon dioxide emissions we currently produce. However, microscopic fractures, hot spots, and other defects on the surface can expand over time, thus leading to reductions in output and even failures if left undetected. Manivannan Sivan’s solution for tackling this…

-

The Smart-Badge recognizes kitchen activities with its suite of sensors

Reading Time: 2 minutes

We all strive to maintain healthier lifestyles, yet the kitchen is often the most challenging environment by far because it contains such a wide range of foods and beverages. The Smart-Badge project, created by a team of researchers from the German Research Centre for Artificial Intelligence (DFKI), aims to track just how many…

-

This tinyML-powered baby swing automatically starts when crying is detected

Reading Time: 2 minutes

No one enjoys hearing their baby cry, especially when it occurs in the middle of the night or when the parents are preoccupied with another task. Unfortunately, switching on a motorized baby swing requires physically getting up and pressing a switch or button, which is why Manivannan Sivan developed one that can automatically…

-

Add ML-controlled smart suspension adjustment to your bicycle

Reading Time: 3 minutes

Some modern cars, trucks, and SUVs have smart active suspension systems that can adjust to different terrain conditions. They adjust in real time to maintain safety or performance. But they tend to come only on high-end vehicles because they’re expensive, complicated, and add weight. That’s why it is so impressive that Jallson Suryo…

-

Count elevator passengers with the Nicla Vision and Edge Impulse

Reading Time: 3 minutes

Modern elevators are powerful, but they still have a payload limit. Most will contain a plaque with the maximum number of passengers (a number based on their average weight with lots of room for error). But nobody has ever read the capacity limit when stepping into an elevator or worried about exceeding…

-

tinyML device monitors packages for damage while in transit

Reading Time: 2 minutes

Arduino Team, September 10th, 2022

Although the advent of widespread online shopping has been a great convenience, it has also led to a sharp increase in the number of returned items. This can be blamed on a number of factors, but a large contributor to this issue is damage in shipping. Shebin…

-

This piece of art knows when it’s being photographed thanks to tinyML

Reading Time: 2 minutes

Arduino Team, September 9th, 2022

Nearly all art functions in just a single direction by allowing the viewer to admire its beauty, creativity, and construction. But Estonian artist Tauno Erik has done something a bit different thanks to embedded…

-

Detecting and tracking worker falls with embedded ML

Reading Time: 2 minutes

Certain industries rely on workers being able to reach high spaces through the use of ladders or mobile standing platforms. And because of their potential danger if a fall were to occur, Roni Bandini had the idea to create an integrated system that can detect a fall and report it automatically across a wide…