Tag: Nicla Voice

-

Empowering the transportation of the future, with the Ohio State Buckeye Solar Racing Team

Reading Time: 3 minutes

Arduino is ready to graduate its educational efforts in support of university-level STEM and R&D programs across the United States. This is where students come together to explore the solutions that will soon define their future, both in terms of their personal careers and, more importantly, their impact on the world. Case…

-

Controlling a power strip with a keyword spotting model and the Nicla Voice

Reading Time: 2 minutes

As Jallson Suryo discusses in his project, adding voice controls to our appliances typically involves an internet connection and a smart assistant device such as Amazon Alexa or Google Assistant. This means extra latency, security concerns, and increased expenses due to the additional hardware and bandwidth requirements. This is why he created a…

-

Improve recycling with the Arduino Pro Portenta C33 and AI audio classification

Reading Time: 2 minutes

In July 2023, Samuel Alexander set out to reduce the amount of trash that gets thrown out due to poor sorting practices at the recycling bin. His original design relied on an Arduino Nano 33 BLE Sense to capture audio through its onboard microphone and then perform edge audio classification with an…

-

Building the OG smartwatch from Inspector Gadget

Reading Time: 2 minutes

We recently showed you Becky Stern’s recreation of the “computer book” carried by Penny in the Inspector Gadget cartoon, but Stern didn’t stop there. She also built a replica of Penny’s most iconic gadget: her watch. Penny was a trendsetter and rocked a smartwatch decades before the Apple Watch hit the market. Stern’s replica looks just…

-

Improving comfort and energy efficiency in buildings with automated windows and blinds

Reading Time: 2 minutes

When dealing with indoor climate controls, there are several variables to consider, such as the outside weather, people’s tolerance for hot or cold temperatures, and the desired level of energy savings. Windows can make this extra challenging, as they let in large amounts of light and heat and can create poorly insulated regions, which…

-

A snore-no-more device designed to help those with sleep apnea

Reading Time: 2 minutes

Although snoring itself is a relatively harmless condition, people who snore while asleep may also be suffering from sleep apnea, a potentially serious disorder that causes the airway to repeatedly close and block oxygen from reaching the lungs. As an effort to alert those who might be unaware they…

-

Small-footprint keyword spotting for low-resource languages with the Nicla Voice

Reading Time: 2 minutes

Speech recognition is everywhere these days, yet some languages, such as Shakhizat Nurgaliyev and Askat Kuzdeuov’s native Kazakh, lack sufficiently large public datasets for training keyword spotting models. To make up for this disparity, the duo explored generating synthetic datasets using a neural text-to-speech system called Piper, and then extracting speech commands from the audio with…

-

Training embedded audio classifiers for the Nicla Voice on synthetic datasets

Reading Time: 2 minutes

The task of gathering enough data to classify distinct sounds not captured in a larger, more robust dataset can be very time-consuming, at least until now. In his write-up, Shakhizat Nurgaliyev describes how he used an array of AI tools to automatically create a keyword spotting dataset without the need for speaking into a microphone. The…

-

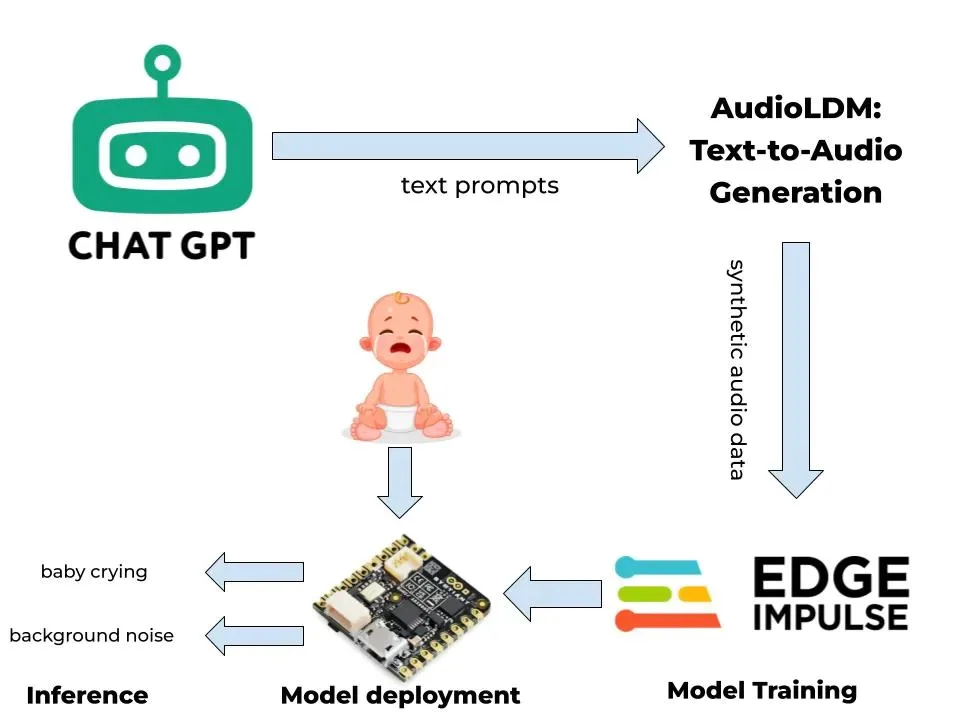

Detect a crying baby with tinyML and synthetic data

Reading Time: 2 minutes

When a baby cries, it is almost always due to something that is wrong, which could include, among other things, hunger, thirst, stomach pain, or too much noise. In his project, Nurgaliyev Shakhizat demonstrated how he was able to leverage ML tools to build a cry-detection system without the need for collecting real-world data himself.…

-

Have you heard? Nicla Voice is out at CES 2023!

Reading Time: 2 minutes

As announced at CES 2023 in Las Vegas, our tiny form factor family keeps growing: the 22.86 x 22.86 mm Nicla range now includes Nicla Voice, allowing for easy implementation of always-on speech recognition on the edge. How? Let’s break it down. 1. The impressive sensor package. Nicla Voice comes with a…