Tag: Nicla

-

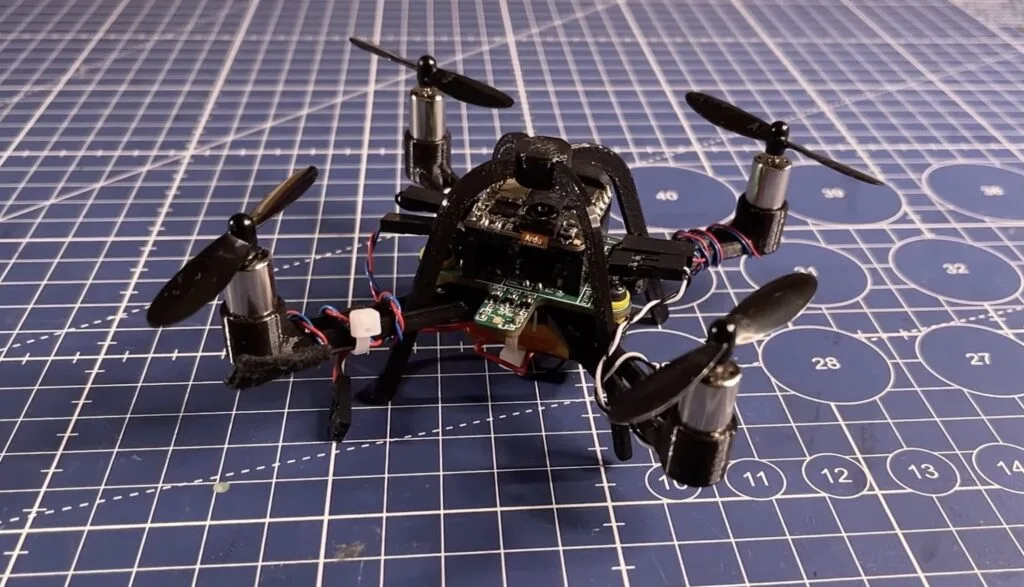

Using an Arduino Nicla Vision as a drone flight controller

Reading Time: 2 minutes

Drone flight controllers do so much more than simply receive signals and tell the drone which way to move. They’re responsible for constantly tweaking the motor speeds in order to maintain stable flight, even with shifting winds and other unpredictable factors. For that reason, most flight controllers are purpose-built for the job.…

-

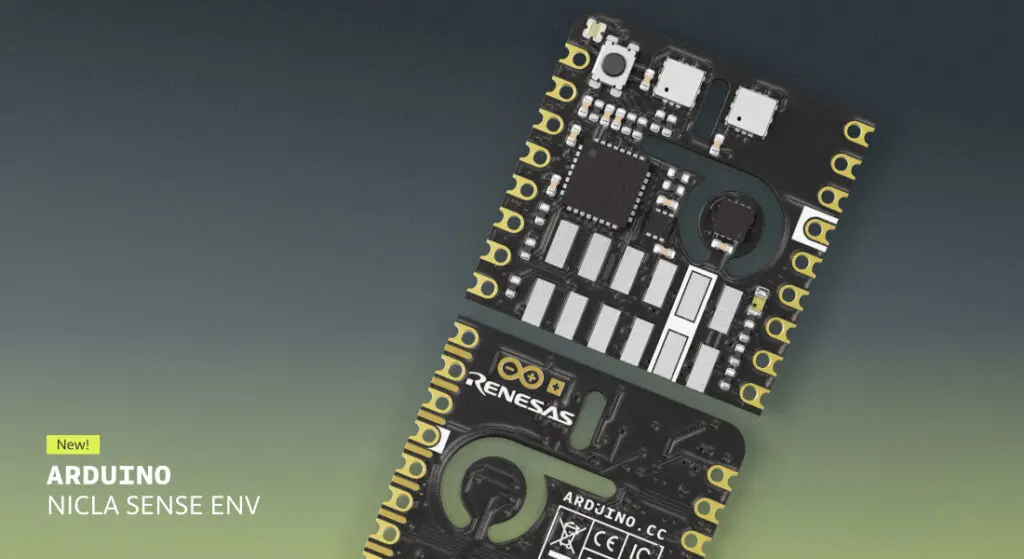

Arduino Nicla Sense Env: adding advanced environmental sensing to a broad range of applications

Reading Time: 4 minutes

We’re thrilled to announce the launch of Nicla Sense Env: the latest addition to our portfolio of system-on-modules and sensor nodes, empowering innovators with the tools to unlock new possibilities. This tiny yet powerful sensor node is designed to elevate your environmental sensing projects to new heights. Whether you’re a seasoned professional…

-

Making a car more secure with the Arduino Nicla Vision

Reading Time: 2 minutes

Shortly after attending a recent tinyML workshop in São Paulo, Brazil, Joao Vitor Freitas da Costa was looking for a way to incorporate some of the technologies and techniques he learned into a useful project. Given that he lives in an area which experiences elevated levels of pickpocketing and automotive theft, he turned…

-

This Nicla Vision-powered ornament covertly spies on the presents below

Reading Time: 2 minutes

Whether it’s an elf that stealthily watches from across the room or an all-knowing Santa Claus that keeps a list of one’s actions, spying during the holidays is nothing new. But when it comes time to receive presents, the more eager among us might want to know what presents await us a…

-

Controlling a power strip with a keyword spotting model and the Nicla Voice

Reading Time: 2 minutes

As Jallson Suryo discusses in his project, adding voice controls to our appliances typically involves an internet connection and a smart assistant device such as Amazon Alexa or Google Assistant. This means extra latency, security concerns, and increased expenses due to the additional hardware and bandwidth requirements. This is why he created a…

-

Building the OG smartwatch from Inspector Gadget

Reading Time: 2 minutes

We recently showed you Becky Stern’s recreation of the “computer book” carried by Penny in the Inspector Gadget cartoon, but Stern didn’t stop there. She also built a replica of Penny’s most iconic gadget: her watch. Penny was a trendsetter and rocked it decades before the Apple Watch hit the market. Stern’s replica looks just…

-

Improving comfort and energy efficiency in buildings with automated windows and blinds

Reading Time: 2 minutes

When dealing with indoor climate controls, there are several variables to consider, such as the outside weather, people’s tolerance to hot or cold temperatures, and the desired level of energy savings. Windows can make this extra challenging, as they let in large amounts of light/heat and can create poorly insulated regions, which…

-

Helping robot dogs feel through their paws

Reading Time: 2 minutes

Your dog has nerve endings covering its entire body, giving it a sense of touch. It can feel the ground through its paws and use that information to gain better traction or detect harmful terrain. For robots to perform as well as their biological counterparts, they need a similar level of sensory…

-

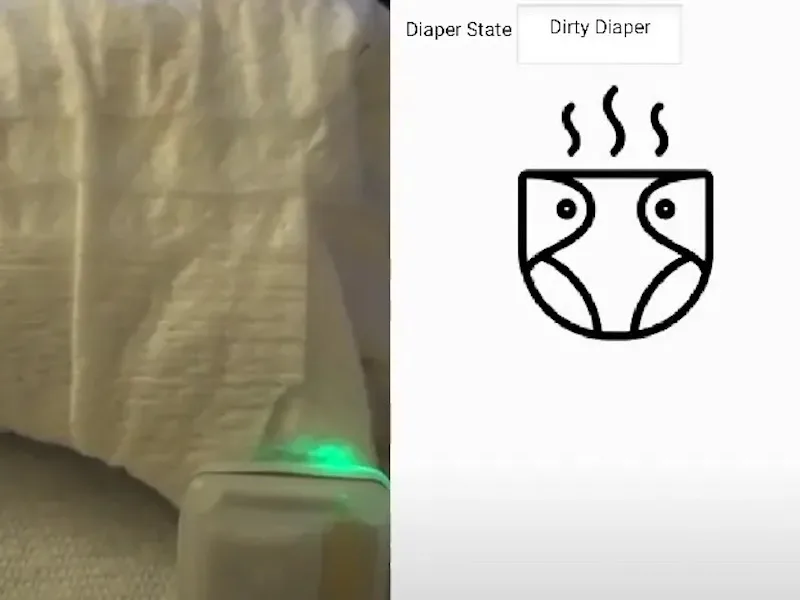

This smart diaper knows when it is ready to be changed

Reading Time: 2 minutes

The traditional method for changing a diaper starts when someone smells or feels that the diaper has been soiled, and while it isn’t the greatest process, removing the soiled diaper as soon as possible is important for avoiding rashes and infections. Justin Lutz has created an intelligent solution to this situation by designing…

-

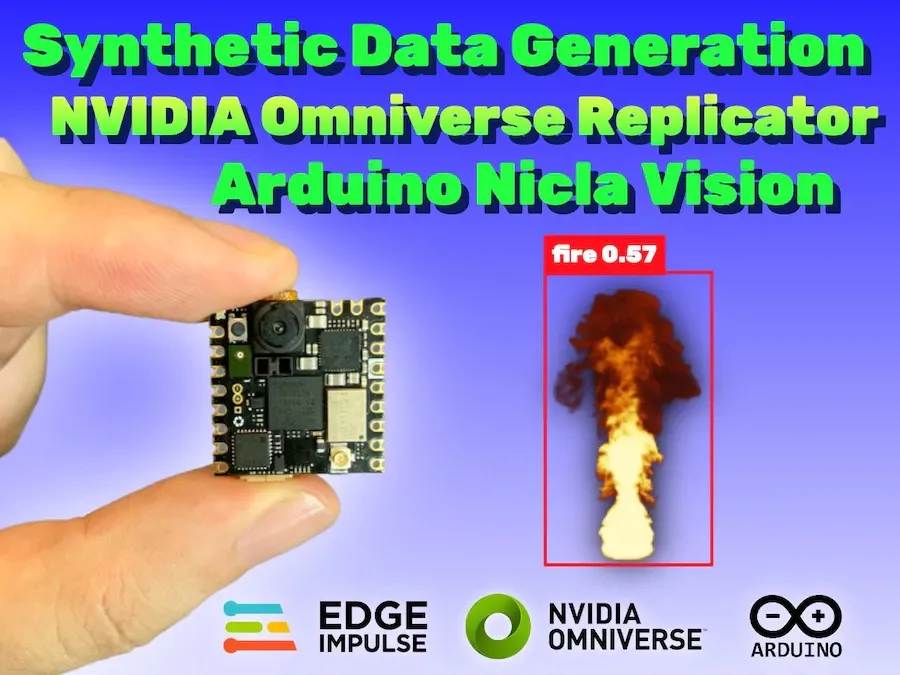

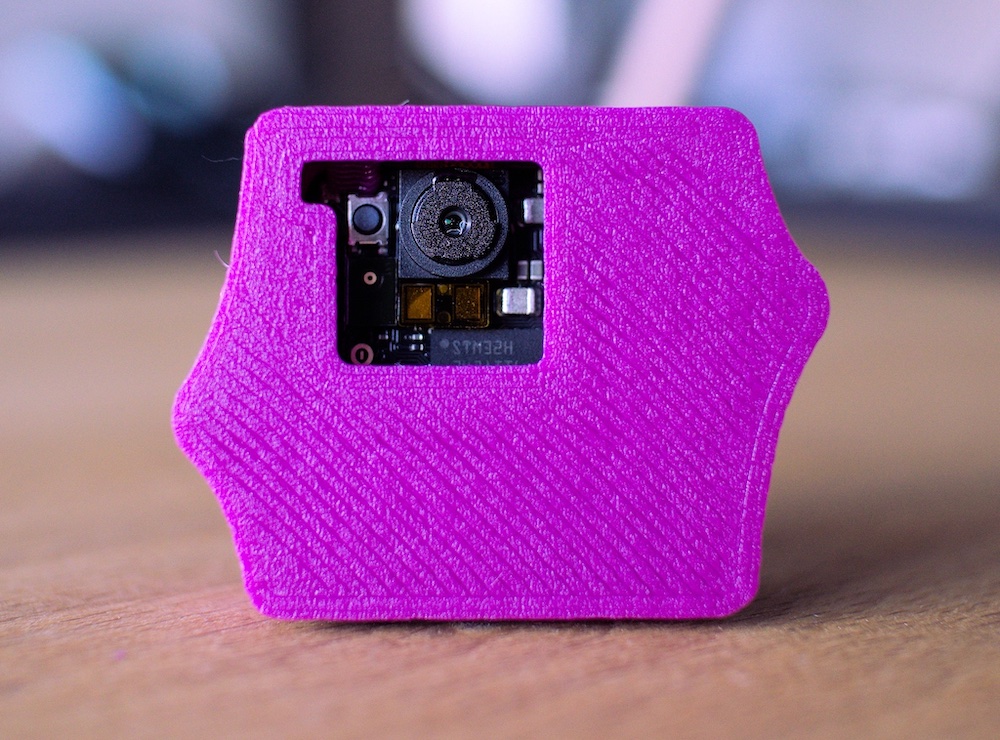

This Nicla Vision-based fire detector was trained entirely on synthetic data

Reading Time: 2 minutes

With climate change warming the planet and prolonged droughts greatly increasing the chance of wildfires, being able to quickly detect when a fire has broken out is vital for responding while it’s still in a containable stage. But one major hurdle to collecting machine learning model datasets on these…

-

A snore-no-more device designed to help those with sleep apnea

Reading Time: 2 minutes

Although snoring itself is a relatively harmless condition, those who snore while asleep may also be suffering from sleep apnea, a potentially serious disorder which causes the airway to repeatedly close and block oxygen from getting to the lungs. As an effort to alert those who might be unaware they…

-

Small-footprint keyword spotting for low-resource languages with the Nicla Voice

Reading Time: 2 minutes

Speech recognition is everywhere these days, yet some languages, such as Shakhizat Nurgaliyev and Askat Kuzdeuov’s native Kazakh, lack sufficiently large public datasets for training keyword spotting models. To make up for this disparity, the duo explored generating synthetic datasets using a neural text-to-speech system called Piper, and then extracting speech commands from the audio with…

-

You have 3 ways to meet Massimo Banzi in the UK!

Reading Time: 3 minutes

Massimo Banzi and the Arduino Pro team will be crossing the Channel soon for a short tour of Southern England, touching base with long-time partners and meeting many new Arduino fans! On July 11th at 4PM BST, Massimo has been invited to give a Tech Talk at Arm’s headquarters in Cambridge, as…

-

Intelligently control an HVAC system using the Arduino Nicla Vision

Reading Time: 2 minutes

Shortly after setting the desired temperature of a room, a building’s HVAC system will engage and work to either raise or lower the ambient temperature to match. While this approach generally works well to control the local environment, the strategy also leads to a tremendous waste of energy since it is unable to…

-

Enabling automated pipeline maintenance with edge AI

Reading Time: 2 minutes

Pipelines are integral to our modern way of life, as they enable the fast transportation of water and energy between central providers and the eventual consumers of that resource. However, the presence of cracks from mechanical or corrosive stress can lead to leaks, and thus waste of product or even potentially dangerous…

-

Arduino Pro at SPS Italy 2023

Reading Time: 3 minutes

Mark your calendars: May 23rd-25th we’ll be at SPS Italia, one of the country’s leading fairs for smart, digital, sustainable industry and a great place to find out what’s new in automation worldwide. We expect a lot of buzz around AI for IoT applications – and, of course, we’ll come prepared to…

-

Training embedded audio classifiers for the Nicla Voice on synthetic datasets

Reading Time: 2 minutes

The task of gathering enough data to classify distinct sounds not captured in a larger, more robust dataset can be very time-consuming, at least until now. In his write-up, Shakhizat Nurgaliyev describes how he used an array of AI tools to automatically create a keyword spotting dataset without the need for speaking into a microphone. The…

-

Fall detection system with Nicla Sense ME

Reading Time: 4 minutes

The challenge: Personal safety is a growing concern in a variety of settings: from high-risk jobs where HSE managers must guarantee workers’ security to the increasingly common work and study choices that drive family and friends far apart, sometimes leading to more isolated lives. In all of these situations, having a system…

-

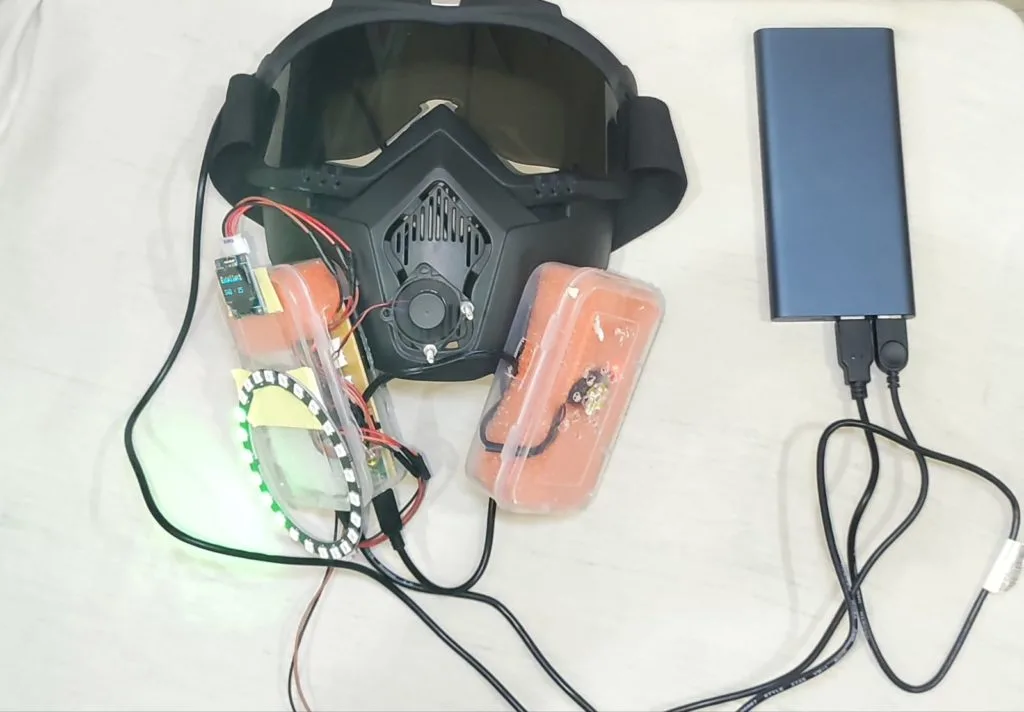

The Environmental Sense Mask monitors air quality in real-time

Reading Time: 2 minutes

Hazardous pollution in the form of excess CO2, nitrogen dioxide, microscopic particulates, and volatile organic compounds has become a growing concern, especially in developing countries where access to cleaner technologies might not be available or widely adopted. Krazye Karthik’s Environmental Sense Mask (ES-Mask) focuses on bringing attention to these harmful compounds by displaying ambient…

-

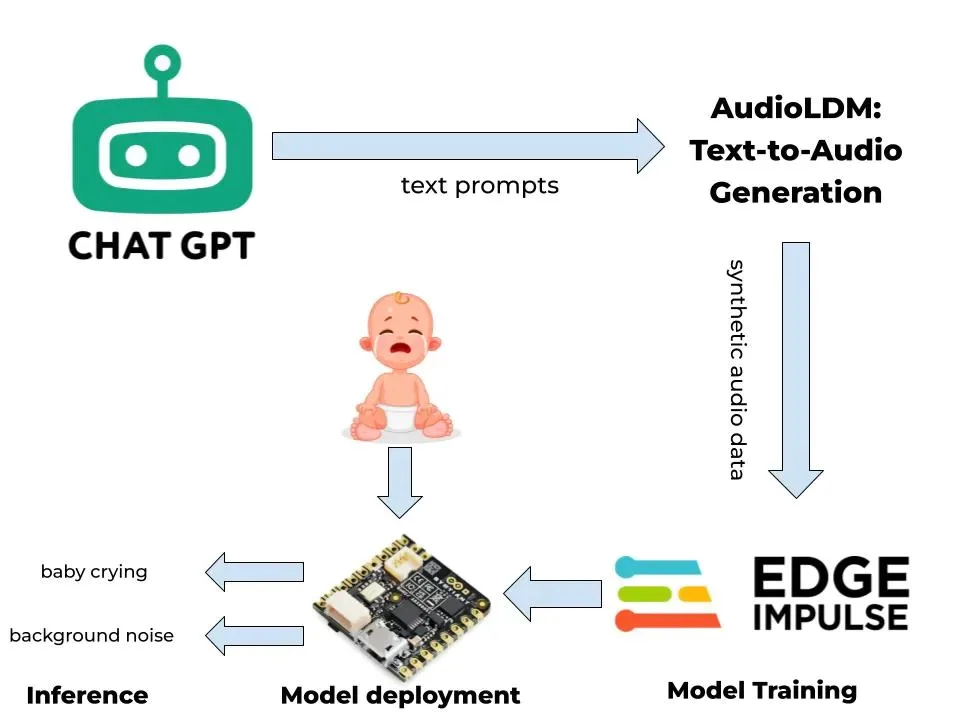

Detect a crying baby with tinyML and synthetic data

Reading Time: 2 minutes

When a baby cries, it is almost always because something is wrong, which could include, among other things, hunger, thirst, stomach pain, or too much noise. In his project, Shakhizat Nurgaliyev demonstrated how he was able to leverage ML tools to build a cry-detection system without the need for collecting real-world data himself.…

-

Want to keep accurate inventory? Count containers with the Nicla Vision

Reading Time: 2 minutes

Maintaining accurate records for both the quantities and locations of inventory is vital for running any business operation efficiently and at scale. By leveraging new technologies such as AI and computer vision, items in warehouses, store shelves, and even a customer’s hand can be better managed and used to forecast changes in demand.…