Despite these alarmist news items gaining media coverage every single year for more than a decade, we punters just don’t seem to wise up to such confidence tricks. Tech entrepreneurs Kakapo Labs set about a more benign riff on the idea that Joe Public loves a bit of flattery: using clever verbal flummery generated by AI darling ChatGPT, they created a Love Machine that dishes out compliments and chocolates in equal measure. So far, the gentle love-bombing experiment doesn’t seem to have a hidden agenda.

Sweet nothings

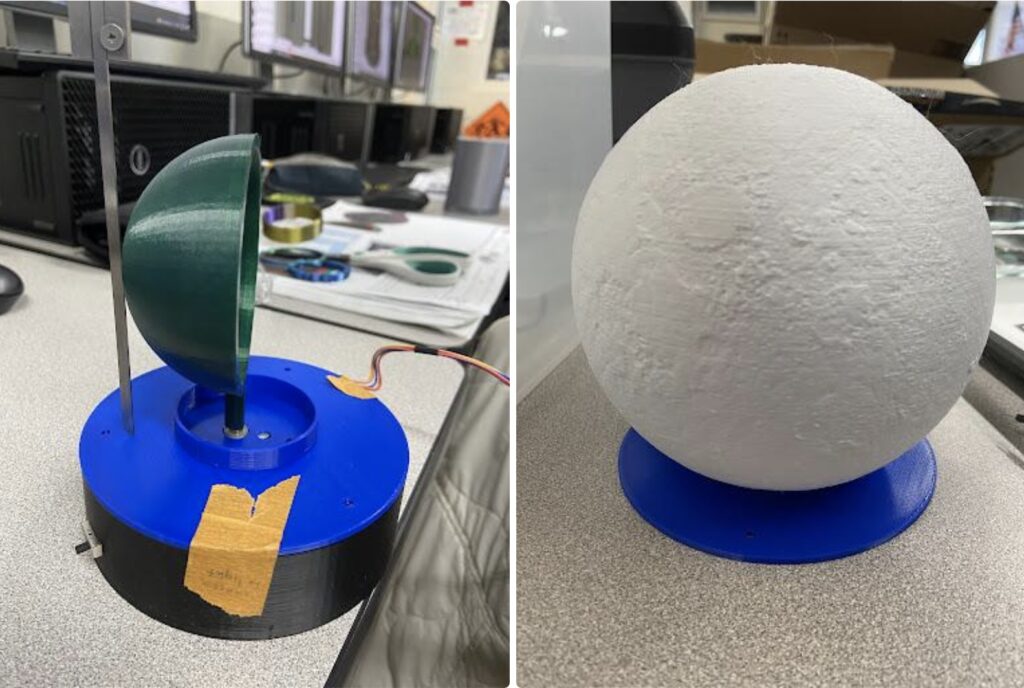

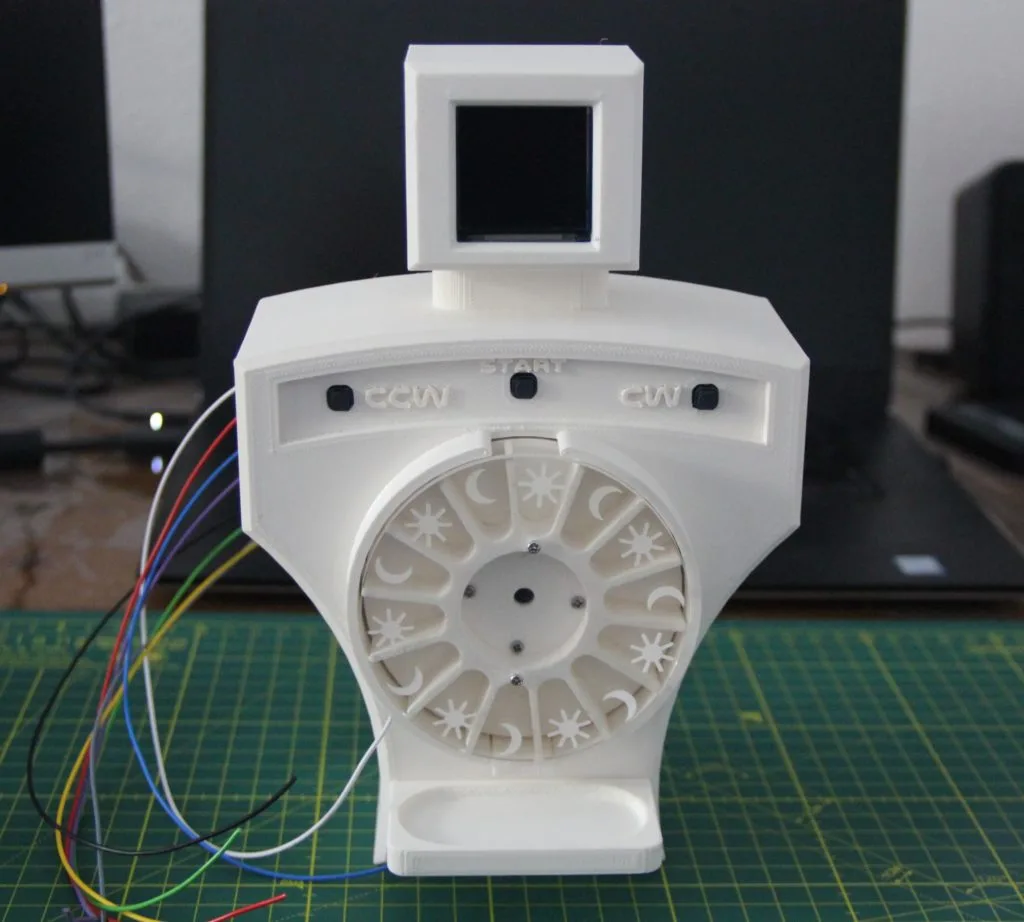

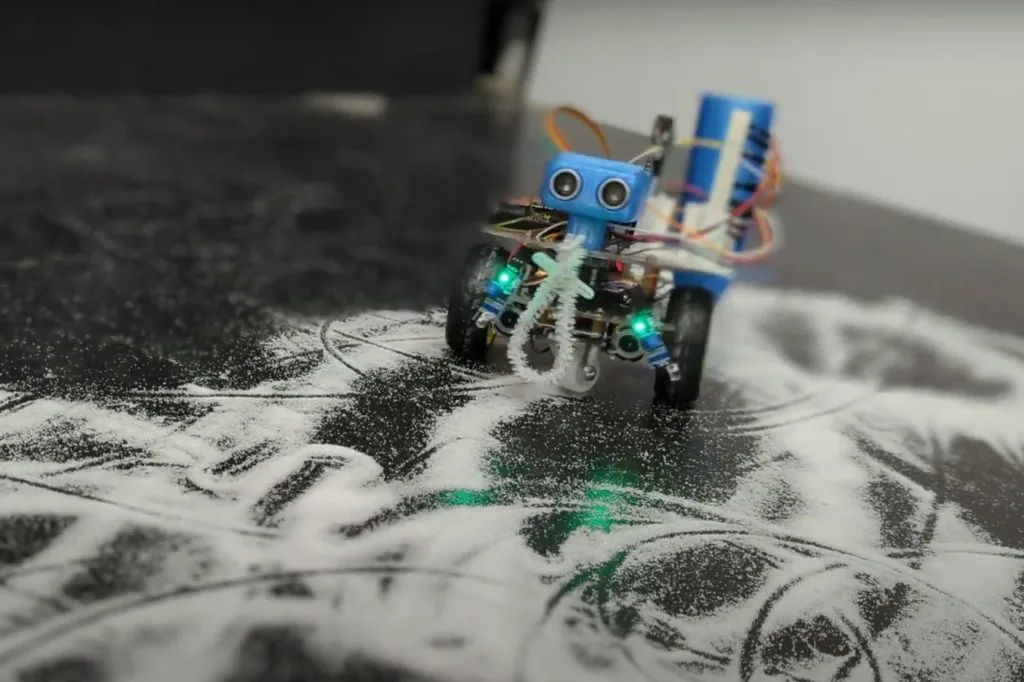

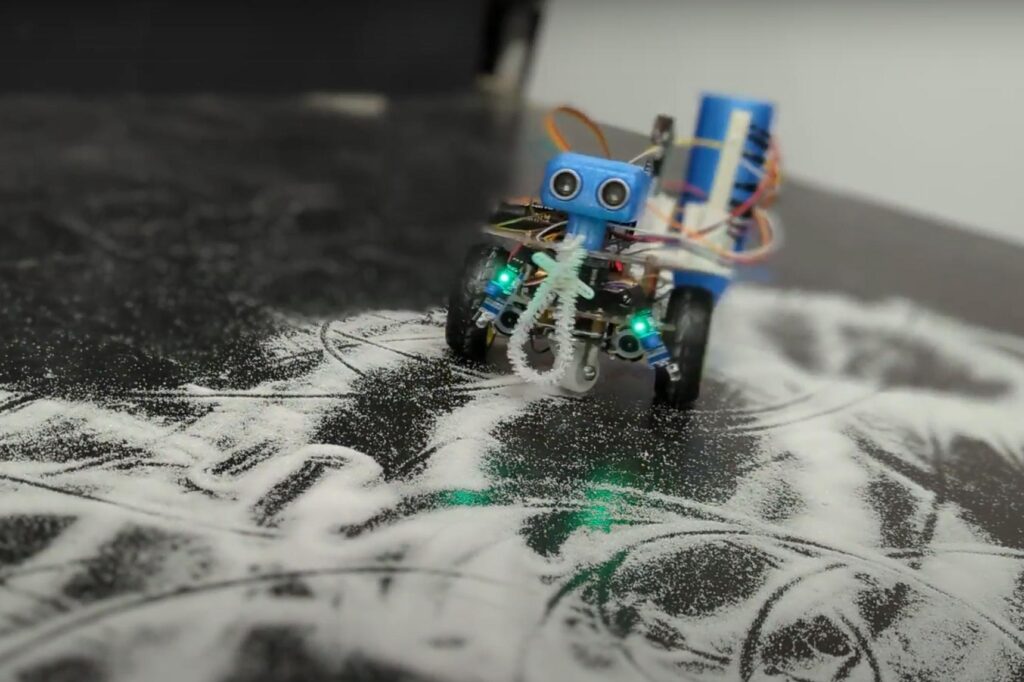

Will and India, from London-based Kakapo Labs, have backgrounds in electronics engineering and graphic design, respectively, and are interested in “building fun, positive things that cross the boundary between the internet and real-world objects.” As with the password insecurity mentioned above, Will notes that behavioural psychology research makes it pretty clear that people are highly motivated by small rewards. “We were interested in what people might do to get a small reward and how much they’d enjoy it. ChatGPT was in the news a lot around the time we started this project, but mainly related to its impact on work. We thought instead we could try to use it to make some fun.” Hence the AI cutey sporting a designer fluffy red skirt and matching glowing red LED matrix heart.

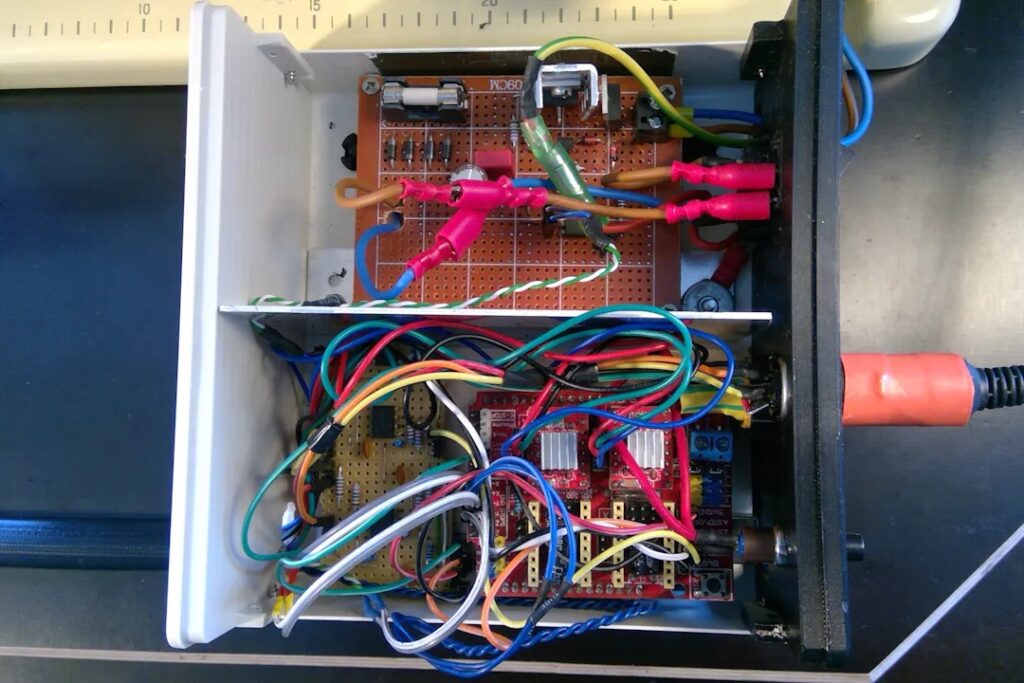

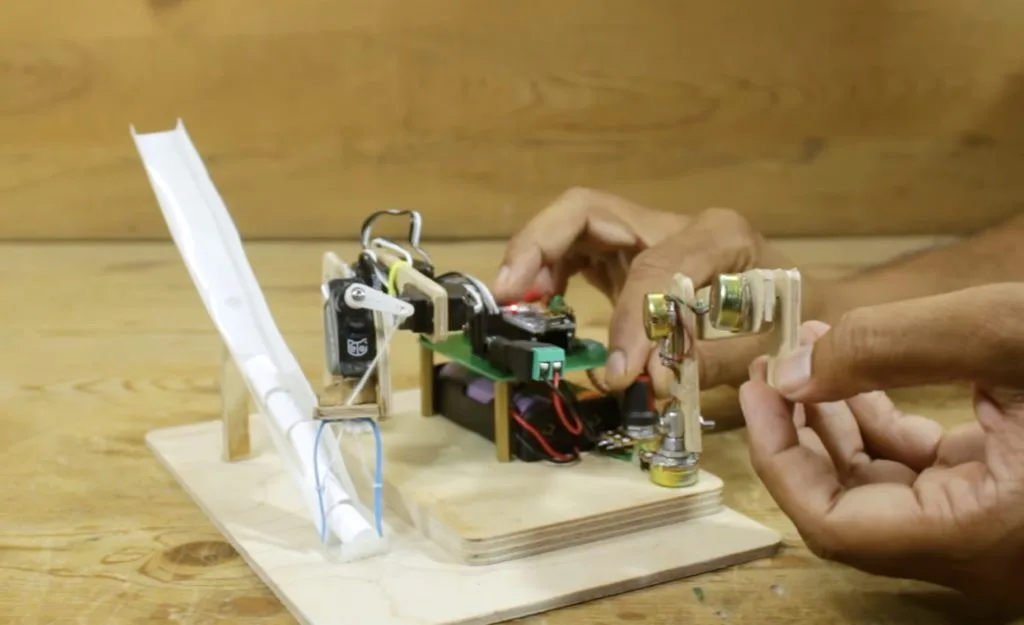

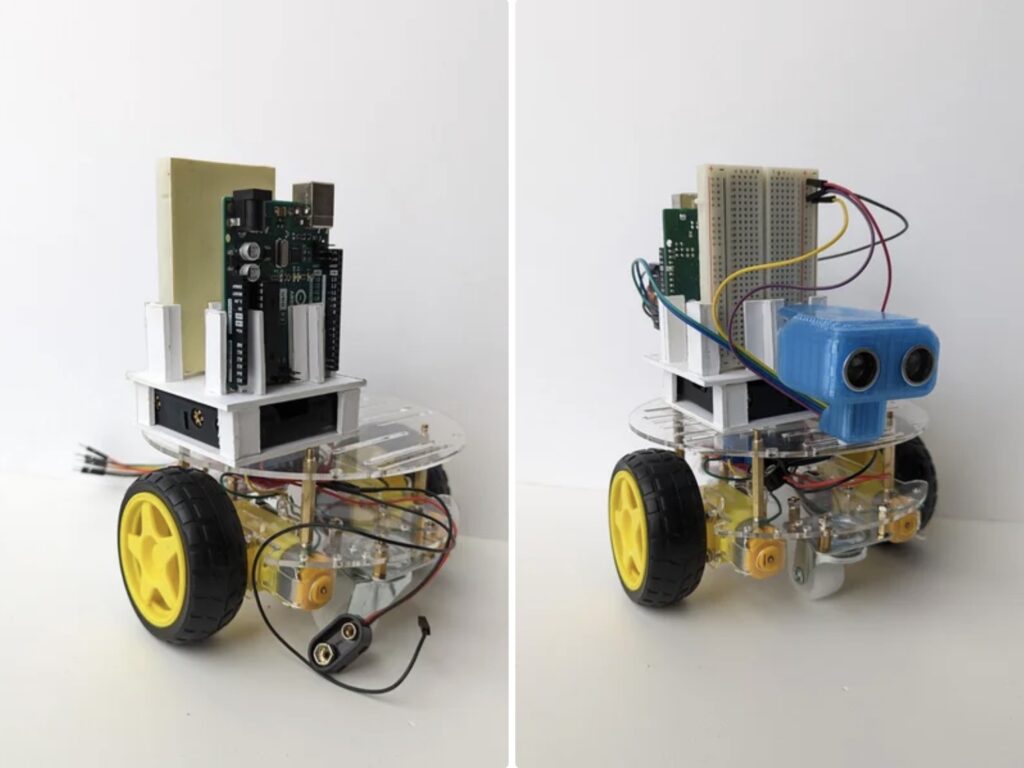

They chose Pico W for this ChatGPT Love Machine “because it’s powerful but also simple, low-cost, and small but, at the same time, can run a full wireless stack which is easy to use.” Kakapo Labs has more than a decade’s experience of using wireless microcontrollers, and has often found them complicated to use “as the trade-offs needed to squeeze an internet stack into an environment without a full operating system and limited resources meant it always felt like a bit of an awkward fit.” However, Will says that, with MicroPython on Pico W, things work painlessly and the development time is short.

Fiddling about

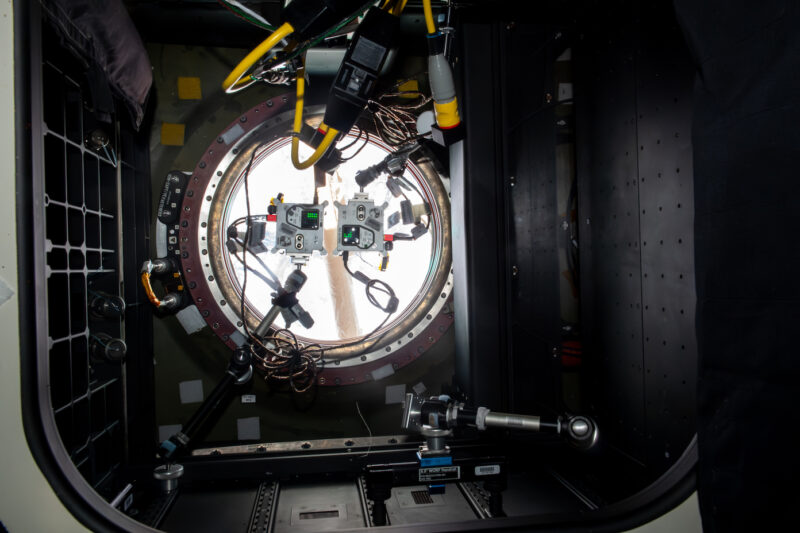

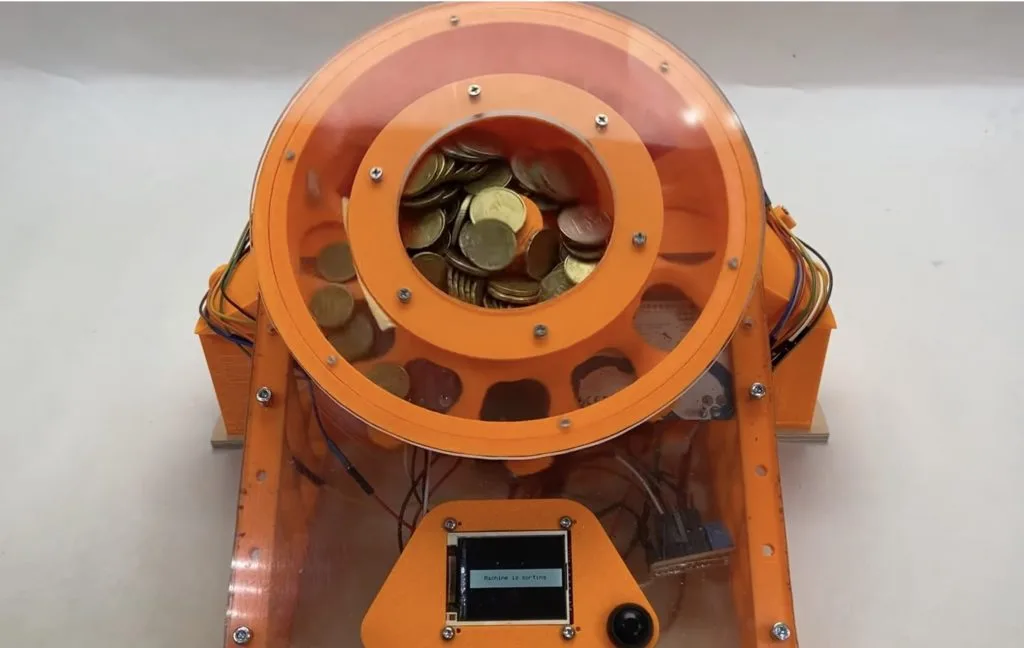

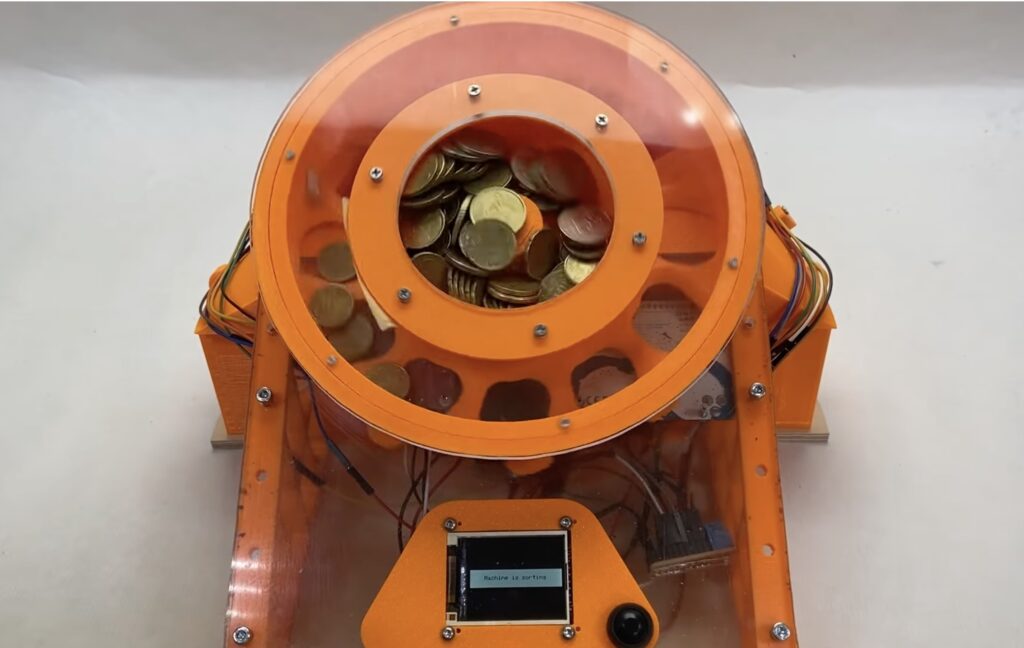

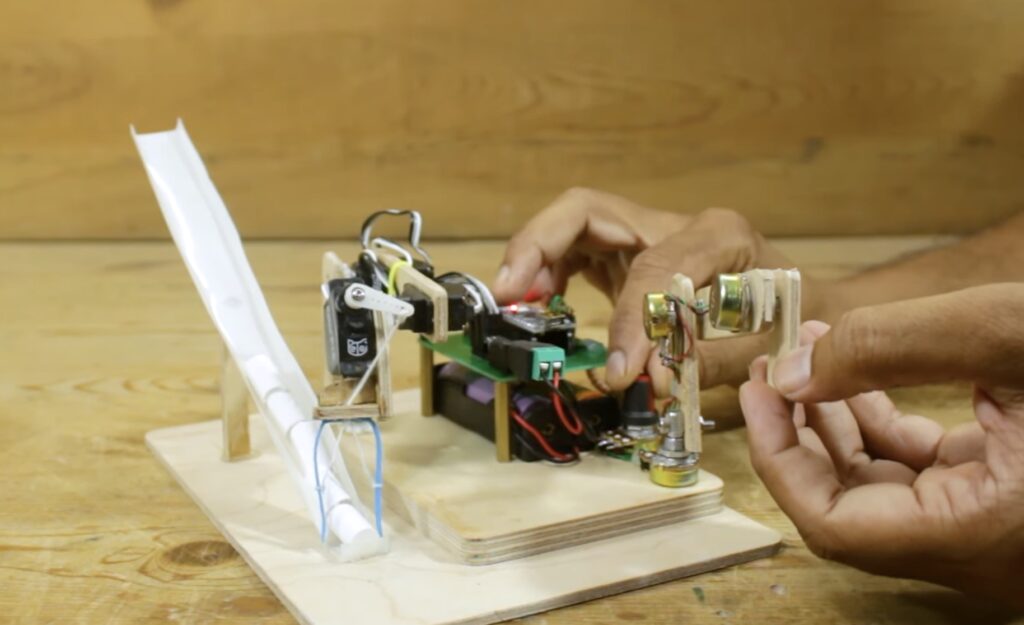

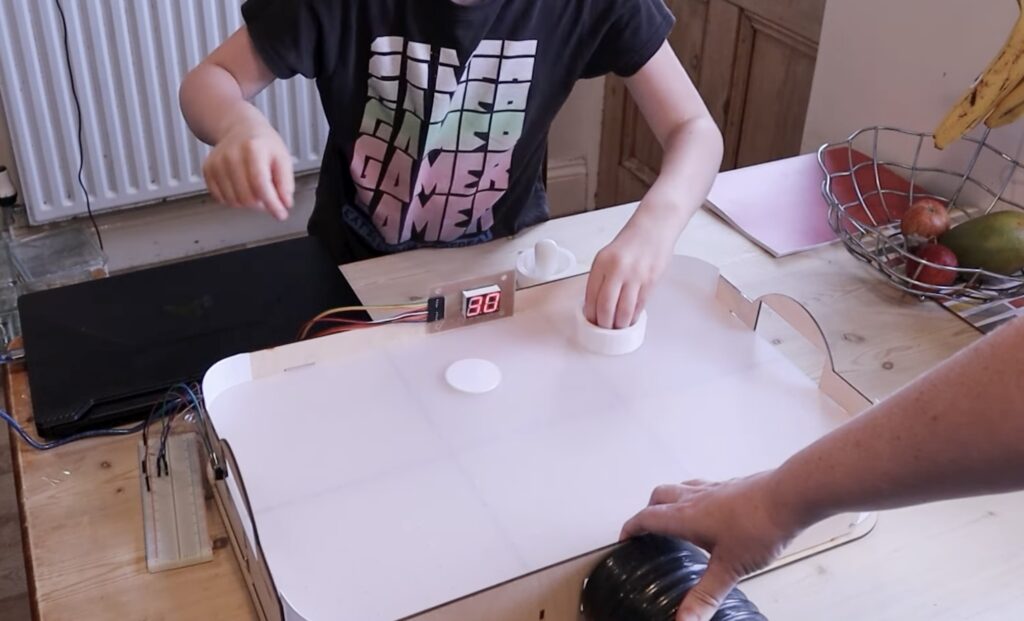

The Love Machine was originally a gum ball dispenser that Kakapo bought online for less than £100. The team replaced its 20p coin-operated mechanism with one they designed in Inkscape and laser-cut themselves. This involved several stacked-up pieces sandwiched together, plus a retractable gear wheel attached to a stepper motor. With lots of fiddly parts to connect up, Will and India realised they could simplify access by removing the vending machine’s base and upending it. They boosted its power using a voltage converter, but tried not to over-egg things, as jams could then damage the mechanical cogs. A stirrer used to push gum balls towards the dispensing slot was not needed; removing this made things work more reliably. They also used brass inserts pushed into the acrylic sheet, instead of nuts, reducing “the number of hands/fingers/things to hold simultaneously and [making] assembly and disassembly quicker and easier.”

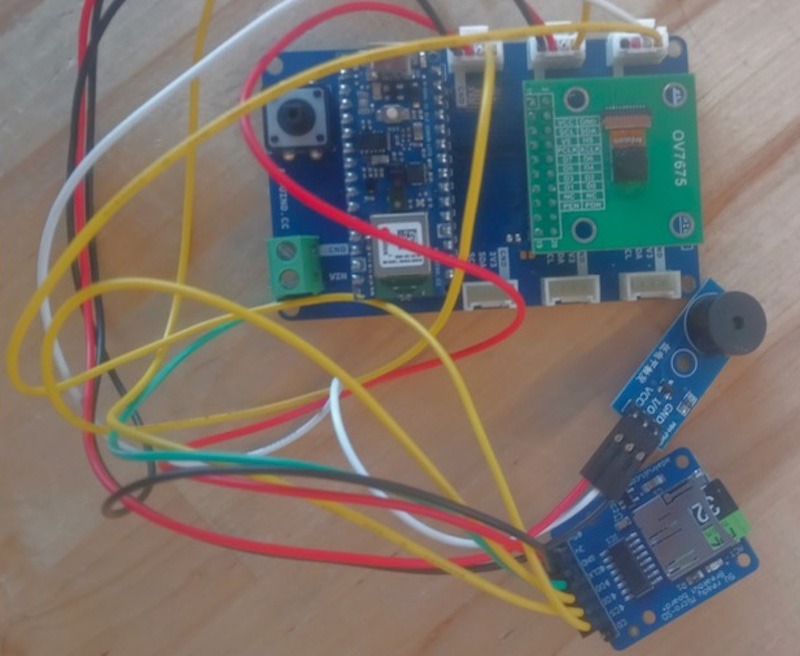

Pimoroni’s ‘phew’ web server provides an access point to the software and allows the team to control access and connect the ChatGPT Love Machine to Wi-Fi via their phones. The Love Machine is controlled over a WebSocket; on the client side, it uses the ‘micropython_async_websocket_client’ library, for which WSS (WebSocket Secure) support was so recent that its code hadn’t yet been merged into the main library. Using AWS API Gateway keeps running costs down as there’s no need to have a server instance constantly handling requests.
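
For readers curious what the Pico W’s side of that WebSocket conversation might look like, here is a minimal sketch. It is not Kakapo Labs’ code: the JSON message shape, the `"action"` field, and the names `handle_message` and `dispense` are all assumptions made for illustration. It uses only the `json` module, which is available in both MicroPython and desktop Python.

```python
import json


def handle_message(raw, dispense):
    """Act on one text frame received over the WebSocket.

    `raw` is assumed to be JSON text such as '{"action": "dispense"}'.
    `dispense` is a hypothetical callable that would drive the stepper
    motor; it is passed in so the logic can be tried without hardware.
    Returns True if a chocolate was dispensed, otherwise False.
    """
    try:
        message = json.loads(raw)
    except ValueError:
        # Not valid JSON: ignore the frame rather than crash the machine.
        return False

    if not isinstance(message, dict):
        return False

    if message.get("action") == "dispense":
        dispense()
        return True

    return False
```

Keeping the motor behind a plain function argument means the same handler can be exercised on a laptop with a stand-in `dispense` before it ever goes near the gear wheel.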

This technology is hidden behind the Love Machine’s greeting board which tells passers-by how to interact. Users send WhatsApp messages to the compliment machine, configured using Twilio, and ChatGPT “provides the conversation and judges whether people are sending compliments,” says Will.
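
The flow Will describes (message in, ChatGPT judges, chocolate out) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the team’s implementation: the prompt wording, the YES/NO reply format, and the names `build_judge_prompt`, `parse_verdict`, `handle_incoming`, `ask_model`, and `send_command` are all hypothetical. The calls to ChatGPT and to the machine are passed in as plain functions, so no real Twilio or OpenAI API is shown here.

```python
def build_judge_prompt(user_text):
    """Build a prompt asking the model to judge and answer a message.

    The wording and the YES/NO convention are assumptions for this sketch.
    """
    return (
        "Decide whether the following message is a compliment. "
        "Reply with YES or NO on the first line, then a short friendly "
        "reply on the next line.\n\nMessage: " + user_text
    )


def parse_verdict(reply):
    """Split the model's reply into (is_compliment, reply_text)."""
    first_line, _, rest = reply.partition("\n")
    is_compliment = first_line.strip().upper().startswith("YES")
    return is_compliment, rest.strip()


def handle_incoming(user_text, ask_model, send_command):
    """Handle one incoming WhatsApp message.

    `ask_model` is a hypothetical callable that sends a prompt to the
    language model and returns its text reply. `send_command` is a
    hypothetical callable that forwards a command to the machine.
    Returns the text to send back to the user.
    """
    reply = ask_model(build_judge_prompt(user_text))
    is_compliment, reply_text = parse_verdict(reply)
    if is_compliment:
        send_command({"action": "dispense"})
    return reply_text
```

Asking the model for its verdict on a line of its own keeps the parsing trivial, at the cost of trusting the model to stick to the format; a real deployment would want a fallback for replies that don’t.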

With a company named after David Attenborough’s favourite species of parrot, it was important to the Kakapo team that the build was fun and fast to complete, making it an ideal project to catch people’s imagination and show them that they could create similar ones.

“We think getting people to have a go with tech when they’re young is really important! Making things can be very rewarding and is the ideal career for some people.” They are also diversity advocates: “opening up the chance to have a go can help people who didn’t realise ‘someone like me can do this’.”