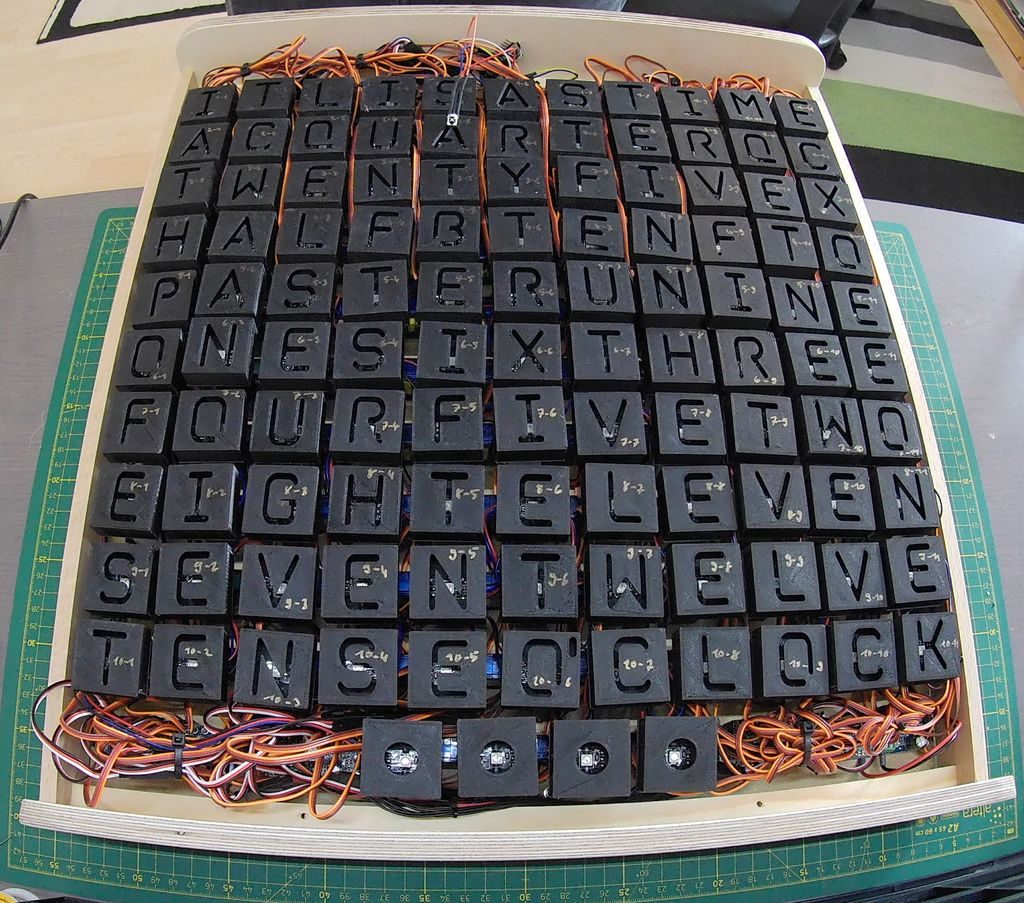

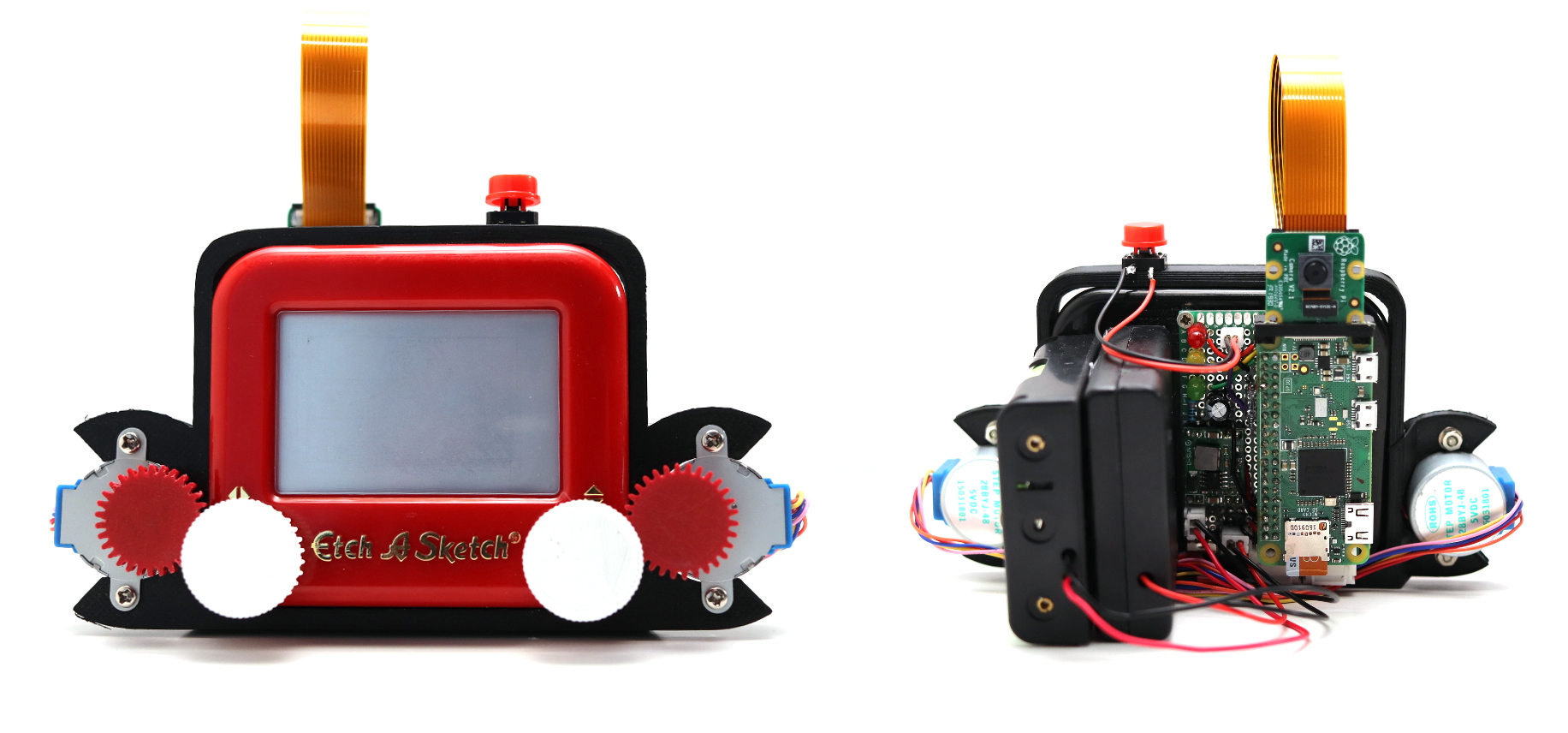

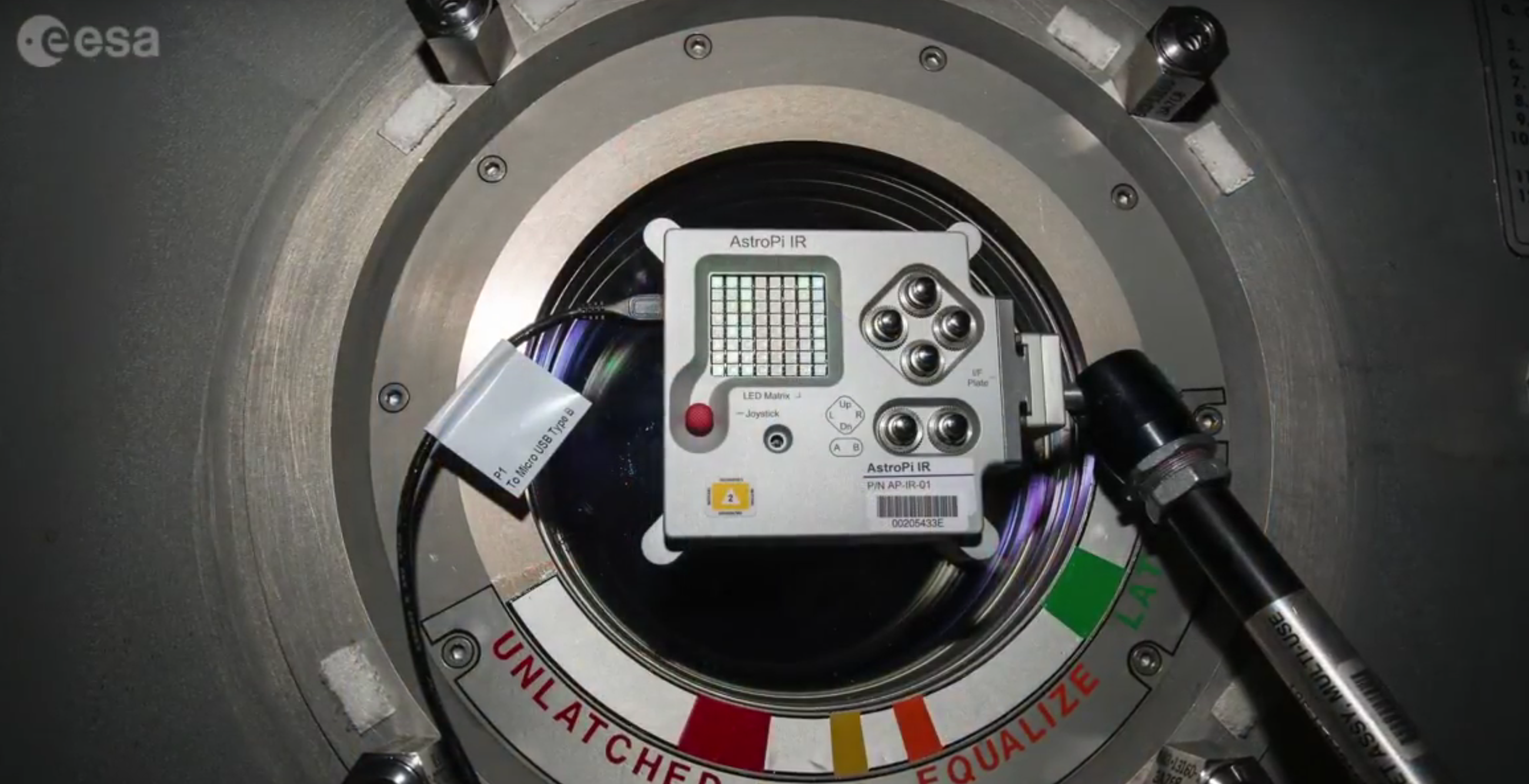

Pi VizuWall is a multi-Raspberry Pi MPI computing system with a difference. And the difference is servo motors!

Pi VizuWall at Maker Faire Miami

We can thank Estefannie for this gem. While attending Maker Faire Miami earlier this month, she shared a video of Pi VizuWall on her Instagram Stories. And it didn’t take long for me to ask for an introduction to the project’s owner, Matt Trask.

I sent Matt a series of questions about the project so I could write a blog post, but Matt’s replies were so wonderfully detailed that it seems foolish to try to reword them.

So here are the contents of Matt’s email replies, in their entirety, for you all to enjoy.

Parallel computing system

The project is a parallel computing system built according to the Beowulf cluster architecture, the same as most of the world’s largest and fastest supercomputers. It runs a system called MPI (Message Passing Interface) that breaks a program up into smaller pieces that can be sent over the network to other nodes for execution.
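To make the idea of splitting work across nodes concrete, here is a minimal sketch in plain Python. It makes no MPI calls: the `rank` and `size` arguments stand in for the values an MPI library would report to each process, and the function names and the sum-of-squares workload are hypothetical choices for illustration only. Each rank works out which slice of the problem it owns, computes a partial result, and the partial results are then combined, which is the role a reduce operation would play on a real cluster.

```python
def block_range(rank, size, n):
    """Return the half-open range [start, stop) of the n items owned by `rank`.

    Items are dealt out in contiguous blocks; when n does not divide evenly,
    the first (n % size) ranks each take one extra item.
    """
    base, extra = divmod(n, size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def partial_sum_of_squares(rank, size, n):
    """The piece of work a single node would do: sum i*i over its own slice."""
    start, stop = block_range(rank, size, n)
    return sum(i * i for i in range(start, stop))


def simulate_cluster(size, n):
    """Run every rank's piece in turn and combine the results.

    On a real cluster the ranks would run concurrently on separate nodes and
    the final sum would be a message-passing reduction, not a local loop.
    """
    return sum(partial_sum_of_squares(rank, size, n) for rank in range(size))
```

Calling `simulate_cluster(12, 1000)` mimics a twelve-node run and gives the same answer as summing the squares on a single machine.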

Beowulf clusters and MPI were invented in 1994 by a pair of NASA contractors, and they totally disrupted the high-performance computer industry by driving the cost of parallel computing way down. By now, twenty-five years later, the Beowulf cluster architecture is found in approximately 88% of the world’s largest parallel computing systems.

Going back to university

I’m currently an undergraduate student at Florida Atlantic University, completing a neglected Bachelor’s Degree from 1983. In the interim, I have had a wonderful career as a Computer Engineer, working with every generation of Personal Computer technology. My main research at the university focuses on a new architecture for parallel clusters that uses traditional Beowulf hardware (enterprise-class servers with InfiniBand as the interconnect fabric) but modifies the Linux operating system to combine the resources (RAM, processor cores) of all the nodes in the cluster, so that they appear as a single system that is the sum of all the resources. This is also known as a ‘virtual mainframe’.

The Ninja Gap

In the world of parallel supercomputers (branded ‘high-performance computing’, or HPC), system manufacturers are motivated to sell their HPC products to industry, but industry has pushed back due to what they call the “Ninja Gap”. MPI programming is hard. It is usually not learned until the programmer is in grad school at the earliest, and given that it takes a couple of years to achieve mastery of any particular discipline, most of the proficient MPI programmers are PhDs. And this is the Ninja Gap: industry understands that the academic system cannot and will not be able to generate enough ‘ninjas’ to meet the needs of industry if industry were to adopt HPC technology.

Studying Message Passing Interface

As part of my research into parallel computing systems, I have studied the process of learning to program with MPI and have found that almost all current practitioners are self-taught, coming from disciplines other than computer science. Actual undergraduate CS programs rarely offer MPI programming. That is my motivation for building a low-cost cluster system with Raspberry Pis: to drive down the entry-level costs.

This parallel computing system, with a cost of under $1000, could be deployed at any college or community college rather than just at elite research institutions, as is done [for parallel computing systems] today.

Moving parts

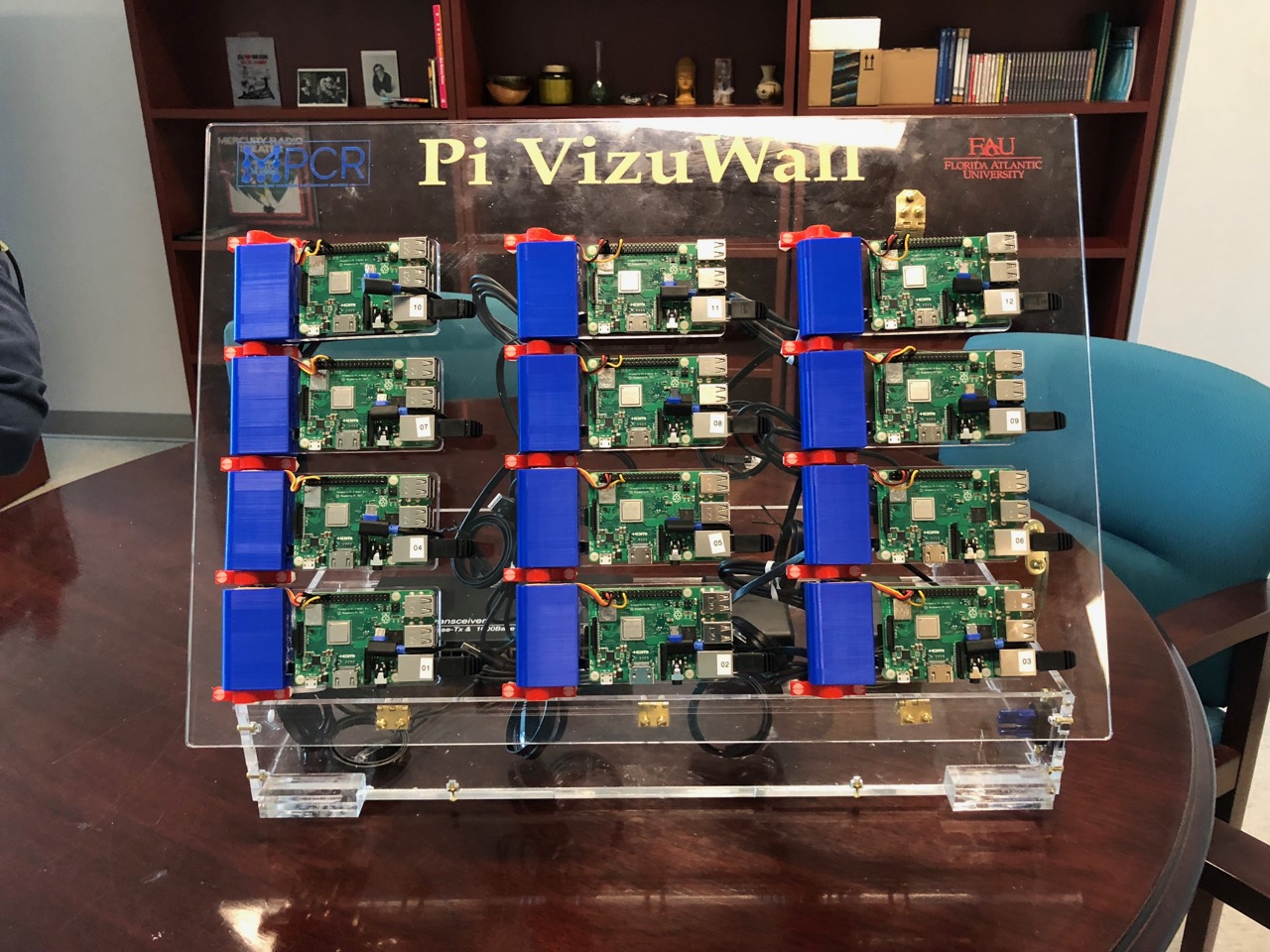

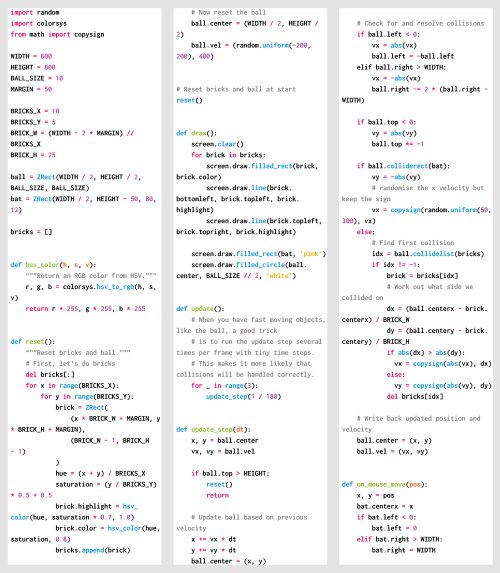

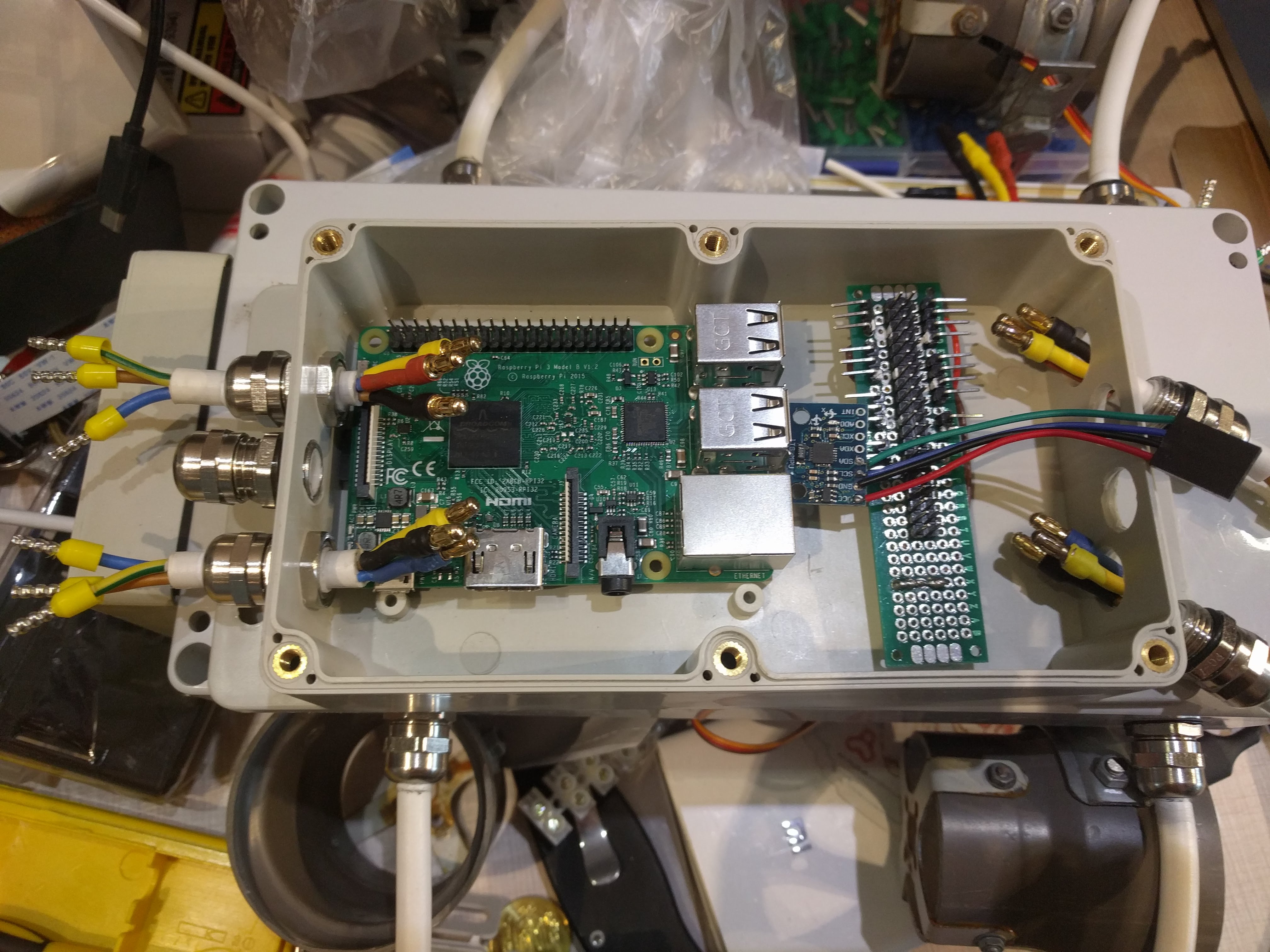

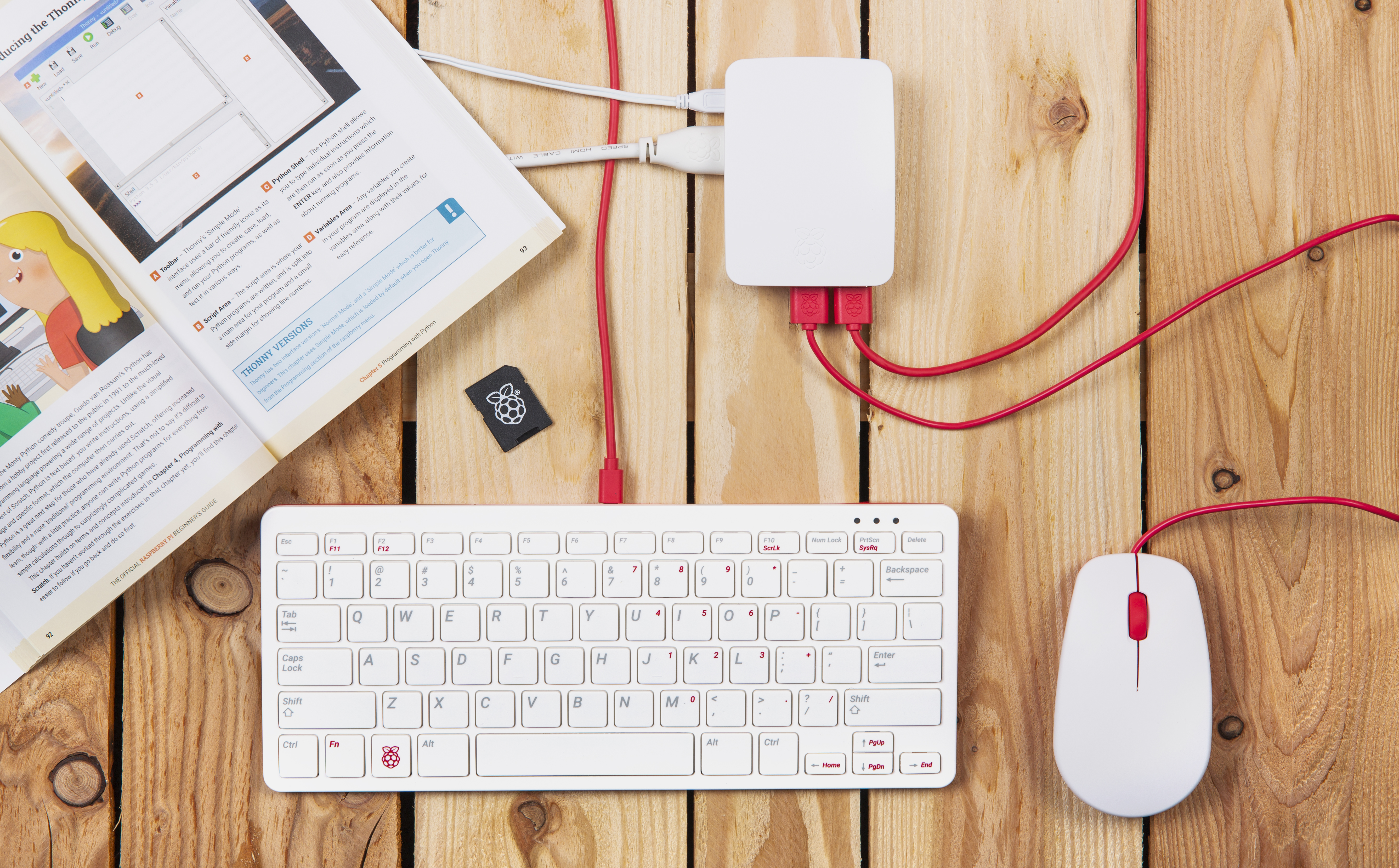

The system is entirely open source, using only standard Raspberry Pi 3B+ boards and Raspbian Linux. The version of MPI that is used is called MPICH, another open-source technology that is readily available.

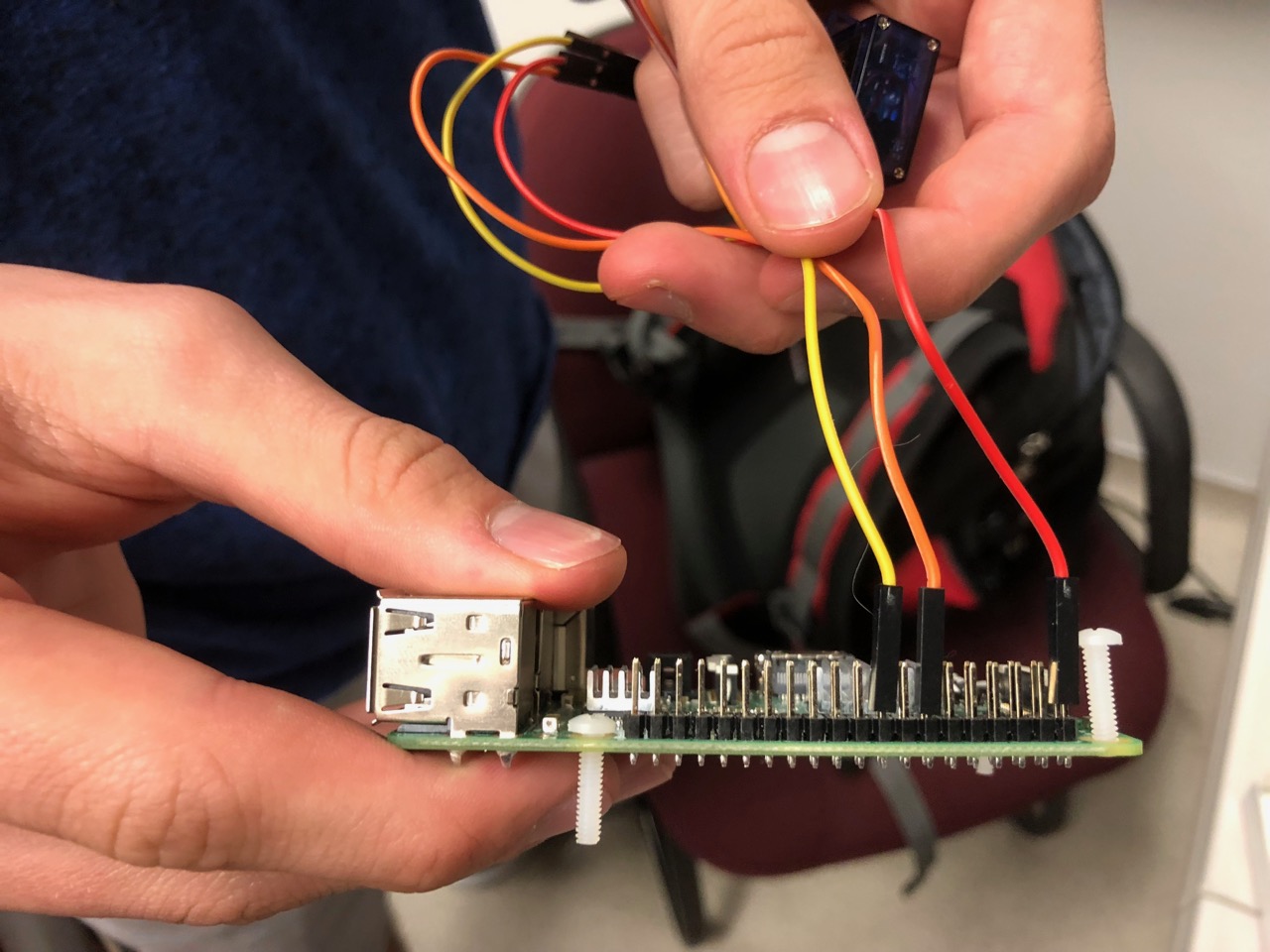

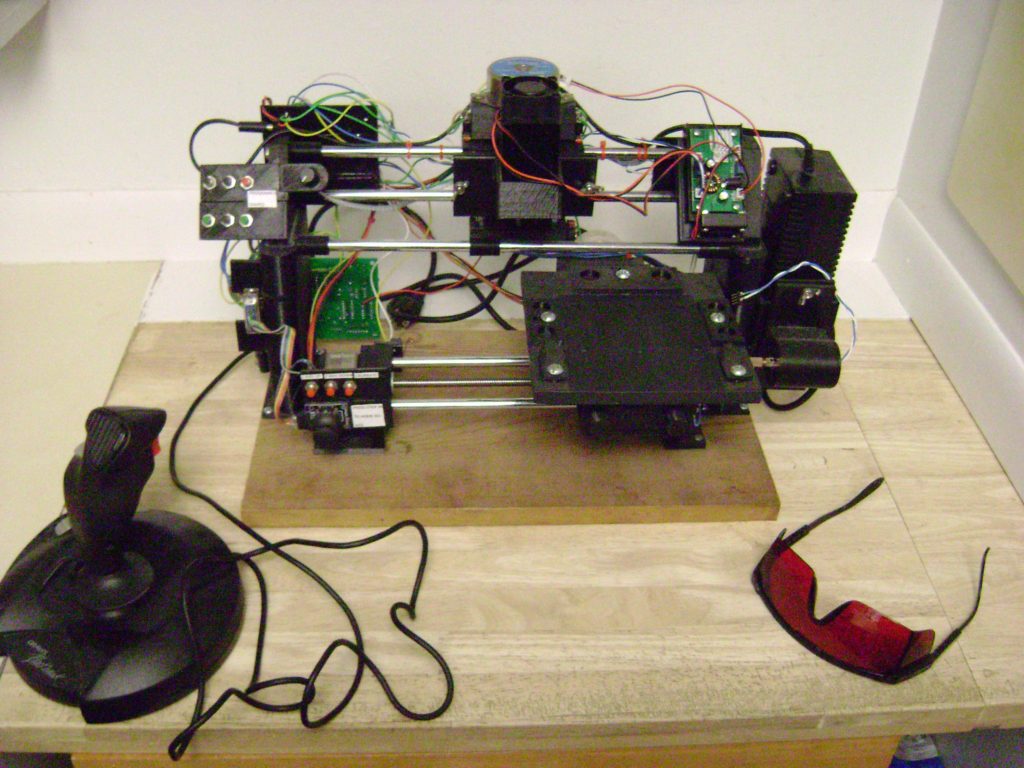

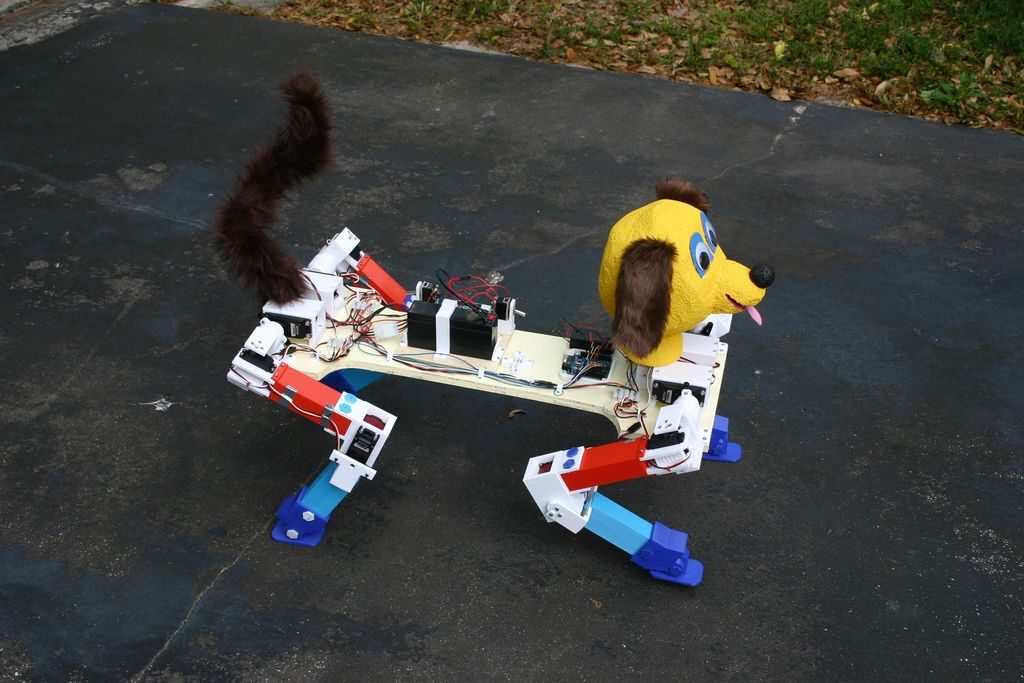

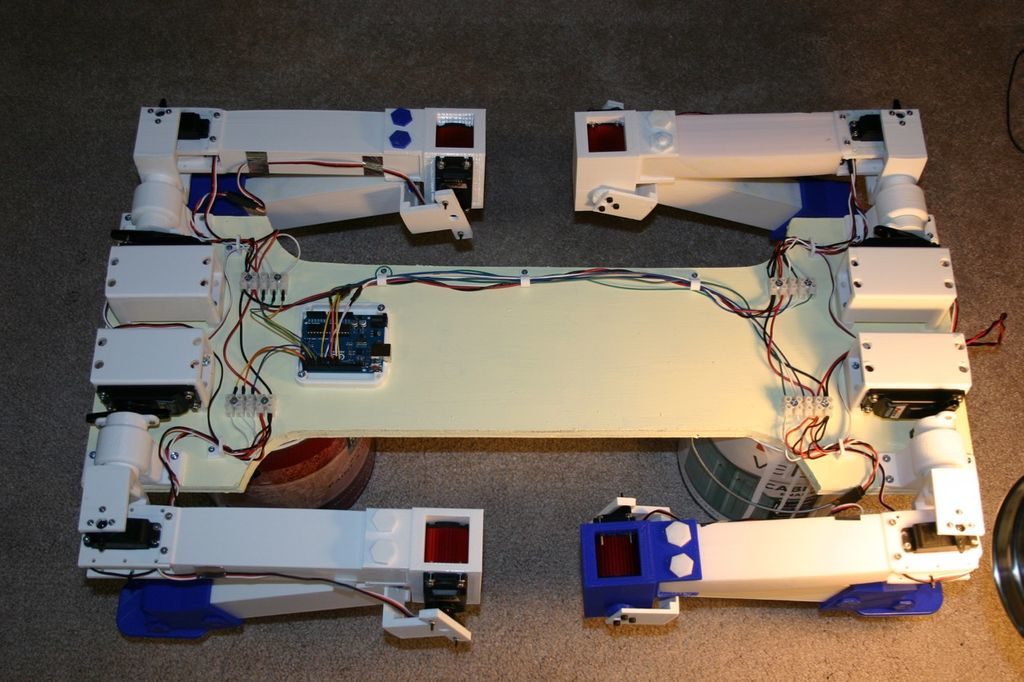

Perhaps one of the more interesting features of the cluster is that each of the Pi boards is mounted on a clear acrylic plate that is attached to a hinging mechanism. Each node is capable of moving through about 90 degrees under software control because a small electric servo motor is embedded in the hinging mechanism. The acrylic parts are laser-cut, and the hinge parts have been 3D printed for this prototype.

Raspbian Linux, like every other Linux version, contains information about CPU utilization as part of the kernel’s internal data. This performance data is available through the /proc filesystem at runtime, allowing a relatively simple program to maintain percent-busy averages over time. This data is used to position the node via its servo, with a fully idle node lying down against the backboard and a fully busy node rotating up to ninety degrees.
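The following Python sketch shows one way such a program could be structured. It is not the project's actual code. It assumes the aggregate `cpu` line of `/proc/stat` as the data source, an exponential moving average as the smoothing method, and a linear mapping from percent busy to an angle between 0 and 90 degrees. All function names and the `alpha` smoothing factor are hypothetical, and driving the servo itself is left out.

```python
def parse_cpu_line(line):
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line of /proc/stat.

    Field order after the label: user nice system idle iowait irq softirq steal
    (guest fields, if present, are already included in user and nice).
    """
    fields = [int(value) for value in line.split()[1:]]
    idle = fields[3] + (fields[4] if len(fields) > 4 else 0)  # idle + iowait
    total = sum(fields[:8])
    return idle, total


def read_cpu_sample(path="/proc/stat"):
    """Read the current cumulative counters (Linux only)."""
    with open(path) as stat_file:
        return parse_cpu_line(stat_file.readline())


def busy_percent(previous, current):
    """Percent of time the CPU was busy between two cumulative samples."""
    idle_delta = current[0] - previous[0]
    total_delta = current[1] - previous[1]
    if total_delta <= 0:
        return 0.0  # no ticks elapsed, so treat the node as idle
    return 100.0 * (1.0 - idle_delta / total_delta)


def smooth(previous_average, sample, alpha=0.3):
    """Exponential moving average; a larger alpha reacts faster to change."""
    return alpha * sample + (1.0 - alpha) * previous_average


def servo_angle(busy, max_angle=90.0):
    """Map 0-100 percent busy onto 0-max_angle degrees, clamping out-of-range input."""
    busy = min(100.0, max(0.0, busy))
    return max_angle * busy / 100.0
```

In use, a loop would call `read_cpu_sample()` at a fixed interval, pass consecutive samples to `busy_percent`, feed the result through `smooth`, and send `servo_angle` of the running average to whatever servo driver the node uses.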

Visualizing node activity

The purpose of this motion-related activity is to permit the user to visualize the operation of the cluster while executing a parallel program, showing the level of activity at each node via proportional motion. Thus the name Pi VizuWall.

Other than the twelve Pi 3s, I used 12 Tower Pro micro servos (SG90 Digital) and assorted laser-cut acrylic and 3D-printed parts (AI and STL files available on request), as well as a 14-port Ethernet switch for interconnects and two 12A 6-port USB power supplies along with Ethernet cable and USB cables for power.

The future of Pi VizuWall

The original plan for this project was to make a 4ft × 8ft cluster with 300 Raspberry Pis wired as a Beowulf cluster running MPICH. When I proposed this project to my Lab Directors at the university, they balked at the estimated cost of $20–25K and suggested a scaled-down prototype first. We have learned a number of lessons while building this prototype that should serve us well when we move on to building the bigger one. The first lesson is to use CNC’d aluminum for the motor housings instead of 3D-printed plastic — we’ve seen some minor distortion of the printed plastic from the heat generated in the servos. But mainly, this will permit us to have finer resolution when creating the splines that engage with the shaft of the servo motor, solving the problem of occasional slippage under load that we have seen with this version.

The other major challenge was power distribution. We look forward to using the Pi’s PoE capabilities in the next version to simplify power distribution. We also anticipate evaluating whether the Pi’s wireless LAN capability is suitable for carrying the MPI message traffic, given that the wired Ethernet has greater bandwidth. If the wireless bandwidth is sufficient, we will potentially use Pi Zero W computers instead of Pi 3s, doubling the number of nodes we can install on a 4ft × 8ft backboard.
