Inspired by this, maker Jeff Stockman looked to automate the process as part of his Internet of Things course at the University of Washington Tacoma. “I’d made a bird feeder a few years ago and used a first-generation Raspberry Pi computer and a basic webcam connected to my local network,” he says. “But I wanted to incorporate both edge and cloud technologies to improve the feeder’s capabilities.”

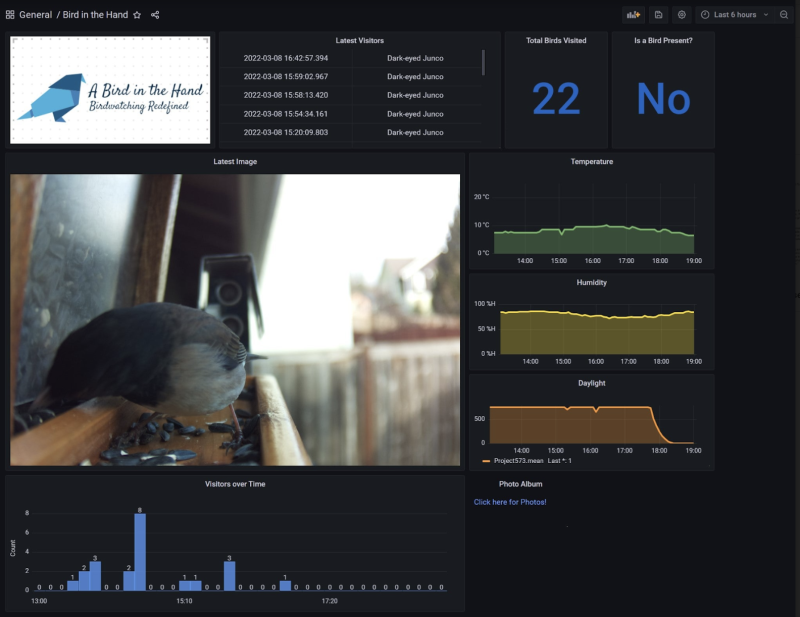

The result has been a smart bird feeder that uses motion sensing and image recognition to monitor birds dropping by for something to eat. “It uses an ultrasonic ranger to detect the presence of a bird,” Jeff explains. “This detects the distance of the bird from the feeder and, when it decreases below 14cm, it triggers the camera to snap a photo. Once the bird is more than 14cm away, the camera is ready to take a photo again.”
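The trigger Jeff describes fires once when a bird comes within range, then re-arms once the bird has moved away. Below is a minimal Python sketch of that logic. Jeff built his version in Node-RED, so the class and function names here are hypothetical, and the distance readings are passed in as plain numbers rather than read from the GrovePi+ sensor.

```python
from dataclasses import dataclass

THRESHOLD_CM = 14.0  # trigger distance quoted in the article


@dataclass
class BirdTrigger:
    """Hypothetical helper: fires once per visit, re-arms when the bird leaves."""

    threshold_cm: float = THRESHOLD_CM
    armed: bool = True

    def update(self, distance_cm: float) -> bool:
        """Return True if this reading should trigger a photo."""
        if distance_cm < self.threshold_cm:
            if self.armed:
                # Bird has just come into range: take one photo and disarm.
                self.armed = False
                return True
            # Bird is still in range: do not take another photo.
            return False
        if distance_cm > self.threshold_cm:
            # Bird has moved away: ready to take a photo again.
            self.armed = True
        return False


def triggers_for(readings):
    """Run a sequence of distance readings (in cm) through a fresh trigger."""
    trigger = BirdTrigger()
    return [trigger.update(r) for r in readings]
```

Fed the readings 30, 20, 12, 10, 13, 18, 25 and 9, this sketch would take a photo at 12 and again at 9, ignoring the readings in between while the first bird stays put.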

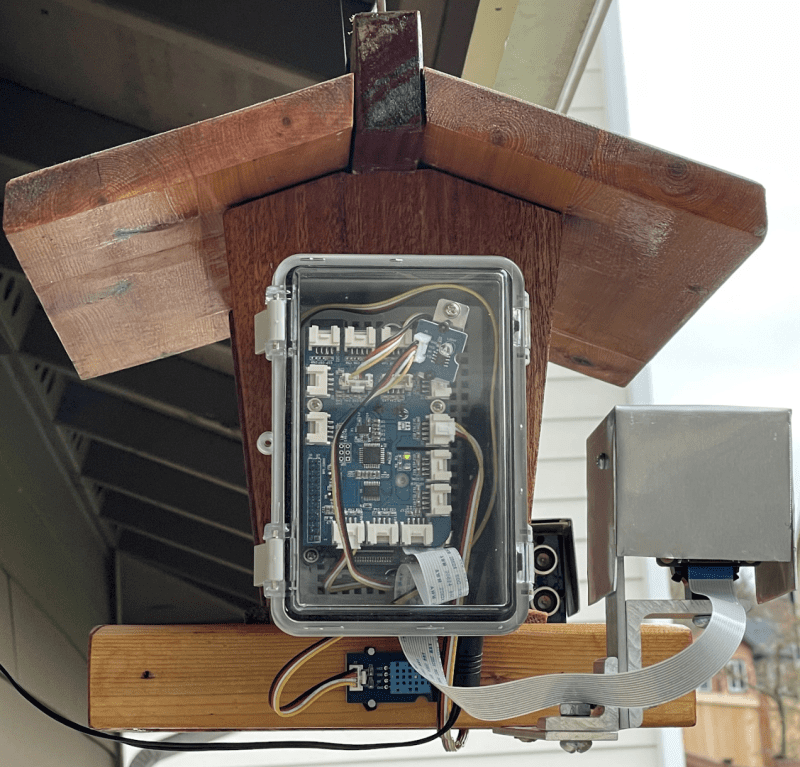

To achieve this, Jeff used a Raspberry Pi 3B computer connected to a Raspberry Pi HQ camera module. “Using Raspberry Pi was a main requirement for the course,” Jeff explains. He also used the flow-based development tool Node-RED to simplify the coding, along with the GrovePi+ HAT, which allows a variety of sensors to be connected easily. Jeff added temperature, humidity and light sensors too.

Training the model

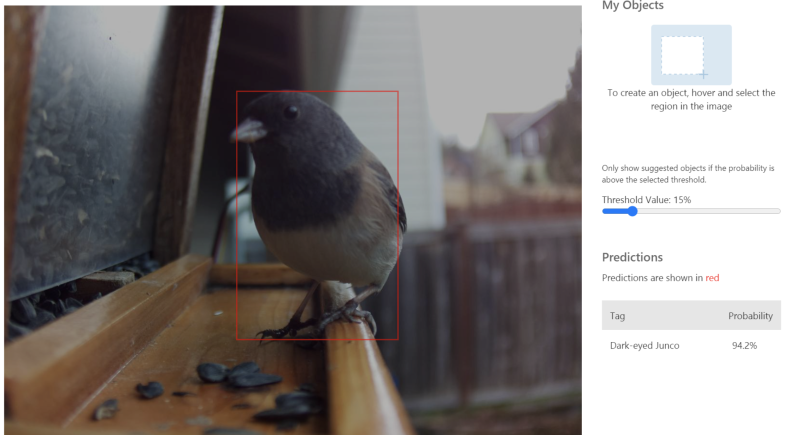

Once a photo is taken, the image is analysed using the free tier of Microsoft’s Azure Custom Vision machine learning service. “I wanted image recognition to understand which bird species were present throughout the year and to identify migratory patterns, as well as year-on-year differences in bird populations,” Jeff tells us.

This required Jeff to train the model. “I pulled 20 to 25 images from Google for each of the popular bird species that frequent my feeder. I then uploaded them to Azure and tagged them with the correct species, testing the model from the convenience of my desk by printing out different pictures of birds.” Jeff then asked Azure to return the species name if the probability exceeded 50 percent. “This would increment the count in a database by one,” he adds.
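The thresholding and counting step could be sketched in Python as follows. This is an illustration under stated assumptions, not Jeff's actual flow: the article does not name the database, so an in-memory SQLite table stands in for it, the `sightings` table and both function names are hypothetical, and the predictions are assumed to arrive as a list of dictionaries with `tagName` and `probability` fields.

```python
import sqlite3

MIN_PROBABILITY = 0.5  # the 50 percent threshold quoted in the article


def best_species(predictions, min_probability=MIN_PROBABILITY):
    """Return the most likely species name, or None if it is not confident enough.

    `predictions` is assumed to be a list of dicts such as
    {"tagName": "house finch", "probability": 0.82}.
    """
    if not predictions:
        return None
    top = max(predictions, key=lambda p: p["probability"])
    # Only accept the tag if its probability exceeds the threshold.
    return top["tagName"] if top["probability"] > min_probability else None


def record_sighting(conn, species):
    """Increment the count for `species` by one in a hypothetical sightings table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sightings ("
        "species TEXT PRIMARY KEY, count INTEGER NOT NULL)"
    )
    # Create the row on first sight, then add one to the running total.
    conn.execute(
        "INSERT OR IGNORE INTO sightings (species, count) VALUES (?, 0)",
        (species,),
    )
    conn.execute(
        "UPDATE sightings SET count = count + 1 WHERE species = ?",
        (species,),
    )
    conn.commit()
```

In use, a photo's predictions would go through `best_species` first, and `record_sighting` would be called only when a name comes back, so low-confidence photos leave the counts untouched.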

Caught on camera

The model continued to be trained once the smart bird feeder was installed outside. “I could verify the photos and species tags on the Azure website. If incorrect, I’d retag the images and those would then get stored in Azure. Once I had enough real-world images, I retrained the model with new images and additional species that appeared. The model accurately identified 60, then 70, then 80 percent of birds over three iterations of the model.”

There were some difficulties. Jeff would like a live feed but says the image capture doesn’t trigger when this feature is active. “The GrovePi+ ultrasonic sensor was also sporadic in its measurements – the measurements ranged from 15 to 27 cm,” Jeff adds. But the project has proven effective. The data and images are shared with the interactive visualisation web app, Grafana, allowing Jeff to see data and photos in real time. He’s also been able to track birds’ eating habits with some surprising results. “It identified quickly that there were no early birds,” he says. “None appeared before 10am!”
