Category: PC

-

Adapting primary Computing resources for cultural responsiveness: Bringing in learners’ identity

Reading Time: 6 minutes

In recent years, the emphasis on creating culturally responsive educational practices has gained significant traction in schools worldwide. This approach aims to tailor teaching and learning experiences to better reflect and respect the diverse cultural backgrounds of students, thereby enhancing their engagement and success in school. In one of our recent research…

-

Raspberry Pi PLC 38R review

Reading Time: 3 minutes

While the company also produces PLCs based on Arduino and ESP32 microcontrollers, the model reviewed here is one of the Raspberry Pi-based range and therefore benefits from superior processing power – an advantage when handling multiple real-time processes – and the ability to run a full Linux operating system, the familiar Raspberry…

-

Experience AI at UNESCO’s Digital Learning Week

Reading Time: 5 minutes

Last week, we were honoured to attend UNESCO’s Digital Learning Week conference to present our free Experience AI resources and how they can help teachers demystify AI for their learners. The conference drew a worldwide audience in person and online to hear about the work educators and policy makers are doing to support…

-

Meet Andrew Gregory: a new face in The MagPi

Reading Time: 3 minutes

What is your history with making? A lot of people who get into making reckon that they used to take things apart and put them back together when they were kids. Whenever I tried doing that I got told off. Instead, whenever anything broke, it was my job to take it apart…

-

ReComputer R1000 industrial grade edge IoT controller review

Reading Time: 2 minutes

Out of the box it looks a bit like an unassuming full Raspberry Pi in a nice heat-sink case, albeit a fair bit chunkier. The size comes from the sheer number of features packed into the box – UPS modules, power-over-Ethernet, multiple RJ45 ports, 4G modules, LoRa capabilities, external antenna ports, SSD…

-

Experience AI expands to reach over 2 million students

Reading Time: 4 minutes

Two years ago, we announced Experience AI, a collaboration between the Raspberry Pi Foundation and Google DeepMind to inspire the next generation of AI leaders. Today I am excited to announce that we are expanding the programme with the aim of reaching more than 2 million students over the next 3 years,…

-

Thumby Color mini gaming device review

Reading Time: 3 minutes

The faster dual-core RP2350 processor running at 150MHz enables Thumby Color to run a 0.85-inch 128×128px 16-bit backlit colour TFT LCD display inside an absolutely minuscule case measuring 51.6 × 30 × 11.6mm. The case has a hole through it enabling Thumby Color to double up as a keychain fob; enabling you…

-

Join the UK Bebras Challenge 2024

Reading Time: 4 minutes

The UK Bebras Challenge, the nation’s largest computing competition, is back and open for entries from schools. This year’s challenge will be open for entries from 4–15 November. Last year, over 400,000 students from across the UK took part. Read on to learn how your school can get involved. What is UK…

-

Welcoming HackSpace

Reading Time: 2 minutes

From our perspective, this gives us a bigger and better magazine. It also opens up a new aspect of making that we haven’t traditionally given as much thought to as HackSpace. While The MagPi magazine tends to focus heavily on Raspberry Pi products – it is “the Official Raspberry Pi magazine” after…

-

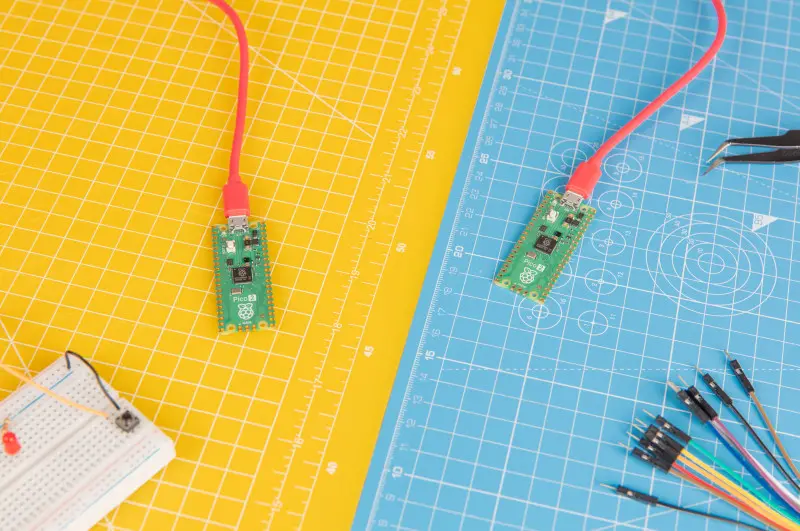

Pico 2 and RP2350 in The MagPi magazine #145

Reading Time: 3 minutes

Pico 2 & RP2350: It has faster processors, more memory, greater power efficiency, and industry-leading security features, and you can choose between Arm and RISC-V cores. The new Pico 2 is an incredible microcontroller board and we’ve secured interviews with the Raspberry Pi engineering team. RP2350 products out now: Plenty of companies…

-

Bridging the gap from Scratch to Python: Introducing ‘Paint with Python’

Reading Time: 3 minutes

We have developed an innovative activity to support young people as they transition from visual programming languages like Scratch to text-based programming languages like Python. This activity introduces a unique interface that empowers learners to easily interact with Python while they create a customised painting app. “The kids liked the self-paced learning,…

-

Win! One of 20 Raspberry Pi Pico 2

Reading Time: < 1 minute

Save 35% off the cover price with a subscription to The MagPi magazine. UK subscribers get three issues for just £10 and a FREE Raspberry Pi Pico W, then pay £30 every six issues. You’ll save money and get a regular supply of in-depth reviews, features, guides and other Raspberry Pi…

-

10 amazing big builds

Reading Time: 3 minutes

Raftberry (floating dock): It can be nice to pootle around a lake, especially with some delicious food and company. This Raspberry Pi-powered raft uses arcade controls to move around on the water. Teslonda (custom electric car): Taking a 1981 Honda Accord and souping it up is one thing, then there’s making it…

-

Get ready for Moonhack 2024: Projects on climate change

Reading Time: 3 minutes

Moonhack is a free, international coding challenge for young people run online every year by Code Club Australia, powered by our partner the Telstra Foundation. The yearly challenge is open to young people worldwide, and in 2023, over 44,500 young people registered to take part. Moonhack 2024 runs from 14 to 31…

-

LR1302 LoRaWAN HAT + Gateway Module review

Reading Time: 2 minutes

Construction is simple – just slot the Gateway Module into the HAT’s mini PCIe connector, and slot the HAT on top of your Raspberry Pi. There are external antennas to add as well, including a fancy GPS module in case you need to know its location. From source: The software is a…

-

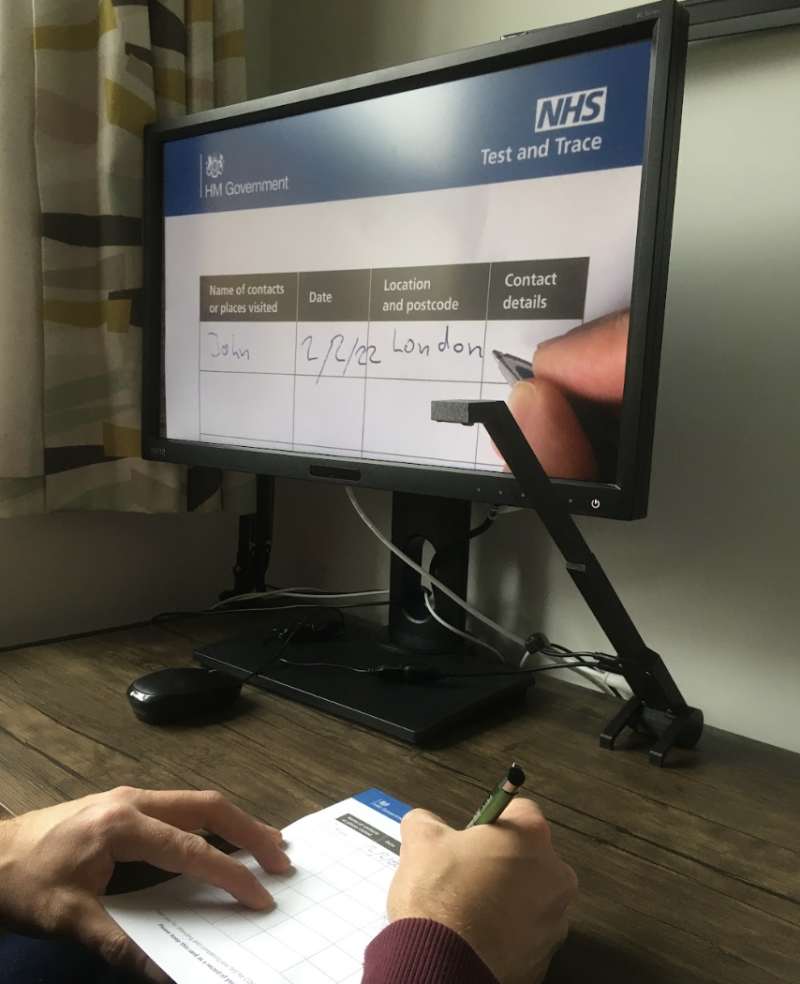

Video magnifier

Reading Time: 3 minutes

“[It’s a] technical version of a magnifying glass to help people with low vision,” Markus tells us. “It’s basically a camera that can be connected to any HDMI screen, with a simple interface to scale and modify images. There are lots of professional devices out there, and a few DIY takes on…

-

Laptop dock CrowView Note now on Kickstarter

Reading Time: < 1 minute

While we’ve not had enough time to assess it for review yet, we’ve had a chance to play with one for a bit with a Raspberry Pi 5 and we’re very intrigued by it. CrowView has speakers, a webcam, a microphone, and an in-built battery so all you need to do…

-

John Sheehan interview

Reading Time: 3 minutes

What is your history with being a maker? As a kid, I was always taking things apart to see how they worked. Most of those things even got put back together. Taking after my older brother, I started tinkering with electronics when I was a teen. Continuing to follow in my brother’s…

-

CSTA 2024: What happened in Las Vegas

Reading Time: 4 minutes

About three weeks ago, a small team from the Raspberry Pi Foundation braved high temperatures and expensive coffees (and a scarcity of tea) to spend time with educators at the CSTA Annual Conference in Las Vegas. With thousands of attendees from across the US and beyond participating in engaging workshops, thought-provoking talks,…

-

TouchBerry Pi Panel PC 10.1 review

Reading Time: 3 minutes

The touchscreen is surrounded by quite a large bezel which forms part of the protective case. With a lot of metal parts, it’s a pretty hefty unit that feels really solid – and heavy, at 1.67kg. Six mount points (two top and bottom, one either side) enable it to be mounted using…

-

Why we’re taking a problem-first approach to the development of AI systems

Reading Time: 7 minutes

If you are into tech, keeping up with the latest updates can be tough, particularly when it comes to artificial intelligence (AI) and generative AI (GenAI). I admit to sometimes feeling this way myself. However, there was one update recently that really caught my attention. OpenAI launched their latest iteration of ChatGPT,…