Category: PC

-

Highlights from Coolest Projects South Africa 2024

Reading Time: 4 minutes

Afandi Indiatsi, our Programme Coordinator in Africa, recently attended Coolest Projects South Africa 2024. Read on to hear her highlights. What happens when creativity, enthusiasm, fun, and innovation come together? You get Coolest Projects South Africa 2024: a vibrant showcase of students from all walks of life displaying their talent and…

-

Ready to remix? Favourite projects to tinker with

Reading Time: 4 minutes

From crafting interactive stories to designing captivating games, the Raspberry Pi Foundation’s coding projects offer a hands-on approach to learning, igniting creativity and developing the skills young people need, like perseverance and problem-solving. In this blog, I explore two of my favourite projects that young coders will love. Our projects are free…

-

Computing Curriculum Framework: Adapting to India’s diverse landscapes

Reading Time: 5 minutes

The digital revolution has reshaped every facet of our lives, underscoring the need for robust computing education. At the Raspberry Pi Foundation, our mission is to enable young people to realise their full potential through the power of computing and digital technologies. Since starting out in 2008 as a UK-based educational charity,…

-

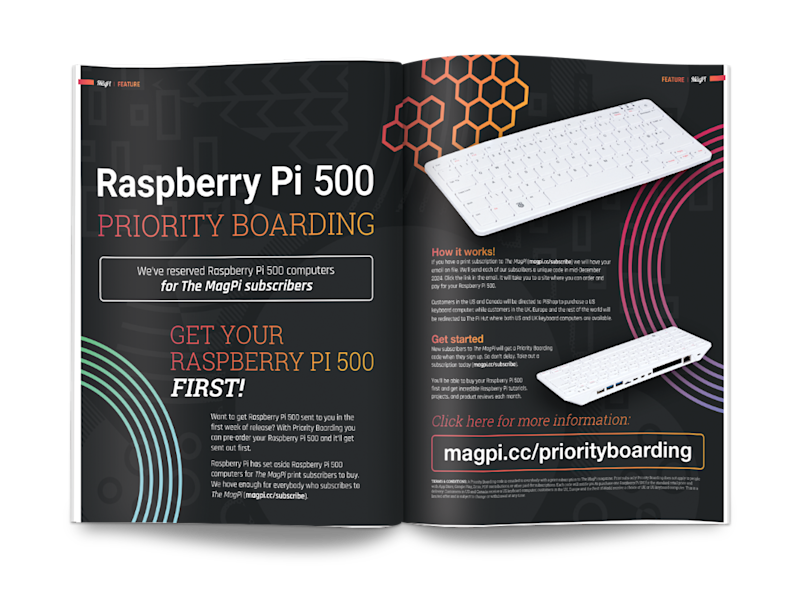

Raspberry Pi 500 and Monitor in The MagPi 149

Reading Time: 3 minutes

The latest edition of The MagPi covers all the new products in depth, with detailed specifications, documentation, and interviews with the CM5 engineer. We’ve also got information on the new Raspberry Pi Pico 2 W, Raspberry Pi Hub, and Raspberry Pi Connect service. There are a lot of new products this month and…

-

Win! One of five Raspberry Pi Monitors

Reading Time: < 1 minute

We’ve been looking forward to the new Raspberry Pi Monitor for ages now – the inexpensive and lightweight display is perfect for so many uses, whether you’re in a classroom, at your desk, or on the go. We have five to give away, and you can enter the competition below…

-

Five reasons to join the Astro Pi Challenge, backed by our impact report

Reading Time: 4 minutes

We are excited to share our report on the impact of the 2023/24 Astro Pi Challenge. Earlier this year we conducted surveys and focus groups with mentors who took part in the Astro Pi Challenge, to understand the value and impact the challenge offers to young people and mentors. You can read…

-

Wax: digital music manager

Reading Time: 4 minutes

Wax differs from most existing music managers in three ways. First, instead of individual tracks, music is catalogued as ‘works’ – such as an album, a symphony, or an opera. Secondly, works are categorised by genre, but Wax also allows you to tag works in a way that is relevant to the genre…

-

How can we teach students about AI and data science? Join our 2025 seminar series to learn more about the topic

Reading Time: 4 minutes

AI, machine learning (ML), and data science infuse our daily lives, from the recommendation functionality on music apps to technologies that influence our healthcare, transport, education, defence, and more. What jobs will be affected by AI, ML, and data science remains to be seen, but it is increasingly clear that students will…

-

Bumpin’ Sticker

Reading Time: 5 minutes

“I love the idea of using bumper stickers as a form of self-expression, but I got to thinking about how ‘permanent’ they are, and how my own style, mood and taste tends to change relatively quickly,” he says. “I wanted to see how I could resolve those things – could I make…

-

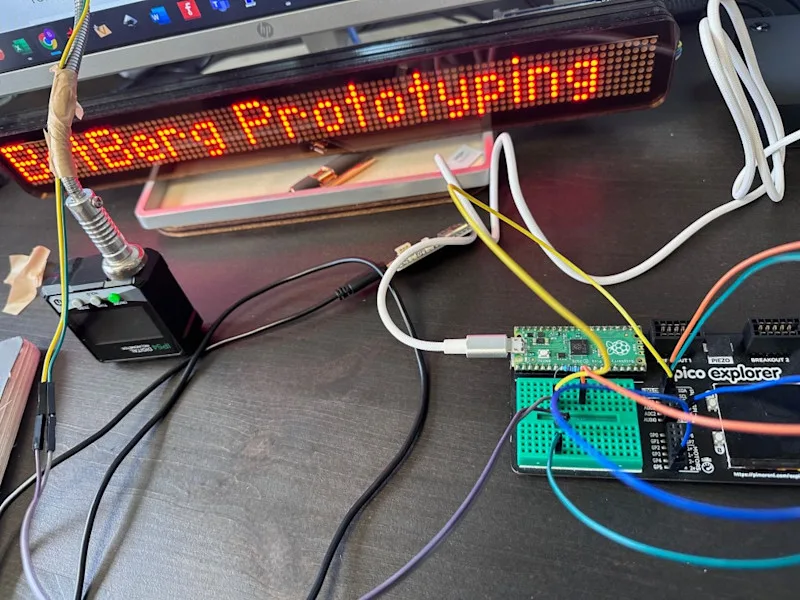

T-Rex Game Auto Jumper

Reading Time: 2 minutes

“Using a Raspberry Pi Pico, a light dependent resistor (LDR), a breadboard, some DuPont cables, and tape, I automated the famous Google T-Rex game,” Bas explains. “The LDR detects differences in analogue measurements whenever it senses cacti, which are always dark-coloured and appear on the same plane. The analogue-digital converter [ADC] port…

-

Addressing the digital skills gap

Reading Time: 3 minutes

The digital skills gap is one of the biggest challenges for today’s workforce. It’s a growing concern for educators, employers, and anyone passionate about helping young people succeed. Digital literacy is essential in today’s world, whether or not you’re aiming for a tech career, yet too many young people are entering…

-

Does AI-assisted coding boost novice programmers’ skills or is it just a shortcut?

Reading Time: 6 minutes

Artificial intelligence (AI) is transforming industries, and education is no exception. AI-driven development environments (AIDEs), like GitHub Copilot, are opening up new possibilities, and educators and researchers are keen to understand how these tools impact students learning to code. In our 50th research seminar, Nicholas Gardella, a PhD candidate at the University…

-

Argon Poly+ 5 Raspberry Pi case review

Reading Time: 2 minutes

The Poly+ 5 is a Raspberry Pi 5 case in two flavours and colours. The case itself is moulded plastic with none of the aluminium work we’ve come to expect. The slightly transparent slidable top cover is available in red or black with a black base in both cases. The standard model…

-

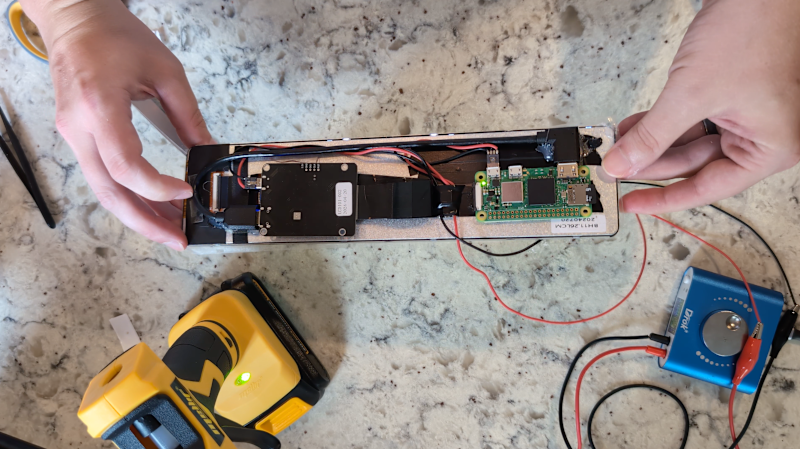

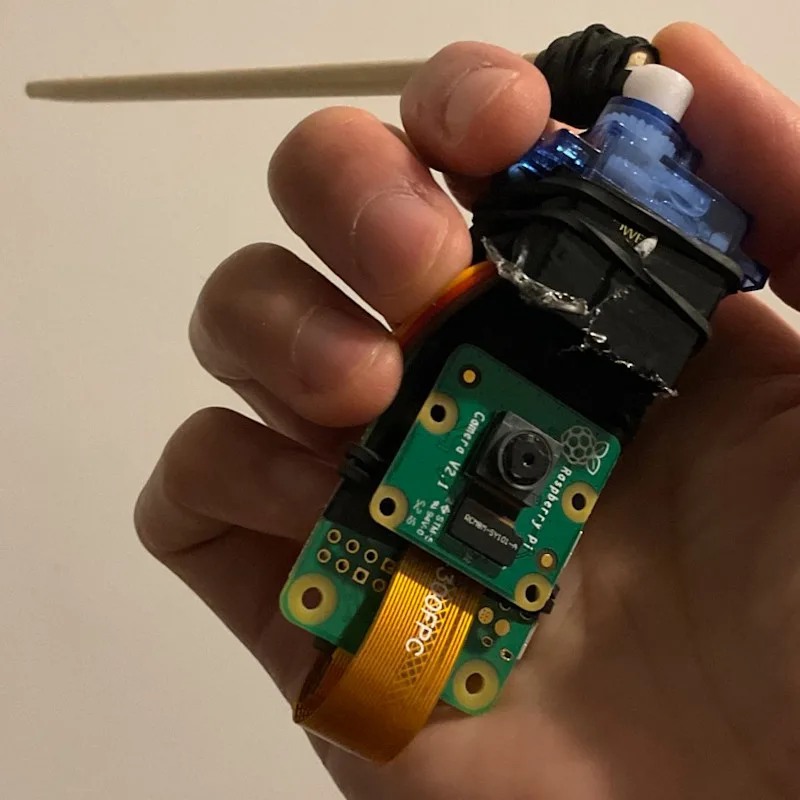

CatBot animal feeder

Reading Time: 2 minutes

“I used Raspberry Pi because I was recently working with Raspberry Pi and cameras for another project, a digital sensor for a film camera,” says Michael. “Although there are definitely simpler solutions with cheaper microcontrollers, I find it valuable to start with techniques I know rather than going down rabbit holes of…

-

Ocean Prompting Process: How to get the results you want from an LLM

Reading Time: 5 minutes

Have you heard of ChatGPT, Gemini, or Claude, but haven’t tried any of them yourself? Navigating the world of large language models (LLMs) might feel a bit daunting. However, with the right approach, these tools can really enhance your teaching and make classroom admin and planning easier and quicker. That’s where the…

-

Putting AI to use

Reading Time: < 1 minute

Lucy Hattersley has all the AI kit and an urge to build something real.

-

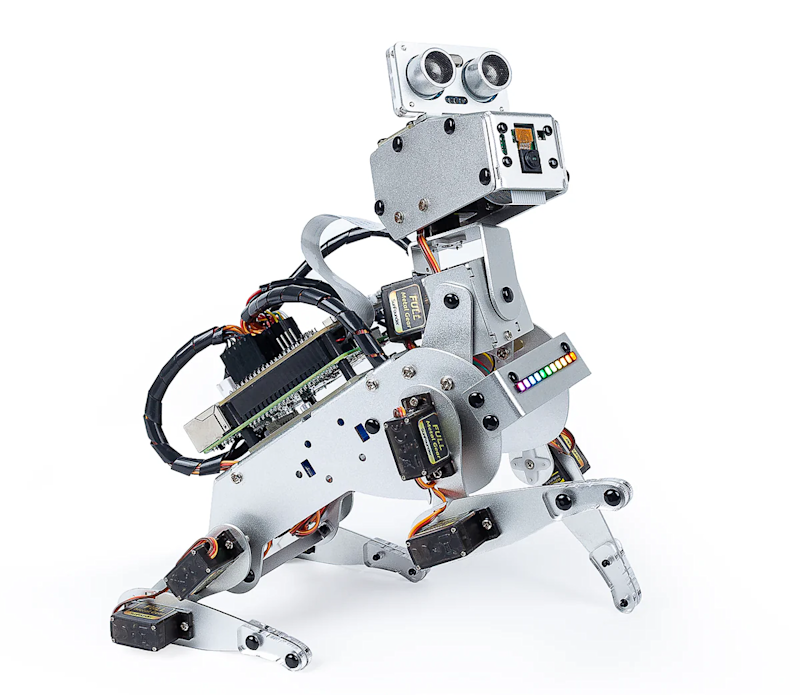

PiDog robot review

Reading Time: 3 minutes

The first thing to decide is which Raspberry Pi model to use before assembling the kit. PiDog will work with Raspberry Pi 4, 3B+, 3B, and Zero 2 W. Using a Raspberry Pi 5 is not recommended since its extra power requirements put too much of a strain on the battery power…