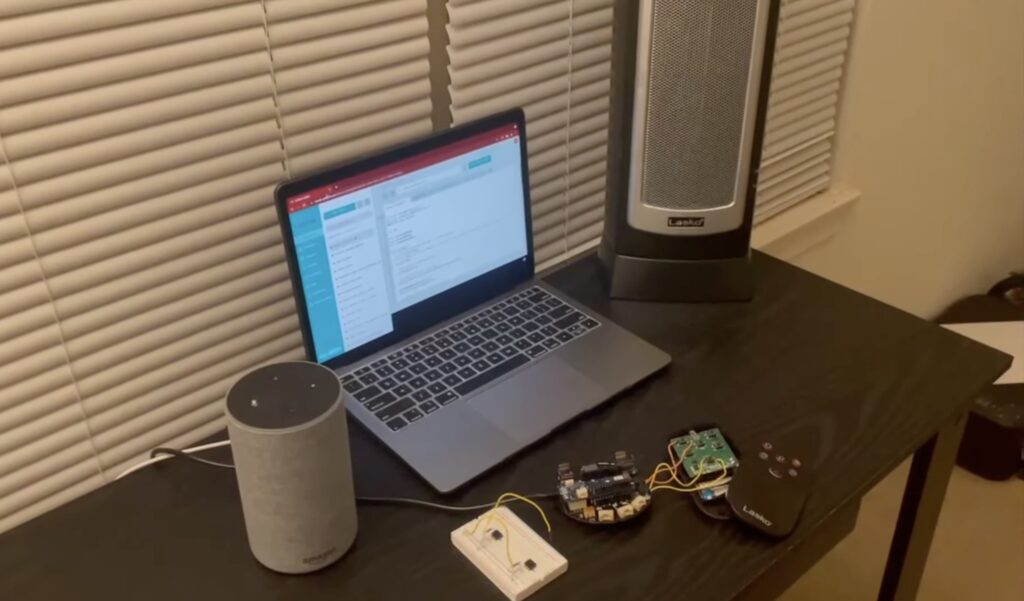

A month ago, ElectronicLab modified his office chair with an electric car jack, giving it motorized height adjustment. That worked well, but required that he push buttons to raise or lower the seat. Pushing those buttons is a hassle when one’s hands are full, so ElectronicLab went back to the workbench to add voice control capabilities.

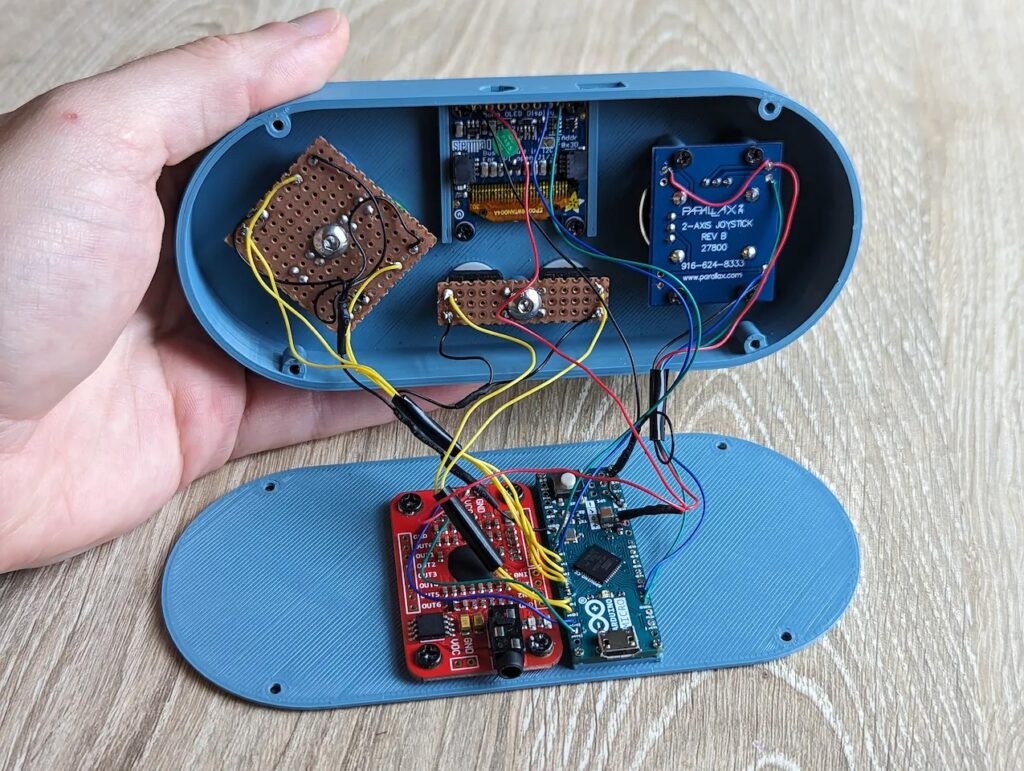

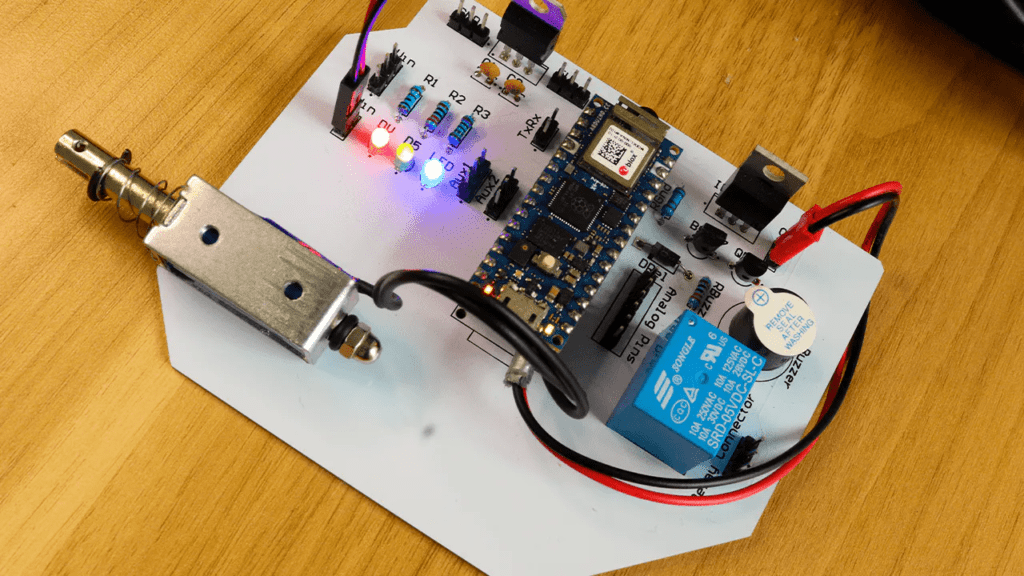

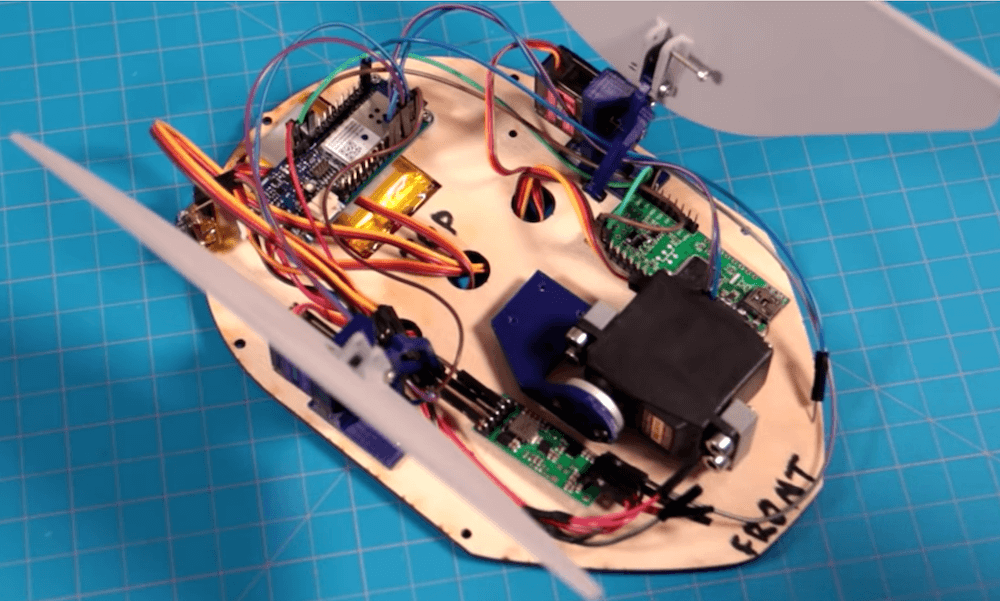

ElectronicLab was using an Arduino Nano to control the electric jack motor in response to button presses, so he already had most of the hardware necessary to make the system smarter. He just needed the Arduino to recognize specific voice commands, which he was able to achieve using an ELECHOUSE Voice Recognition Module V3.

That voice recognition module supports up to 80 voice commands, but ElectronicLab only needed a few of them: just enough to tell the chair which direction to move and how far to go. The module came with a microphone, which ElectronicLab was able to attach outside of the 3D-printed enclosure where it could pick up his voice.
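To give a feel for the "which direction and how far" logic, here is a minimal sketch in plain C++ of how a recognized command might be turned into a motor action. It is not ElectronicLab's actual code: the command names, the record indices, and the run times are all hypothetical, and on the Arduino the record index would come from the voice recognition module rather than being passed in by hand.

```cpp
#include <cstdint>

// Hypothetical record indices: the slots each phrase was trained into on the module.
enum class Command : std::uint8_t {
    Up = 0,
    Down = 1,
    UpALittle = 2,
    DownALittle = 3,
    Stop = 4
};

enum class Direction { None, Raise, Lower };

// What the motor driver should do in response to one command.
struct Move {
    Direction direction;
    std::uint32_t durationMs;  // how long to run the motor
};

// Assumed run times; real values would be tuned on the actual chair.
constexpr std::uint32_t kFullMoveMs = 4000;
constexpr std::uint32_t kSmallMoveMs = 1000;

// Map a recognized record index to a direction and a run time.
// Unknown indices (and Stop) result in no movement.
Move moveForRecord(std::uint8_t record) {
    switch (static_cast<Command>(record)) {
        case Command::Up:          return {Direction::Raise, kFullMoveMs};
        case Command::Down:        return {Direction::Lower, kFullMoveMs};
        case Command::UpALittle:   return {Direction::Raise, kSmallMoveMs};
        case Command::DownALittle: return {Direction::Lower, kSmallMoveMs};
        default:                   return {Direction::None, 0};
    }
}
```

Keeping the mapping in one small function like this means the button handling and the voice handling could share the same motor code, with only the source of the command differing.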

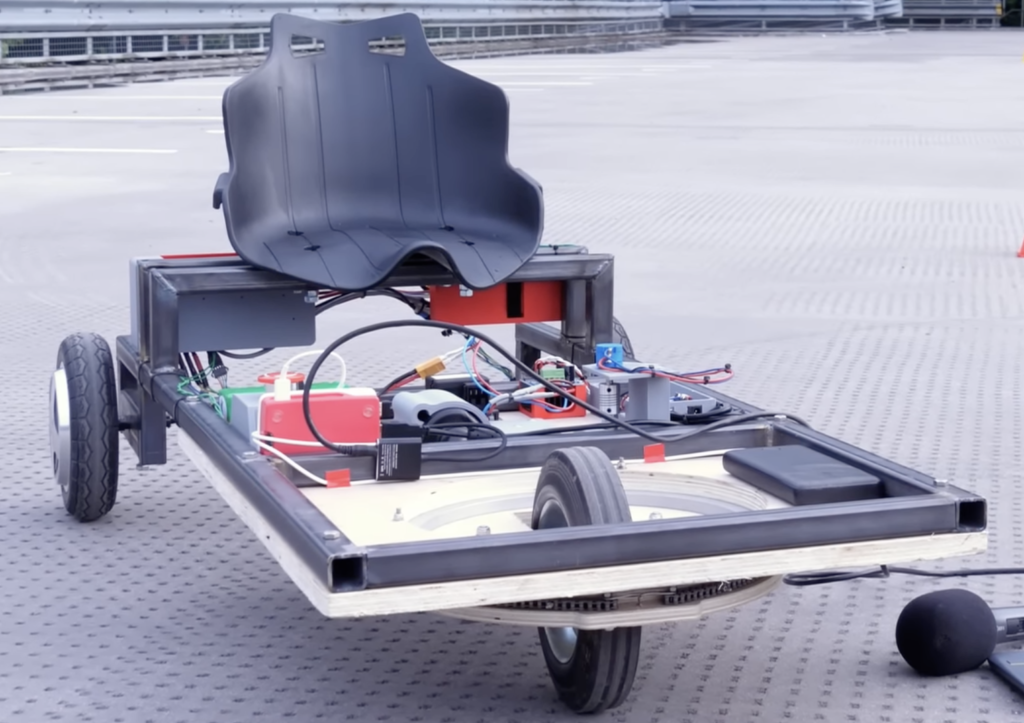

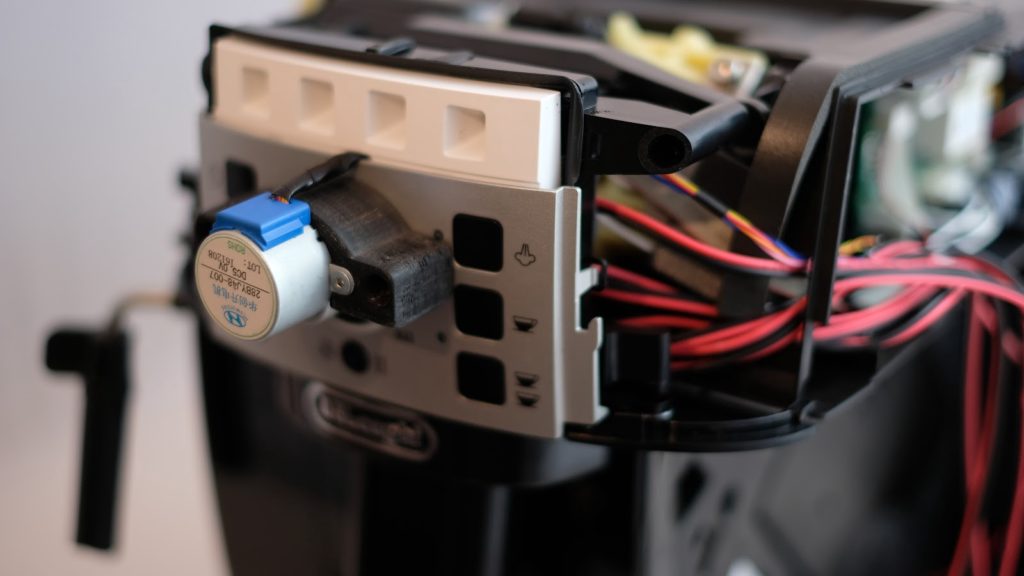

But there was still one problem: the movement was very slow. The jack was designed to lift a car, so it uses a high-torque motor with a 10:1 planetary gearset to drive a hydraulic pump. ElectronicLab didn’t need that much torque, so he welded the planetary gears together to give the motor a direct 1:1 ratio. Sadly, that was a mistake. The hydraulic oil can’t flow fast enough to keep up, so the motor pulls far more current than the driver can handle.
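A rough back-of-the-envelope calculation shows why removing the reduction hurts. The sketch below assumes an ideal, lossless gearset and a brushed DC motor whose current is roughly proportional to torque; neither assumption comes from the original post, and the function names are made up for illustration.

```cpp
// Ideal (lossless) gear reduction: the motor only has to supply
// the load torque divided by the gear ratio.
double motorTorque(double pumpTorqueNm, double gearRatio) {
    return pumpTorqueNm / gearRatio;
}

// Brushed DC motor approximation: current is proportional to torque.
// torqueConstantNmPerA is a property of the specific motor (assumed known).
double motorCurrent(double torqueNm, double torqueConstantNmPerA) {
    return torqueNm / torqueConstantNmPerA;
}

// For the same pump torque, how much more current the motor needs
// after changing from oldRatio:1 to newRatio:1.
double currentIncreaseFactor(double oldRatio, double newRatio) {
    return oldRatio / newRatio;
}
```

Under these assumptions, going from 10:1 to 1:1 means the motor has to deliver about ten times the torque, and so draw about ten times the current, for the same load on the pump. Driving the pump faster than the oil can flow would likely push the real figure higher still.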

Still, the voice control was a success, and ElectronicLab can simply swap out the motor.

The post Adjusting office chair height with simple voice commands appeared first on Arduino Blog.
