Tag: TinyML

-

tinyML in Malawi: Empowering local communities through technology

Reading Time: 3 minutes

Dr. David Cuartielles, co-founder of Arduino, recently participated in a workshop titled “TinyML for Sustainable Development” in Zomba, organized by the International Centre for Theoretical Physics (ICTP), a category 1 UNESCO institute, and the University of Malawi. Bringing together students, educators, and professionals from Malawi and neighboring countries, as well as international…

-

Reimagining the chicken coop with predator detection, Wi-Fi control, and more

Reading Time: 2 minutes

The traditional backyard chicken coop is a very simple structure that typically consists of a nesting area, an egg-retrieval panel, and a way to provide food and water as needed. Realizing that some aspects of raising chickens are too labor-intensive, the Coders Cafe crew decided to automate most of the daily care…

-

Making fire detection more accurate with ML sensor fusion

Reading Time: 2 minutes

The mere presence of a flame in a controlled environment, such as a candle, is perfectly acceptable, but when tasked with determining if there is cause for alarm solely using vision data, embedded AI models can struggle with false positives. Solomon Githu’s project aims to lower the rate of incorrect detections with a multi-input…

-

Making a car more secure with the Arduino Nicla Vision

Reading Time: 2 minutes

Shortly after attending a recent tinyML workshop in São Paulo, Brazil, Joao Vitor Freitas da Costa was looking for a way to incorporate some of the technologies and techniques he learned into a useful project. Given that he lives in an area that experiences elevated levels of pickpocketing and automotive theft, he turned…

-

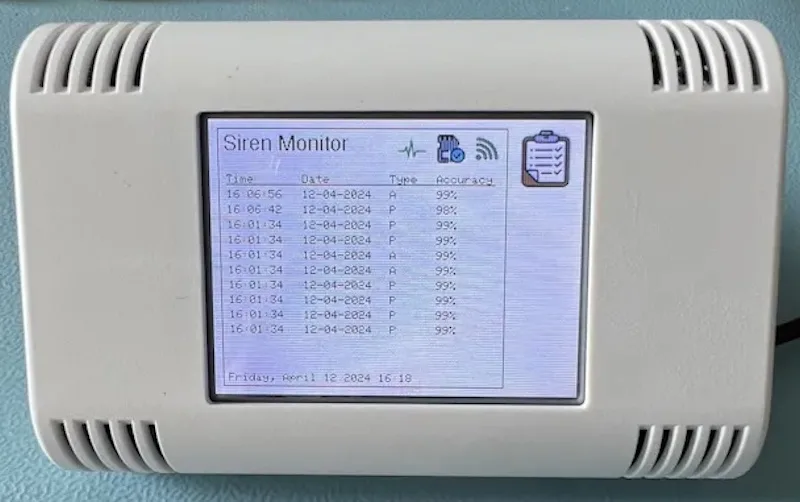

Classify nearby annoyances with this sound monitoring device

Reading Time: 2 minutes

Soon after a police station opened near his house, Christopher Cooper noticed a substantial increase in the amount of emergency vehicle traffic and their associated noises even though local officials had promised that it would not be disruptive. But rather than write down every occurrence to track the volume of disturbances, he came up with…

-

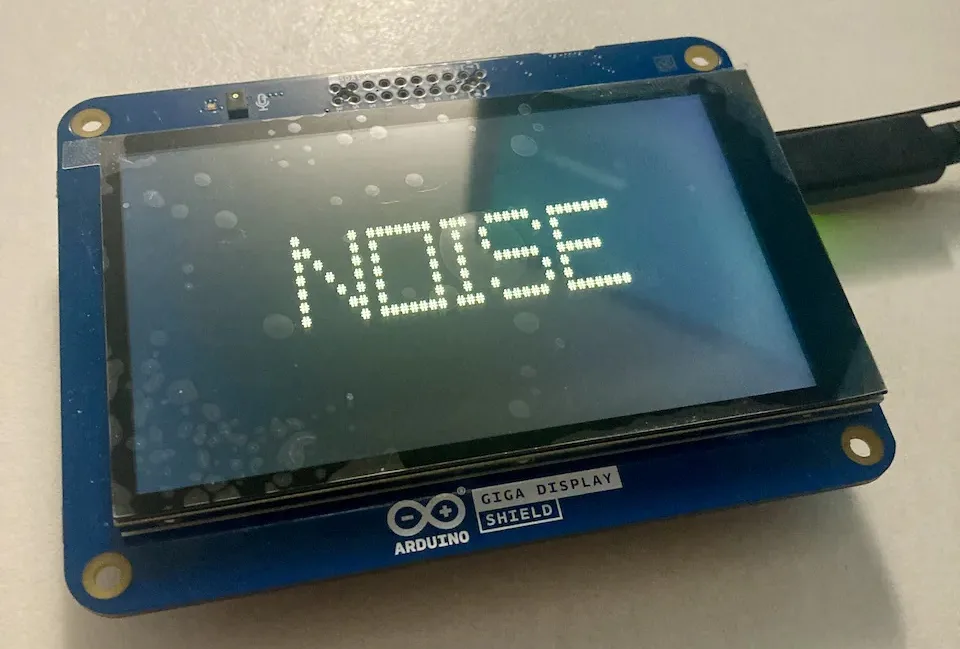

Classifying audio on the GIGA R1 WiFi from purely synthetic data

Reading Time: 2 minutes

One of the main difficulties that people encounter when trying to build their edge ML models is gathering a large, yet simultaneously diverse, dataset. Audio models normally require setting up a microphone, capturing long sequences of sounds, and then manually removing bad data from the resulting files. Shakhizat Nurgaliyev’s project, however, eliminates the…

-

Controlling a power strip with a keyword spotting model and the Nicla Voice

Reading Time: 2 minutes

As Jallson Suryo discusses in his project, adding voice controls to our appliances typically involves an internet connection and a smart assistant device such as Amazon Alexa or Google Assistant. This means extra latency, security concerns, and increased expenses due to the additional hardware and bandwidth requirements. This is why he created a…

-

Improve recycling with the Arduino Pro Portenta C33 and AI audio classification

Reading Time: 2 minutes

In July 2023, Samuel Alexander set out to reduce the amount of trash that gets thrown out due to poor sorting practices at the recycling bin. His original design relied on an Arduino Nano 33 BLE Sense to capture audio through its onboard microphone and then perform edge audio classification with an…

-

Building the OG smartwatch from Inspector Gadget

Reading Time: 2 minutes

We recently showed you Becky Stern’s recreation of the “computer book” carried by Penny in the Inspector Gadget cartoon, but Stern didn’t stop there. She also built a replica of Penny’s most iconic gadget: her watch. Penny was a trendsetter and rocked that decades before the Apple Watch hit the market. Stern’s replica looks just…

-

Improving comfort and energy efficiency in buildings with automated windows and blinds

Reading Time: 2 minutes

When dealing with indoor climate controls, there are several variables to consider, such as the outside weather, people’s tolerance to hot or cold temperatures, and the desired level of energy savings. Windows can make this extra challenging, as they let in large amounts of light/heat and can create poorly insulated regions, which…

-

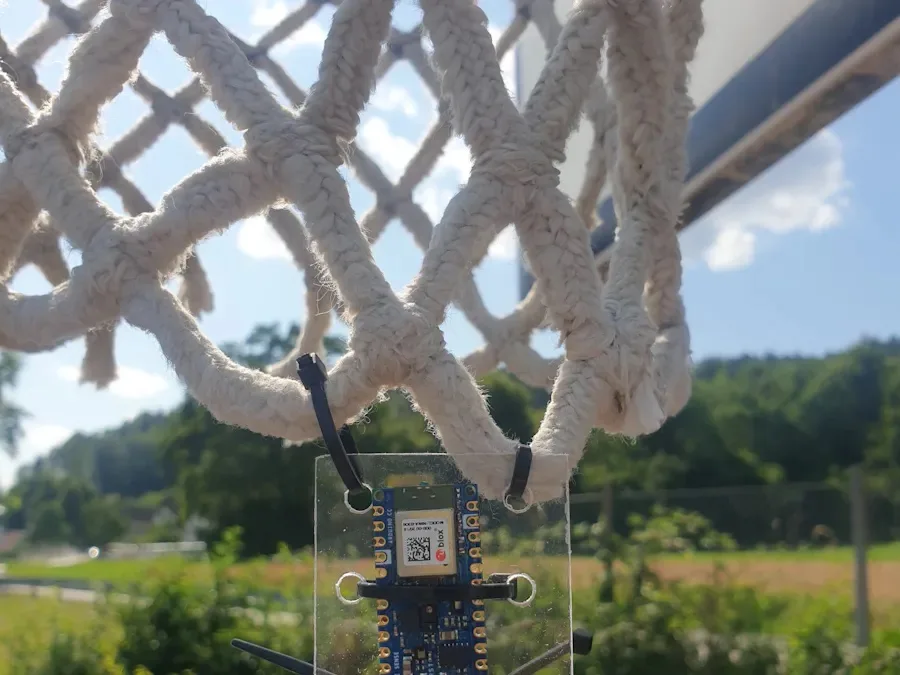

Nothin’ but (neural) net: Track your basketball score with a Nano 33 BLE Sense

Reading Time: 2 minutes

When playing a short game of basketball, few people enjoy having to consciously track their number of successful throws. Yet when it comes to automation, nearly all systems rely on infrared or visual proximity detection as a way to determine when a shot has gone through the basket versus missed. This is…

-

Helping robot dogs feel through their paws

Reading Time: 2 minutes

Your dog has nerve endings covering its entire body, giving it a sense of touch. It can feel the ground through its paws and use that information to gain better traction or detect harmful terrain. For robots to perform as well as their biological counterparts, they need a similar level of sensory…

-

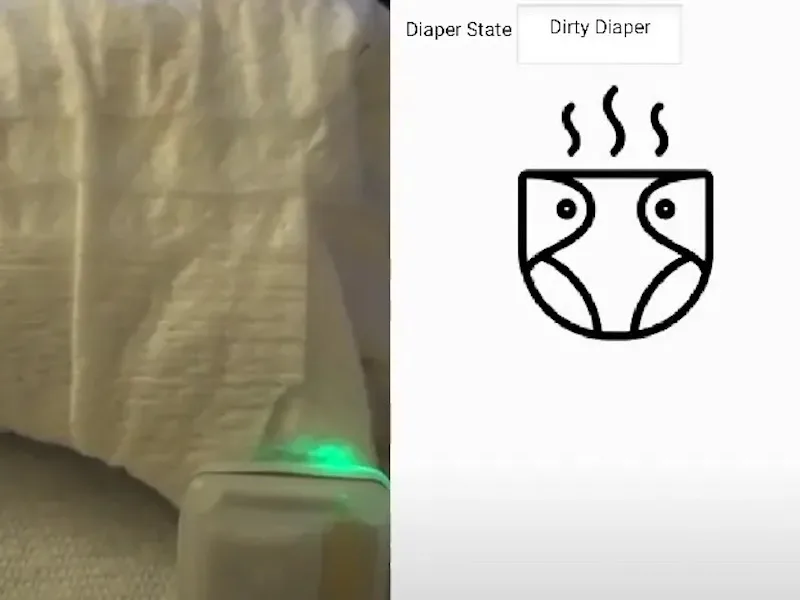

This smart diaper knows when it is ready to be changed

Reading Time: 2 minutes

The traditional method for changing a diaper starts when someone smells or feels that the diaper has been soiled, and while it isn’t the greatest process, removing the soiled diaper as soon as possible is important for avoiding rashes and infections. Justin Lutz has created an intelligent solution to this situation by designing…

-

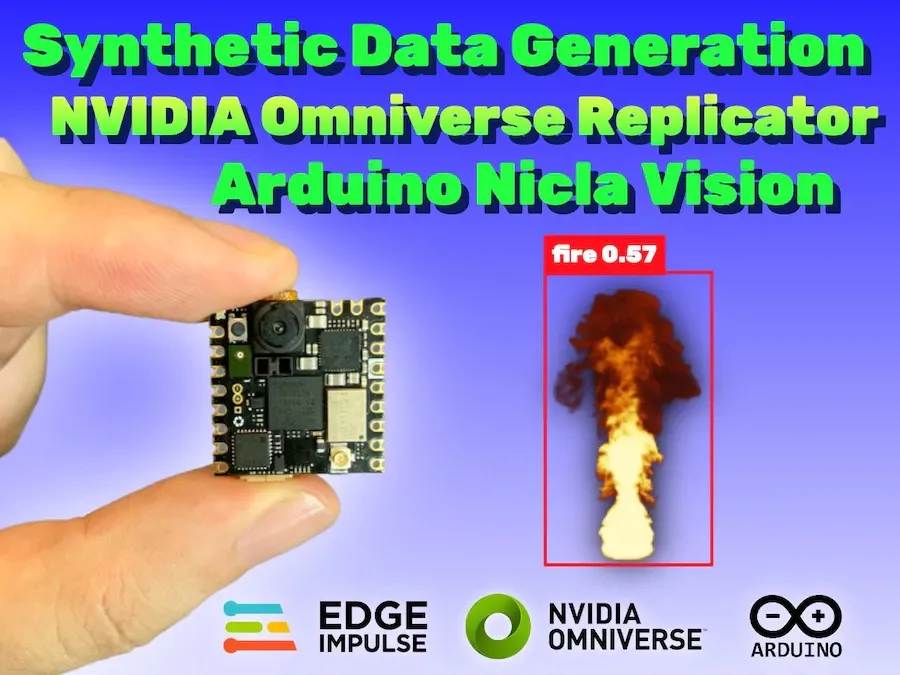

This Nicla Vision-based fire detector was trained entirely on synthetic data

Reading Time: 2 minutes

With the planet warming due to climate change and wildfire risk rising sharply because of prolonged droughts, being able to quickly detect when a fire has broken out is vital for responding while it’s still in a containable stage. But one major hurdle to collecting machine learning model datasets on these…

-

Small-footprint keyword spotting for low-resource languages with the Nicla Voice

Reading Time: 2 minutes

Speech recognition is everywhere these days, yet some languages, such as Shakhizat Nurgaliyev and Askat Kuzdeuov’s native Kazakh, lack sufficiently large public datasets for training keyword spotting models. To make up for this disparity, the duo explored generating synthetic datasets using a neural text-to-speech system called Piper, and then extracting speech commands from the audio with…

-

This recycling bin sorts waste using audio classification

Reading Time: 2 minutes

Although a large percentage of our trash can be recycled, only a small percentage actually makes it to the proper facility due, in part, to being improperly sorted. So as an effort to help keep more of our trash out of landfills without the need for extra work, Samuel Alexander built a smart recycling…

-

Predicting soccer games with ML on the UNO R4 Minima

Reading Time: 2 minutes

Based on the Renesas RA4M1 microcontroller, the new Arduino UNO R4 boasts 16x the RAM, 8x the flash, and a much faster CPU compared to the previous UNO R3. This means that unlike its predecessor, the R4 is capable of running machine learning at the edge to perform inferencing of incoming data.…

-

Meet Arduino Pro at tinyML EMEA Innovation Forum 2023

Reading Time: 3 minutes

On June 26th-28th, the Arduino Pro team will be in Amsterdam for the tinyML EMEA Innovation Forum – one of the year’s major events for the world where AI models meet agile, low-power devices. This is an exciting time for companies like Arduino and anyone interested in accelerating the adoption of tiny…

-

Enabling automated pipeline maintenance with edge AI

Reading Time: 2 minutes

Pipelines are integral to our modern way of life, as they enable the fast transportation of water and energy between central providers and the eventual consumers of that resource. However, the presence of cracks from mechanical or corrosive stress can lead to leaks, and thus waste of product or even potentially dangerous…