Tag: robotics

-

Alvik Fight Club: A creative twist on coding, competition, and collaboration

Reading Time: 3 minutes

What happens when you hand an educational robot to a group of developers and ask them to build something fun? At Arduino, you get a multiplayer robot showdown that’s part battle, part programming lesson, and entirely Alvik. The idea for Alvik Fight Club first came to life during one of our internal…

-

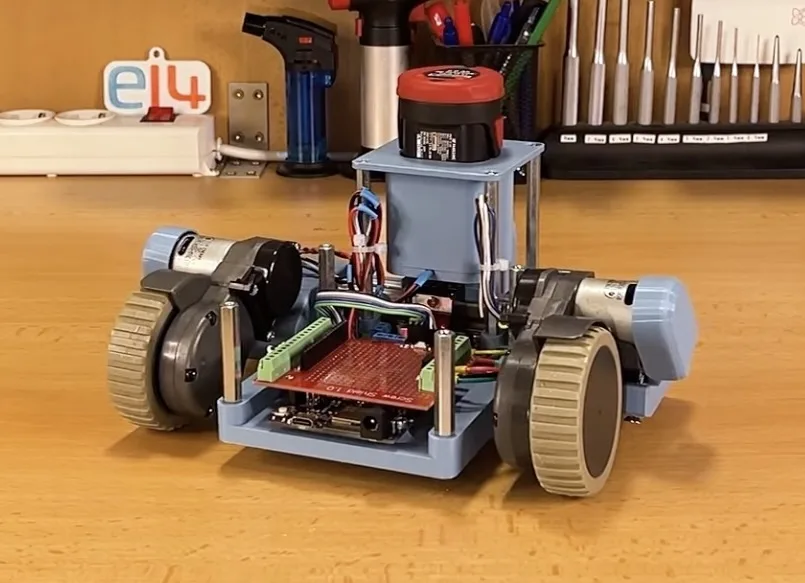

The PlatypusBot is a TurtleBot3-inspired robot built from vacuum cleaner parts

Reading Time: 2 minutes

We all love the immense convenience provided by robot vacuum cleaners, but what happens when they get too old to function? Rather than throwing it away, Milos Rasic from element14 Presents wanted to extract the often-expensive components and repurpose them into an entirely new robot, inspired by the TurtleBot3: the PlatypusBot. Rasic…

-

This three-fingered robot hand makes use of serial bus servos

Reading Time: 2 minutes

A small startup called K-Scale Labs is in the process of developing an affordable, open-source humanoid robot and Mike Rigsby wanted to build a compatible hand. This three-fingered robot hand is the result, and it makes use of serial bus servos from Waveshare. Most Arduino users are familiar with full-duplex serial communication,…

-

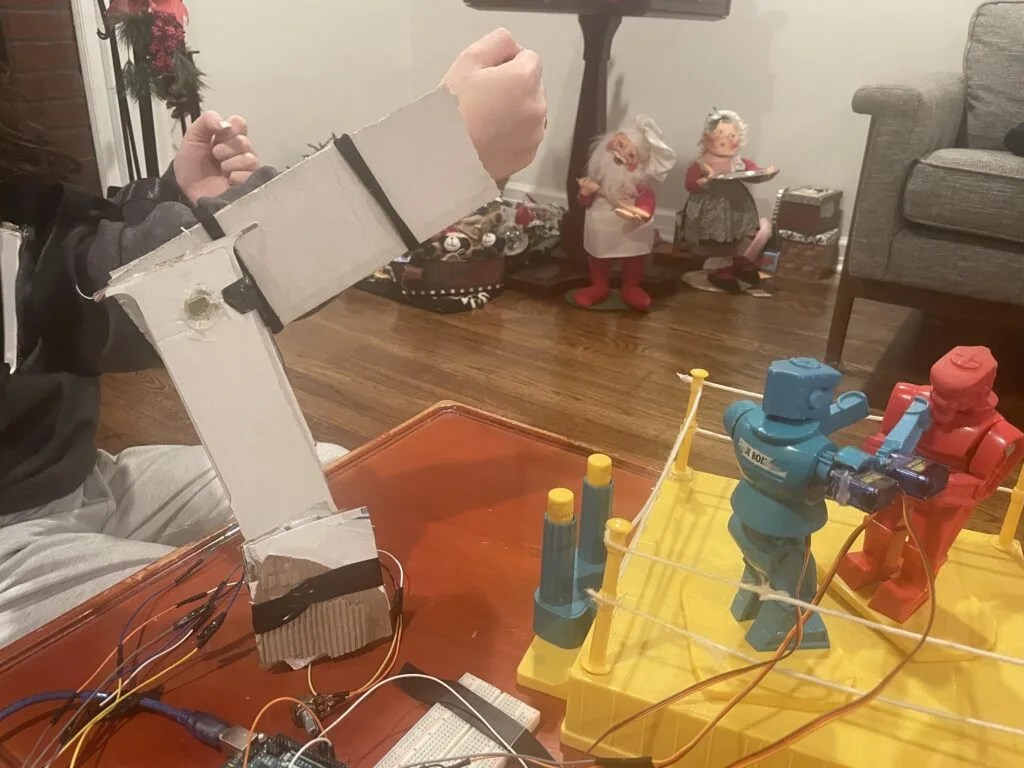

Motion-controlled Rock ‘Em Sock ‘Em Robots will make you feel like Jackman in Real Steel

Reading Time: 2 minutes

2011’s Real Steel may have vanished from the public consciousness in a remarkably short amount of time, but the concept was pretty neat. There is something exciting about the idea of fighting through motion-controlled humanoid robots. That is completely possible today; it would just be wildly expensive at the scale seen in the…

-

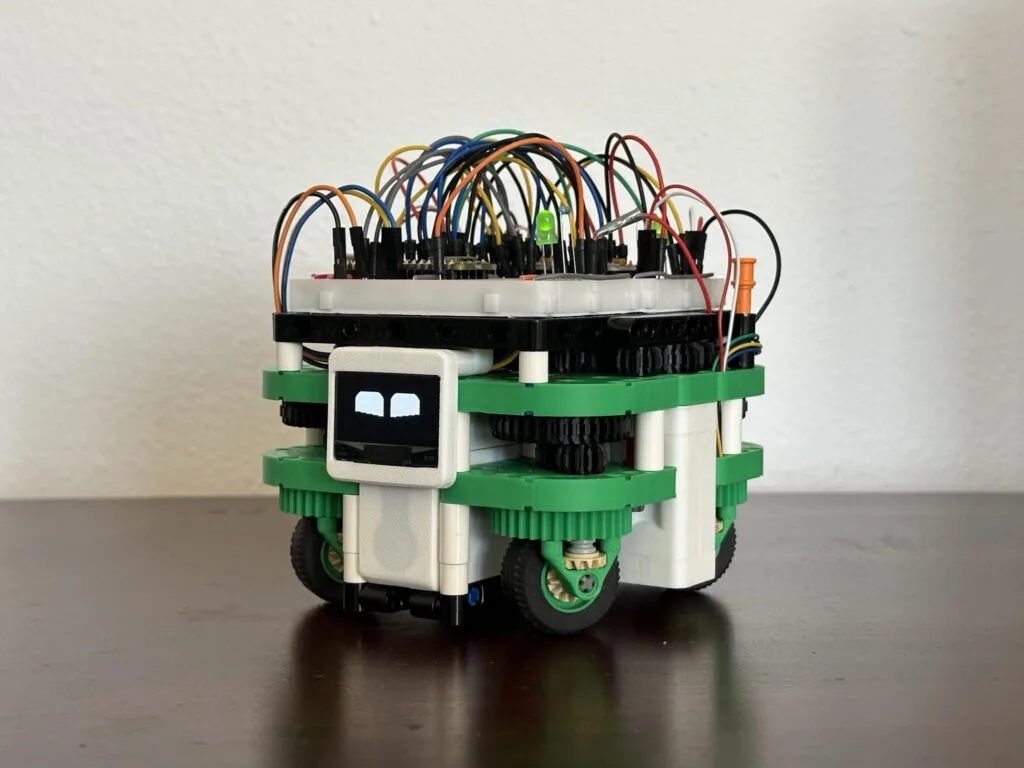

The Swervebot is an omnidirectional robot that combines LEGO and 3D-printed parts

Reading Time: 2 minutes

Robotic vehicles can have a wide variety of drive mechanisms that range from a simple tricycle setup all the way to crawling legs. Alex Le’s project leverages the reliability of LEGO blocks with the customizability of 3D-printed pieces to create a highly mobile omnidirectional robot called Swervebot, which is controllable over Wi-Fi thanks…

-

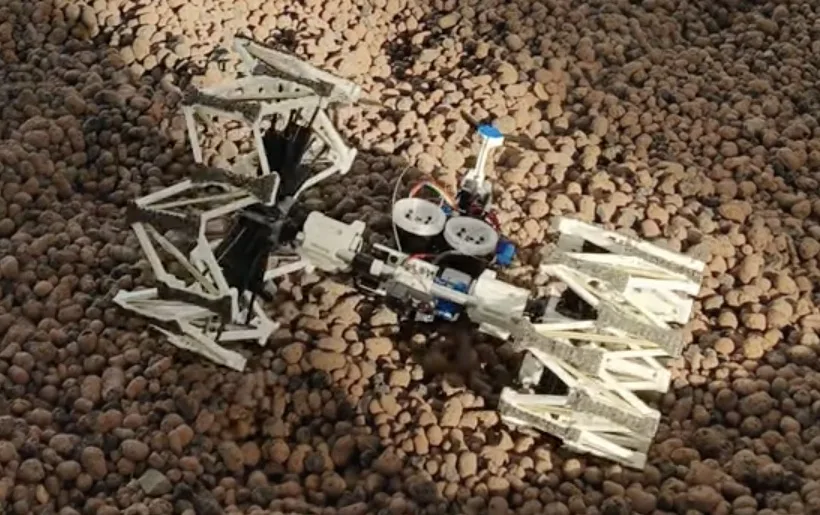

This robot can dynamically change its wheel diameter to suit the terrain

Reading Time: 2 minutes

A vehicle’s wheel diameter has a dramatic effect on several aspects of performance. The most obvious is gearing, with larger wheels increasing the ultimate gear ratio, though transmission and transfer case gearing can counteract that. But wheel size also affects mobility over terrain, which is why Gourav Moger and Huseyin Atakan…

-

Exploring Alvik: 3 fun and creative projects with Arduino’s educational robot platform

Reading Time: 3 minutes

Alvik is cute, it’s smart, it’s fun… so what can it actually do? To answer this question, we decided to have fun and put the robot to the test with some of the most creative people we know – our own team! A dozen Arduino employees volunteered for a dedicated Make Tank…

-

Exploring fungal intelligence with biohybrid robots powered by Arduino

Reading Time: 3 minutes

At Cornell University, Dr. Anand Kumar Mishra and his team have been conducting groundbreaking research that brings together the fields of robotics, biology, and engineering. Their recent experiments, published in Science, explore how fungal mycelia can be used to control robots. The team has successfully created biohybrid robots that move based on…

-

This perplexing robotic performer operates under the control of three different Arduino boards

Reading Time: 2 minutes

Every decade or two, humanity seems to develop a renewed interest in humanoid robots and their potential within our world. Because the practical applications are actually pretty limited (given the high cost), we inevitably begin to consider how those robots might function as entertainment. But Jon Hamilton did more than just wonder,…

-

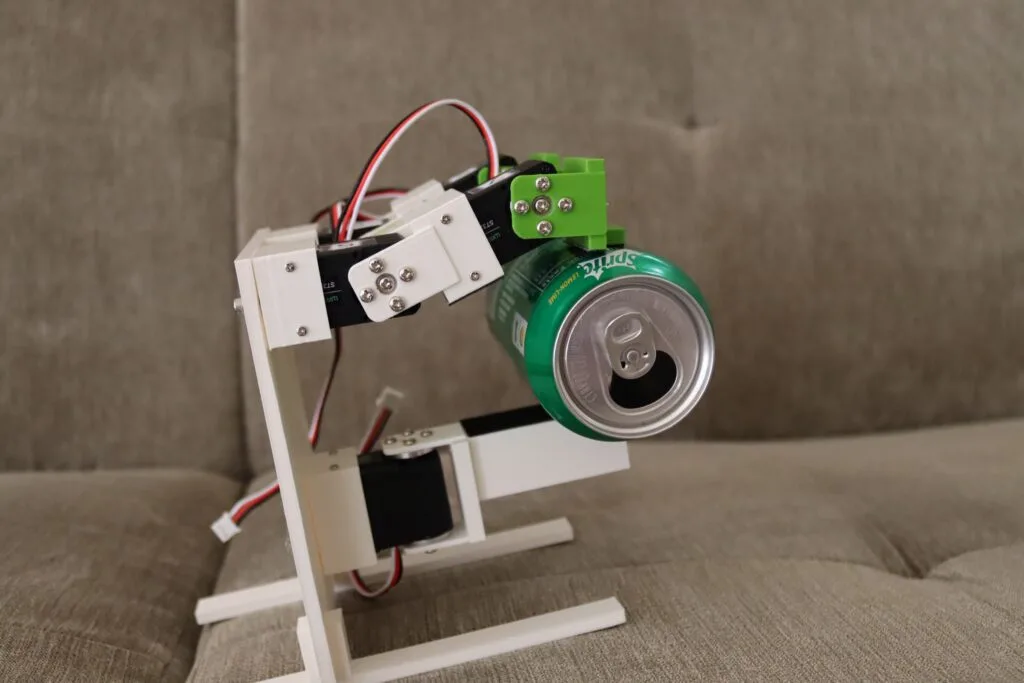

This 3D-printed robotic arm can be built with just a few inexpensive components

Reading Time: 2 minutes

Robotics is already an intimidating field, thanks to the complexity involved. And the cost of parts, such as actuators, only increases that feeling of inaccessibility. But as FABRI Creator shows in their most recent video, you can build a useful robotic arm with just a handful of inexpensive components. This is pint-sized…

-

Amassing a mobile Minion militia

Reading Time: 2 minutes

Channeling his inner Gru, YouTuber Electo built a robotic minion army to terrorize and amuse the public in local shopping malls. Building one minion robot is, in theory, pretty straightforward. That is especially true when, like these, that robot isn’t actually bipedal and instead rolls around on little wheels attached to the…

-

Meet Real Robot One V2: A mini DIY industrial robot arm

Reading Time: 2 minutes

Started in 2022 as an exploration of what’s possible in the field of DIY robotics, Pavel Surynek’s Real Robot One (RR1) project is a fully-featured 6+1-axis robot arm based on 3D-printed parts and widely available electronics. The initial release was constructed with PETG filament, custom gearboxes for transferring the motor torque to the actuators,…

-

What if robots could communicate with humans by emitting scents?

Reading Time: 2 minutes

Almost all human-robot interaction (HRI) approaches today rely on three senses: hearing, sight, and touch. Your robot vacuum might beep at you, or play recorded or synthesized speech. An LED on its enclosure might blink red to signify a problem. And cutting-edge humanoid robots may even shake your hand. But what…

-

Massive tentacle robot draws massive attention at EMF Camp

Reading Time: 2 minutes

Most of the robots we feature only require a single Arduino board, because one Arduino can control several motors and monitor a bunch of sensors. But what if the robot is enormous and the motors are far apart? James Bruton found himself in that situation when he constructed this huge “tentacle” robot…

-

Meet Mr. Wallplate, an animatronic wall plate that speaks to you

Reading Time: 2 minutes

Interactive robots always bring an element of intrigue, and even more so when they feature unusual parts and techniques to perform their actions. Mr. Wallplate, affectionately named by Tony K on Instructables, is one such robot that is contained within an electrical wall plate and uses a servo motor connected to an Arduino UNO…

-

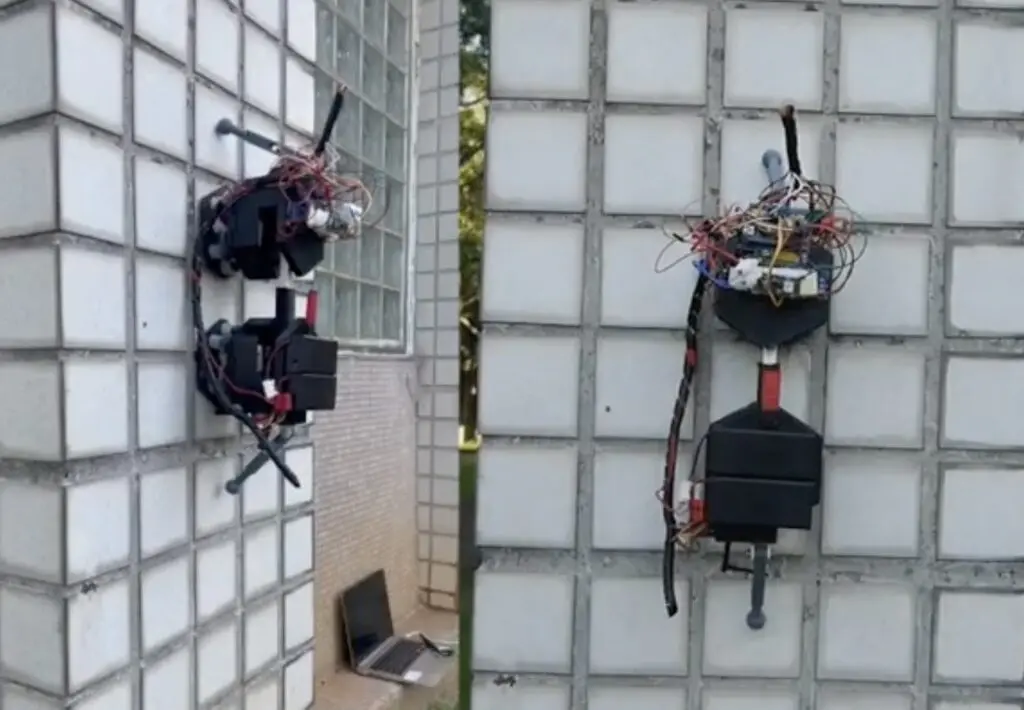

GLEWBOT scales buildings like a gecko to inspect wall tiles

Reading Time: 2 minutes

A great deal of building maintenance expense is the result of simple inaccessibility. Cleaning the windows on your house is a trivial chore, but cleaning the windows on a skyscraper is a serious undertaking that needs specialized equipment and training. To make exterior wall tile inspection efficient and affordable, the GLEWBOT team turned…

-

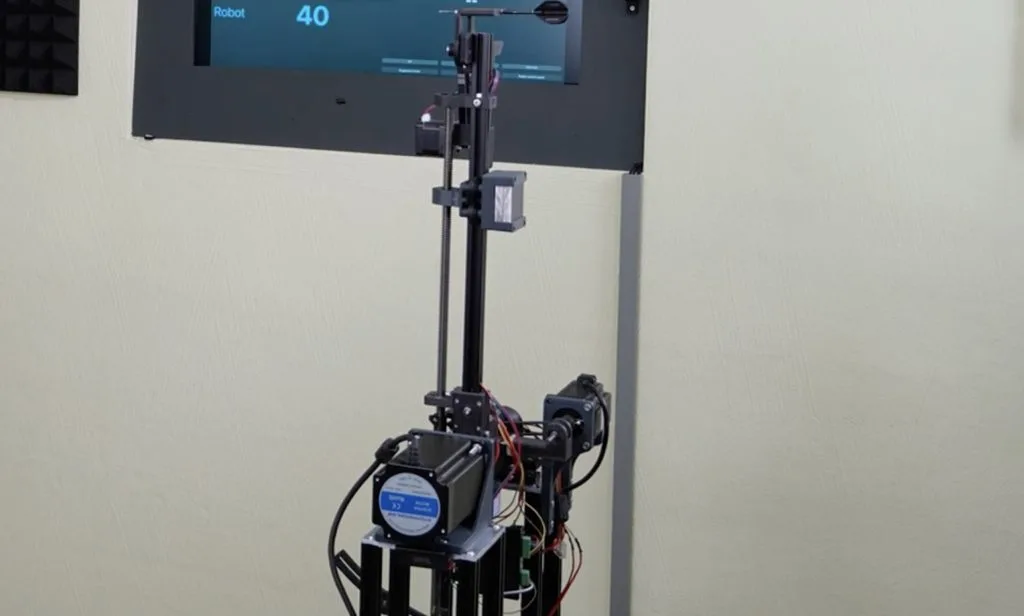

This robot dominates dart games

Reading Time: 2 minutes

You’ll find dartboards in just about every dive bar in the world, like cheaper and pokier alternatives to pool. But that doesn’t mean that darts is a casual game to everyone. It takes a lot of skill to play on a competitive level and many of us struggle to perform well. Niklas…

-

Can I build my own robot with Arduino?

Reading Time: 5 minutes

When you think of automation, what’s the first image that comes to mind? For many of us, it’s a robot. From the blocky, square-headed characters of sci-fi comic fame to more complex creations like the Replicants of Blade Runner, robots have captured our collective imagination for a long time. It’s…

-

Autochef-9000 can cook an entire breakfast automatically

Reading Time: 2 minutes

Fans of Wallace and Gromit will all remember two things about the franchise: the sort of creepy (but mostly delightful) stop-motion animation and Wallace’s Rube Goldberg-esque inventions. YouTuber Gregulations was inspired by Wallace’s Autochef breakfast-cooking contraption and decided to build his own robot to prepare morning meals. Gregulations wanted his Autochef-9000 to…

-

Build yourself this simple app-controlled robot dog

Reading Time: 2 minutes

If you have an interest in robotics, it can be really difficult to know where to start. There are so many designs and kits out there that it becomes overwhelming. But it is best to start with the basics and then expand from there after you learn the ropes. One way to…

-

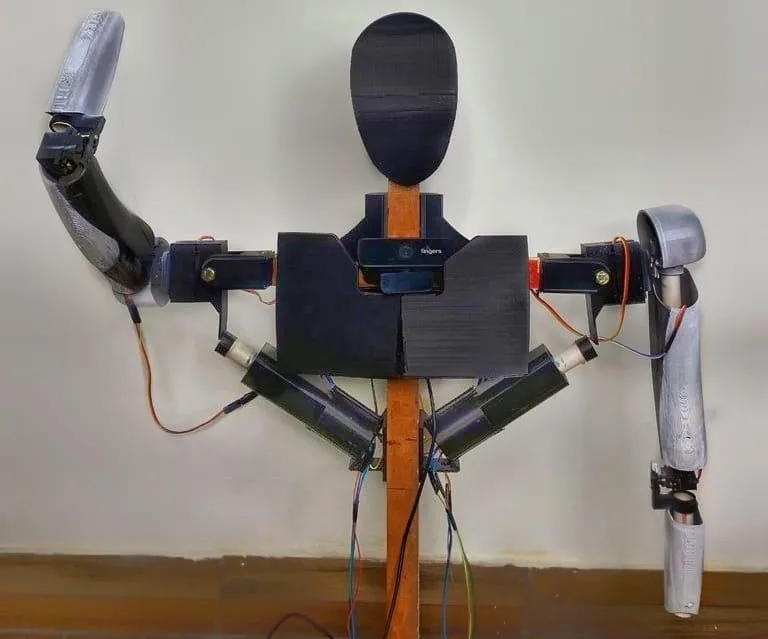

This DIY humanoid robot talks back to you

Reading Time: 2 minutes

Most people with an interest in robotics probably dream of building android-style humanoid robots. But when they dip their toes into the field, they quickly learn the reality that such robots are incredibly complex and expensive. However, everyone needs to start somewhere. If you want to begin that journey, you can follow…

-

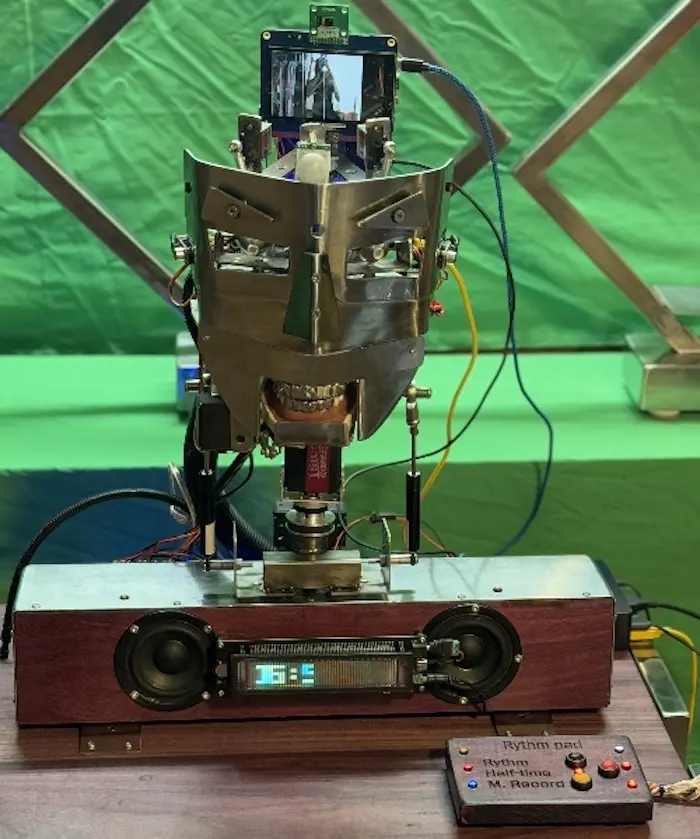

TAST-E is an animatronic robot head with a sense of taste and smell

Reading Time: 2 minutes

There are many theories that attempt to explain the uncanny valley, which is a range of humanoid realness that is very disconcerting to people. When something looks almost human, we find it disturbing. That often applies to robots with faces, or robots that are faces, as is the case with the TAST-E robot that has…