Tag: OpenCV

-

LEGO-firing turret targets tender tootsies

Reading Time: 2 minutes

Arduino Team, August 4th, 2022. Stepping on LEGO bricks is a meme for a reason: it really @#$%&! hurts. LEGO brick design is ingenious, but the engineers did not consider the ramifications of their minimalist construction system. We’ve seen people do crazy things for Internet points, such as walk across a…

-

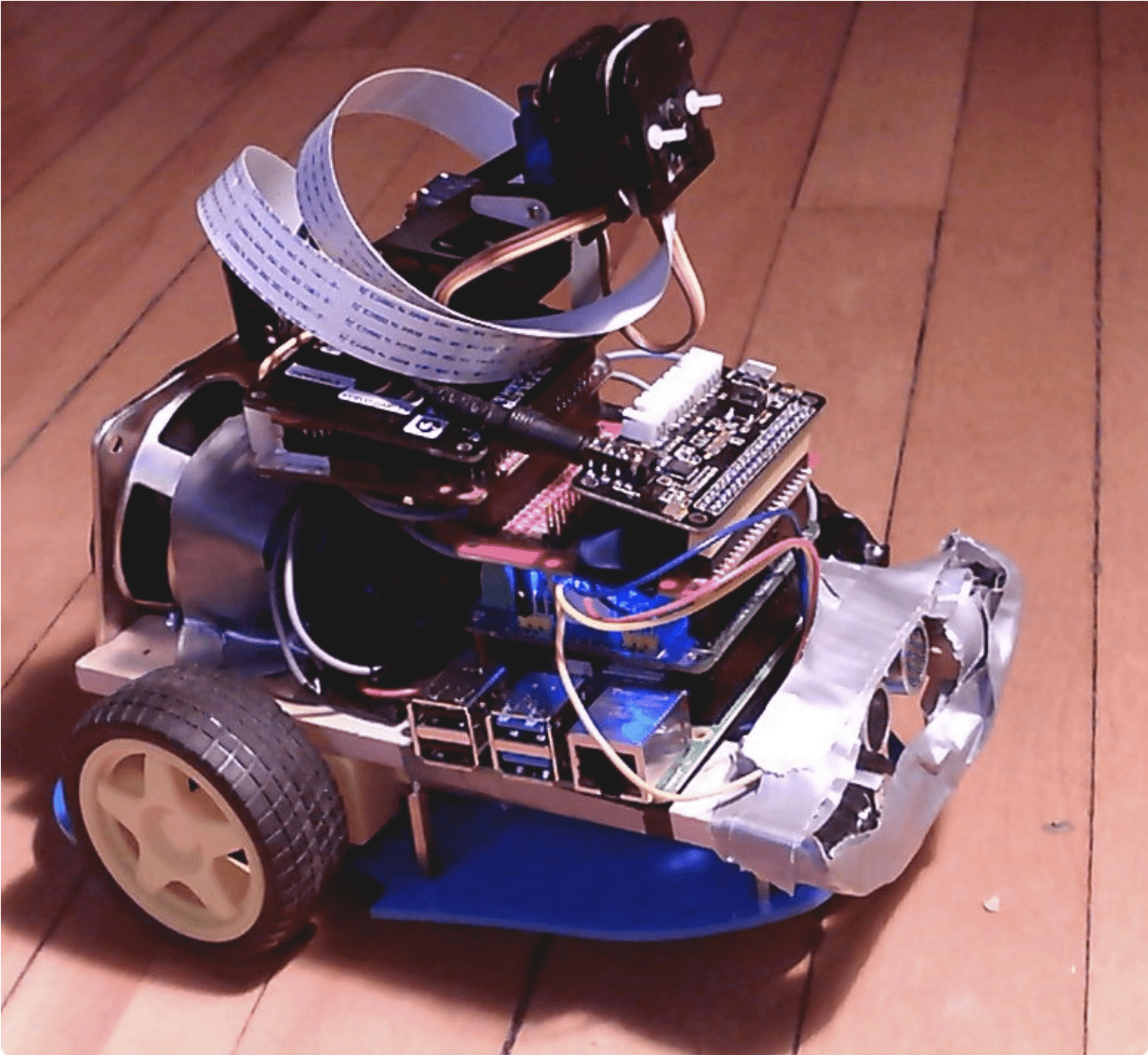

Nandu’s lockdown Raspberry Pi robot project

Reading Time: 2 minutes

Nandu Vadakkath was inspired by a line-following robot, built (literally) entirely from salvaged materials, that could wait patiently and purchase beer for its maker in Tamil Nadu, India. So he set about making his own, but with the goal of making it capable of slightly more sophisticated tasks. “Robot, can…

-

Raspberry Pi retro player

Reading Time: 2 minutes

We found this project at TeCoEd and we loved the combination of an OLED display housed inside a retro Argus slide viewer. It uses a Raspberry Pi 3 with Python and OpenCV to pull out single frames from a video and write them to the display in real time. TeCoEd names this…

-

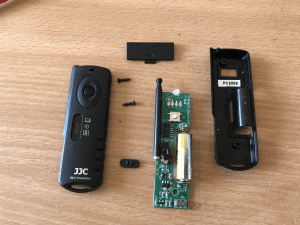

DSLR Motion Capture with Raspberry Pi and OpenCV

Reading Time: 3 minutes

One of our favourite makers, Pi & Chips (AKA David Pride), wanted to see if they could trigger a DSLR camera to take pictures by using motion detection with OpenCV on Raspberry Pi. You could certainly do this with a Raspberry Pi High Quality Camera, but David wanted to try with his…

-

This clock really, really doesn’t want to tell you the time

Reading Time: 2 minutes

What’s worse than a clock that doesn’t work? One that makes an “unbearably loud screeching noise” every minute of every day is a strong contender. That was the aural nightmare facing YouTuber Burke McCabe. But rather than just fix the problem, he decided, in true Raspberry Pi community fashion, to go one…

-

Take the Wizarding World of Harry Potter home with you

Reading Time: 3 minutes

If you’ve visited the Wizarding World of Harry Potter and found yourself in possession of an interactive magic wand as a souvenir, then you’ll no doubt be wondering by now, “What do I do with it at home though?” While the wand was great for setting off window displays at the park…

-

Playback your favourite records with Plynth

Reading Time: 2 minutes

Use album artwork to trigger playback of your favourite music with Plynth, the Raspberry Pi–powered, camera-enhanced record stand. Plynth uses a Raspberry Pi and Pi Camera…

-

Sean Hodgins’ Haunted Jack in the Box

Reading Time: 3 minutes

After making a delightful Bitcoin lottery using a Raspberry Pi, Sean Hodgins brings us more Pi-powered goodness in time for every maker’s favourite holiday: Easter! Just kidding, it’s Halloween. Check out his hair-raising new build, the Haunted Jack in the Box…