Tag: Nicla Vision

-

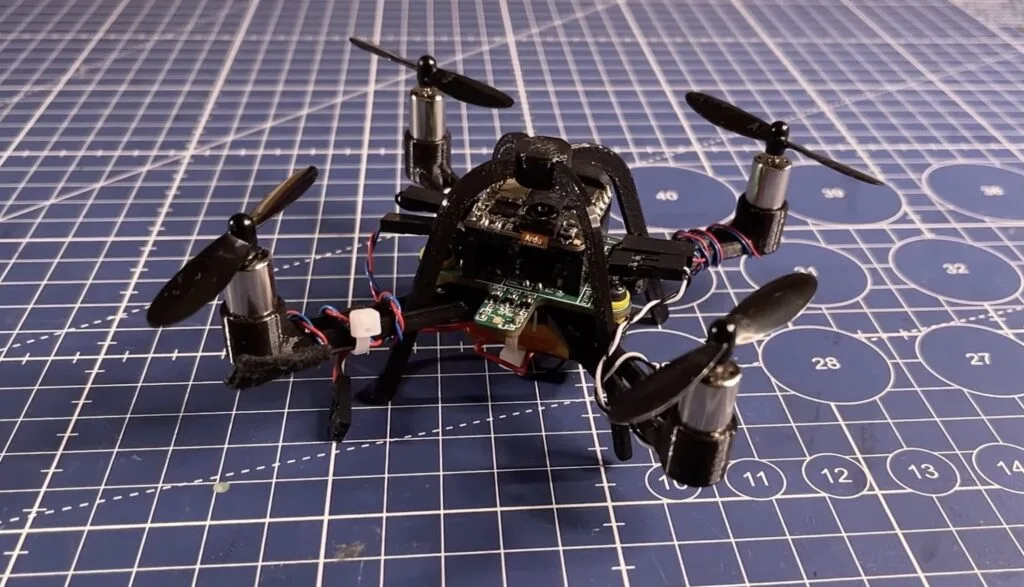

Using an Arduino Nicla Vision as a drone flight controller

Reading Time: 2 minutes

Drone flight controllers do so much more than simply receive signals and tell the drone which way to move. They’re responsible for constantly tweaking the motor speeds in order to maintain stable flight, even with shifting winds and other unpredictable factors. For that reason, most flight controllers are purpose-built for the job.…

-

Making a car more secure with the Arduino Nicla Vision

Reading Time: 2 minutes

Shortly after attending a recent tinyML workshop in São Paulo, Brazil, Joao Vitor Freitas da Costa was looking for a way to incorporate some of the technologies and techniques he learned into a useful project. Given that he lives in an area that experiences elevated levels of pickpocketing and automotive theft, he turned…

-

Empowering the transportation of the future, with the Ohio State Buckeye Solar Racing Team

Reading Time: 3 minutes

Arduino is ready to graduate its educational efforts in support of university-level STEM and R&D programs across the United States: this is where students come together to explore the solutions that will soon define their future, in terms of both their personal careers and, more importantly, their impact on the world. Case…

-

This Nicla Vision-powered ornament covertly spies on the presents below

Reading Time: 2 minutes

Whether it’s an elf that stealthily watches from across the room or an all-knowing Santa Claus that keeps a list of one’s actions, spying during the holidays is nothing new. But when it comes time to receive presents, the more eager among us might want to know what presents await us a…

-

Helping robot dogs feel through their paws

Reading Time: 2 minutes

Your dog has nerve endings covering its entire body, giving it a sense of touch. It can feel the ground through its paws and use that information to gain better traction or detect harmful terrain. For robots to perform as well as their biological counterparts, they need a similar level of sensory…

-

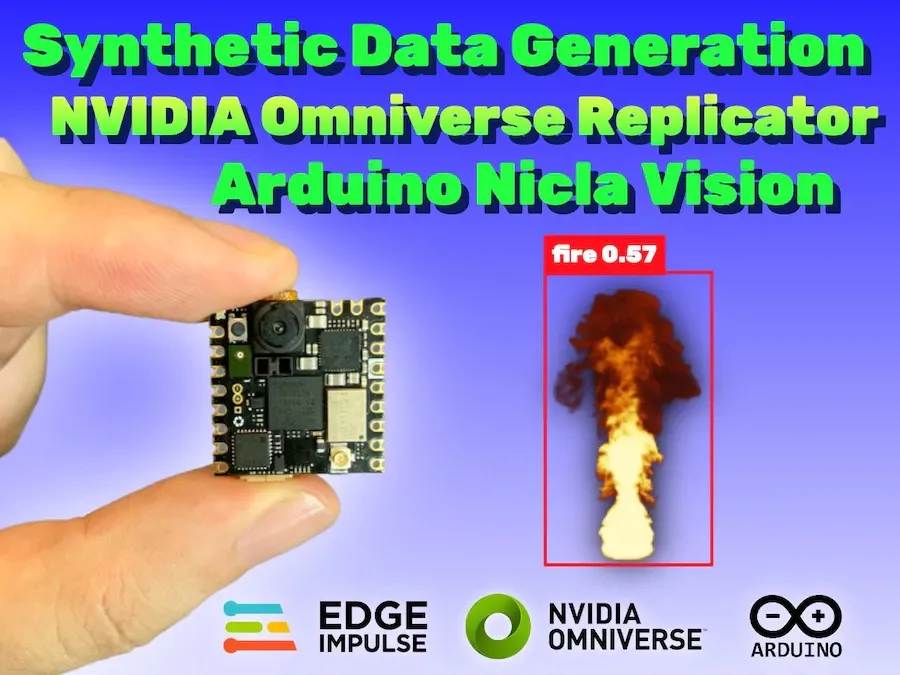

This Nicla Vision-based fire detector was trained entirely on synthetic data

Reading Time: 2 minutes

With climate change steadily warming the planet and prolonged droughts greatly increasing the chance of wildfires, quickly detecting when a fire has broken out is vital for responding while it’s still in a containable stage. But one major hurdle to collecting machine learning model datasets on these…

-

Intelligently control an HVAC system using the Arduino Nicla Vision

Reading Time: 2 minutes

Shortly after someone sets the desired temperature of a room, a building’s HVAC system engages and works to either raise or lower the ambient temperature to match. While this approach generally works well to control the local environment, the strategy also wastes a tremendous amount of energy since it is unable to…

-

Meet Arduino Pro at tinyML EMEA Innovation Forum 2023

Reading Time: 3 minutes

On June 26th-28th, the Arduino Pro team will be in Amsterdam for the tinyML EMEA Innovation Forum, one of the year’s major events for the world where AI models meet agile, low-power devices. This is an exciting time for companies like Arduino and anyone interested in accelerating the adoption of tiny…

-

Enabling automated pipeline maintenance with edge AI

Reading Time: 2 minutes

Pipelines are integral to our modern way of life, as they enable the fast transportation of water and energy between central providers and the eventual consumers of that resource. However, cracks caused by mechanical or corrosive stress can lead to leaks, and thus to wasted product or even potentially dangerous…

-

Want to keep accurate inventory? Count containers with the Nicla Vision

Reading Time: 2 minutes

Maintaining accurate records of both the quantities and locations of inventory is vital for running business operations efficiently and at scale. By leveraging new technologies such as AI and computer vision, items in warehouses, on store shelves, and even in a customer’s hand can be better managed and used to forecast changes in demand.…

-

Vineyard pest monitoring with Arduino Pro

Reading Time: 7 minutes

The challenge

Pest monitoring is essential for the proper management of any vineyard, as it allows for the early detection and management of any potential pest infestations. By regularly monitoring the vineyard, growers can identify pests at early stages and take action to prevent further damage. Monitoring can also provide valuable data…

-

Environmental monitoring of corporate offices with Arduino Pro

Reading Time: 7 minutes

The challenge

The quality of the air we breathe has a direct impact on our health. Poor air quality can cause a variety of health problems, including respiratory infections, headaches, and fatigue. It can also aggravate existing conditions such as asthma and allergies. That’s why it’s so important to monitor the air…

-

Count elevator passengers with the Nicla Vision and Edge Impulse

Reading Time: 3 minutes

Modern elevators are powerful, but they still have a payload limit. Most will contain a plaque with the maximum number of passengers (a number based on their average weight with lots of room for error). But nobody has ever read the capacity limit when stepping into an elevator or worried about exceeding…

-

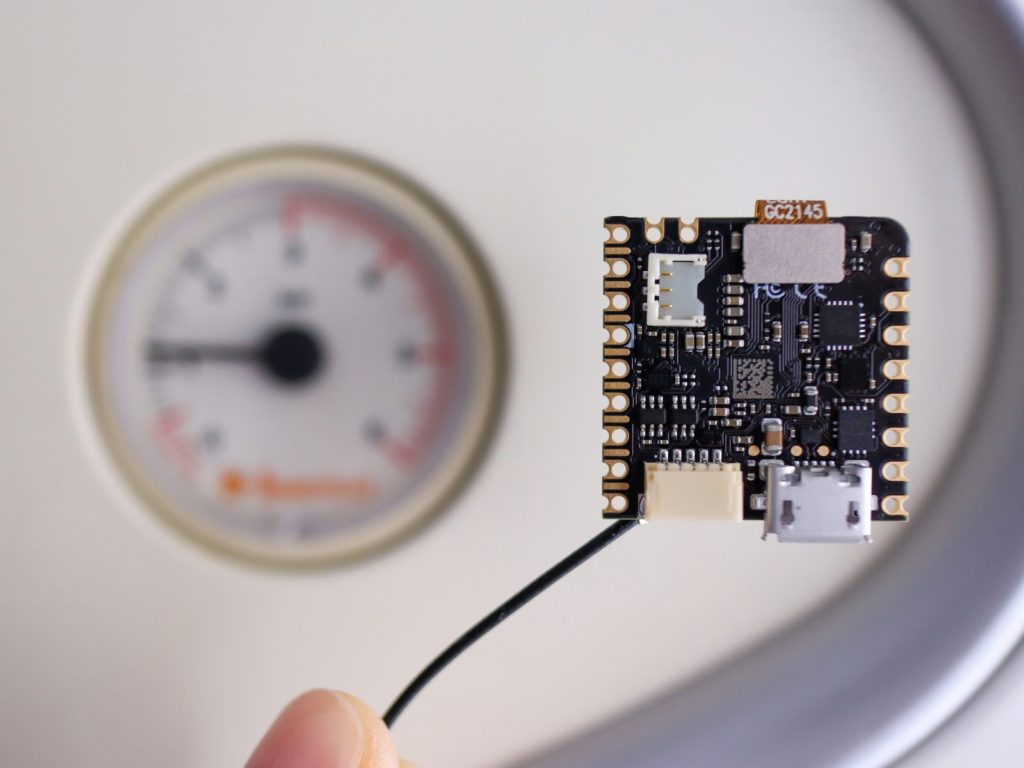

Reading analog gauges with the Nicla Vision

Reading Time: 2 minutes

Arduino Team, August 13th, 2022

Analog instruments are everywhere and used to measure pressure, temperature, power levels, and much more. With the advent of digital sensors, many of these quickly became obsolete, leaving the remaining ones requiring either conversion to a digital format or frequent human monitoring. However, the…

-

Meet Nikola, a camera-enabled smart companion robot

Reading Time: 2 minutes

Arduino Team, June 6th, 2022

For this year’s Embedded Vision Summit, Hackster.io’s Alex Glow created Nikola, a companion robot and successor to her previous Archimedes bot. This time, the goal was to embed a privacy-focused camera and microphone system as well as several other components that would increase its adorability. The vision system uses…

-

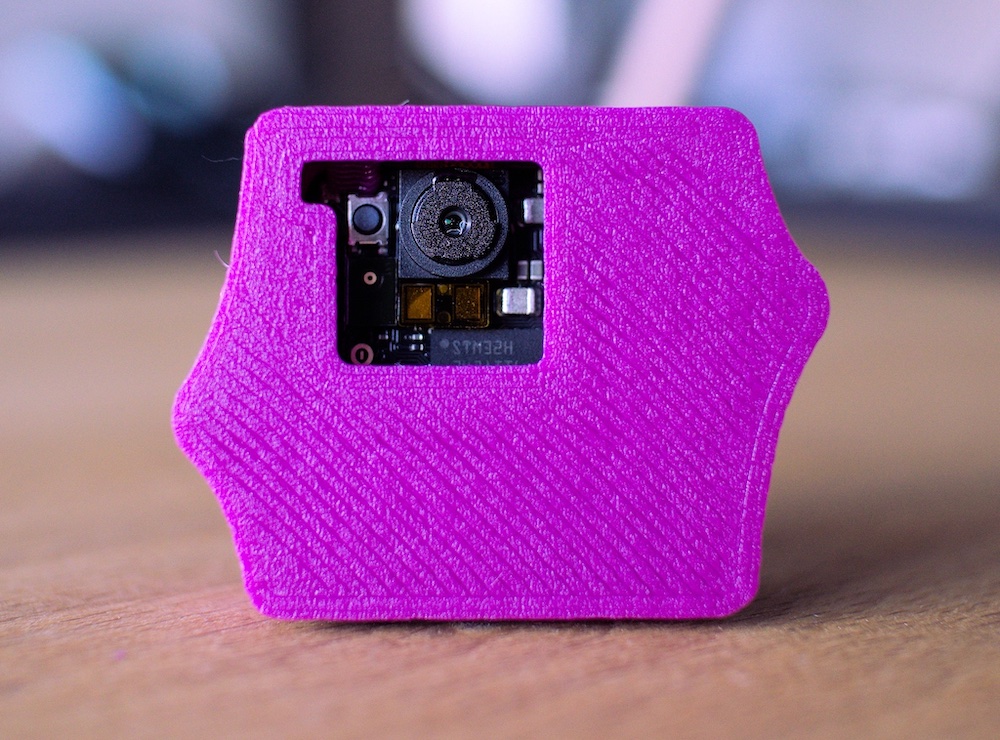

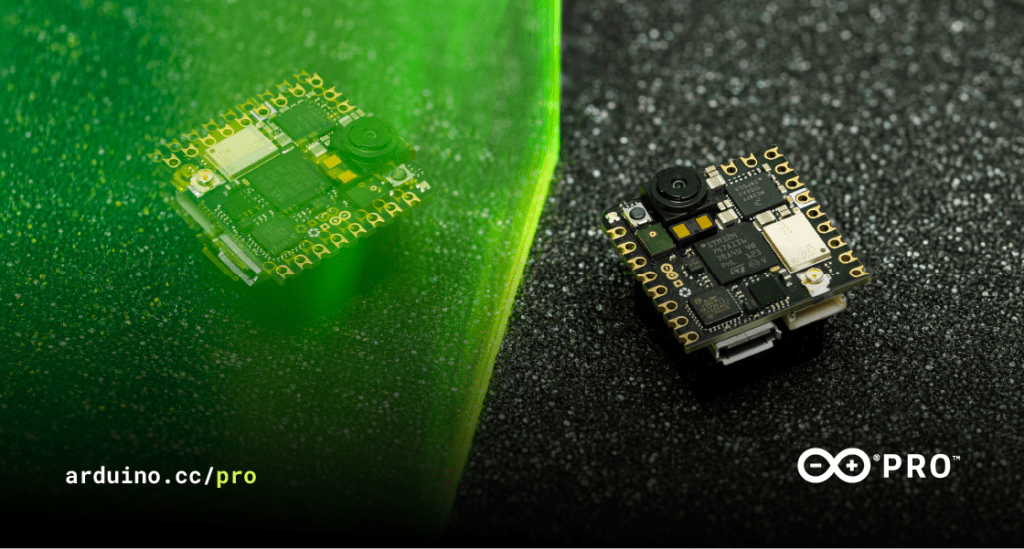

Meet the Nicla Vision: Love at first sight!

Reading Time: 3 minutes

Arduino Team, March 8th, 2022

We’re proud to announce a new addition to the Arduino ecosystem, the Nicla Vision. This is a brand-new, ready-to-use, 2MP standalone camera that lets you analyze and process images on the edge for advanced machine vision and edge computing applications. Now you can add image…