Tag: Nano 33 BLE Sense

-

This Arduino device knows how a bike is being ridden using tinyML

Reading Time: 2 minutes. Arduino Team, December 28th, 2021.

Fabio Antonini loves to ride his bike, and while nearly all bike computers offer information such as cadence, distance, speed, and elevation, they cannot tell whether the cyclist is sitting or standing at any given time. So, after doing some research, he came across…

-

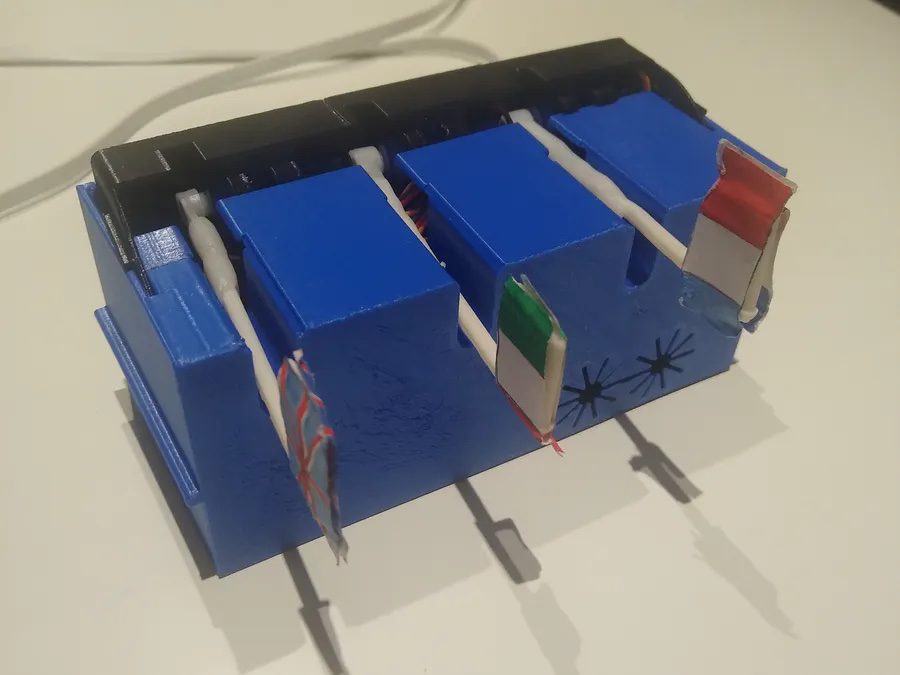

This Arduino device can detect which language is being spoken using tinyML

Reading Time: 2 minutes. Arduino Team, December 8th, 2021.

Although smartphone users have been able to quickly translate spoken words into nearly any modern language for years now, this feat has been quite tough to accomplish on small, memory-constrained microcontrollers. In response to this challenge, Hackster.io user Enzo decided to create a proof-of-concept project that demonstrated…

-

This tinyML system helps soothe your dog’s separation anxiety with sounds of your voice

Reading Time: 2 minutes. Arduino Team, November 17th, 2021.

Due to the ongoing pandemic, Nathaniel Felleke’s family dog, Clairette, had gotten used to having people around her all the time and thus developed separation anxiety when the family would leave the…

-

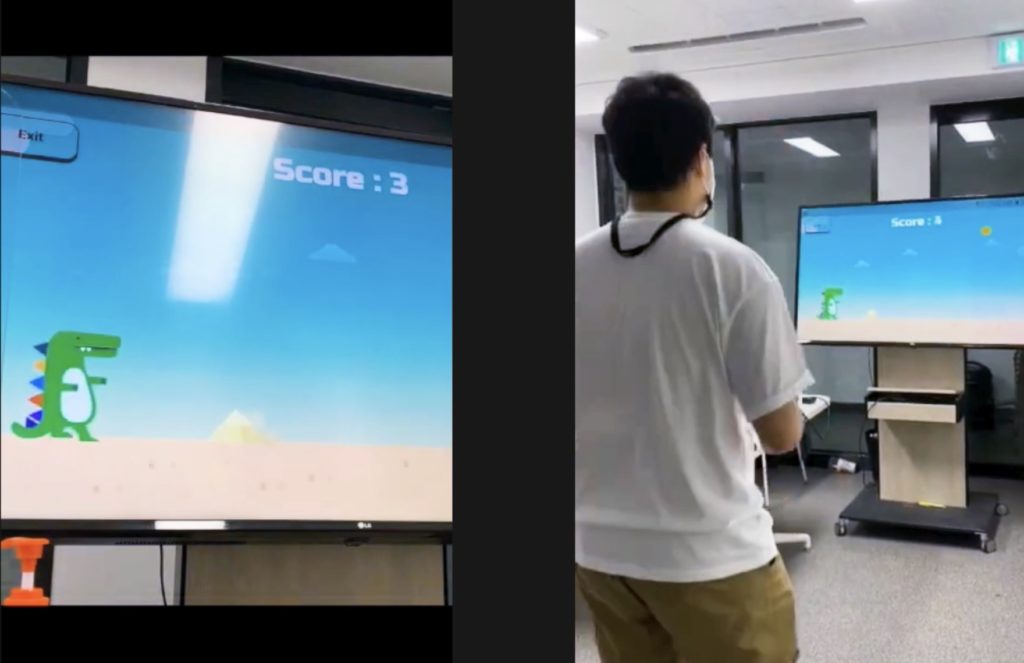

Move! makes burning calories a bit more fun

Reading Time: 2 minutes. Arduino Team, October 25th, 2021.

Gamifying exercise motivates people to take part in physical activities more often while distracting them with something fun. This inspired a team of students from Handong Global University in Pohang, South Korea to come up with a…

-

Mapping Dance syncs movement and stage lighting using tinyML

Reading Time: 2 minutes. Arduino Team, October 22nd, 2021.

Being able to add dynamic lighting and images that synchronize with a dancer is important to many performances, which rely on both music and visual effects to create the show. Eduardo Padrón aimed to do exactly that by monitoring a performer’s moves with an accelerometer and triggering the…

-

This tinyML device counts your squats while you focus on your form

Reading Time: 2 minutes. Arduino Team, October 22nd, 2021.

Getting in your daily exercise is vital to living a healthy life, and proper form when squatting goes a long way towards achieving that goal without the joint pain caused by incorrect technique. The Squats Counter is a device worn around the thigh that…

-

Use the Nano 33 BLE Sense’s IMU and gesture sensor to control a DJI Tello drone

Reading Time: 2 minutes. Arduino Team, September 8th, 2021.

Piloting a drone with something other than a set of virtual joysticks on a phone screen is exciting due to the endless possibilities. DJI’s Tello can do just this, as…

-

Monitor the pH levels of a hydroponic plant’s water supply with Arduino and tinyML

Reading Time: 2 minutes. Arduino Team, September 2nd, 2021.

Many plants are notorious for how picky they are about their environmental conditions. Having the wrong temperature, humidity, soil type, and even elevation can produce devastating effects. But none are perhaps as…

-

Predicting a lithium-ion battery’s life cycle with tinyML

Reading Time: 2 minutes. Arduino Team, August 24th, 2021.

Nothing is perhaps more frustrating than suddenly discovering your favorite battery-powered device has shut down due to a lack of charge, and because almost no one finds joy in calculating how long it will live based on current…

-

Power of Python for Arduino Nano RP2040 Connect and Nano 33 BLE

Reading Time: 3 minutes.

Python support for three of the hottest Arduino boards out there is now yours. Through our partnership with OpenMV, the Nano RP2040 Connect, Nano 33 BLE and Nano 33 BLE Sense can now be programmed with the popular MicroPython language. This means you get OpenMV’s powerful computer vision and machine learning capabilities…

-

This lamp consists of 122 LED-lit domes on a sphere, controllable over Bluetooth

Reading Time: 2 minutes. Arduino Team, August 11th, 2021.

There are already countless projects that use individually addressable RGB LED strips in some way or another, but most of them lack a “wow” factor. This is one problem that Philipp Niedermayer’s Sphere2 Lamp does not suffer from, as it is a giant sphere composed of 122 smaller domes…

-

Meet your new table tennis coach, a tinyML-powered paddle!

Reading Time: 2 minutes. Arduino Team, July 23rd, 2021.

Shortly after the COVID-19 pandemic began, Samuel Alexander and his housemates purchased a ping pong set and began to play a lot. Becoming quite good at the game, Alexander realized that his style was not consistent with how more professional table tennis players hit the ball, as…

-

The Snoring Guardian listens while you sleep and vibrates when you start to snore

Reading Time: 2 minutes. Arduino Team, July 20th, 2021.

Snoring is an annoying problem that affects nearly half of all adults and can cause others to lose sleep. Additionally, the ailment can be a symptom of a more serious underlying condition, so being able to know exactly when it occurs could be lifesaving. To help…

-

VoiceTurn is a voice-controlled turn signal system for safer bike rides

Reading Time: 2 minutes. Arduino Team, July 19th, 2021.

Whether commuting to work or simply having fun around town, riding a bike can be a great way to get exercise while also enjoying the scenery. However, riding on the road presents a danger, as drivers, other cyclists, or pedestrians might not be paying…

-

‘Droop, There It Is!’ is a smart irrigation system that uses ML to visually diagnose drought stress

Reading Time: 2 minutes. Arduino Team, July 13th, 2021.

Throughout the day, as the sun evaporates the water from a plant’s leaves via a process called transpiration, observers will notice that they tend to get a little bit droopy.…

-

This wearable device sends an alert whenever it detects a fall

Reading Time: 2 minutes. Arduino Team, July 2nd, 2021.

A dangerous fall can happen to anyone, but falls are particularly dangerous for the elderly, as that demographic might not have effective ways to get help when needed. Rather than having to purchase an expensive device that costs up to $100 per month to use, Nathaniel F. on…

-

This low-cost device uses tinyML on Arduino to detect respiratory diseases in pigs

Reading Time: 2 minutes. Arduino Team, June 30th, 2021.

One major drawback to the large-scale farming of animals for meat consumption is the tendency for diseases to spread rapidly and decimate the population. This widespread issue is what drove Clinton Oduor to build a tinyML-powered device that can perform precision livestock farming tasks intelligently. His project works by continuously…

-

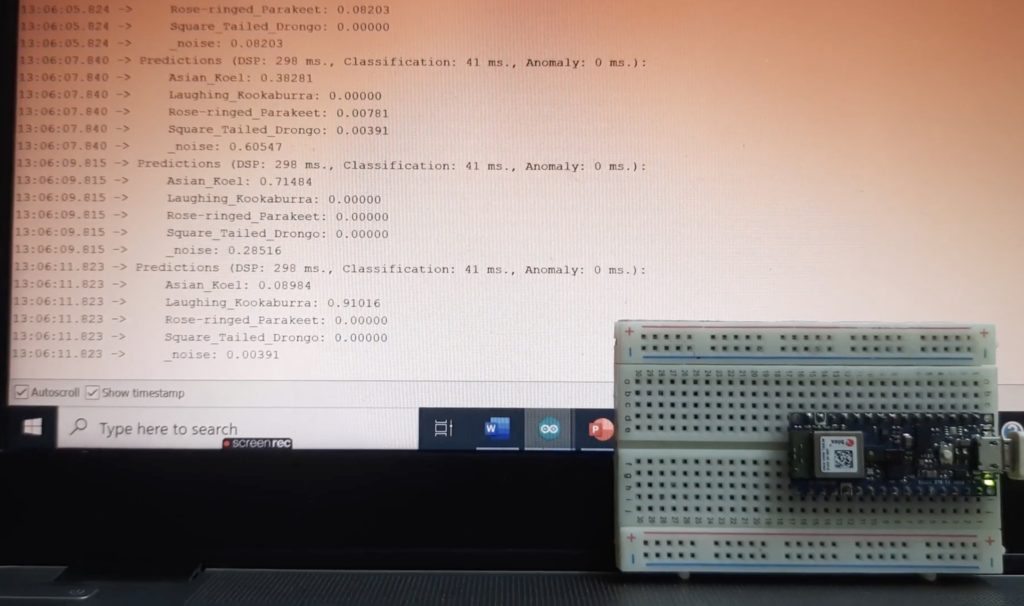

Use tinyML on the Nano 33 BLE Sense to classify different bird calls

Reading Time: 2 minutes. Arduino Team, June 28th, 2021.

There are thousands of bird species in the world, with many unique ones living in various areas. Developers Errol Joshua, Mahesh Nayak, Ajith K J, and Supriya Nickam wanted to build a simple device that would allow them to automatically recognize the feathered friends…

-

This pocket-sized device uses tinyML to analyze a COVID-19 patient’s health conditions

Reading Time: 2 minutes. Arduino Team, June 21st, 2021.

In light of the ongoing COVID-19 pandemic, being able to quickly determine a person’s current health status is very important. This is why Manivannan S wanted to build his very own COVID Patient Health Assessment Device that could take…

-

PUPPI is a tinyML device designed to interpret your dog’s mood via sound analysis

Reading Time: 2 minutes. Arduino Team, June 18th, 2021.

Dogs are not known to be the most advanced communicators, so figuring out what they want based on a few noises and pleading looks can be tough. This problem is what inspired…

-

This system uses machine learning and haptic feedback to enable deaf parents to communicate with their kids

Reading Time: 2 minutes. Arduino Team, June 10th, 2021.

For the hearing impaired, communicating with others can be a real challenge. This is especially problematic when a deaf parent is trying to understand what their child needs and the child is too young to learn sign language. Mithun Das was able to come up…

-

Epilet is a tinyML-powered bracelet for detecting epileptic seizures

Reading Time: 2 minutes. Arduino Team, June 8th, 2021.

Epilepsy can be a terrifying and dangerous condition, as sufferers often experience seizures that can result in a loss of motor control and even consciousness, which is why one team of developers wanted to do something about it. They came up with a simple yet…