Tag: mega

-

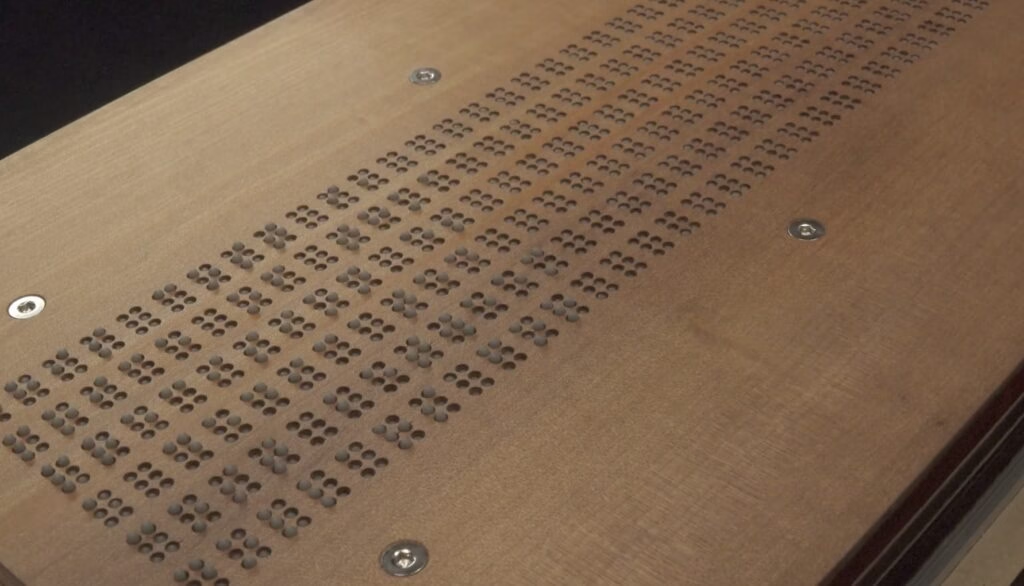

Novel mechanism makes refreshable braille displays practical

Reading Time: 2 minutes

Tactile displays, particularly for use as refreshable braille displays, have always been a challenge to design and fabricate, as they require so many moving parts. Every dot needs its own actuated mechanism and there need to be dozens or hundreds of dots squeezed into a small space. Conventional micro actuators…

-

SoilTile turns earth into an oversized touchpad

Reading Time: 2 minutes

We’re used to interacting with electronic technology that is cold, rigid, and overwhelmingly artificial. The device you’re reading this on doesn’t resemble anything found in nature and, consciously or not, you see it as something separate from the natural world. But what if the dividing boundary was less distinct? How would that…

-

This 1D camera captures 2D images of things it can’t see

Reading Time: 2 minutes

Yes, the title of this article sounds pretty crazy. But it is not only entirely possible through the lens of physics, it is also practical to achieve in the real world using affordable parts. Jon Bumstead pulled it off with an Arduino, a photoresistor, and an inexpensive portable projector. Today’s digital…

-

This novel wearable provides touchless haptic feedback for VR typing

Reading Time: 2 minutes

One reason that fans prefer mechanical keyboards over membrane alternatives is that mechanical key switches provide a very noticeable tactile sensation at the moment a key press registers. Whether consciously or not, users notice that and stop pressing the key all the way through the maximum travel, reducing strain and RSI…

-

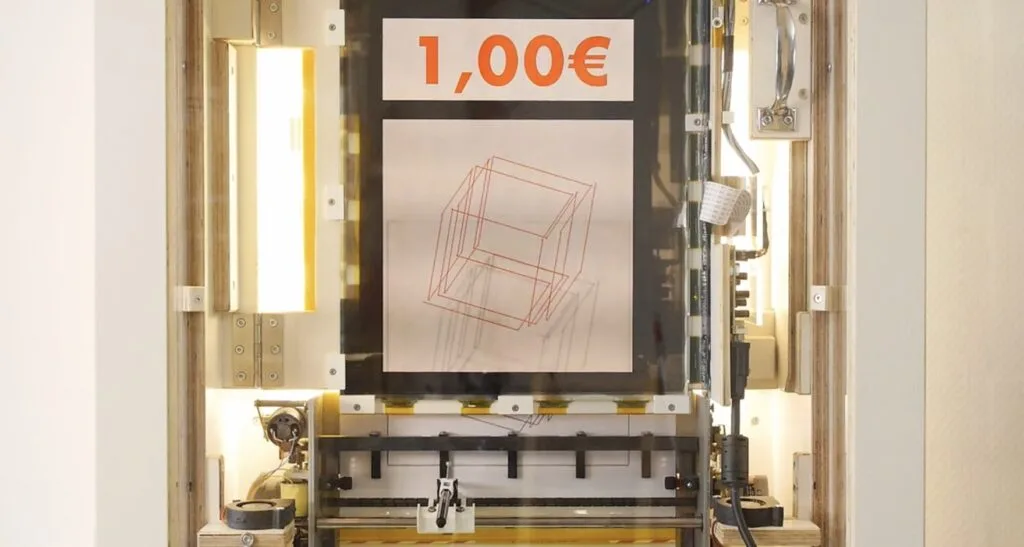

This vending machine draws generative art for just a euro

Reading Time: 2 minutes

If you hear the term “generative art” today, you probably subconsciously add “AI” to the beginning without even thinking about it. But generative art techniques existed long before modern AI came along; they even predate digital computing altogether. Despite that long history, generative art remains interesting as consumers attempt to identify…

-

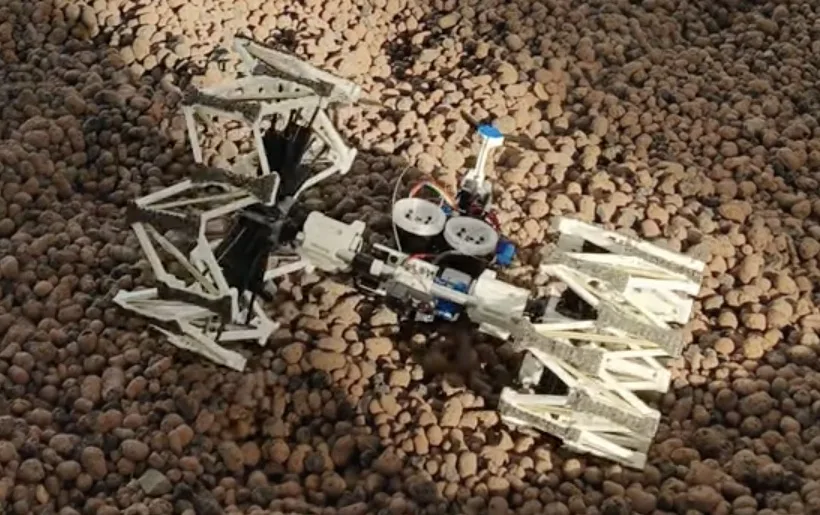

This robot can dynamically change its wheel diameter to suit the terrain

Reading Time: 2 minutes

A vehicle’s wheel diameter has a dramatic effect on several aspects of performance. The most obvious is gearing, with larger wheels increasing the ultimate gear ratio, though transmission and transfer case gearing can counteract that. But wheel size also affects mobility over terrain, which is why Gourav Moger and Huseyin Atakan…

-

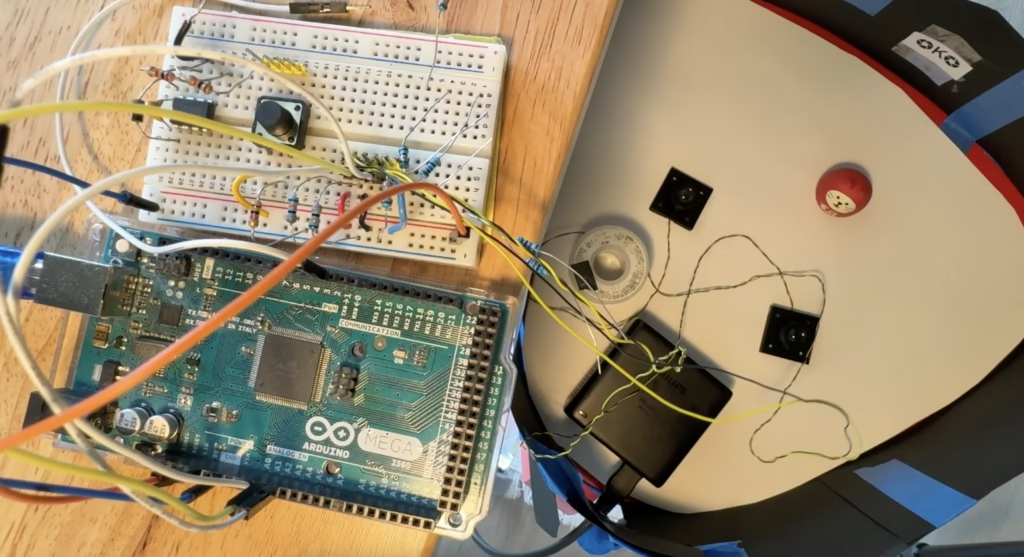

See how this homemade spectrometer analyzes substances with an Arduino Mega

Reading Time: 2 minutes

Materials, when exposed to light, reflect or absorb certain portions of the electromagnetic spectrum, which can give valuable information about their chemical or physical compositions. Traditional setups use a single lamp to emit white light before it is split apart into a spectrum of colors via a system of prisms, mirrors,…

-

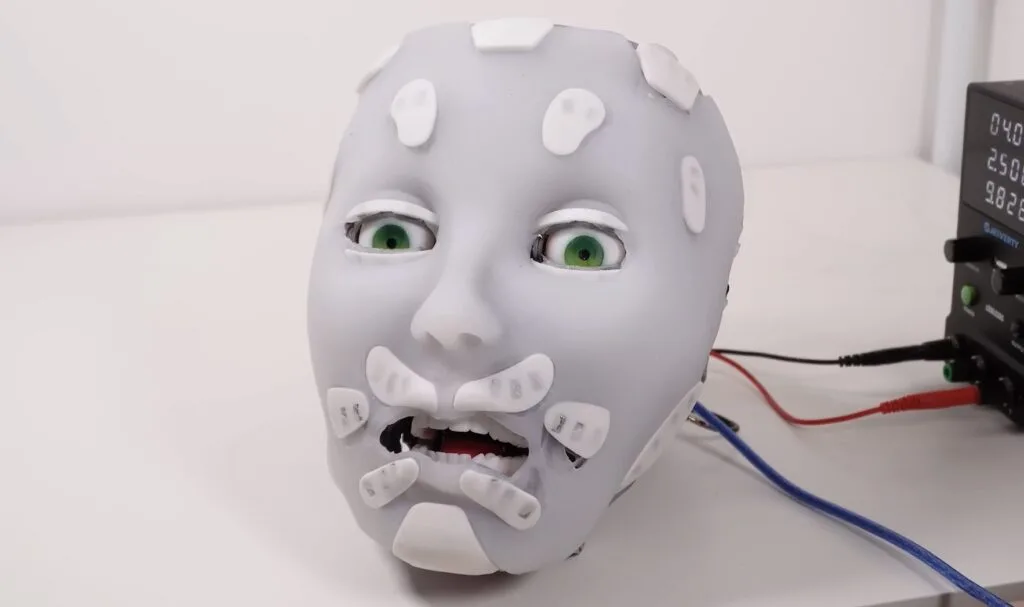

This frighteningly realistic animatronic head features expressive silicone skin

Reading Time: 2 minutes

The human face is remarkably complex, with 43 different muscles contorting the skin in all kinds of ways. Some of that is utilitarian: your jaw muscles are good for chewing, after all. But a lot of it seems to be the result of evolution giving us fantastic non-verbal communication abilities. That…

-

Zoo elephants get a musical toy to enrich their lives

Reading Time: 2 minutes

Everyone loves looking at exotic animals and most of us only get to do that at zoos. But, of course, there is a lot to be said about the morality of keeping those animals in captivity. So, good zoos put a lot of effort into keeping their animals healthy and happy. For…

-

It’s silver, it’s green, it’s the Batteryrunner! An Arduino-powered, fully custom electric car

Reading Time: 5 minutes

Inventor Charly Bosch and his daughter Leonie have crafted something truly remarkable: a fully electric, Arduino-powered car that’s as innovative as it is sustainable. Called the Batteryrunner, this vehicle is designed with a focus on environmental impact, simplicity, and custom craftsmanship. Get ready to be inspired by a car that embodies the…

-

Exploring fungal intelligence with biohybrid robots powered by Arduino

Reading Time: 3 minutes

At Cornell University, Dr. Anand Kumar Mishra and his team have been conducting groundbreaking research that brings together the fields of robotics, biology, and engineering. Their recent experiments, published in Science, explore how fungal mycelia can be used to control robots. The team has successfully created biohybrid robots that move based on…

-

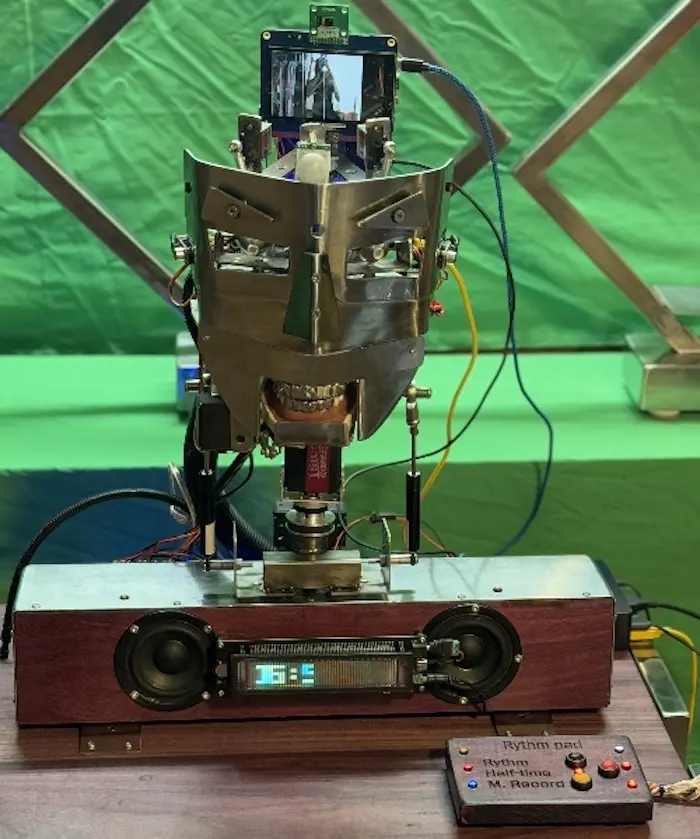

This perplexing robotic performer operates under the control of three different Arduino boards

Reading Time: 2 minutes

Every decade or two, humanity seems to develop a renewed interest in humanoid robots and their potential within our world. Because the practical applications are actually pretty limited (given the high cost), we inevitably begin to consider how those robots might function as entertainment. But Jon Hamilton did more than just wonder,…

-

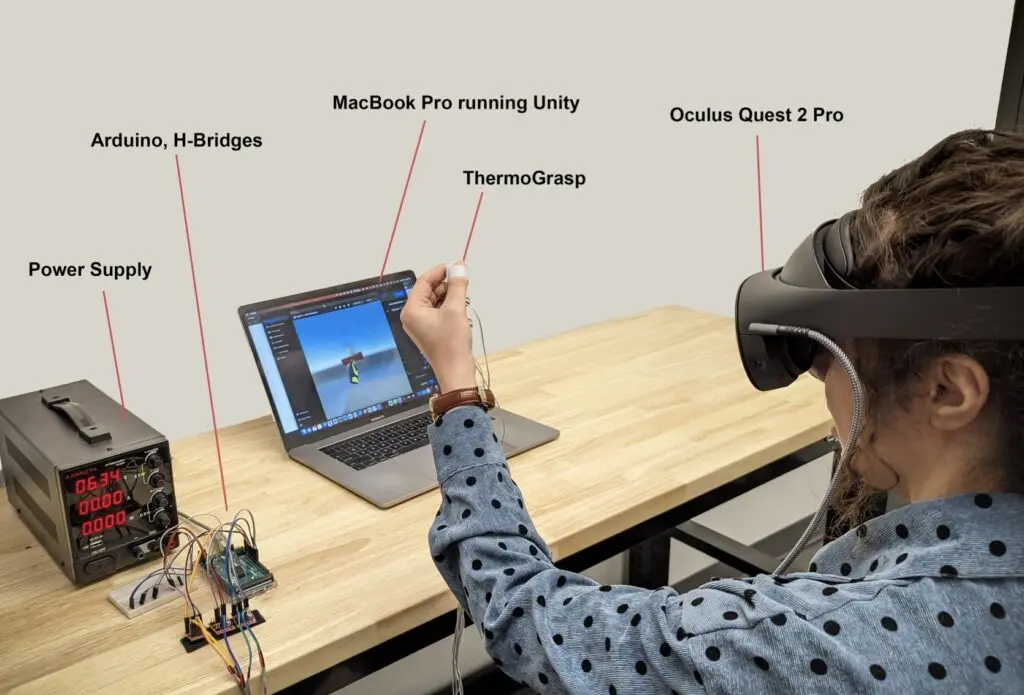

ThermoGrasp brings thermal feedback to virtual reality

Reading Time: 2 minutes

Imagine playing Half-Life: Alyx and feeling the gun heat up in your hand as you take down The Combine. Or operating a robot through augmented reality and feeling coldness on your fingers when you get close to exceeding the robot’s limits. A prototype device called ThermoGrasp brings that thermal feedback to the mixed reality…

-

Venderoo is an Arduino Mega-powered DIY vending machine

Reading Time: 2 minutes

For now-college student Joel Grayson, making something that combined his interests in mechanics, electronics, and programming while being simultaneously useful to those around him was a longtime goal. His recent Venderoo project is exactly that, as the creatively named vending machine was designed and built from the ground up to dispense snacks in…

-

Explore underwater with this Arduino-controlled DIY ROV

Reading Time: 2 minutes

Who doesn’t want to explore underwater? To take a journey beneath the surface of a lake or even the ocean? But a remotely operated vehicle (ROV), which is the kind of robot you’d use for such an adventure, isn’t exactly the kind of thing you’ll find on the shelf at your local…

-

This ‘smocking display’ adds data physicalization to clothing

Reading Time: 2 minutes

Elastic use in the textile industry is relatively recent. So, what did garment makers do before elastic came along? They relied on smocking, which is a technique for bunching up fabric so that it can stretch to better fit the form of a body. Now a team of computer science researchers from…

-

Coolest controllers ever? Icy gamepads melt in users’ hands

Reading Time: 2 minutes

Nintendo’s Joy-Con controller system is very innovative and generally well-regarded, with one major exception: stick drift. That’s a reliability issue that eventually affects a large percentage of Joy-Cons, to the frustration of gamers. But what if that was intentional and gamepads were designed to deteriorate in short order? That’s the idea behind…

-

A desktop-sized DIY vending machine for your room

Reading Time: 2 minutes

Have you ever wanted your very own vending machine? If so, you likely found that they’re expensive and too bulky to fit in most homes. But now you can experience vending bliss thanks to this miniature vending machine designed by m22pj, which you can craft yourself using an Arduino and other materials…

-

Massive tentacle robot draws massive attention at EMF Camp

Reading Time: 2 minutes

Most of the robots we feature only require a single Arduino board, because one Arduino can control several motors and monitor a bunch of sensors. But what if the robot is enormous and the motors are far apart? James Bruton found himself in that situation when he constructed this huge “tentacle” robot…

-

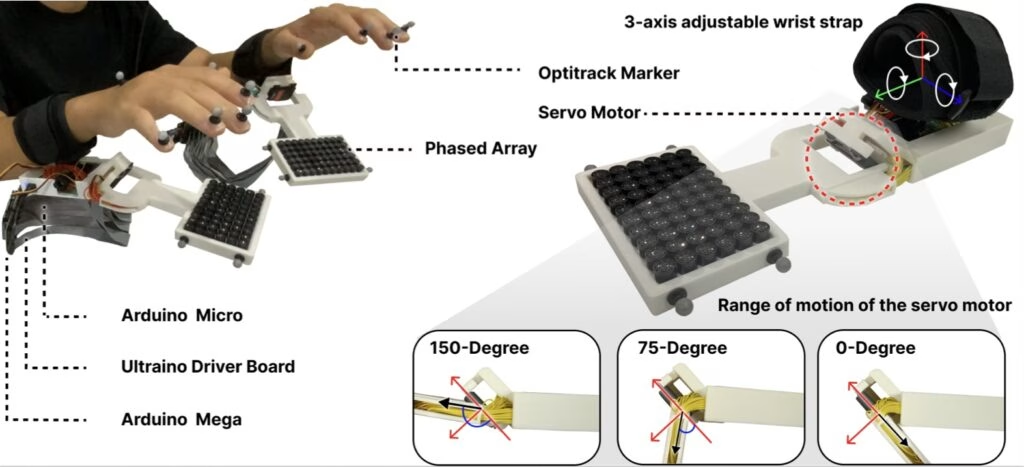

Check out these Arduino-powered research projects from CHI 2024

Reading Time: 4 minutes

Held in Hawaii this year, the Association for Computing Machinery’s (ACM) annual Conference on Human Factors in Computing Systems (CHI) focuses on the latest developments in human-computer interaction. Students from universities all across the world attended the event and showcased how their devices and control systems could revolutionize how…

-

Seaside Sweeper keeps beaches pristine

Reading Time: 2 minutes

Without anyone caring for them, beaches quickly become trash-covered swaths of disappointment. That care is necessary to maintain the beautiful sandy havens that we all want to enjoy, but it requires a lot of labor. A capstone team of students from the University of Colorado Boulder’s Creative Technology & Design program recognized…

-

Autochef-9000 can cook an entire breakfast automatically

Reading Time: 2 minutes

Fans of Wallace and Gromit will all remember two things about the franchise: the sort of creepy (but mostly delightful) stop-motion animation and Wallace’s Rube Goldberg-esque inventions. YouTuber Gregulations was inspired by Wallace’s Autochef breakfast-cooking contraption and decided to build his own robot to prepare morning meals. Gregulations wanted his Autochef-9000 to…