Tag: LiDAR

-

Teaching an Arduino UNO R4-powered robot to navigate obstacles autonomously

The rapid rise of edge AI capabilities on embedded targets has proven that relatively low-resource microcontrollers are capable of some incredible things. And following the recent release of the Arduino UNO R4 with its Renesas RA4M1 processor, the ceiling has gotten even higher, as YouTuber Nikodem Bartnik has demonstrated with his lidar-equipped mobile robot. Bartnik’s…

-

See how Nikodem Bartnik integrated LIDAR room mapping into his DIY robotics platform

Arduino Team, January 31st, 2022. As part of his ongoing autonomous robot project, YouTuber Nikodem Bartnik wanted to add LIDAR mapping/navigation functionality so that his device could see the world in much greater resolution and actively avoid obstacles. In short, LIDAR works by sending out short pulses of invisible light and measuring how much time…
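The time-of-flight principle described in this excerpt reduces to one relation: the light pulse travels to the obstacle and back, so the distance is half the round-trip time multiplied by the speed of light, d = c·t/2. Below is a minimal Python sketch, assuming the round-trip time has already been measured in seconds; the function name `tof_distance_m` is hypothetical and not taken from Bartnik's project.

```python
# Speed of light in vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Hypothetical helper: distance to a target from a pulse's round-trip time.

    The pulse covers the sensor-to-target path twice (out and back),
    so the one-way distance is c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A 20 ns round trip corresponds to a target roughly 3 m away.
    print(tof_distance_m(20e-9))
```

The tiny time scales involved (about 6.7 ns per meter of round trip) are why LiDAR modules do this timing in dedicated hardware rather than in a general-purpose microcontroller loop.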

-

Making a mini 360° LiDAR for $40

Arduino Team, December 21st, 2020. LiDAR (or “light detection and ranging”) sensors are all the rage these days, from their potential uses in autonomous vehicles to their implementation on the iPhone 12. As cool as they are, these (traditionally) spinning sensors tend to be…
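A spinning 360° rangefinder reports one distance per angle step, and converting those polar readings into x/y points in the sensor's frame is the usual first step toward drawing a room map. Here is a minimal sketch, assuming readings arrive as hypothetical `(angle in degrees, distance in meters)` pairs; it is not the firmware from the $40 build.

```python
import math


def scan_to_points(readings):
    """Convert (angle_deg, distance_m) pairs from a rotating rangefinder
    into (x, y) points in the sensor frame.

    Angle 0 points along +x; angles increase counterclockwise.
    Readings with a non-positive distance (no return) are skipped.
    """
    points = []
    for angle_deg, distance_m in readings:
        if distance_m <= 0:
            continue  # treat zero or negative distance as "no echo"
        theta = math.radians(angle_deg)
        points.append((distance_m * math.cos(theta), distance_m * math.sin(theta)))
    return points


if __name__ == "__main__":
    scan = [(0, 1.0), (90, 2.0), (180, 0.0), (270, 1.5)]
    for x, y in scan_to_points(scan):
        print(f"{x:.2f}, {y:.2f}")
```

Skipping zero-distance readings is a design choice for this sketch; real sensors often flag missing returns differently.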

-

DolphinView headset lets you see the world like Flipper!

Arduino Team, July 26th, 2018. Dolphins are not only amazing swimmers and extremely intelligent but can also navigate their surroundings using echolocation. While echolocation is extremely useful in murky water, Andrew Thaler decided to make a device that would enable him to observe his (normally…

-

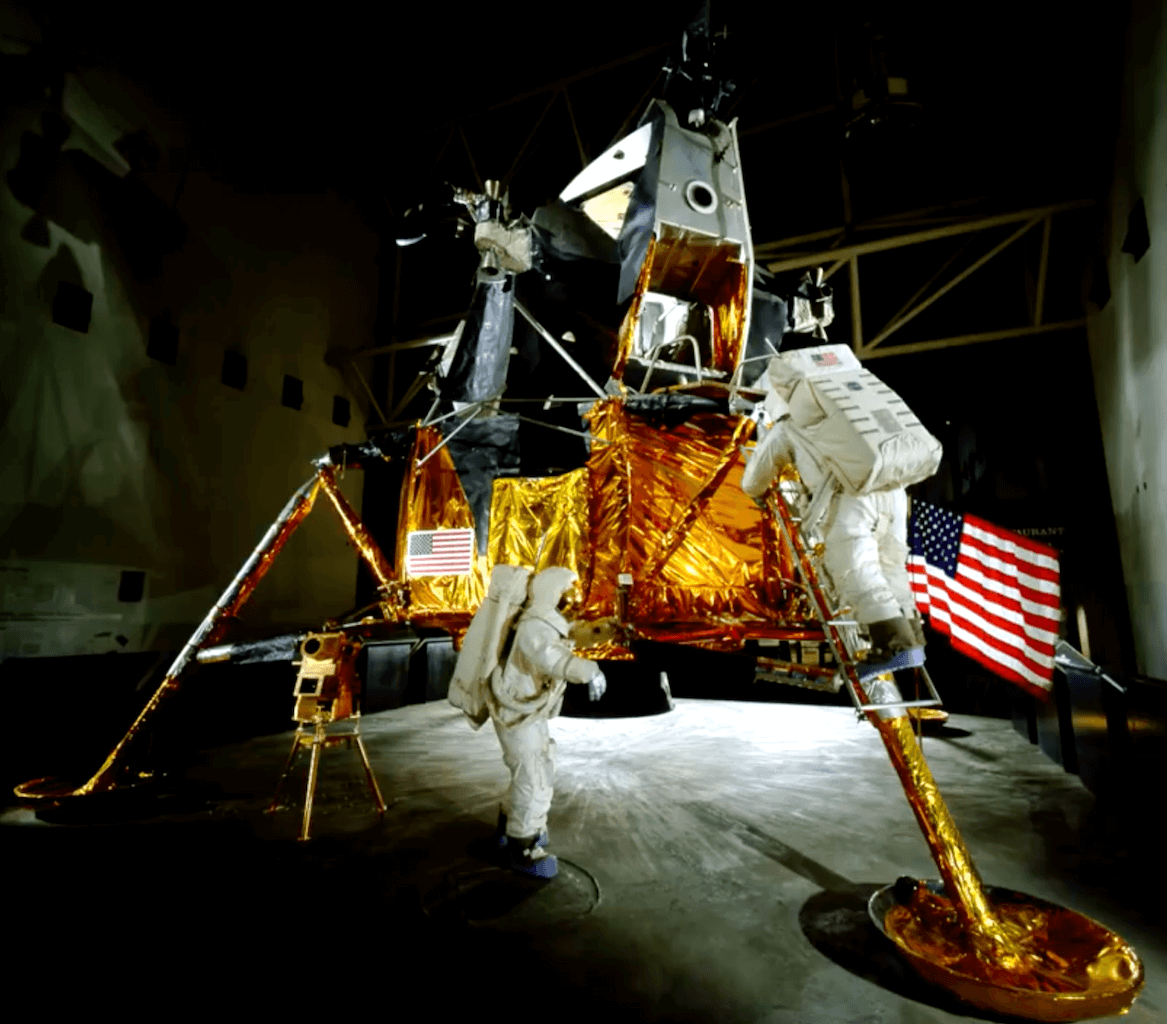

Lunar landing conspiracy put to rest(?) with LIDAR

Arduino Team, February 20th, 2018. On July 20th, 1969, man first set foot on the moon with the Apollo 11 mission, or so they say. If it was faked, or so the theory goes, one would think that there were a few details…

-

LiDAR Scanning Technology Helps Archaeologists Uncover Forgotten Cities

LiDAR scanning technology offers tremendous potential to help archaeologists discover lost cities across the world. Archaeologists are able to speed up their process of discovery and exploration using a high-tech laser technique originally developed to scan the surface of the moon. The technology is based on light detection and ranging scanning (LiDAR)…