Tag: Gesture-Controlled Drone


Use the Nano 33 BLE Sense’s IMU and gesture sensor to control a DJI Tello drone

Reading Time: 2 minutes

Arduino Team, September 8th, 2021

Piloting a drone with something other than a set of virtual joysticks on a phone screen is exciting due to the endless possibilities. DJI’s Tello can do just this, as…


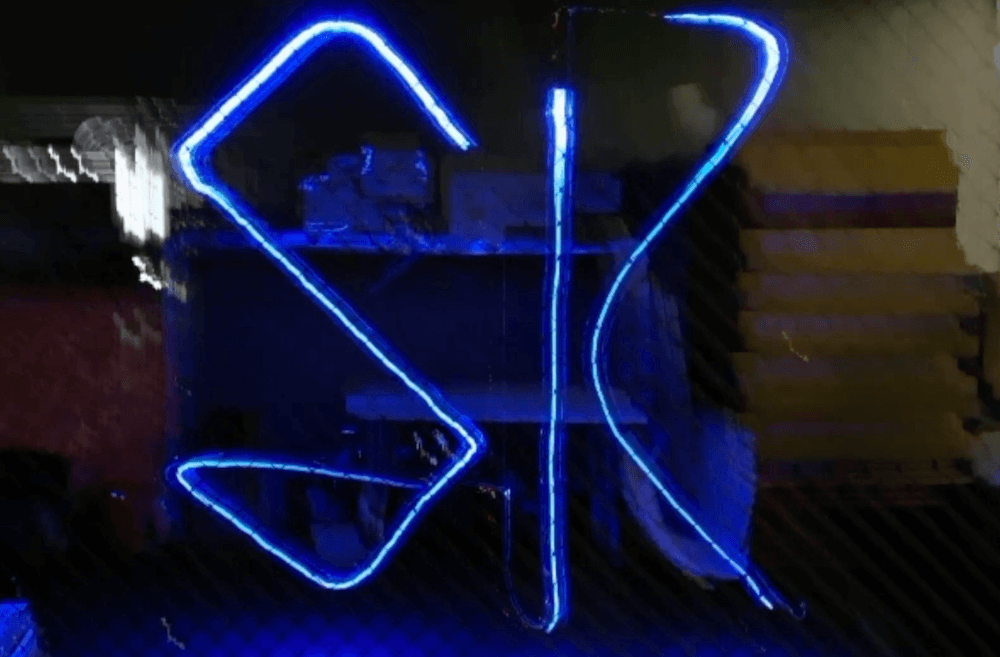

Light painting with a gesture-controlled drone

Reading Time: < 1 minute

Arduino Team, October 9th, 2020

Researchers at the Skolkovo Institute of Science and Technology (Skoltech) in Moscow, Russia have come up with a novel way to interface with a drone via hand movements. As shown in the video below, the device can be used to create…