Tag: Gesture Control

-

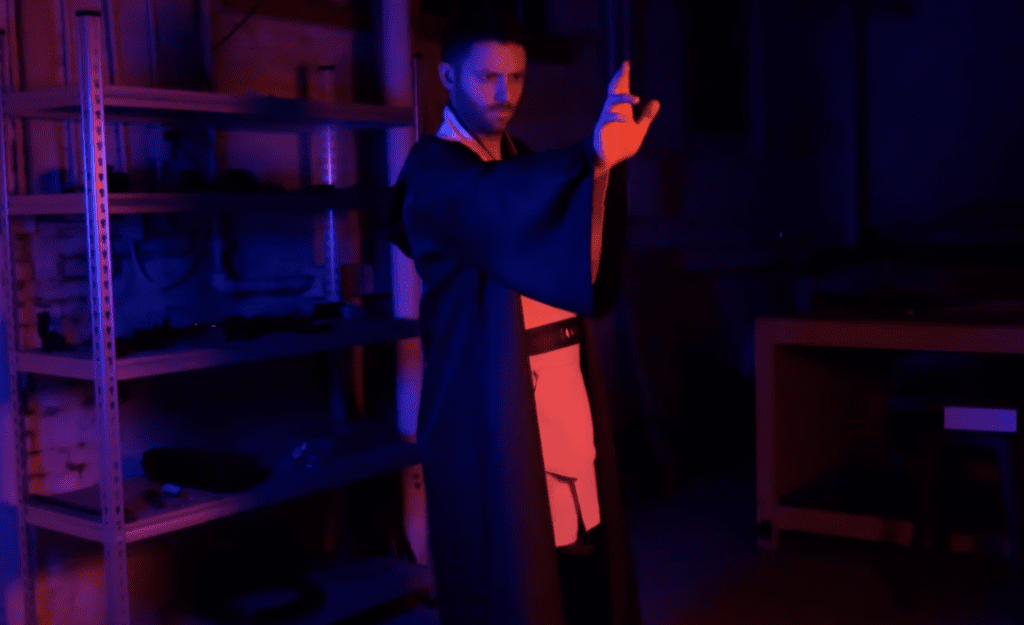

Using The Force to open a door

Reading Time: 2 minutes

Arduino Team, May 28th, 2022

In the Star Wars universe, the Jedi and Sith use the Force for battle and mayhem. In the real world, though, people would use the Force for far more mundane everyday tasks. Obi-Wan even does this in the prequel trilogy when he closes a door…

-

This MP3 player is controlled with a twirl of your finger and wave of your hand

Reading Time: 2 minutes

Arduino Team, November 9th, 2021

The classic MP3 player was a truly innovative device for its time. However, with the advent of modern smartphones and other do-it-all gadgets, such players have largely fallen by the wayside. To add a new twist, Norbert Zare decided to implement an MP3 player that not only responds to…

-

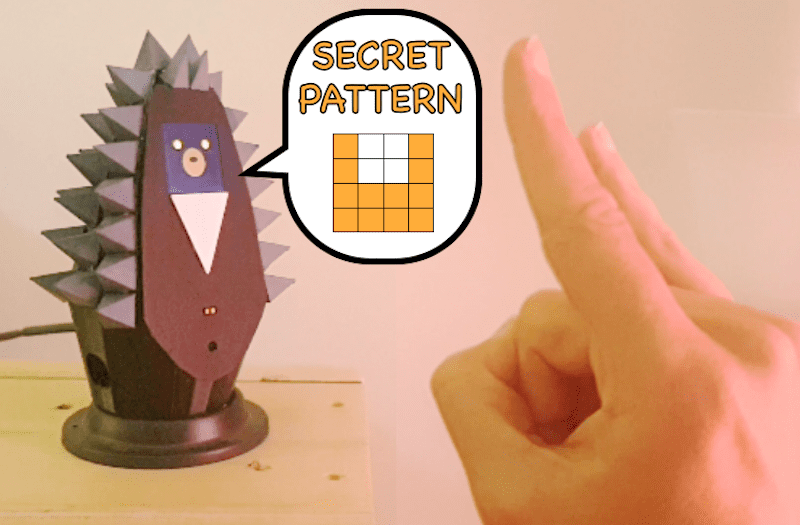

Meet Grumpy Hedgehog, an adorable gesture-sensing companion

Reading Time: 2 minutes

Arduino Team, November 3rd, 2021

Detecting shapes and gestures has traditionally been performed by camera systems due to their large arrays of pixels. However, Jean Peradel has come up with a method that uses cheap time-of-flight (ToF) sensors to sense both objects and movement over time. Better yet, his entire project is housed within a…

-

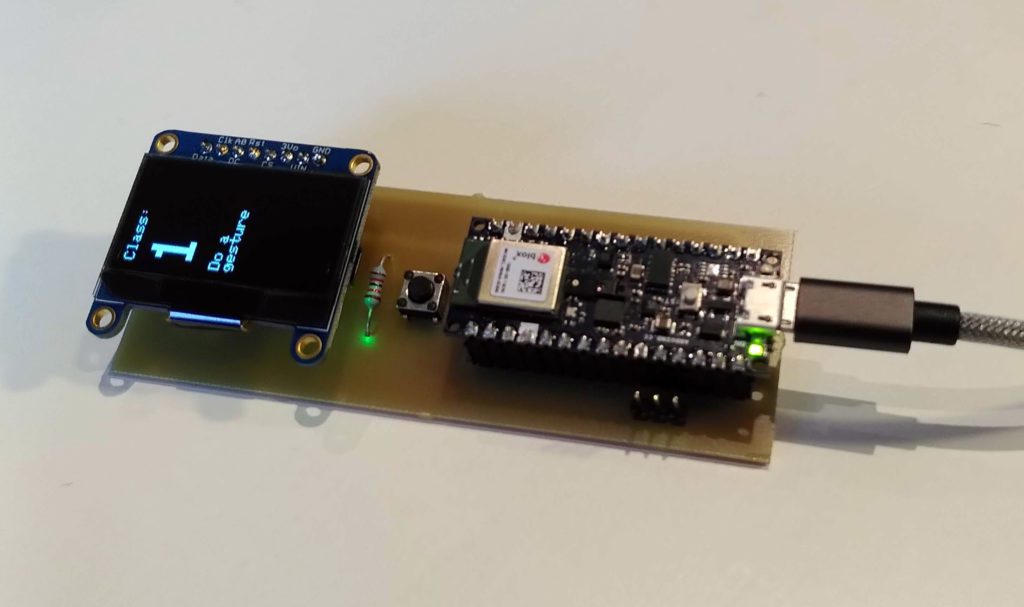

Customizable artificial intelligence and gesture recognition

Reading Time: 2 minutes

Arduino Team, April 15th, 2021

In many respects, we think of artificial intelligence as being all-encompassing: one AI will do any task we ask of it. But in reality, even when AI reaches the advanced levels we envision, it won't automatically be able to do everything. The Fraunhofer Institute for…

-

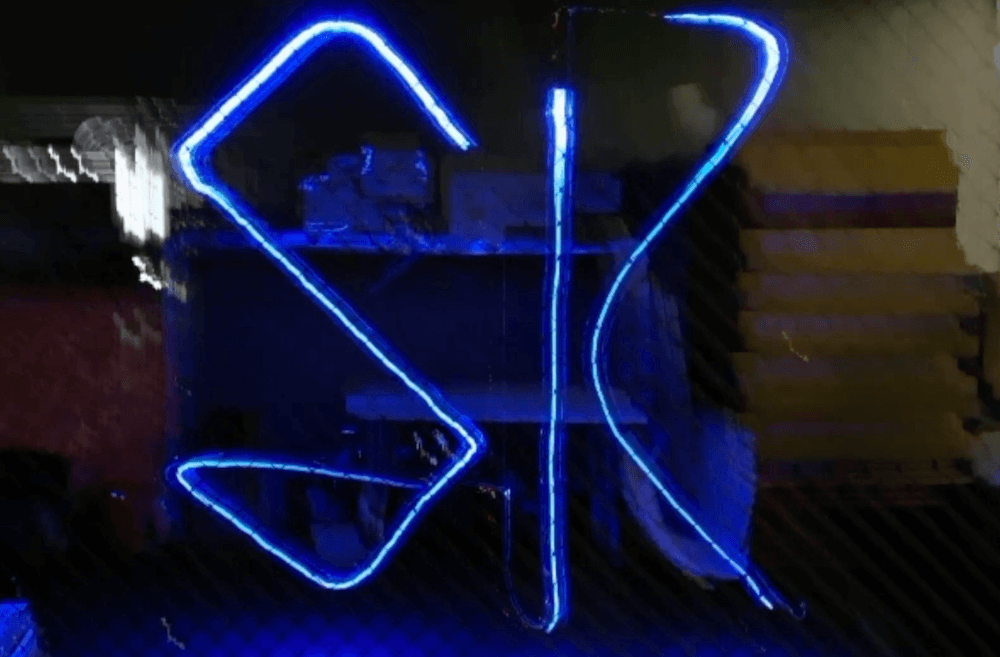

Light painting with a gesture-controlled drone

Reading Time: < 1 minute

Arduino Team, October 9th, 2020

Researchers at the Skolkovo Institute of Science and Technology (Skoltech) in Moscow, Russia, have come up with a novel way to interface with a drone via hand movements. As shown in the video below, the device can be used to create…

-

GesturePod is a clip-on smartphone interface for the visually impaired

Reading Time: 2 minutes

Arduino Team, November 6th, 2019

Smartphones have become a part of our day-to-day lives, but for those with visual impairments, accessing one can be a challenge. This can be especially difficult if one is using a cane that must be put…