Tag: conservation

-

Ultrasonically detect bats with Raspberry Pi

Reading Time: 3 minutes

Welcome to October, the month in which spiderwebs become decor and anything vaguely gruesome is considered ‘seasonal’. Such as bats. Bats are in fact cute, furry creatures, but as they are part of the ‘Halloweeny animal’ canon, I have a perfect excuse to sing their praises. SEE? Baby bats wrapped up cute…

-

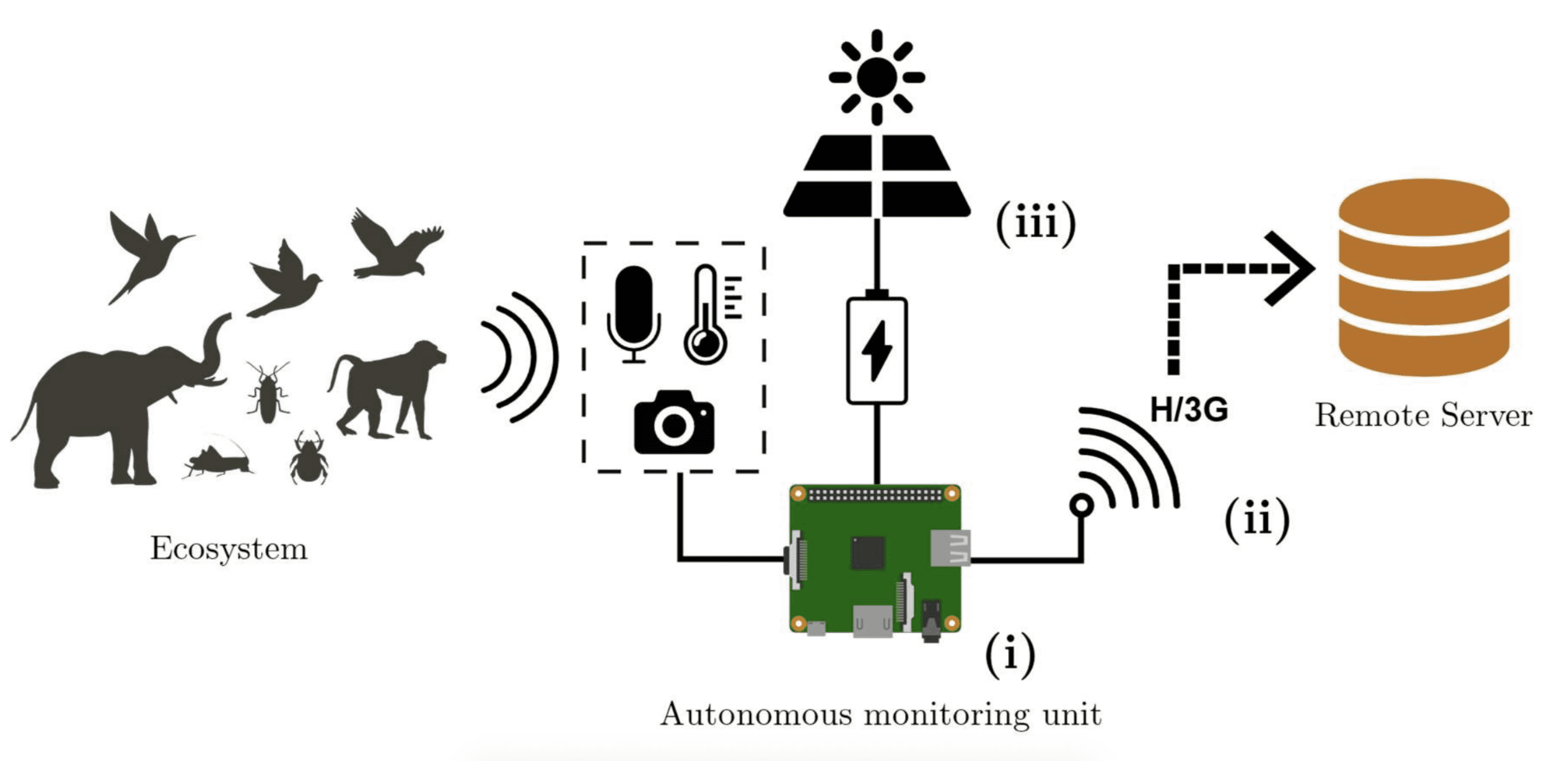

Raspberry Pi listening posts ‘hear’ the Borneo rainforest

Reading Time: 2 minutes

These award-winning, solar-powered audio recorders, built on Raspberry Pi, have been installed in the Borneo rainforest so researchers can listen to the local ecosystem 24/7. The health of a forest ecosystem can often be gauged by how much noise it creates, as this signals how many species are around. And you…

-

Clean up the planet with awesome robot arms in Trash Rage from Giant Lazer

Reading Time: 8 minutes

VR has the power to educate as well as entertain, but designing experiences that do both successfully is easier said than done. Luckily, the team over at Giant Lazer were more than up to the task when they created the sci-fi arcade experience Trash Rage. Tasked with cleaning up a planet ravaged…

-

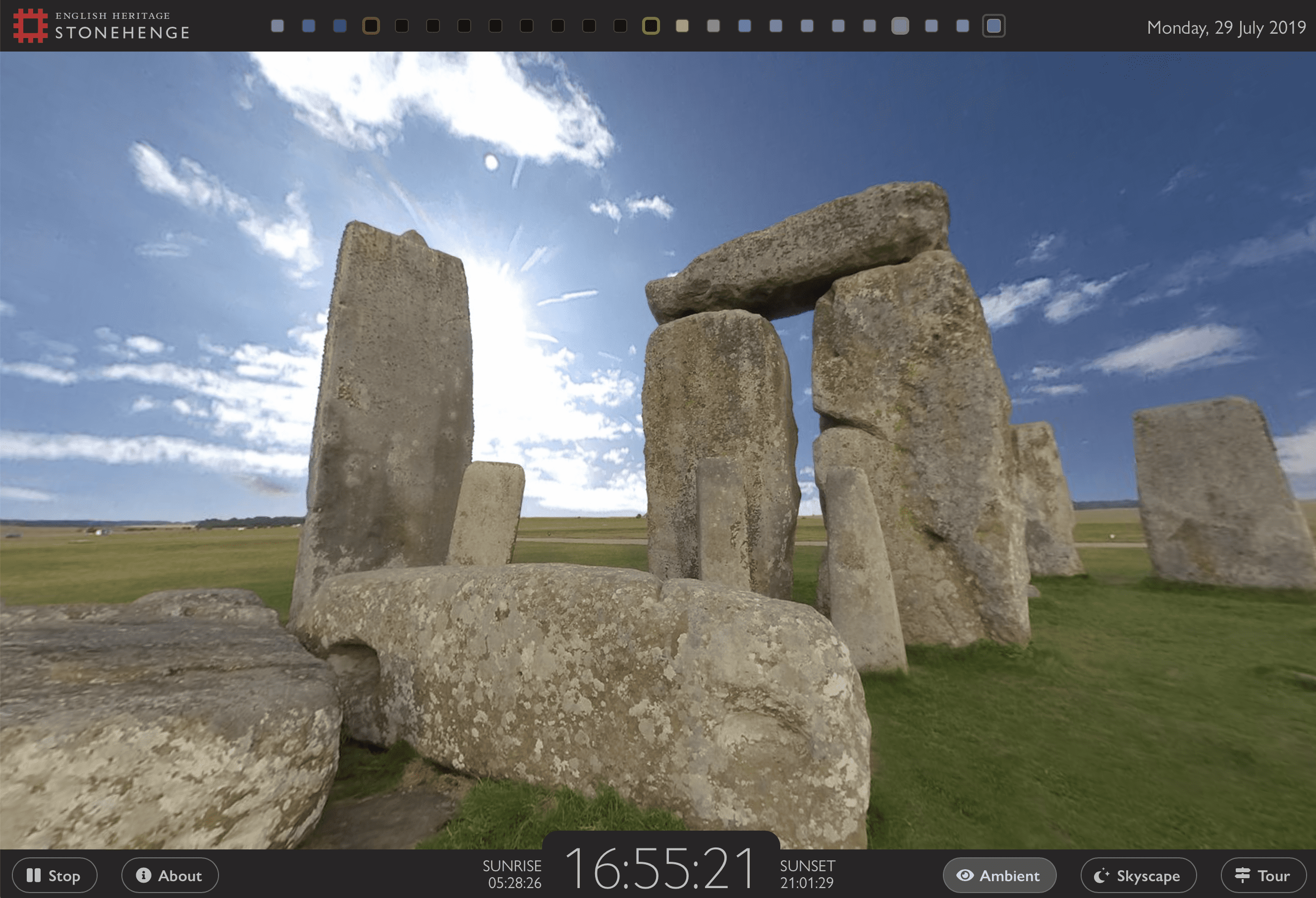

View Stonehenge in real time via Raspberry Pi

Reading Time: 4 minutes

You can see how the skies above Stonehenge affect the iconic stones via a web browser thanks to a Raspberry Pi computer.

Stonehenge

Stonehenge is Britain’s greatest monument and it currently attracts more than 1.5 million visitors each year. It’s possible to walk around the iconic stone circle and visit the Neolithic…

-

Penguin Watch — Pi Zeros and Camera Modules in the Antarctic

Reading Time: 2 minutes

Long-time readers will remember Penguin Lifelines, one of our very favourite projects from back in the mists of time (which is to say 2014; we have short memories around here).

Click on penguins for fun and conservation

Penguin Lifelines was a programme run by the Zoological Society of London, crowdsourcing the tracking…

-

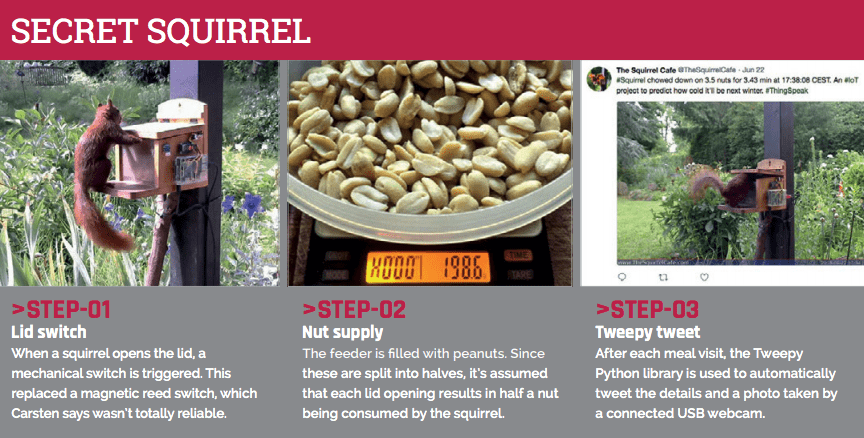

Prepare yourself for winter with the help of squirrels

Reading Time: 3 minutes

This article from The MagPi issue 72 explores Carsten Dannat’s Squirrel Cafe project and his mission to predict winter weather conditions based on the eating habits of local squirrels. Get your copy of The MagPi in stores now, or download it as a free PDF here.

The Squirrel Cafe on Twitter: Squirrel chowed down on 5.0…

-

Protecting coral reefs with Nemo-Pi, the underwater monitor

Reading Time: 3 minutes

The German charity Save Nemo works to protect coral reefs, and they are developing Nemo-Pi, an underwater “weather station” that monitors ocean conditions. Right now, you can vote for Save Nemo in the Google.org Impact Challenge.

Save Nemo

The organisation says there are two major threats to coral reefs: divers, and climate…

-

Journeying with green sea turtles and the Arribada Initiative

Reading Time: 4 minutes

Today, a guest post: Alasdair Davies, co-founder of Naturebytes, ZSL London’s Conservation Technology Specialist and Shuttleworth Foundation Fellow, shares the work of the Arribada Initiative. The project uses the Raspberry Pi Zero and camera module to follow the journey of green sea turtles. The footage captured from the backs of these magnificent creatures…