Tag: augmented reality

-

How makers can use AR and VR

Reading Time: 4 minutes

Augmented reality (AR) and virtual reality (VR) are both currently experiencing a meteoric rise in popularity, with the combined market expected to reach $77 billion by 2025, up from just $15.3 billion in 2020. For makers, AR and VR represent exciting opportunities to build new types of projects, tapping into entirely new possibilities…

-

Augmented reality fire drills make training more effective

Reading Time: 2 minutes

While we adults don’t experience them often, school kids practice fire drills on a regular basis. Those drills are important for safety, but kids don’t take them seriously. At most, they see the drills as a way to get a break from their lessons for a short time. But what if they…

-

This project facilitates augmented reality Minecraft gaming

Reading Time: 2 minutes

Augmented reality (AR) is distinct from virtual reality (VR) in that it brings the real world into virtual gameplay. The most famous example of AR is Pokémon Go, which lets players find the pocket monsters throughout their own physical region. Minecraft is the best-selling video game of all time, but lacks any official AR gameplay.…

-

Computer vision and projection mapping enable AR PCB debugging bliss

Reading Time: 2 minutes

Imagine if you could identify a component and its schematic label by simply touching that component on your PCB. Imagine if you selected a pin in KiCAD and it started glowing on your real, physical PCB so you can find it easily. Imagine if you could see through your PCB’s solder mask…

-

Embodied Axes is an Arduino-powered controller for 3D imagery and data visualizations in AR

Reading Time: 2 minutes

Arduino Team, May 25th, 2020

Researchers across several universities have developed a controller that provides tangible interaction for 3D augmented reality data spaces. The device comprises three orthogonal arms, embodying X, Y, and Z axes…

-

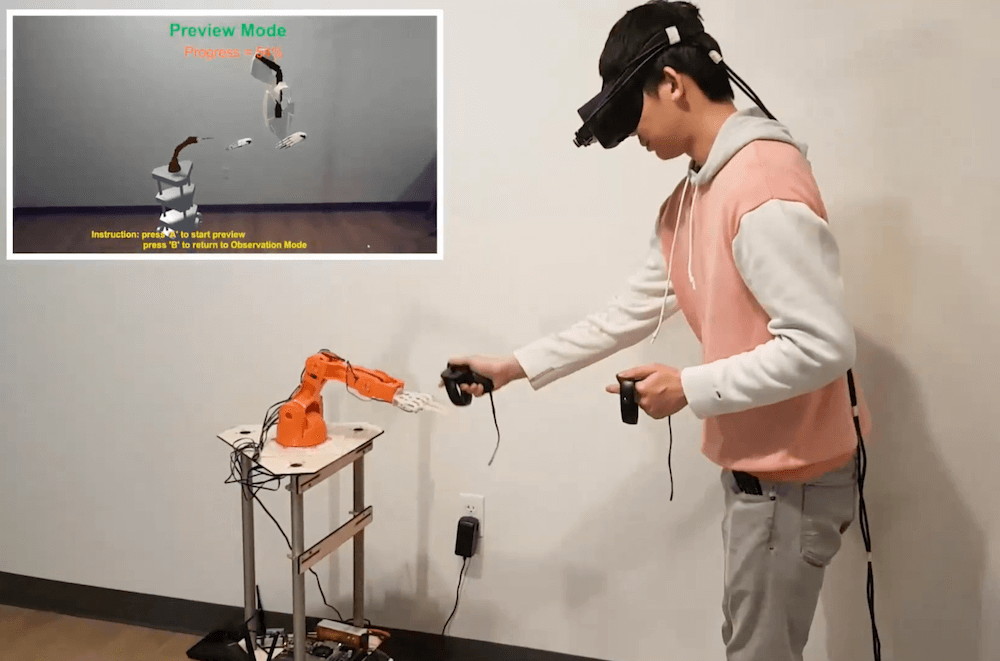

Improve human-robot collaboration with GhostAR

Reading Time: 2 minutes

Arduino Team, November 26th, 2019

As robotics advances, the future could certainly involve humans and automated elements working together as a team. The question then becomes, how do you design such an interaction? A team of researchers from Purdue University attempts to provide a solution with…

-

Ghost hunting in schools with Raspberry Pi | Hello World #9

Reading Time: 5 minutes

In Hello World issue 9, out today, Elliott Hall and Tom Bowtell discuss The Digital Ghost Hunt: an immersive theatre and augmented reality experience that takes a narrative-driven approach in order to make digital education accessible. The Digital Ghost Hunt combines coding education, augmented reality, and live performance to create an immersive…

-

Play musical chairs with Marvel’s Avengers

Reading Time: 2 minutes

You read that title correctly.

“I played musical chairs against the Avengers in AR. Planning on teaching a 12 week class on mixed reality development starting in June. Apply if interested – http://bit.ly/3016EdH”

Playing with the Avengers

Abhishek Singh recently shared his latest Unity creation on Reddit. And when Simon, Righteous Keeper…

-

Dragon Ball Z head-mounted Scouter computer replica

Reading Time: 2 minutes

Arduino Team, October 26th, 2018

Those familiar with the Dragon Ball Z franchise will recognize the head-mounted Scouter computer often seen adorning character faces. As part of his Goku costume, Marcin Poblocki made an impressive replica, featuring a see-through lens that shows the “strength”…

-

World of Tanks AR Spectate: AR tabletop technology unveiled at Gamescom 2018

Reading Time: 2 minutes

At Gamescom 2018, developer studio Wargaming is presenting the AR experience World of Tanks AR Spectate, which brings the tank battles of World of Tanks into the real world. To do so, the developers rely on a new, experimental AR technology that allows content from the PC version of World of Tanks to be rendered realistically in real time. World of…

-

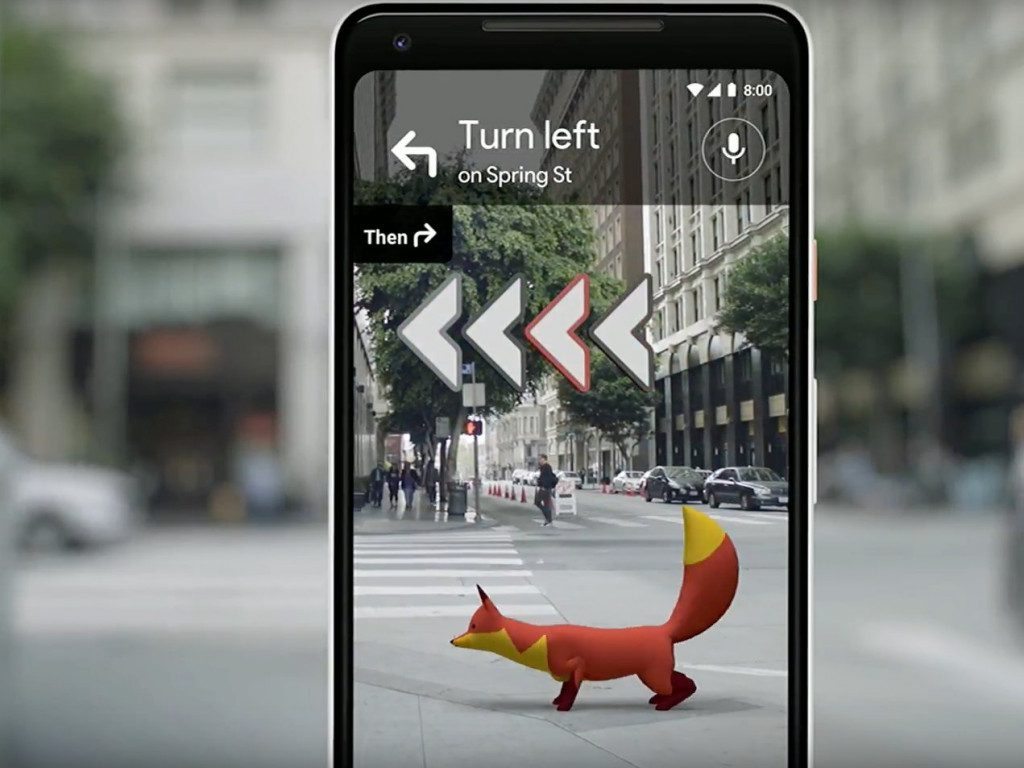

Google Maps to Improve Walking Navigation with AR Fox and Giant Arrows

Reading Time: 3 minutes

At Google’s I/O Developers conference this week, the company’s Vice President, Aparna Chennapragada, demonstrated how AR could be used to improve navigation when using Google Maps. The result is a cute AR fox and huge arrows to point you in the right direction. How often do you find yourself relying on Google…

-

Augmented-reality projection lamp with Raspberry Pi and Android Things

Reading Time: 3 minutes

If your day has been a little fraught so far, watch this video. It opens with a tableau of methodically laid-out components and then shows them soldered, screwed, and slotted neatly into place. Everything fits perfectly; nothing needs percussive adjustment. Then it shows us glimpses of an AR future just like the…

-

Snapchat Introduces Interactive AR Games Called Snappables

Reading Time: 2 minutes

As well as being able to use Snapchat for turning yourself into a dog and stalking your crush, you can now play their new AR experience, Snappables, with your friends. If you’re sat across from someone making weirder-than-normal faces at their phone, you can guarantee they’re trying out Snapchat’s latest…

-

Watch the HTC VIVE and VIVEPORT Keynotes from Mobile World Congress 2018

Reading Time: < 1 minute

Last week at Mobile World Congress 2018, HTC Chairwoman Cher Wang gave a keynote sharing her vision for HTC Vive and the VR industry. Watch the speech below to hear her thoughts on the convergence of major technologies like VR, AR, 5G and AI. Also at Mobile…

-

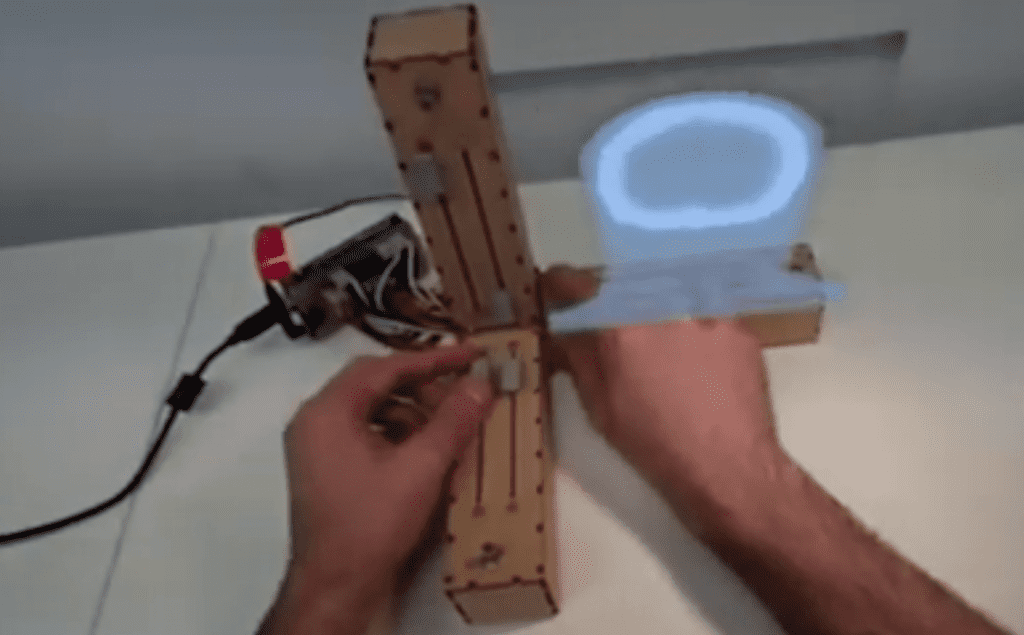

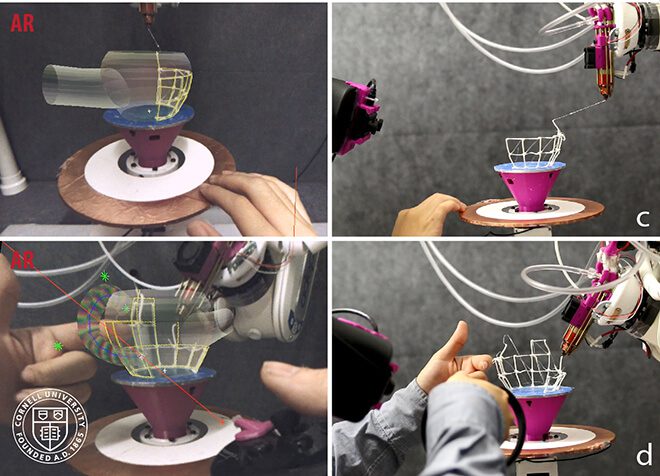

RoMA: Robotic Modeling Assistant Could be a Better Prototyping Machine

Reading Time: 3 minutes

Cornell and MIT are working on a joint project called the Robotic Modeling Assistant (RoMA) which will bring together multiple technologies to create an ultimate prototyping machine. Although 3D printing is certainly improving and streamlining prototyping, researchers from MIT and Cornell want to bring more emerging technologies together to improve such machines.…

-

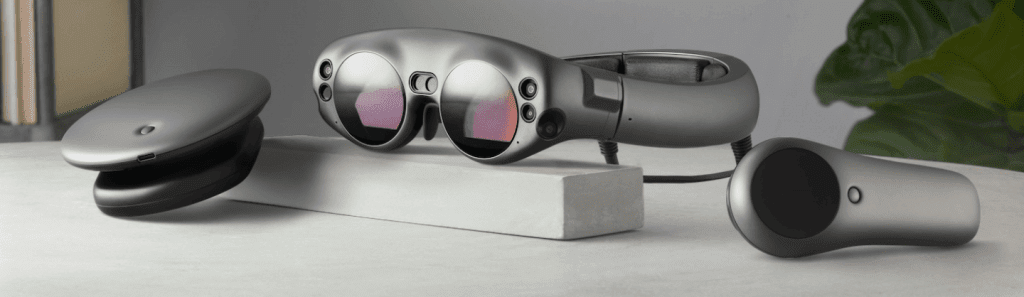

Job posting: Magic Leap wants to move into brick-and-mortar retail

Reading Time: 2 minutes

Online retail is all well and good, but wearables in particular, such as the still-young VR headsets and the upcoming AR glasses, need to be experienced first-hand before they can be assessed and understood. In a new job listing, Magic Leap is now looking for a “passionate and fearless” employee to design store concepts and, in their implementation,…

-

VR Weekly: Tower Tag in Tokyo and Magic Leap One

Reading Time: 2 minutes

This week, two topics lead the list in our VR Weekly: Sega brought our VR game Tower Tag to the huge Joypolis arcade in Tokyo. There is also some news about the Magic Leap One AR glasses, which Chris and Patrick discuss. VR Weekly Plus: Tower Tag and Magic Leap in focus…

-

Magic Leap Finally Reveal their First Augmented Reality Glasses

Reading Time: 3 minutes

Magic Leap’s “One Creator Edition” is finally here. “Magic Leap One”, the first product of the company’s Augmented Reality technology, will be made public to developers in 2018. Still, there is no price available yet. After raising more than $1.8 billion across four investment rounds and making several bold announcements about changing the…

-

The time has come: INTERACTION is live!

Reading Time: < 1 minute

To reach our goal and make INTERACTION a reality, we now need your help. We would of course be thrilled if you supported the project financially, for example with the “Early Bird”, which gets you the complete game for just 29 EUR! Get the Early Bird here: https://bit.ly/LiveAtKS INTERACTION |…