Tag: AI literacy

-

Experience AI receives global recognition from UNESCO

Reading Time: 4 minutes

I am very proud to share the news that Experience AI has been recognised as a laureate for the 2025 UNESCO King Hamad Bin Isa Al-Khalifa Prize for the Use of ICT in Education. At the award ceremony of the 2025 UNESCO King Hamad Bin Isa Al-Khalifa Prize. © Government of the…

-

Introducing AI Quests: A new gamified learning experience within Experience AI

Reading Time: 3 minutes

Artificial intelligence (AI) tools are shaping our world in many ways. Helping young people develop AI literacy (in other words, helping them understand how AI tools work and how to use them responsibly) is essential. At the Raspberry Pi Foundation, we’re committed to empowering educators around the world with everything…

-

Teaching Experience AI: Lessons from educators in Mexico

Reading Time: 4 minutes

In classrooms across Mexico, a transformation is unfolding. The Experience AI programme isn’t just teaching students about artificial intelligence; it’s empowering teachers and learners to explore, question, and create with it. By equipping educators with accessible tools and sparking curiosity among students, the initiative is shaping a new generation ready to use…

-

Promoting young people’s agency in the age of AI

Reading Time: 6 minutes

Part of teaching young people AI literacy skills is teaching them to think critically about AI, and to design AI applications that address problems they care about. How to do this was the focus of our June research seminar.

Working together to design AI

Our June research seminar was delivered by Netta…

-

Why kids still need to learn to code in the age of AI

Reading Time: 3 minutes

Today we’re publishing a position paper setting out five arguments for why we think that kids still need to learn to code in the age of artificial intelligence. Generated using ChatGPT. Just like every wave of technological innovation that has come before, the advances in artificial intelligence (AI) are raising profound questions…

-

UNESCO’s International Day of Education 2025: AI and the future of education

Reading Time: 6 minutes

Recently, our Chief Learning Officer Rachel Arthur and I had the opportunity to attend UNESCO’s International Day of Education 2025, which focused on the role of education in helping people “understand and steer AI to better ensure that they retain control over this new class of technology and are able to direct…

-

The need to invest in AI skills in schools

Reading Time: 6 minutes

Earlier this week, the UK Government published its AI Opportunities Action Plan, which sets out an ambitious vision to maintain the UK’s position as a global leader in artificial intelligence. Whether you’re from the UK or not, it’s a good read, setting out the opportunities and challenges facing any country that aspires…

-

Ocean Prompting Process: How to get the results you want from an LLM

Reading Time: 5 minutes

Have you heard of ChatGPT, Gemini, or Claude, but haven’t tried any of them yourself? Navigating the world of large language models (LLMs) might feel a bit daunting. However, with the right approach, these tools can really enhance your teaching and make classroom admin and planning easier and quicker. That’s where the…

-

Exploring how well Experience AI maps to UNESCO’s AI competency framework for students

Reading Time: 9 minutes

During this year’s annual Digital Learning Week conference in September, UNESCO launched their AI competency frameworks for students and teachers. What is the AI competency framework for students? The UNESCO competency framework for students serves as a guide for education systems across the world to help students develop the necessary skills in…

-

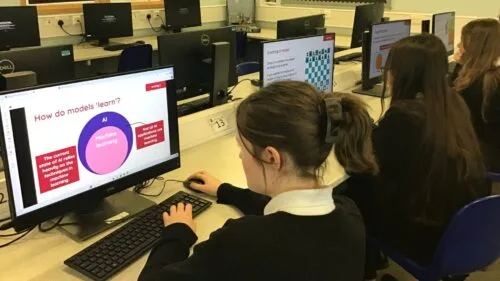

Impact of Experience AI: Reflections from students and teachers

Reading Time: 5 minutes

“I’ve enjoyed actually learning about what AI is and how it works, because before I thought it was just a scary computer that thinks like a human,” a student learning with Experience AI at King Edward’s School, Bath, UK, told us. This is the essence of what we aim to do with…

-

Experience AI: How research continues to shape the resources

Reading Time: 5 minutes

Since we launched the Experience AI learning programme in the UK in April 2023, educators in 130 countries have downloaded Experience AI lesson resources. They estimate reaching over 630,000 young people with the lessons, helping them to understand how AI works and to build the knowledge and confidence to use AI tools…

-

Experience AI at UNESCO’s Digital Learning Week

Reading Time: 5 minutes

Last week, we were honoured to attend UNESCO’s Digital Learning Week conference to present our free Experience AI resources and how they can help teachers demystify AI for their learners. The conference drew a worldwide audience in person and online to hear about the work educators and policy makers are doing to support…

-

Experience AI expands to reach over 2 million students

Reading Time: 4 minutes

Two years ago, we announced Experience AI, a collaboration between the Raspberry Pi Foundation and Google DeepMind to inspire the next generation of AI leaders. Today I am excited to announce that we are expanding the programme with the aim of reaching more than 2 million students over the next 3 years,…

-

Why we’re taking a problem-first approach to the development of AI systems

Reading Time: 7 minutes

If you are into tech, keeping up with the latest updates can be tough, particularly when it comes to artificial intelligence (AI) and generative AI (GenAI). I admit to sometimes feeling this way myself. However, there was one update recently that really caught my attention. OpenAI launched their latest iteration of ChatGPT,…

-

New guide on using generative AI for teachers and schools

Reading Time: 5 minutes

The world of education is loud with discussions about the uses and risks of generative AI: tools for outputting human-seeming media content such as text, images, audio, and video. In answer, there’s a new practical guide on using generative AI aimed at Computing teachers (and others), written by a group of…

-

Four key learnings from teaching Experience AI lessons

Reading Time: 4 minutes

Developed by us and Google DeepMind, Experience AI provides teachers with free resources to help them confidently deliver lessons that inspire and educate young people about artificial intelligence (AI) and the role it could play in their lives. Tracy Mayhead is a computer science teacher at Arthur Mellows Village College in Cambridgeshire.…

-

Imagining students’ progression in the era of generative AI

Reading Time: 6 minutes

Generative artificial intelligence (AI) tools are becoming more easily accessible to learners and educators, and increasingly good at generating code solutions to programming tasks, code explanations, computing lesson plans, and other learning resources. This raises many questions for educators in terms of what and how we teach students about computing and AI,…

-

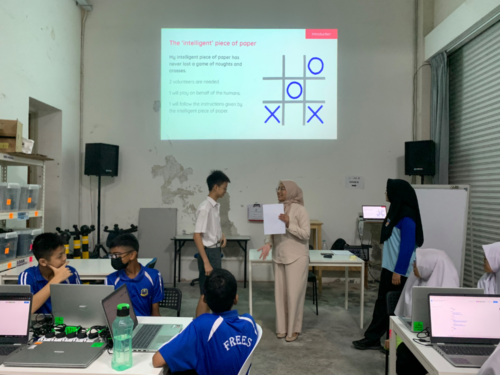

Teaching a generation of AI innovators in Malaysia with Experience AI

Reading Time: 4 minutes

Today’s blog is from Aimy Lee, Chief Operating Officer at Penang Science Cluster, part of our global partner network for Experience AI. Artificial intelligence (AI) is transforming the world at an incredible pace, and at Penang Science Cluster, we are determined to be at the forefront of this fast-changing landscape. The Malaysian…

-

Localising AI education: Adapting Experience AI for global impact

Reading Time: 6 minutes

It’s been almost a year since we launched our first set of Experience AI resources in the UK, and we’re now working with partner organisations to bring AI literacy to teachers and students all over the world. Developed by the Raspberry Pi Foundation and Google DeepMind, Experience AI provides everything that teachers…

-

Insights into students’ attitudes to using AI tools in programming education

Reading Time: 4 minutes

Educators around the world are grappling with the question of whether to use artificial intelligence (AI) tools in the classroom. As more and more teachers start exploring ways to use these tools for teaching and learning computing, there is an urgent need to understand the impact of their use to make…

-

Using an AI code generator with school-age beginner programmers

Reading Time: 5 minutes

AI models for general-purpose programming, such as OpenAI Codex, which powers the AI pair programming tool GitHub Copilot, have the potential to significantly impact how we teach and learn programming. The basis of these tools is a ‘natural language to code’ approach, also called natural language programming. This allows users to generate…

-

The Experience AI Challenge: Find out all you need to know

Reading Time: 3 minutes

We’re really excited to see that Experience AI Challenge mentors are starting to submit AI projects created by young people. There’s still time for you to get involved in the Challenge: the submission deadline is 24 May 2024. If you want to find out more about the Challenge, join our live webinar…