Tag: ai

-

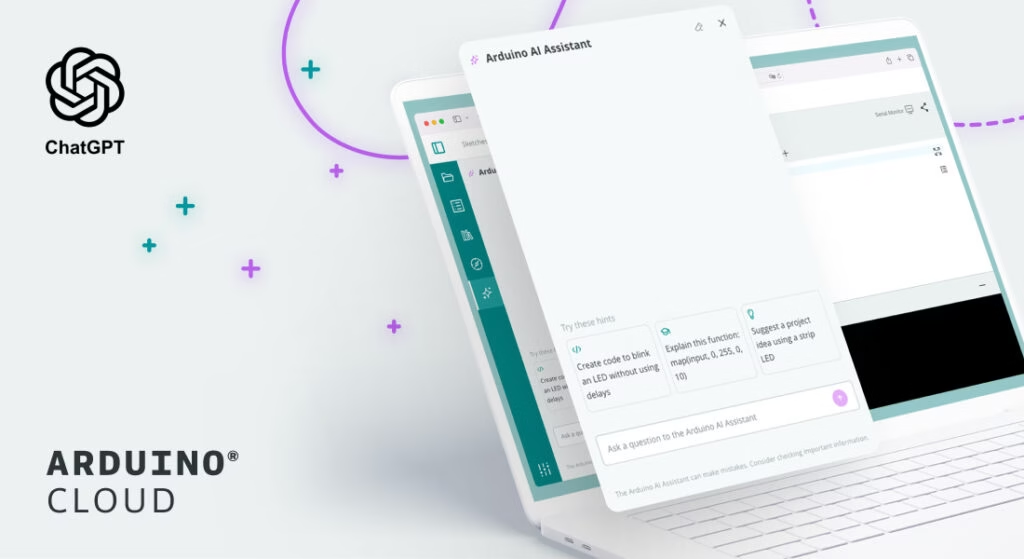

Arduino AI Assistant vs. ChatGPT: Which one to use for your projects?

Reading Time: 4 minutes

If you’ve been turning to ChatGPT to write your Arduino code, you may actually be missing out on a tool designed just for you: the Arduino AI Assistant, built directly into Arduino Cloud. While general-purpose AIs like ChatGPT can generate code, they often miss critical details, such as using the wrong libraries…

-

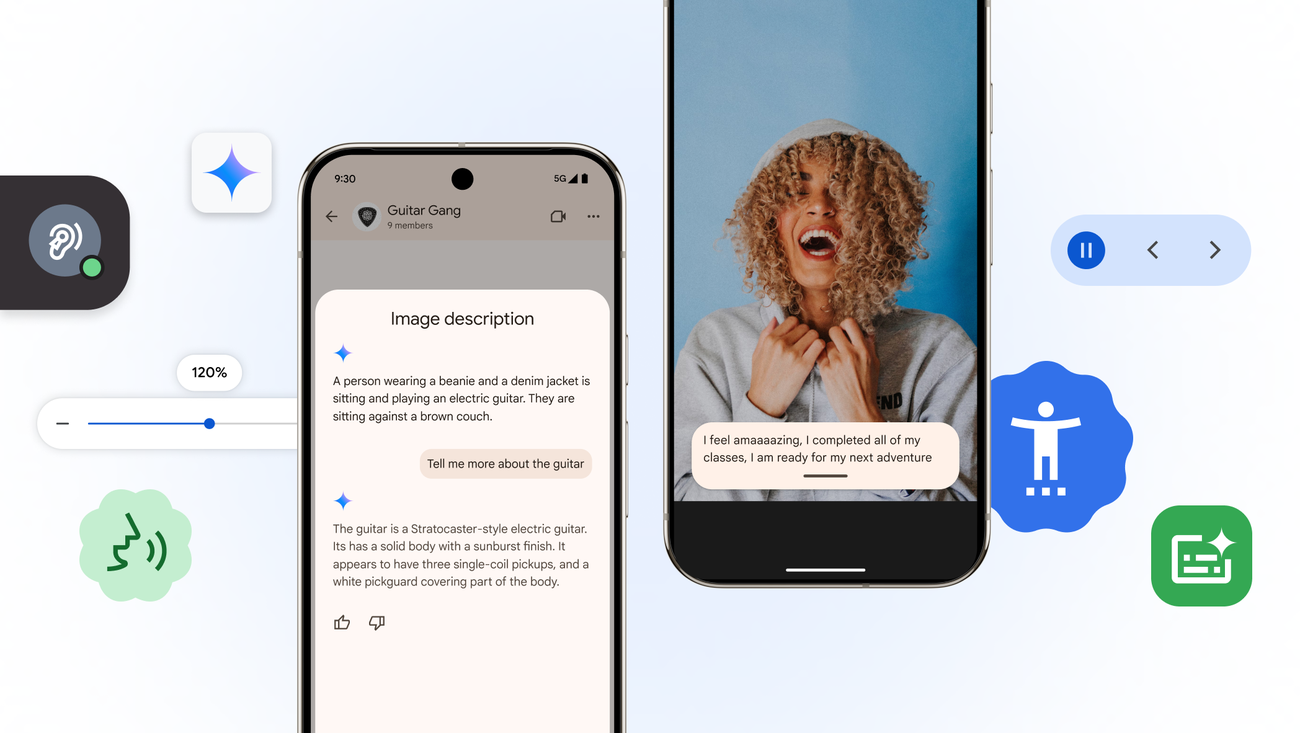

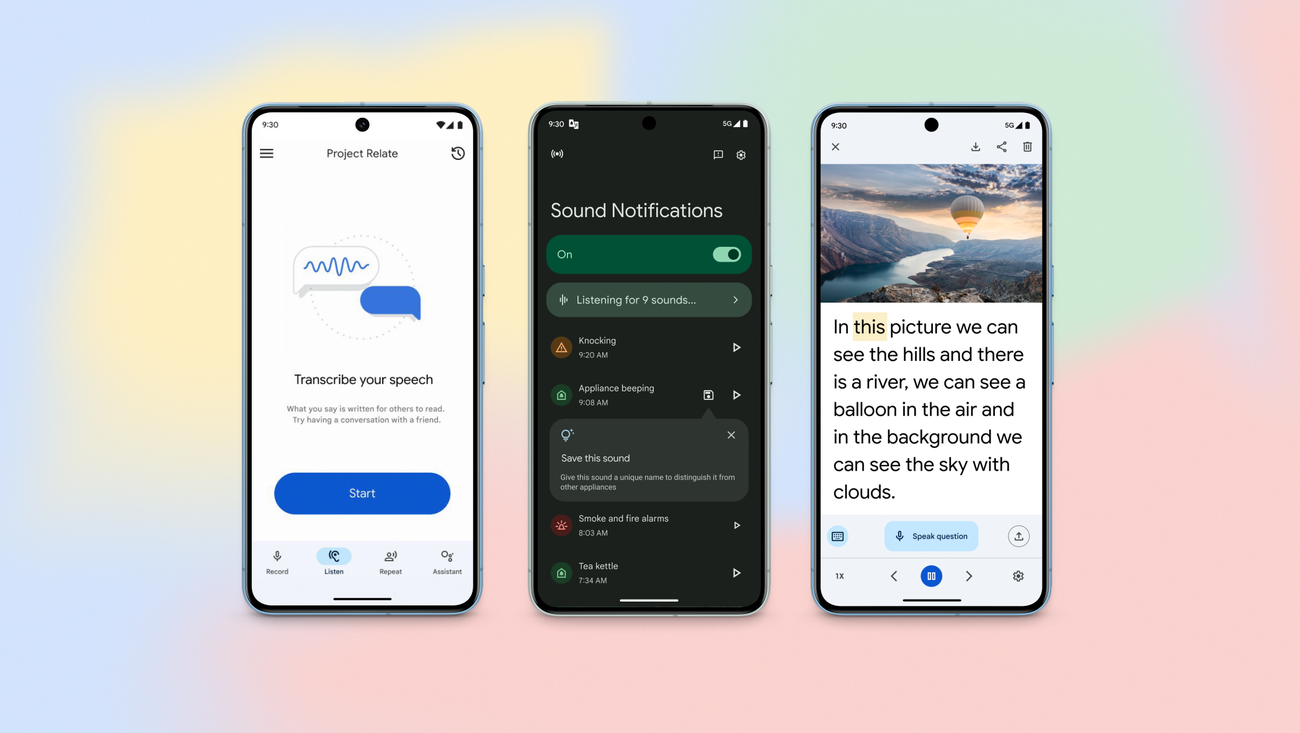

New AI and accessibility updates across Android, Chrome and more

Director, Product Management

Reading Time: 2 minutes

In honor of Global Accessibility Awareness Day, we’re excited to roll out new updates across Android and Chrome, plus new resources for the ecosystem.

-

How well do you know our I/O 2025 announcements?

Contributor

Reading Time: < 1 minute

Take this quiz about Google I/O 2025 to see how well you know what we announced this year at I/O.

-

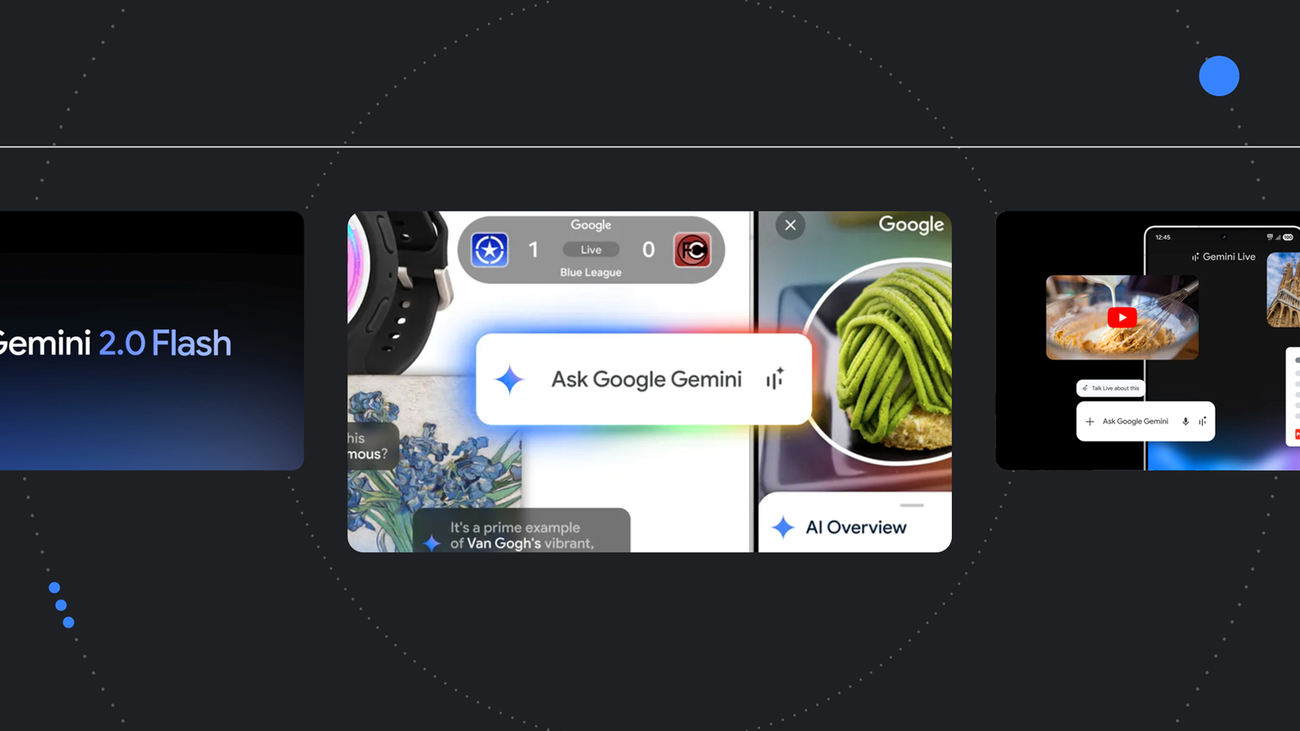

The latest AI news we announced in May

Reading Time: < 1 minute

Here are Google’s latest AI updates from May 2025.

-

How Google and NVIDIA are teaming up to solve real-world problems with AI

Reading Time: 3 minutes

An overview of the collaboration between Google and NVIDIA and a preview of the announcements at GTC this week.

-

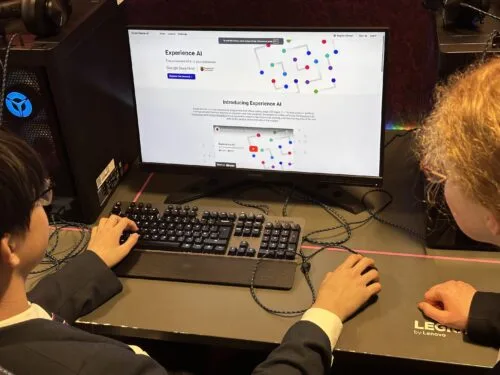

Experience AI: The story so far

Reading Time: 5 minutes

In April 2023, we launched our first Experience AI resources, developed in partnership with Google DeepMind to support educators to engage their students in learning about the topic of AI. Since then, the Experience AI programme has grown rapidly, reaching thousands of educators all over the world. Read on to find out…

-

Xbox update in February: Send invite links, cloud gaming updates, and more

Reading Time: 4 minutes

Once again, the new Xbox update rolls out fresh features. Starting today, Game Pass subscribers can conveniently invite their friends to their Xbox Cloud Gaming (Beta) sessions via a link. In addition, even more Xbox titles are now playable via the cloud. In case you missed it: Xbox is actively researching…

-

Teaching about AI – Teacher symposium

Reading Time: 5 minutes

AI has become a pervasive term that is heard with trepidation, excitement, and often a furrowed brow in school staffrooms. For educators, there is pressure to use AI applications for productivity: to save time, to help create lesson plans, to write reports, to answer emails, etc. There is also a lot…

-

Muse, a generative AI model for gameplay, opens up new possibilities in gaming and game development

Reading Time: 3 minutes

What Muse means for the gaming of tomorrow: Although we are still at the beginning, the model research is already pushing the limits of what we thought possible. We are already using Muse to develop a real-time playable AI model that was trained on other first-party games. We see…

-

The latest AI news we announced in January

Reading Time: < 1 minute

Here are Google’s latest AI updates from January.

-

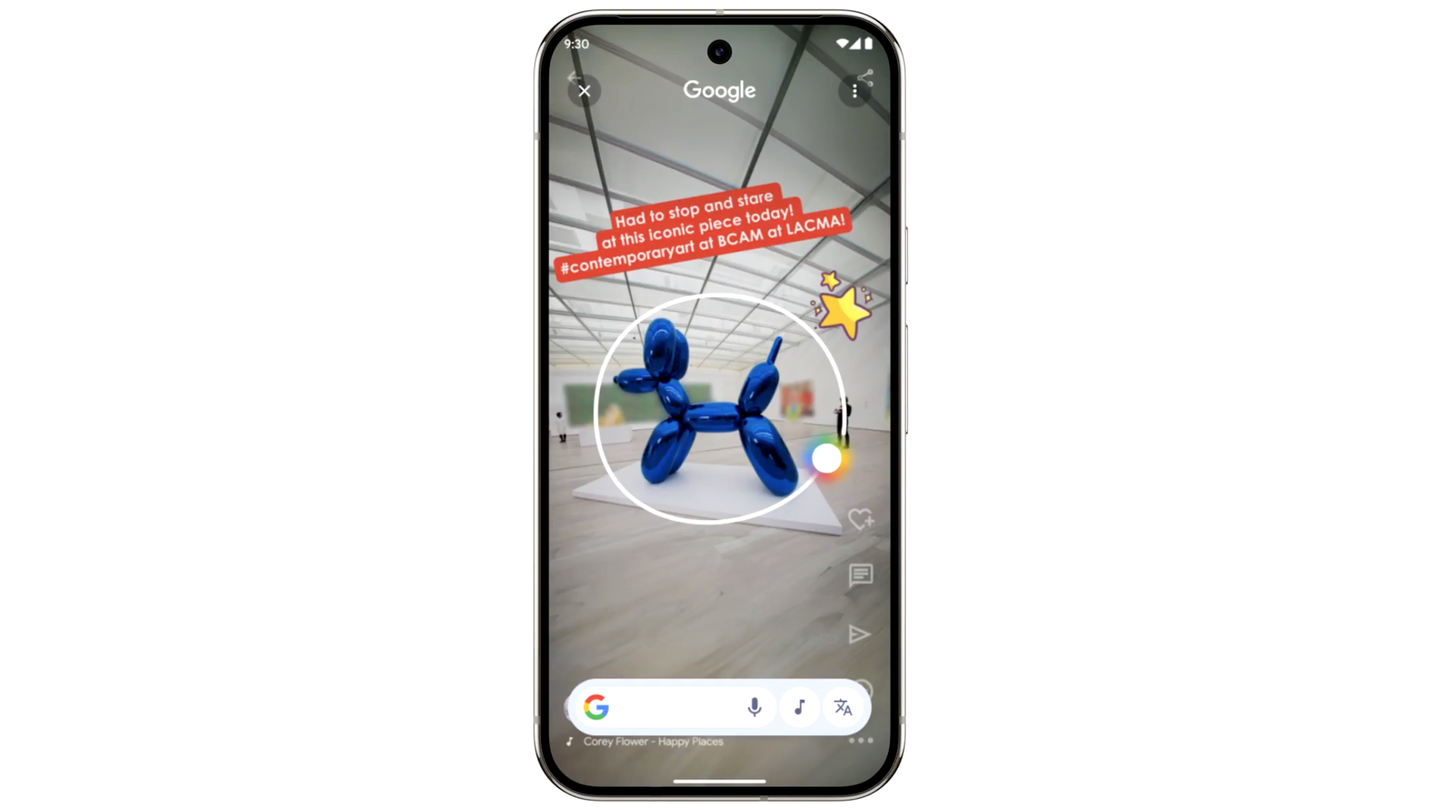

New Circle to Search updates make it even easier to find information and get things done.

Product Manager

Reading Time: < 1 minute

Last year, we introduced Circle to Search to help you easily circle, scribble or tap anything you see on your Android screen, and find information from the web without s…

-

60 of our biggest AI announcements in 2024

Reading Time: 6 minutes

Recap some of Google’s biggest AI news from 2024, including moments from Gemini, NotebookLM, Search and more.

-

The latest AI news we announced in December

Reading Time: < 1 minute

Here are Google’s latest AI updates from December, including Gemini 2.0, GenCast, and Willow.

-

New AI features and more for Android and Pixel

Reading Time: < 1 minute

Learn more about the latest Pixel and Android updates, featuring the latest in Google AI innovation.

-

Does AI-assisted coding boost novice programmers’ skills or is it just a shortcut?

Reading Time: 6 minutes

Artificial intelligence (AI) is transforming industries, and education is no exception. AI-driven development environments (AIDEs), like GitHub Copilot, are opening up new possibilities, and educators and researchers are keen to understand how these tools impact students learning to code. In our 50th research seminar, Nicholas Gardella, a PhD candidate at the University…

-

Discover #Virgil: history comes to life with Arduino

Reading Time: 2 minutes

We’re excited to introduce #Virgil, an innovative project that combines the power of Arduino technology with a passion for history, creating a groundbreaking interactive experience for museums. Using Arduino’s versatile and scalable ecosystem, #Virgil operates completely offline, allowing visitors to interact with 3D avatars in a seamless and immersive way. The project…

-

Reimagining the chicken coop with predator detection, Wi-Fi control, and more

Reading Time: 2 minutes

The traditional backyard chicken coop is a very simple structure that typically consists of a nesting area, an egg-retrieval panel, and a way to provide food and water as needed. Realizing that some aspects of raising chickens are too labor-intensive, the Coders Cafe crew decided to automate most of the daily care…

-

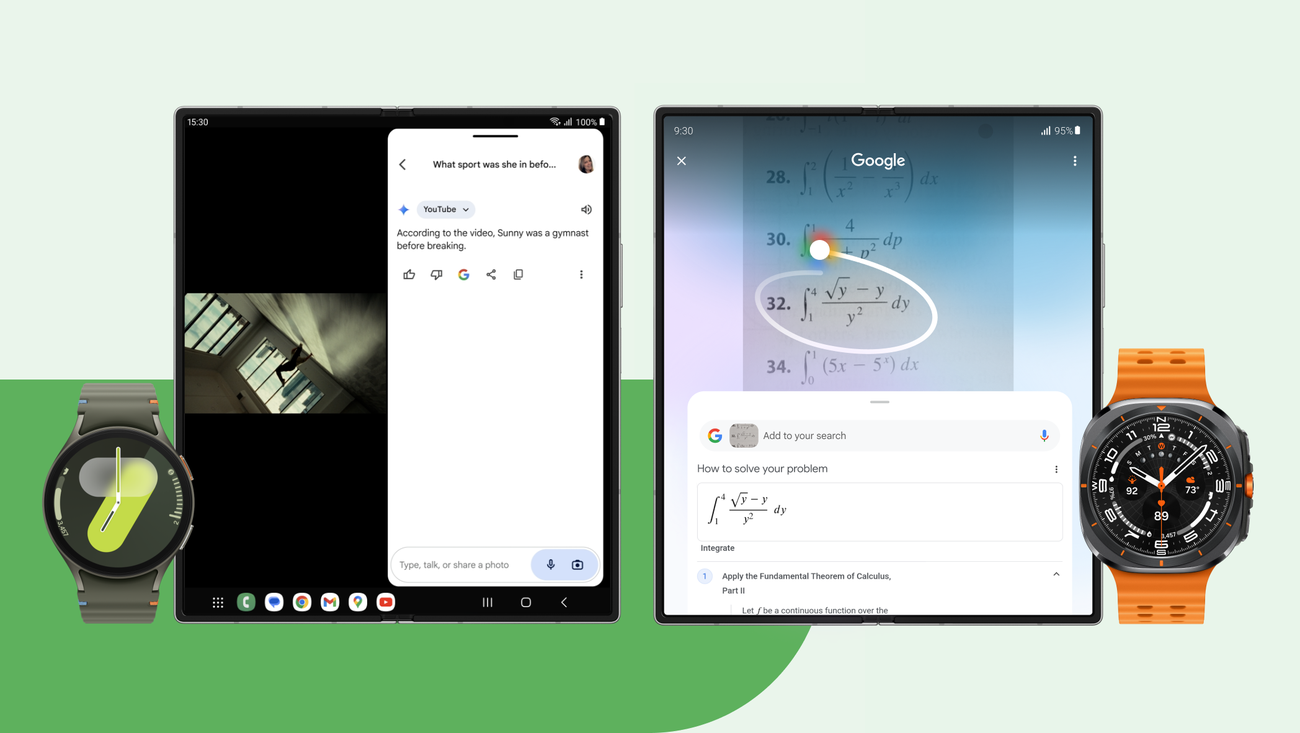

4 Google updates coming to Samsung devices

Senior Director, Global Android Product Marketing

Reading Time: < 1 minute

New Circle to Search capabilities, Wear OS 5 and more are coming to Samsung’s latest devices.

-

3 new ways to use Google AI on Android at work

Product Management Lead

Reading Time: < 1 minute

Learn how new Google AI on Android features can boost employee productivity, help developers build smarter tools and improve business workflows.

-

8 new accessibility updates across Lookout, Google Maps and more

Senior Director, Products for All

Reading Time: < 1 minute

For Global Accessibility Awareness Day, here are new updates to our accessibility products.

-

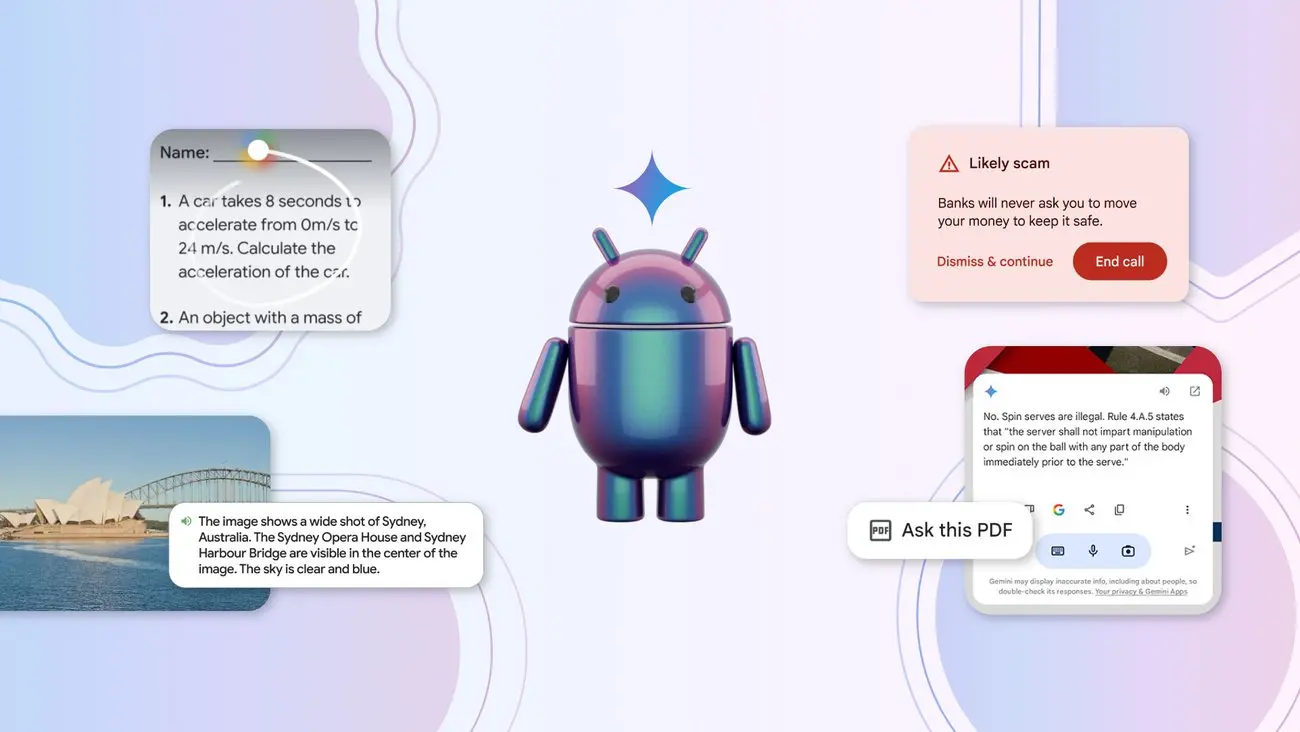

Experience Google AI in even more ways on Android

President, Android Ecosystem

Reading Time: < 1 minute

Here are more ways you can experience Google AI on Android. Learn how on-device AI is changing what your phone can do.