Category: News

-

Teaching about AI in K–12 education: Thoughts from the USA

Reading Time: 5 minutes

As artificial intelligence continues to shape our world, understanding how to teach about AI has never been more important. Our new research seminar series brings together educators and researchers to explore approaches to AI and data science education. In the first seminar, we welcomed Shuchi Grover, Director of AI and Education Research…

-

The Apple TV app is now available on Google Play for Android mobile devices

Reading Time: < 1 minute

Since its launch in 2021, the Apple TV app for Google TV has given people the ability to stream award-winning original shows, like “Severance,” “Slow Horses,” “The Morning Show” and “Ted Lasso,” as well as films like “Wolfs” and “The Instigators.” Starting today, the Apple TV app is available on the…

-

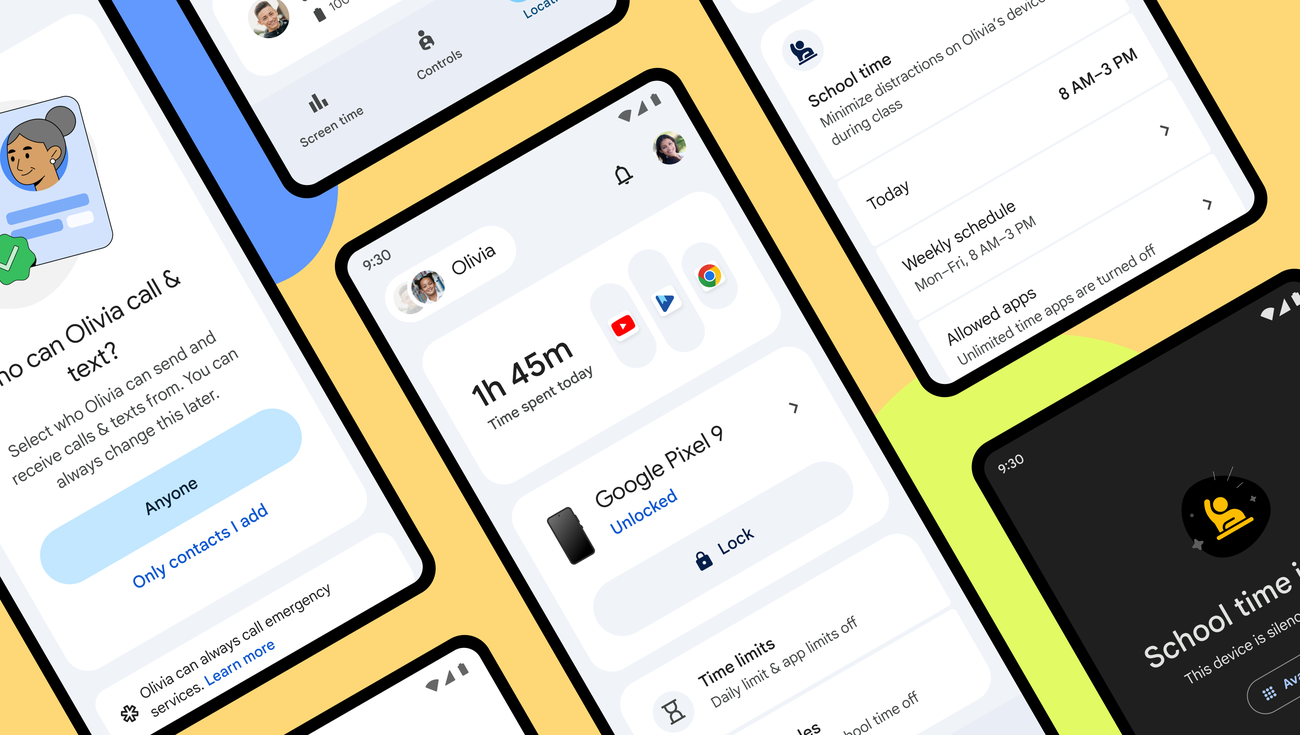

Google Family Link brings new supervision tools for parents

Reading Time: < 1 minute

Google Family Link is introducing new features that help families foster healthy digital habits.

-

#AvowedExperience in Hamburg: the Living Lands take over XPERION

Reading Time: 2 minutes

The world of Avowed is calling you… and it is only a few escalators away! Meet your favorite creators at the meet and greet at XPERION in Hamburg, take photos with Avowed-style cosplayers, win great prizes and, above all, play Obsidian's new RPG masterpiece on site on Xbox Series X, PC or…

-

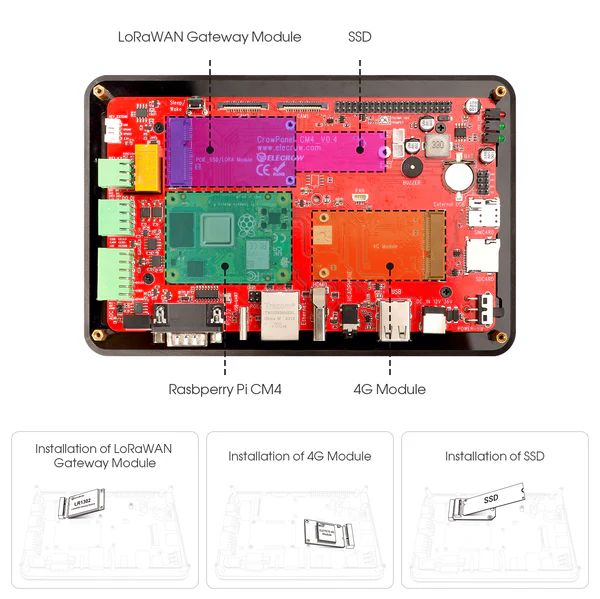

Pi Terminal review

Reading Time: 2 minutes

It also has the equally important connectivity requirements of modern industrial automation. From your classic terminal pins, serial connector, Ethernet, etc., there’s also access to the built-in Wi-Fi and Bluetooth of CM4, along with a GPS antenna add-on, and you can expand it with LoRa or LTE for more radio connections. Ready…

-

Safer Internet Day: a deep dive into AI with Minecraft Education

Reading Time: 3 minutes

AI is increasingly finding its way into work, home, and school around the world. According to the latest Microsoft Global Online Safety Survey, the number of active users of generative AI has grown worldwide. Our results show that 51 percent of people used generative AI in the past year, or at least…

-

South of Midnight hands-on: a beautiful, unsparing fairy tale

Reading Time: 7 minutes

At first glance, South of Midnight could be described as a classic action-adventure: a heroine who is torn from her ordinary life and sets out on a remarkable journey, with magical weapons and abilities. But spend just a few minutes with the game and that impression evaporates immediately. A…

-

Wired for success: Inspiring the next generation of women in science

Reading Time: 3 minutes

Did you know that it’s International Day of Women and Girls in Science on February 11th, 2025? To celebrate this global event, we’re shining a light on the efforts to make STEM more accessible, inclusive, and inspiring for future generations. Let’s dive in! Mind the gap: gender representation in STEM Science and…

-

Teaching AI safety: Lessons from Romanian educators

Reading Time: 6 minutes

This blog post has been written by our Experience AI partners in Romania, Asociatia Techsoup, who piloted our new AI safety resources with Romanian teachers at the end of 2024. Last year, we had the opportunity to pedagogically test the three new resources on AI safety and see first-hand the transformative effect…

-

Gustavo Reynaga: Inspiring the next generation of makers with MicroPython

Reading Time: 3 minutes

If you’re a fan of open-source technology, Gustavo Salvador Reynaga Aguilar is a name to know. An experienced educator with a passion for technology, Gustavo has spent nearly three decades teaching and inspiring students at CECATI 132 in Mexico. He’s worked with platforms like Arduino, Raspberry Pi, and BeagleBone, and is renowned…

-

WOPR

Reading Time: 2 minutes

What’s inside? • Raspberry Pi 4 • Raspberry Pi Touch Display 2 • 5 V / 30 A power supply • 615 Adafruit NeoPixels • Bluetooth speaker

A script runs on boot, which twinkles the NeoPixels in the traditional 1980s supercomputer colours: yellow and red. Another script can be run to play…