Now that the Experience AI Challenge has closed for submissions, we would like to thank all the talented young people who participated and submitted their projects this year.

The Challenge, created by us in collaboration with Google DeepMind, guides young people under the age of 18, and their mentors, through the process of creating their own unique AI project. It encourages young people to seek out real-world problems and create possible AI-based solutions. From January to May, participants in the UK were also able to submit their projects for feedback from AI experts.

In response to the submissions, Richard Hayler, our Director of Youth Programmes, commented:

“In running the Challenge, we have seen an incredible display of creativity, ingenuity, and curiosity about AI among young people. The dedication and innovation they demonstrated in their submitted projects has been truly inspiring. The Challenge has not only showcased the immense potential of addressing problems using AI tools, but most of all the remarkable talent and dedication of the next generation of innovators.

We would also like to thank all the mentors who guided and encouraged participants throughout the Challenge for their invaluable support. Their expertise and mentorship were instrumental in the young people’s success.”

Some Challenge highlights

These are some examples of the innovative projects young people created:

AI creation: River Water Quality Prediction App

Creator: Shreyas, age 13

What does it do:

“The model predicts how good the water quality of a river is based on several factors such as the levels of ammonium, nitrates, and dissolved oxygen.”

Who is it for:

“It can be used to tell if river water is safe to drink, or safe for life. This can also be used by authorities to decide where to deploy limited resources to purify water depending on its toxicity.”

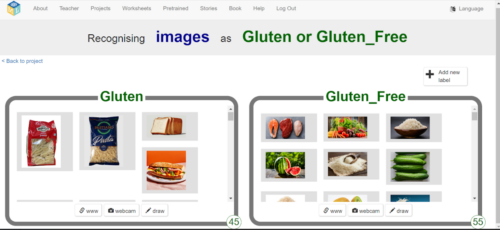

AI creation: Coeliac Disease

Creator: Zainev, age 14–18

What does it do:

“The model aims to identify foods that contain the allergen gluten.”

Who is it for:

“It is for people with gluten allergy and/or people trying to arrange food for those with a gluten allergy, as it will easily help them identify foods that contain gluten and are not safe to eat.”

AI creation: Spacepuppy’s colour adventure

Creator: Charlotte, age 12

What does it do:

“Teaches children about colours.”

Who is it for:

“Teachers at primary schools/nurseries.”

AI creation: Nutrify

Creator: Ishaan, age 14–18

What does it do:

“The model identifies the students’ food items through a webcam image, giving its specific nutritional information including calories, carbs, sugars and proteins.”

Who is it for:

“This model can be easily used by students to be aware of the nutritional information of their meals.”

AI creation: Flossie

Creator: Florence, age 11

What does it do:

“Identifies dressing gowns, slippers and pyjamas.”

Who is it for:

“For young children to learn different clothing.”

AI creation: Dermalyst

Creator: Vedant, age 14–18

What does it do:

“Dermalyst is an AI-based dermatologist that analyses images of your skin to check if you have any skin infection or disease and also suggests solutions.”

Who is it for:

“This app is targeted at young people but anyone could use it. It saves them from having to wait for a GP appointment.”

AI creation: Bird identifier

Creator: William, age 13

What does it do:

“It is designed to identify common garden birds native to the United Kingdom. It can identify robins, blue tits, great tits and blackbirds by their photograph.”

Who is it for:

“Bird watchers may use the app to identify the birds that they see but don’t know what they are.”

Save the date for the celebratory webinar

We would like to invite you to an online webinar on Wednesday 10 July at 4pm BST to celebrate all Experience AI Challenge participants. Click ‘notify me’ on YouTube to be notified when the webinar starts.

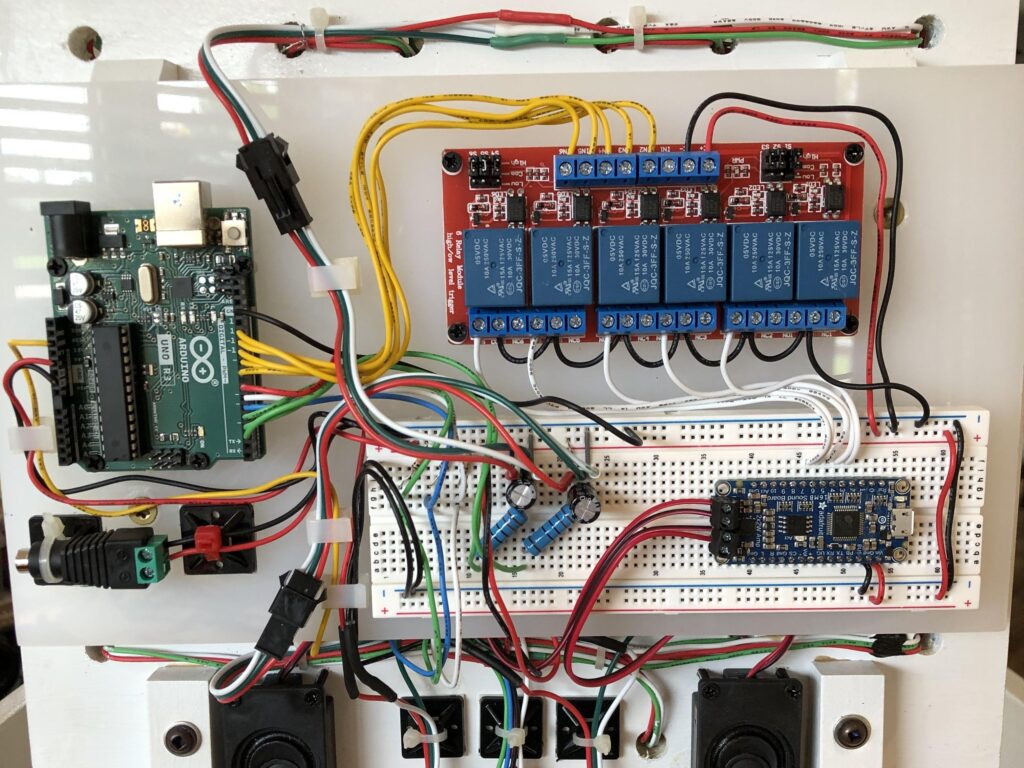

During the webinar, Mark Calleja from the Raspberry Pi Foundation and Matko Bošnjak, Research Scientist at Google DeepMind, will highlight some of the young people’s AI creations and discuss all things AI. You can send your questions about AI to Mark and Matko by filling in this form today.

Download the Experience AI Challenge resources

Once again, thank you to everyone who participated in the Experience AI Challenge and submitted their projects.

If you’re interested in the Challenge, you can still download the resources and use them to create your own AI projects.

Website: LINK