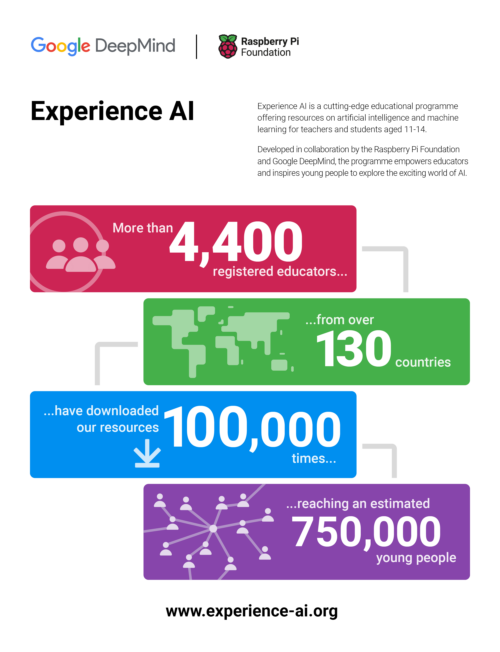

Since we launched the Experience AI learning programme in the UK in April 2023, educators in 130 countries have downloaded Experience AI lesson resources. They estimate that the lessons have reached over 630,000 young people, helping them to understand how AI works and to build the knowledge and confidence to use AI tools responsibly. Just last week, we announced another exciting expansion of Experience AI: thanks to $10 million in funding from Google.org, we will be able to work with local partner organisations to provide research-based AI education to what we estimate will be over 2 million young people across Europe, the Middle East and Africa.

This blog post explains how we use research to continue to shape our Experience AI resources, including the new AI safety resources we are developing.

The beginning of Experience AI

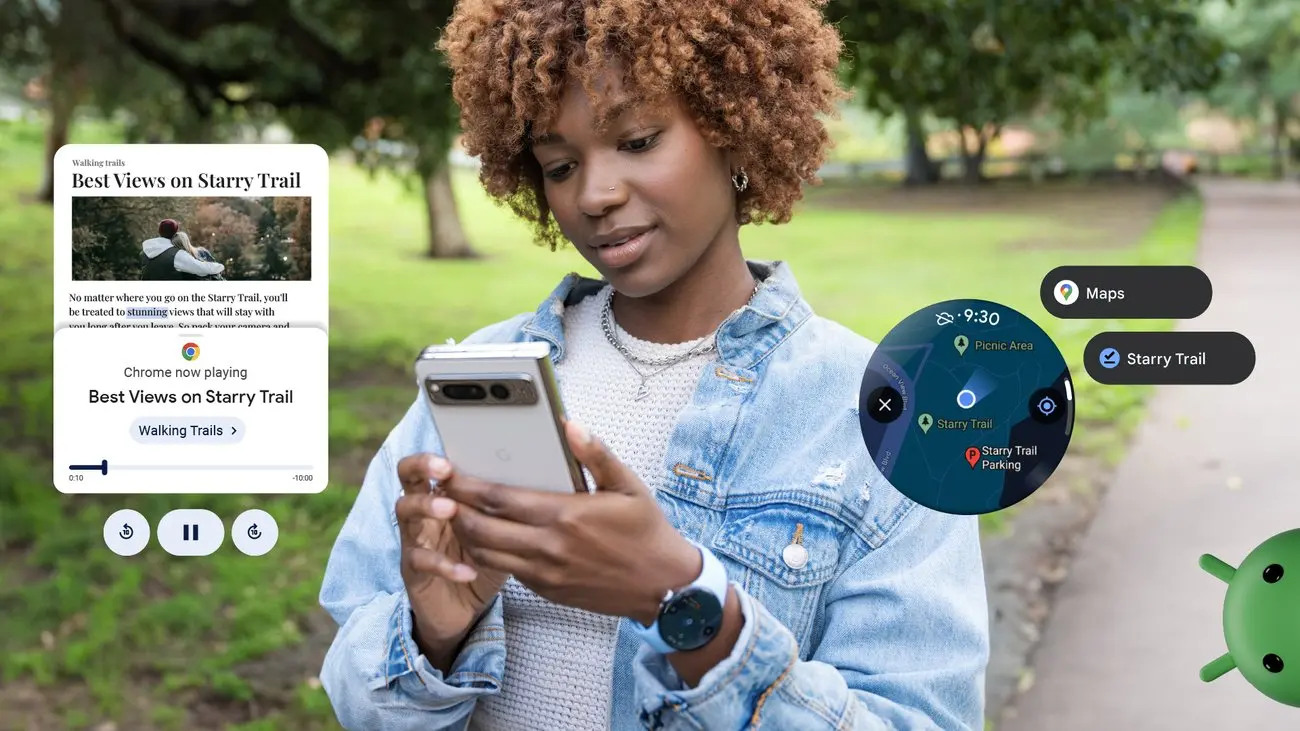

Artificial intelligence (AI) and machine learning (ML) applications are part of our everyday lives — we use them every time we scroll through social media feeds organised by recommender systems or unlock an app with facial recognition. For young people, there is more need than ever to gain the skills and understanding to critically engage with AI technologies.

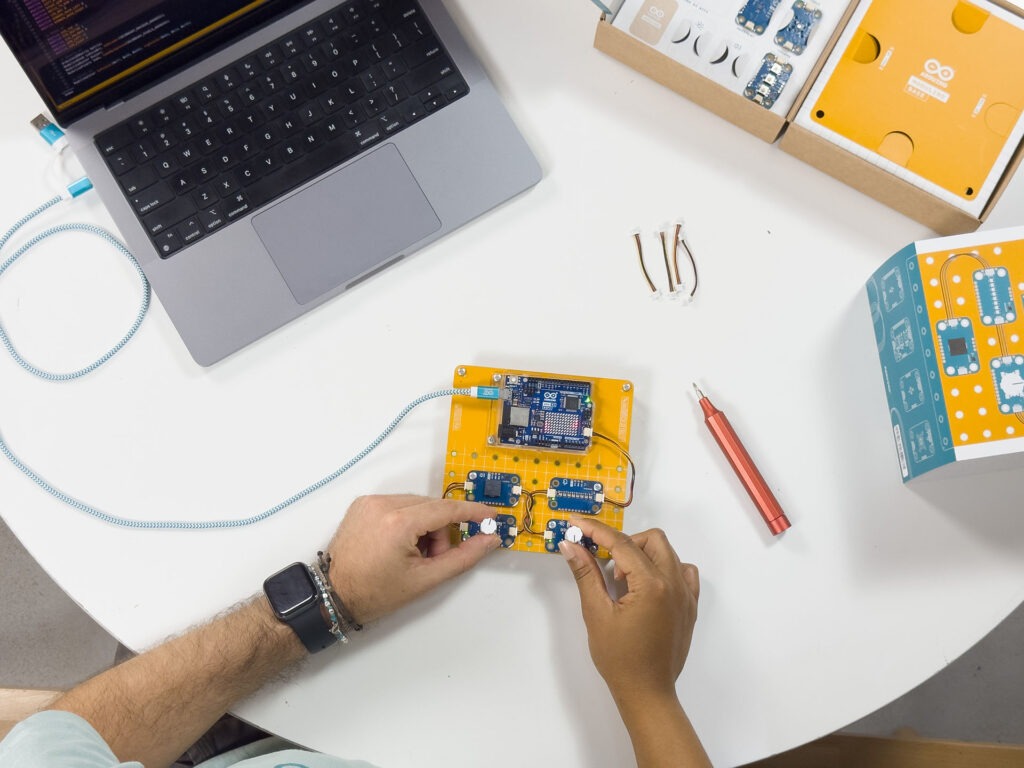

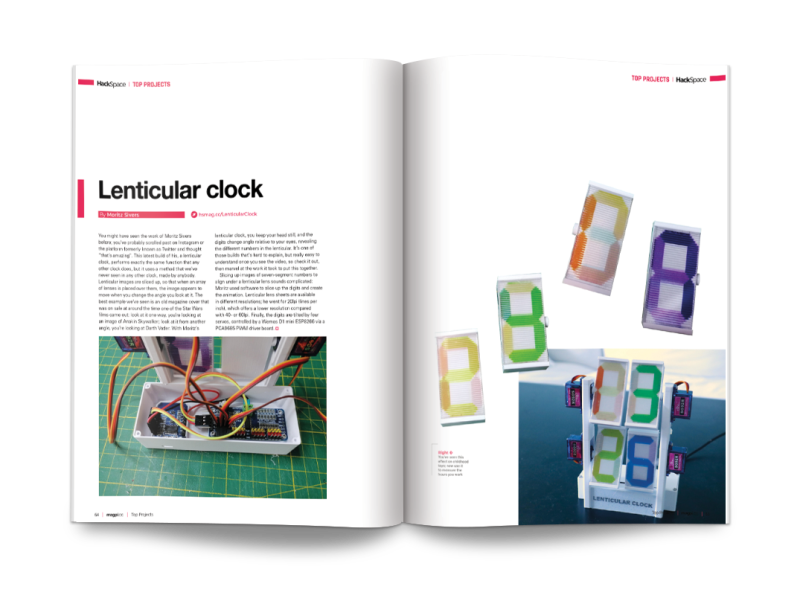

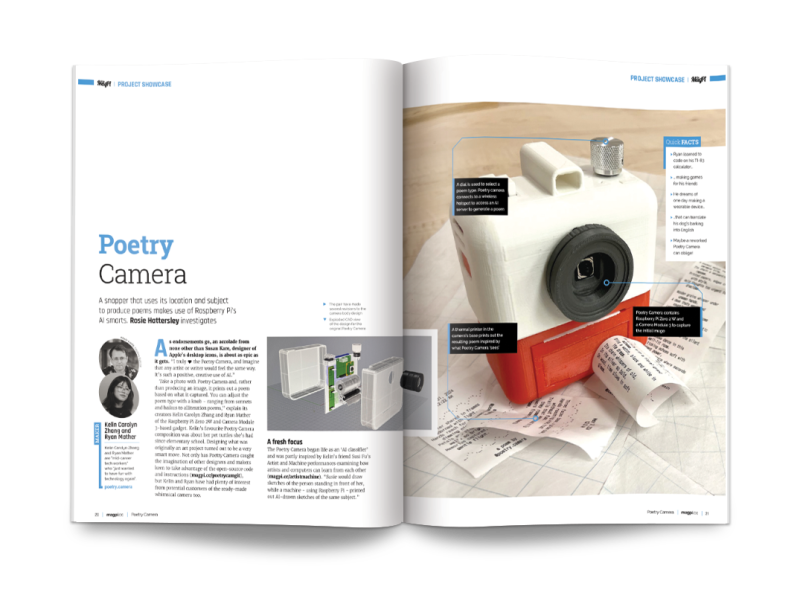

We wanted to design free lesson resources to help teachers in a wide range of subjects confidently introduce AI and ML to students aged 11 to 14 (Key Stage 3). This led us to develop Experience AI, in collaboration with Google DeepMind, offering materials including lesson plans, slide decks, videos (both teacher- and student-facing), student activities, and assessment questions.

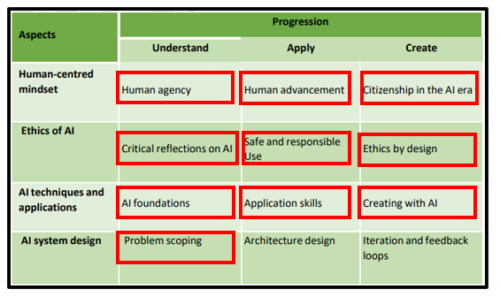

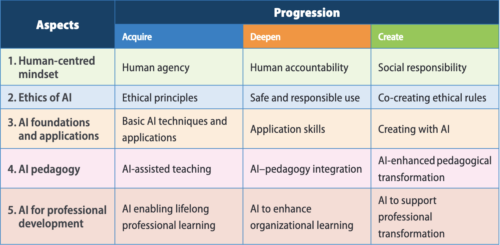

SEAME: The research-based framework behind Experience AI

The Experience AI resources were built on rigorous research from the Raspberry Pi Computing Education Research Centre as well as from other researchers, including those we hosted at our series of seminars on AI and data science education. The Research Centre mapped and categorised over 500 resources used to teach AI and ML, and found that the majority were one-off activities and that very few were tailored to a specific age group.

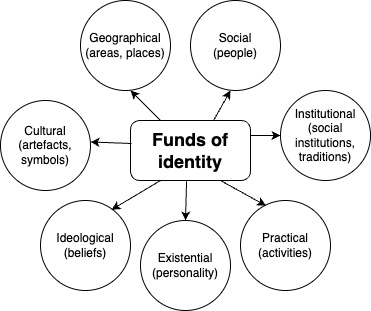

To analyse the content that existing AI education resources covered, the Centre developed a simple framework called SEAME. The framework gives you an easy way to group concepts, knowledge, and skills related to AI and ML based on whether they focus on social and ethical aspects (SE), applications (A), models (M), or engines (E, i.e. how AI works).

Through Experience AI, learners also gain an understanding of the models underlying AI applications, and the processes used to train and test ML models.

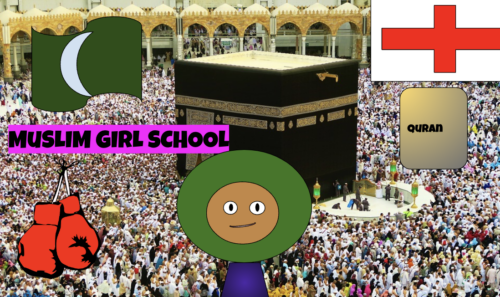

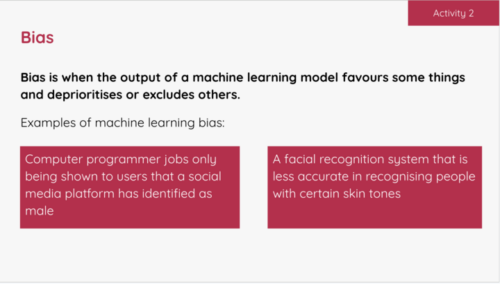

Our Experience AI lessons cover all four levels of SEAME and focus on applications of AI that are relatable for young people. They also introduce learners to AI-related issues such as privacy or bias concerns, and the impact of AI on employment.

The six foundation lessons of Experience AI

- What is AI?: Learners explore the current context of AI and how it is used in the world around them. Looking at the differences between rule-based and data-driven approaches to programming, they consider the benefits and challenges that AI could bring to society.

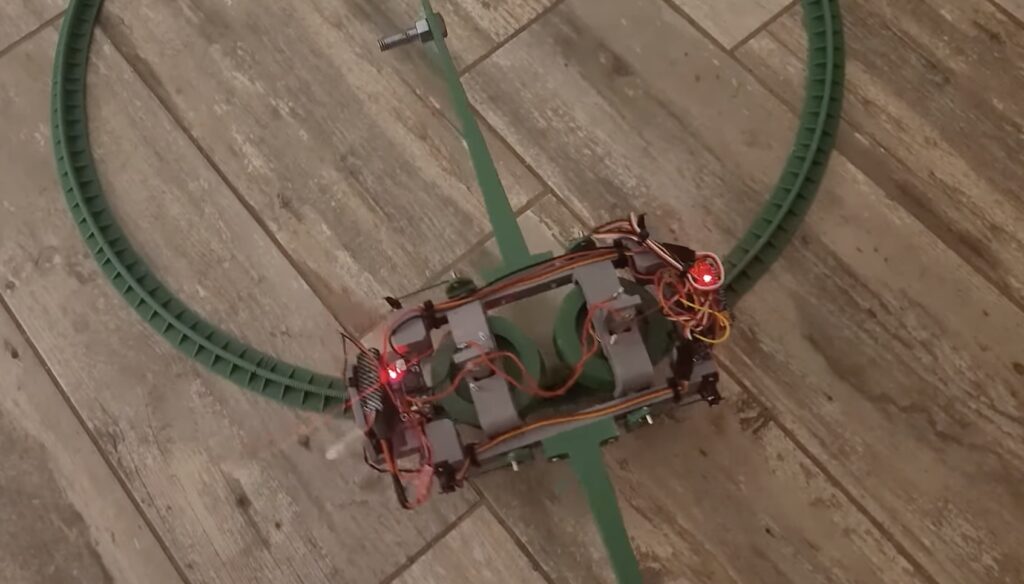

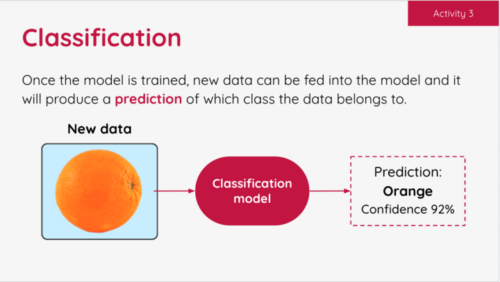

- How computers learn: Focusing on the role of data-driven models in AI systems, learners are introduced to ML and find out about three common approaches to creating ML models. Finally, they explore classification, a specific application of ML.

- Bias in, bias out: Students create their own ML model to classify images of apples and tomatoes. They discover that a limited dataset is likely to lead to a flawed ML model. Then they explore how bias can appear in a dataset, resulting in biased predictions produced by an ML model.

- Decision trees: Learners take their first in-depth look at a specific type of ML model: decision trees. They see how different training datasets result in the creation of different ML models, experiencing first-hand what the term ‘data-driven’ means.

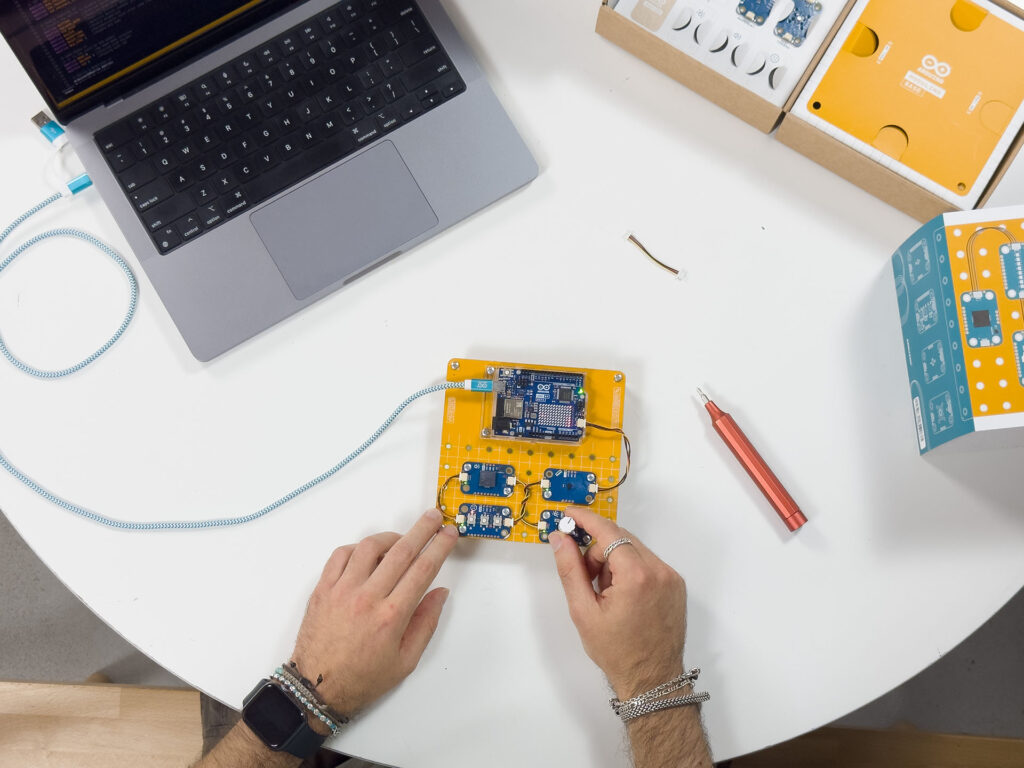

- Solving problems with ML models: Students are introduced to the AI project lifecycle and use it to create an ML model. They apply a human-focused approach to working on their project, train an ML model, and finally test their model to find out its accuracy.

- Model cards and careers: Learners finish the AI project lifecycle by creating a model card to explain their ML model. To complete the unit, they explore a range of AI-related careers, hear from people working in AI research at Google DeepMind, and explore how they might apply AI and ML to their interests.
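To make the term "data-driven" from the lesson descriptions above concrete, here is a minimal Python sketch. It is not taken from the Experience AI materials: the "redness" feature, the data values, and the function names are all invented for illustration. It trains a one-question decision tree on two different training datasets and shows that the limited dataset produces a different, less accurate model on the same test data.

```python
# Illustrative only: a made-up "redness" score (0 to 10) for each fruit.
# None of this data or code comes from the Experience AI lessons.

def train_stump(examples):
    """Learn a one-question decision tree: 'is redness >= threshold?'.

    Tries every midpoint between neighbouring feature values and keeps
    the first threshold that classifies the most training examples correctly.
    """
    values = sorted({x for x, _ in examples})
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_correct = None, -1
    for t in candidates:
        correct = sum((x >= t) == (label == "tomato") for x, label in examples)
        if correct > best_correct:
            best_threshold, best_correct = t, correct
    return best_threshold


def predict(threshold, x):
    """Answer the tree's single question for one fruit."""
    return "tomato" if x >= threshold else "apple"


def accuracy(threshold, examples):
    """Fraction of examples the model labels correctly."""
    hits = sum(predict(threshold, x) == label for x, label in examples)
    return hits / len(examples)


# A varied training set: green and red apples, plus tomatoes.
varied_train = [(1, "apple"), (2, "apple"), (4, "apple"), (5, "apple"),
                (7, "tomato"), (8, "tomato"), (9, "tomato"), (10, "tomato")]

# A limited training set: it contains only green apples.
limited_train = [(1, "apple"), (2, "apple"), (7, "tomato"), (8, "tomato")]

# Unseen test data, including a fairly red apple (score 5).
test_set = [(3, "apple"), (5, "apple"), (8, "tomato"), (9, "tomato")]

varied_model = train_stump(varied_train)
limited_model = train_stump(limited_train)

print("varied threshold:", varied_model, "accuracy:", accuracy(varied_model, test_set))
print("limited threshold:", limited_model, "accuracy:", accuracy(limited_model, test_set))
```

The same training procedure yields a different decision rule for each dataset, and the model trained without any red apples mislabels the red apple in the test set.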

We also offer two additional stand-alone lessons: one on large language models, how they work, and why they’re not always reliable, and the other on the application of AI in ecosystems research, which lets learners explore how AI tools can be used to support animal conservation.

New AI safety resources: Empowering learners to be critical users of technology

We have also been developing a set of resources for educator-led sessions on three topics related to AI safety, funded by Google.org.

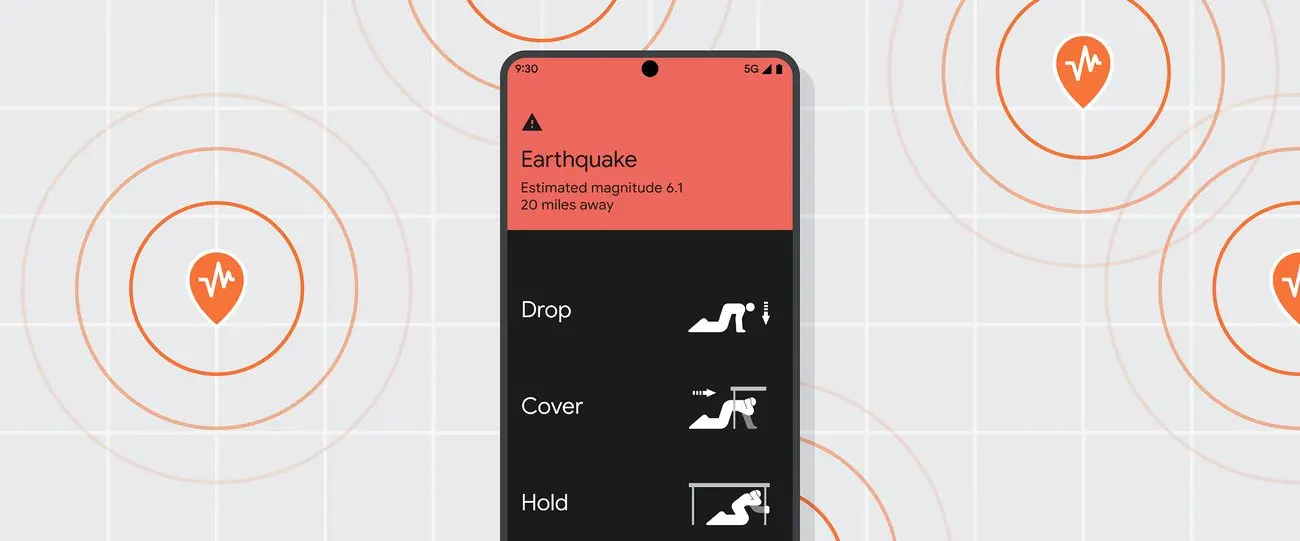

- AI and your data: With the support of this resource, young people reflect on the data they have already provided to AI applications in their daily lives, and think about how the prevalence of AI tools might change the way they protect their data.

- Media literacy in the age of AI: This resource highlights the ways AI tools can be used to perpetuate misinformation and how AI applications can help people combat misleading claims.

- Using generative AI responsibly: With this resource, young people consider their responsibilities when using generative AI, and their expectations of developers who release AI tools.

Other research principles behind our free teaching resources

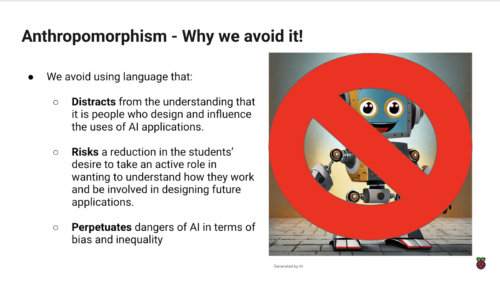

As well as using the SEAME framework, we have incorporated a whole host of other research-based concepts in the design principles for the Experience AI resources. For example, we avoid anthropomorphism — that is, words or imagery that can lead learners to wrongly believe that AI applications have sentience or intentions like humans do — and we instead promote the understanding that it’s people who design AI applications and decide how they are used. We also teach about data-driven application design, which is a core concept in computational thinking 2.0.

Share your feedback

We’d love to hear your thoughts and feedback about using the Experience AI resources. Your comments help us to improve the current materials, and to develop future resources. You can tell us what you think using this form.

And if you’d like to start using the Experience AI resources as an educator, you can download them for free at experience-ai.org.
