During this year’s annual Digital Learning Week conference in September, UNESCO launched their AI competency frameworks for students and teachers.

What is the AI competency framework for students?

The UNESCO competency framework for students serves as a guide for education systems across the world to help students develop the necessary skills in AI literacy and to build inclusive, just, and sustainable futures in this new technological era.

It is an exciting document because, as well as being comprehensive, it’s the first global framework of its kind in the area of AI education.

The framework serves three specific purposes:

- It offers a guide on essential AI concepts and skills for students, which can help shape AI education policies or programs at schools

- It aims to shape students’ values, knowledge, and skills so they can understand AI critically and ethically

- It suggests a flexible plan for when and how students should learn about AI as they progress through different school grades

The framework is a starting point for policy-makers, curriculum developers, school leaders, teachers, and educational experts to look at how it could apply in their local contexts.

It is not possible to create a single curriculum suitable for all national and local contexts, but the framework flags the necessary competencies for students across the world to acquire the values, knowledge, and skills necessary to examine and understand AI critically from a holistic perspective.

How does Experience AI compare with the framework?

A group of researchers and curriculum developers from the Raspberry Pi Foundation, with a focus on AI literacy, attended the conference and afterwards we tasked ourselves with taking a deep dive into the student framework and mapping our Experience AI resources to it. Our aims were to:

- Identify how the framework aligns with Experience AI

- See how the framework aligns with our research-informed design principles

- Identify gaps or next steps

Experience AI is a free educational programme that offers cutting-edge resources on artificial intelligence and machine learning for teachers and their students aged 11 to 14. Developed collaboratively by the Raspberry Pi Foundation and Google DeepMind, the programme provides everything that teachers need to confidently deliver lessons that will teach, inspire, and engage young people about AI and the role that it could play in their lives. The current curriculum offering includes a ‘Foundations of AI’ 6-lesson unit, 2 standalone lessons (‘AI and ecosystems’ and ‘Large language models’), and the 3 newly released AI safety resources.

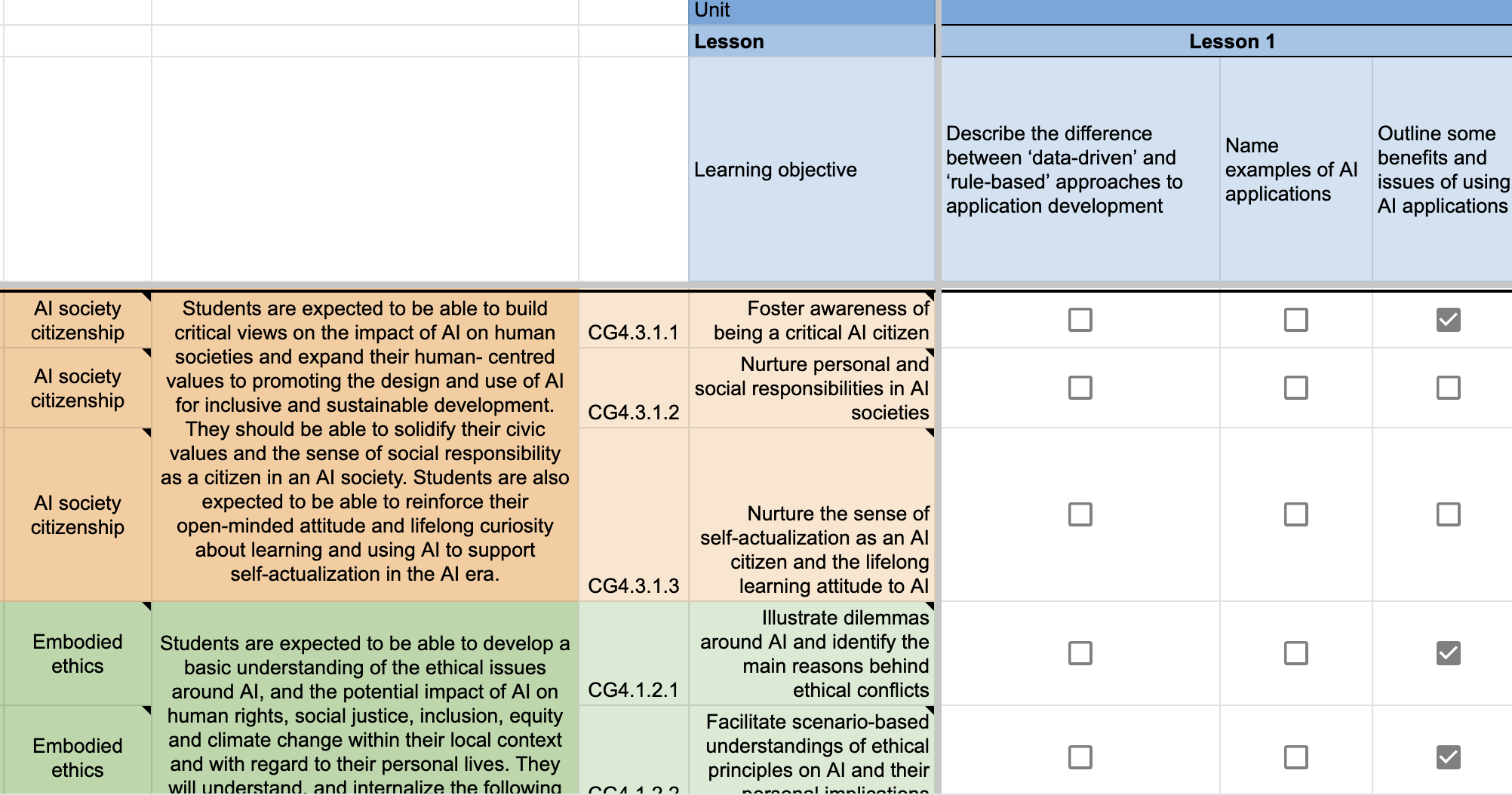

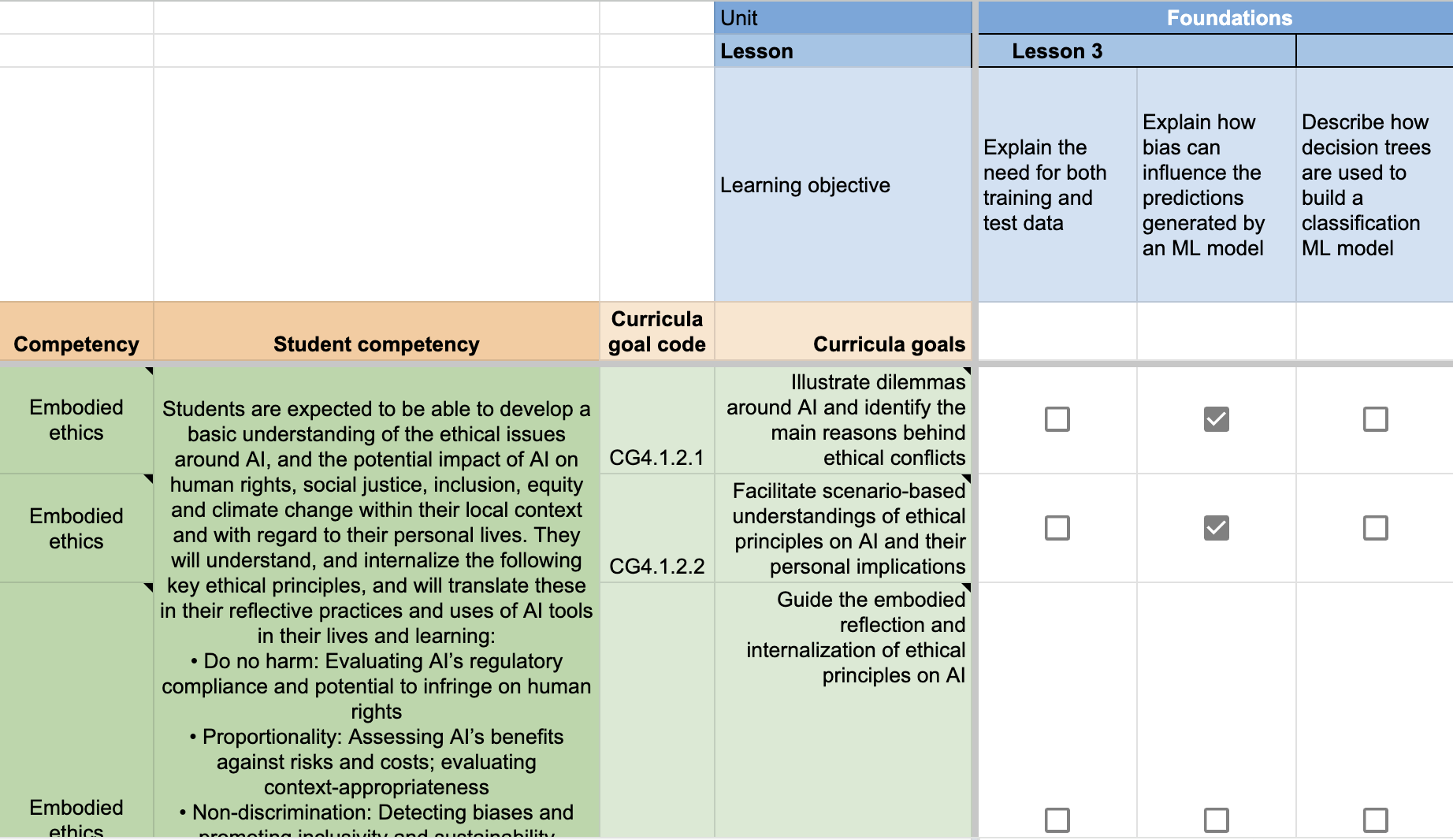

Working through the Experience AI offering, we compared each lesson objective with each curricular goal to see where they overlapped. We have made this mapping publicly available so that you can see this for yourself: Experience AI – UNESCO AI Competency framework students – learning objective mapping (rpf.io/unesco-mapping)

The first thing we discovered was that the objectives did not map one-to-one. For example, when we looked at a learning objective, we often felt that it covered more than one curricular goal from the framework. That’s not to say that the learning objective fully met each curricular goal, but rather that it covered elements of the goal and, in turn, of the student competency.

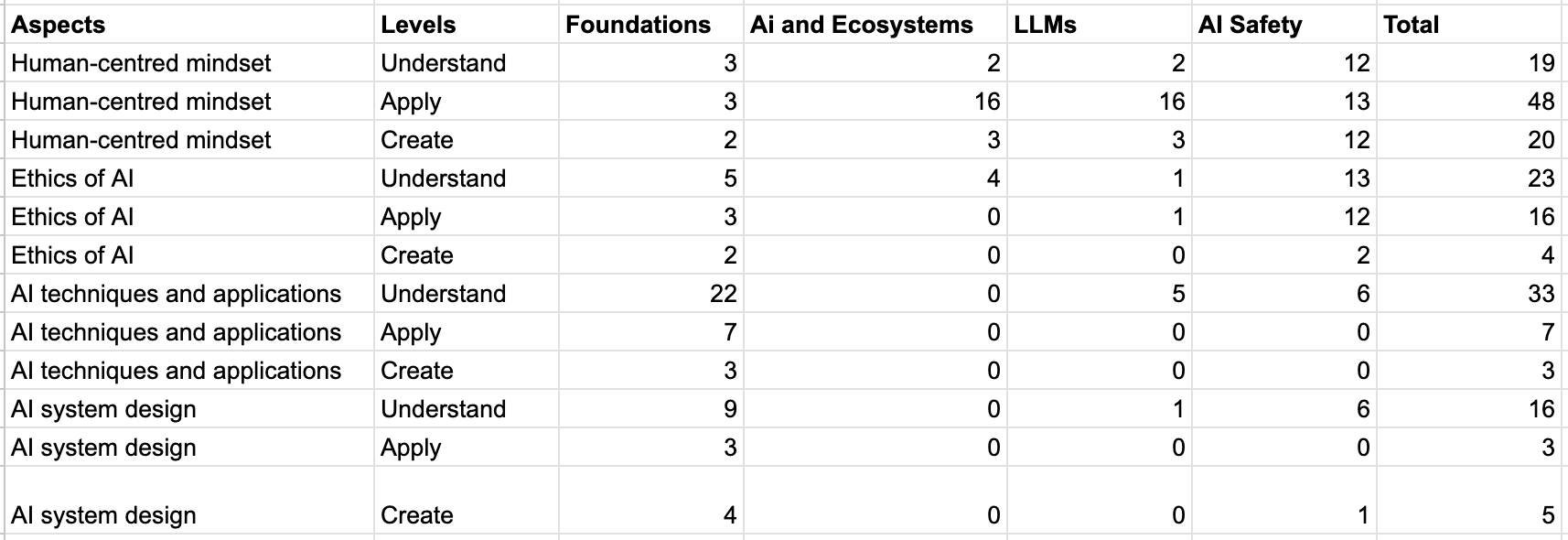

Once we had completed the mapping process, we analysed the results by totalling the number of objectives that had been mapped against each competency aspect and level within the framework.
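A tally like this can be sketched in a few lines of Python. This is an illustrative sketch only, not the actual analysis: the objective names and their mappings below are invented placeholders, and it assumes that every objective-to-goal pairing counts once, so an objective that maps to two curricular goals contributes two counts.

```python
from collections import Counter

# Hypothetical data: objective names and mappings are invented for illustration.
# Each objective can map to several curricular goals, identified here by
# (competency aspect, progression level).
objective_to_goals = {
    "objective_a": [("Human-centred mindset", "Understand"),
                    ("Ethics of AI", "Understand")],
    "objective_b": [("ML techniques and applications", "Understand")],
    "objective_c": [("Human-centred mindset", "Apply"),
                    ("AI system design", "Understand")],
}


def tally(mapping):
    """Count mappings per competency aspect and per progression level."""
    by_aspect = Counter()
    by_level = Counter()
    for goals in mapping.values():
        for aspect, level in goals:
            by_aspect[aspect] += 1
            by_level[level] += 1
    return by_aspect, by_level


by_aspect, by_level = tally(objective_to_goals)
for aspect, count in by_aspect.most_common():
    print(f"{aspect}: {count}")
for level, count in by_level.most_common():
    print(f"{level}: {count}")
```

Because the mapping is not one-to-one, the totals count pairings rather than distinct objectives: in this made-up example, three objectives produce five counts.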

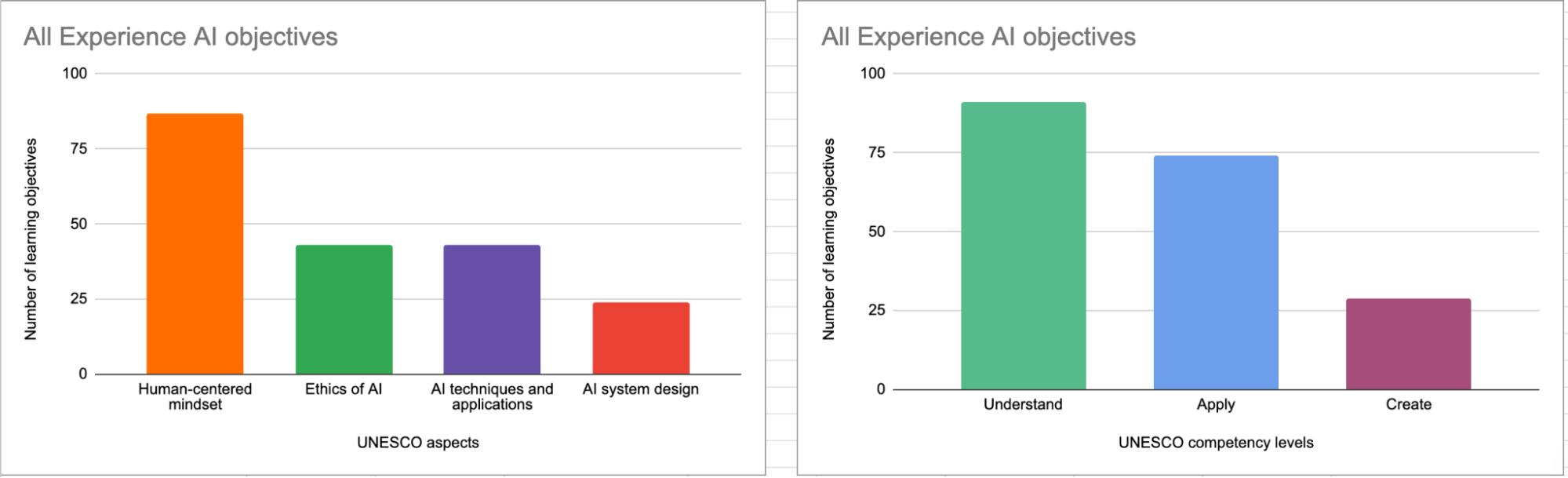

This provided us with an overall picture of where our resources are positioned against the framework. Whilst the majority of the objectives for all of the resources are in the ‘Human-centred mindset’ category, the analysis showed that there is still a relatively even spread of objectives in the other three categories (Ethics of AI, ML techniques and applications, and AI system design).

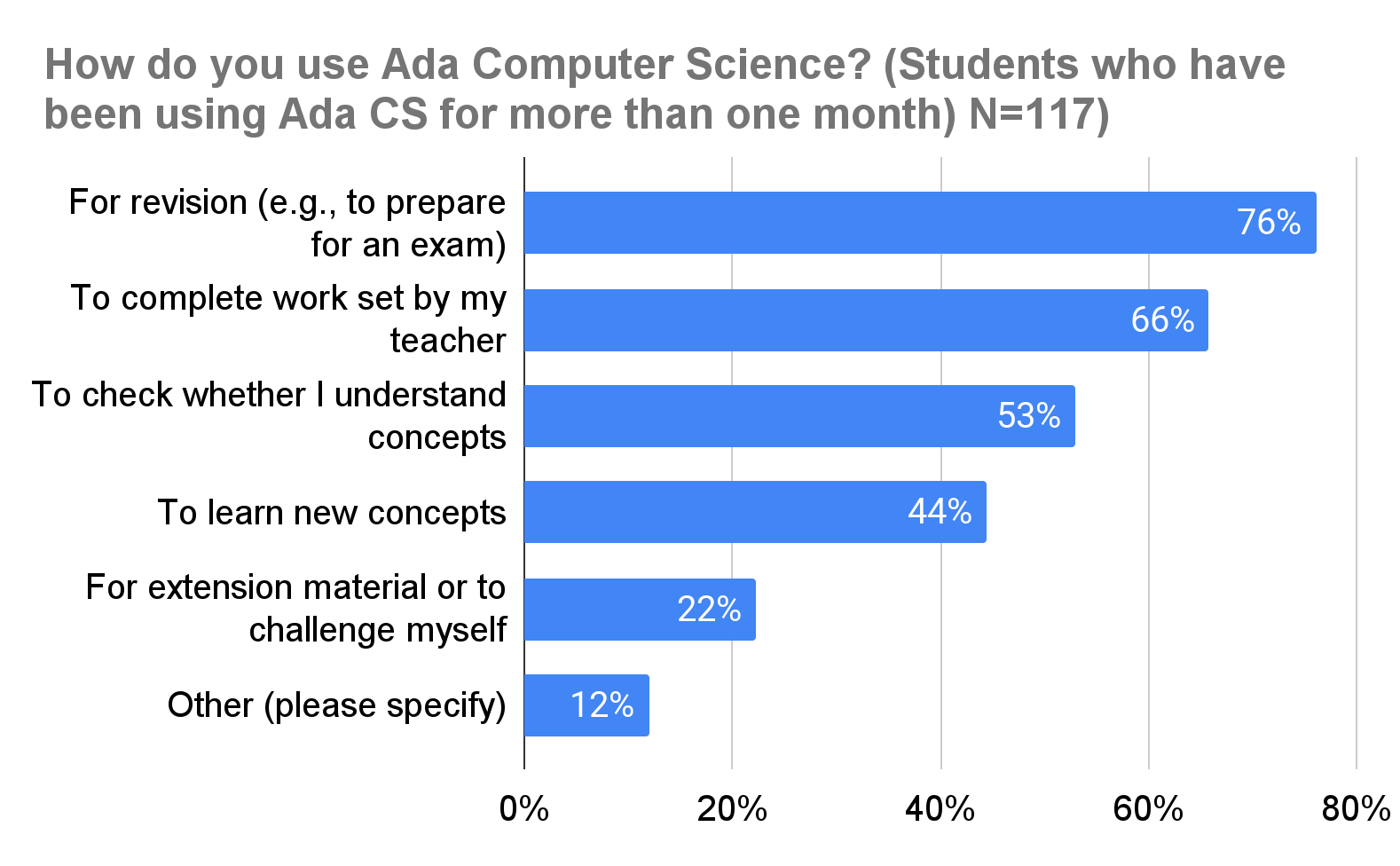

As the current resource offering is targeted at the entry level to AI literacy, it is unsurprising to see that the majority of the objectives were at the level of ‘Understand’. It was, however, interesting to see how many objectives were also at the ‘Apply’ level.

It is encouraging to see that the different resources from Experience AI map to different competencies in the framework. For example, the 6-lesson foundations unit aims to give students a basic understanding of how AI systems work and the data-driven approach to problem solving. In contrast, the AI safety resources focus more on the principles of Fairness, Accountability, Transparency, Privacy, and Security (FATPS), most of which fall more heavily under the ethics of AI and human-centred mindset categories of the competency framework.

What did we learn from the process?

Our principles align

We built the Experience AI resources on design principles based on the knowledge curated by Jane Waite and the Foundation’s researchers. One of our aims of the mapping process was to see if the principles that underpin the UNESCO competency framework align with our own.

Avoiding anthropomorphism

Anthropomorphism refers to the concept of attributing human characteristics to objects or living beings that aren’t human. For reasons outlined in the blog I previously wrote on the issue, a key design principle for Experience AI is to avoid anthropomorphism at all costs. In our resources, we are particularly careful with the language and images that we use. Putting the human in the process is a key way in which we can remind students that it is humans who design and are responsible for AI systems.

It was reassuring to see that the UNESCO framework has many curricular goals that align closely to this, for example:

- Foster an understanding that AI is human-led

- Facilitate an understanding on the necessity of exercising sufficient human control over AI

- Nurture critical thinking on the dynamic relationship between human agency and machine agency

SEAME

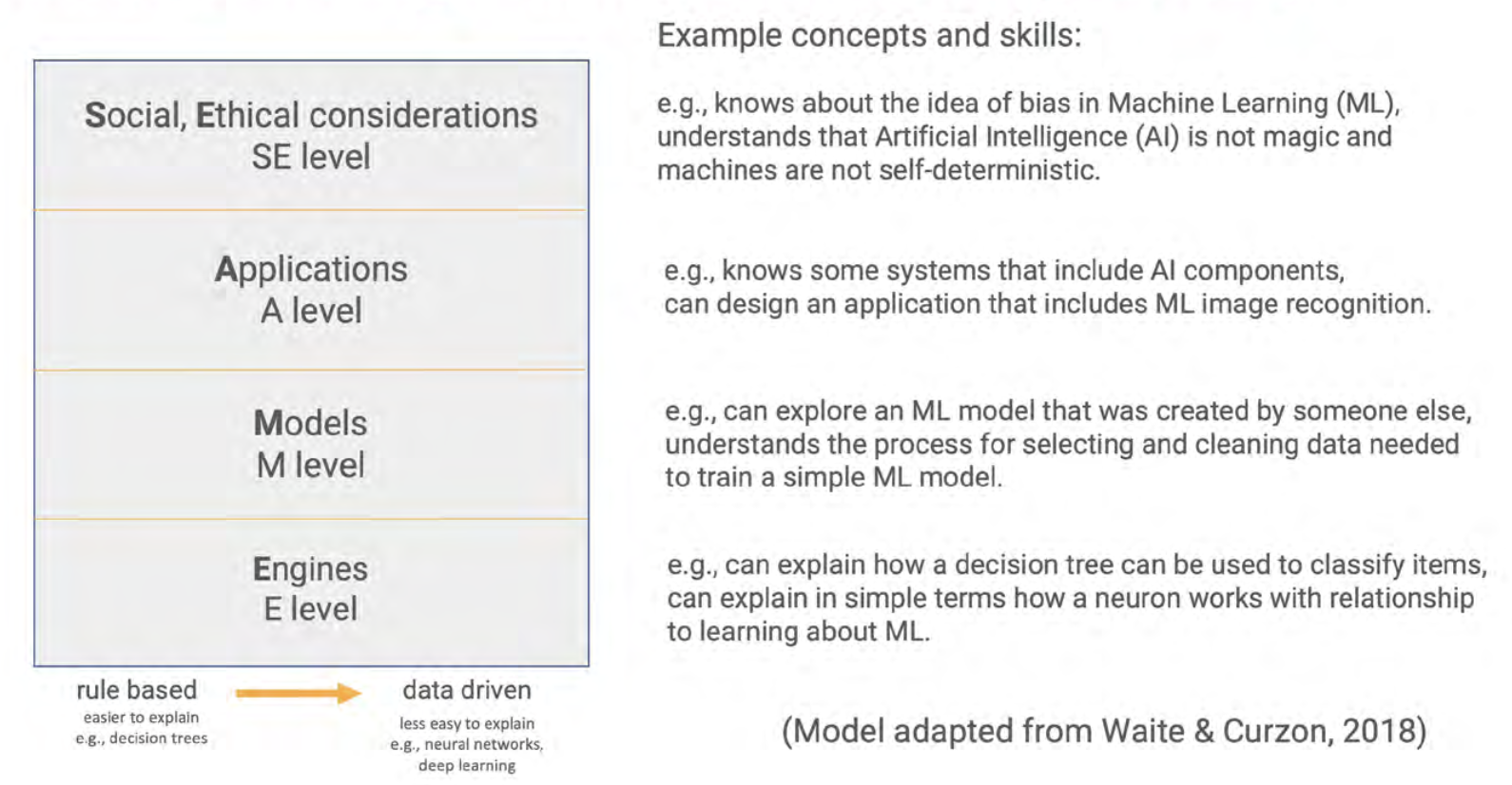

The SEAME framework created by Paul Curzon and Jane Waite offers a way for teachers, resource developers, and researchers to talk about the focus of AI learning activities by separating them into four layers: Social and Ethical (SE), Application (A), Models (M), and Engines (E).

The SEAME model and the UNESCO AI competency framework take two different approaches to categorising AI education — SEAME describes levels of abstraction for conceptual learning about AI systems, whereas the competency framework separates concepts into strands with progression. We found that although the alignment between the frameworks is not direct, the same core AI and machine learning concepts are broadly covered across both.

Computational thinking 2.0 (CT2.0)

The concept of computational thinking 2.0 (a data-driven approach) stems from research by Professor Matti Tedre and Dr Henriikka Vartiainen from the University of Eastern Finland. The essence of this approach establishes AI as a different way to solve problems using computers compared to a more traditional computational thinking approach (a rule-based approach). This does not replace the traditional computational approach, but instead requires students to approach the problem differently when using AI as a tool.

The UNESCO framework includes many references within its curricular goals that place the data-driven approach at the forefront of problem solving using AI, including:

- Develop conceptual knowledge on how AI is trained based on data

- Develop skills on assessing AI systems’ need for data, algorithms, and computing resources

Where we differ slightly is in the regular use of the term ‘algorithm’, particularly in the Understand and Apply levels of the framework. We have chosen to differentiate AI systems from traditional computational thinking approaches by avoiding the term ‘algorithm’ at the foundational stage of AI education. We believe that learners need a firm mental model of data-driven systems before they can understand that the Models and Engines layers of the SEAME model refer to algorithms (which would possibly correspond to the Create stage of the UNESCO framework).

We can identify areas for exploration

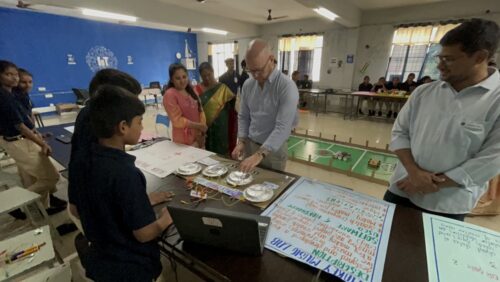

As part of the international expansion of Experience AI, we have been working with partners from across the globe to bring AI literacy education to students in their settings. Part of this process has involved working with our partners to localise the resources, but also to provide training on the concepts covered in Experience AI. During localisation and training, our partners often have lots of queries about the lesson on bias.

As a result, we decided to see whether the mapping taught us anything about this lesson in particular, and whether there was any learning we could take from it. On closer inspection, we found that the lesson covers two out of the three curricular goals for the Understand element of the ‘Ethics of AI’ category (Embodied ethics).

Specifically, we felt the lesson:

- Illustrates dilemmas around AI and identifies the main reasons behind ethical conflicts

- Facilitates scenario-based understandings of ethical principles on AI and their personal implications

What we felt isn’t covered in the lesson is:

- Guide the embodied reflection and internalisation of ethical principles on AI

Exploring this further, the framework describes this curricular goal as:

“Guide students to understand the implications of ethical principles on AI for their human rights, data privacy, safety, human agency, as well as for equity, inclusion, social justice and environmental sustainability. Guide students to develop embodied comprehension of ethical principles; and offer opportunities to reflect on personal attitudes that can help address ethical challenges (e.g. advocating for inclusive interfaces for AI tools, promoting inclusion in AI and reporting discriminatory biases found in AI tools).”

We realised that this doesn’t mean that the lesson on bias is ineffective or incomplete, but it does help us to think more deeply about the learning objective for the lesson. This may be something we will look to address in future iterations of the foundations unit or even in the development of new resources. What we have identified is a process that we can follow, which will help us with our decision making in the next phases of resource development.

How does this inform our next steps?

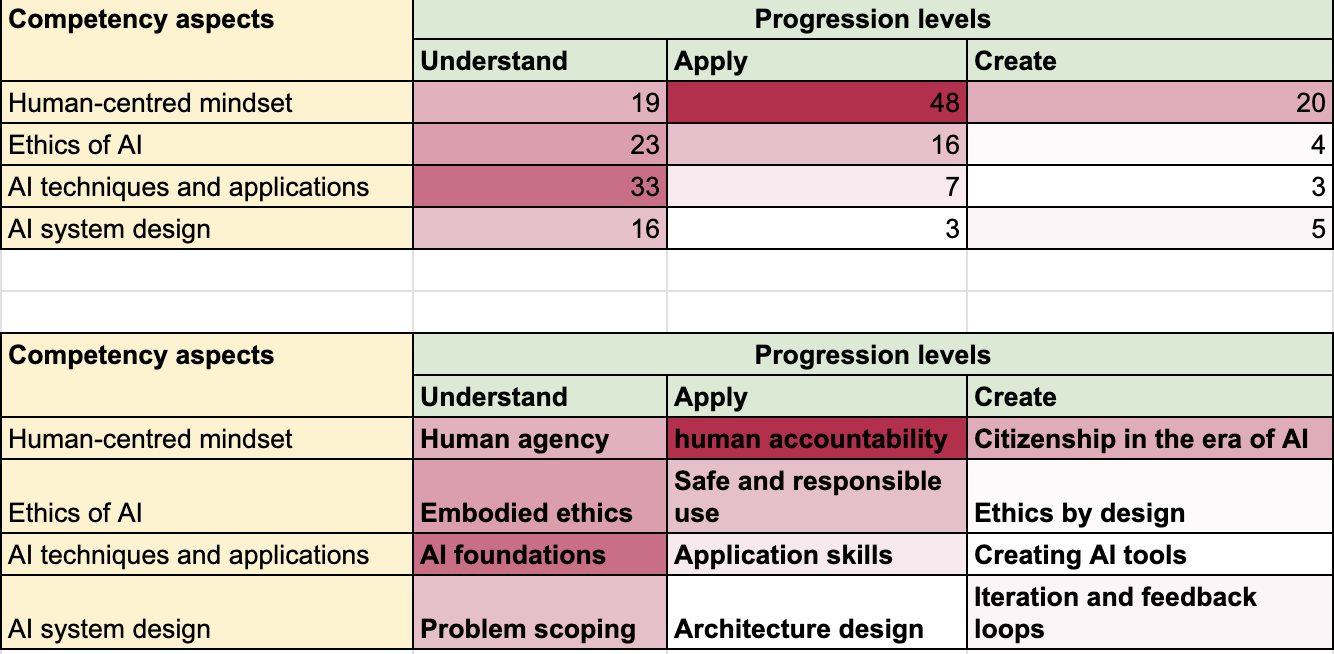

As part of the analysis of the resources, we created a simple heatmap of how the Experience AI objectives relate to the UNESCO progression levels. As with the bar charts, the heatmap indicated that the majority of the objectives sit within the Understand level of progression, with fewer in Apply, and fewest in Create. As previously mentioned, this is to be expected with the resources being “foundational”.
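The grid behind a heatmap of this kind can be sketched as a simple table of counts, with competency aspects as rows and progression levels as columns. The pairs below are invented for illustration and are not the real mapping data. The function names are hypothetical too.

```python
from collections import Counter

LEVELS = ["Understand", "Apply", "Create"]
ASPECTS = [
    "Human-centred mindset",
    "Ethics of AI",
    "ML techniques and applications",
    "AI system design",
]

# Hypothetical (aspect, level) pairs, one per objective-to-goal mapping.
pairs = [
    ("Human-centred mindset", "Understand"),
    ("Human-centred mindset", "Apply"),
    ("Human-centred mindset", "Apply"),
    ("Ethics of AI", "Understand"),
    ("ML techniques and applications", "Understand"),
    ("AI system design", "Understand"),
]


def build_grid(pairs):
    """Return one row of counts per aspect, ordered by LEVELS."""
    counts = Counter(pairs)
    return [[counts[(aspect, level)] for level in LEVELS] for aspect in ASPECTS]


def render(grid):
    """Format the grid as a plain-text table."""
    width = max(len(aspect) for aspect in ASPECTS)
    lines = [" " * width + "".join(f"{level:>12}" for level in LEVELS)]
    for aspect, row in zip(ASPECTS, grid):
        lines.append(f"{aspect:<{width}}" + "".join(f"{n:>12}" for n in row))
    return "\n".join(lines)


grid = build_grid(pairs)
print(render(grid))
```

Laying the counts out this way makes empty or unusually full cells easy to spot, which is the kind of prompt for further thought that a heatmap gives.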

The heatmap has, however, helped us to identify some interesting points about our resources that warrant further thought. For example, under the ‘Human-centred mindset’ competency aspect, there are more objectives under Apply than under Understand. For ‘AI system design’, architecture design is the least covered aspect of Apply.

Identifying these areas for investigation shows, again, that we can use what we learn from the UNESCO framework to help us make decisions.

What next?

This mapping process has been a very useful exercise in many ways for those of us working on AI literacy at the Raspberry Pi Foundation. The process of mapping the resources gave us an opportunity to have deep conversations about the learning objectives and question our own understanding of our resources. It was also very satisfying to see that the framework aligns well with our own research-informed design principles, such as the SEAME model and avoiding anthropomorphisation.

The mapping process has been a good starting point for us to understand UNESCO’s framework and we’re sure that it will act as a useful tool to help us make decisions around future enhancements to our foundational units and new free educational materials. We’re looking forward to applying what we’ve learnt to our future work!
