Have you heard of ChatGPT, Gemini, or Claude, but haven’t tried any of them yourself? Navigating the world of large language models (LLMs) might feel a bit daunting. However, with the right approach, these tools can really enhance your teaching and make classroom admin and planning easier and quicker.

That’s where the OCEAN prompting process comes in: it’s a straightforward framework designed to work with any LLM, helping you reliably get the results you want.

The great thing about the OCEAN process is that it takes the guesswork out of using LLMs. It helps you move past that ‘blank page syndrome’: that moment when you can ask the model anything but aren’t sure where to start. By focusing on clear objectives and guiding the model with the right context, you are much more likely to generate content that is spot on for your needs.

5 ways to make LLMs work for you using the OCEAN prompting process

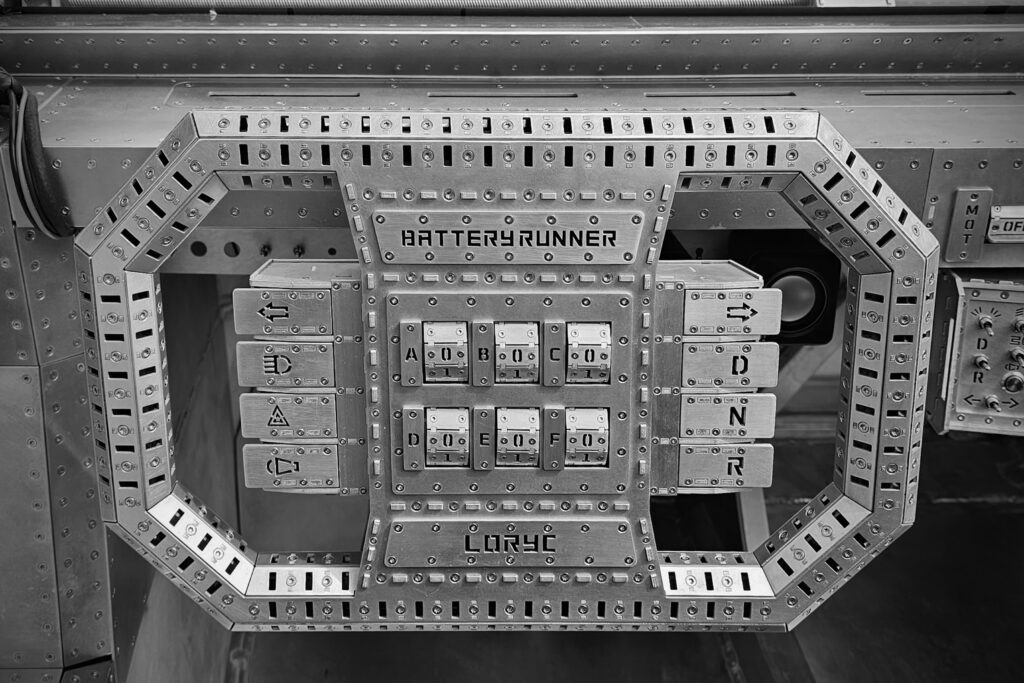

OCEAN’s name is an acronym: objective, context, examples, assess, negotiate — so let’s begin at the top.

1. Define your objective

Think of this as setting a clear goal for your interaction with the LLM. A well-defined objective ensures that the responses you get are focused and relevant.

Maybe you need to:

- Draft an email to parents about an upcoming school event

- Create a beginner’s guide for a new Scratch project

- Come up with engaging quiz questions for your next science lesson

By knowing exactly what you want, you can give the LLM clear directions to follow, turning a broad idea into a focused task.

2. Provide some context

This is where you give the LLM the background information it needs to deliver the right kind of response. Think of it as setting the scene and providing some of the important information about why, and for whom, you are making the document.

You might include:

- The length of the document you need

- Who your audience is — their age, profession, or interests

- The tone and style you’re after, whether that’s formal, informal, or somewhere in between

All of this helps the LLM include the bigger picture in its analysis and tailor its responses to suit your needs.

3. Include examples

By showing the LLM what you’re aiming for, you make it easier for the model to deliver the kind of output you want. This is called one-shot, few-shot, or many-shot prompting, depending on how many examples you provide.

You can:

- Include URL links

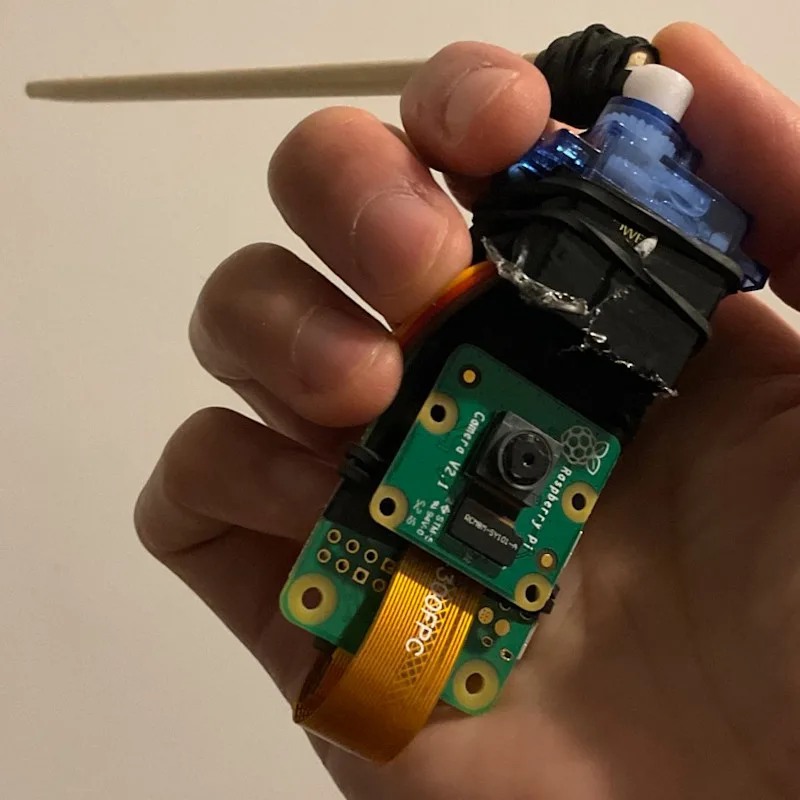

- Upload documents and images (some LLMs don’t have this feature)

- Copy and paste other text examples into your prompt

Without any examples at all (zero-shot prompting), you’ll still get a response, but it might not be exactly what you had in mind. Providing examples is like giving a recipe to follow that includes pictures of the desired result, rather than just vague instructions — it helps to ensure the final product comes out the way you want it.
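If you like to see ideas as code, here is a minimal Python sketch of how the first three OCEAN steps could be combined into a single prompt. The function name `build_ocean_prompt`, the section labels, and the sample email text are all made up for illustration; they are not part of the OCEAN process or of any LLM platform, and you can get the same result by typing the prompt by hand.

```python
def build_ocean_prompt(objective, context=None, examples=None):
    """Combine an objective, context items, and example texts into one prompt.

    Hypothetical helper for illustration: the layout below is one
    reasonable way to organise a prompt, not a required format.
    """
    sections = [f"Objective: {objective.strip()}"]

    if context:
        # Each context item (length, audience, tone, ...) becomes a bullet.
        bullet_lines = "\n".join(f"- {item}" for item in context)
        sections.append(f"Context:\n{bullet_lines}")

    # Zero examples gives a zero-shot prompt, one a one-shot prompt, and so on.
    for number, example in enumerate(examples or [], start=1):
        sections.append(f"Example {number}:\n{example.strip()}")

    return "\n\n".join(sections)


prompt = build_ocean_prompt(
    objective="Draft an email to parents about an upcoming school event",
    context=[
        "Length: about 150 words",
        "Audience: parents of students aged 11 to 12",
        "Tone: informal and friendly",
    ],
    # Invented sample text standing in for an email you have sent before.
    examples=["Dear parents, we are delighted to invite you to our summer fair."],
)
print(prompt)
```

Leaving out the `examples` argument gives you a zero-shot prompt, so you can compare the model's responses with and without examples.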

4. Assess the LLM’s response

This is where you check whether what you’ve got aligns with your original goal and meets your standards.

Keep an eye out for:

- Hallucinations: incorrect information that’s presented as fact

- Misunderstandings: did the LLM interpret your request correctly?

- Bias: make sure the output is fair and aligned with diversity and inclusion principles

A good assessment ensures that the LLM’s response is accurate and useful. Remember, LLMs don’t make decisions — they just follow instructions, so it’s up to you to guide them. This brings us neatly to the next step: negotiate the results.

5. Negotiate the results

If the first response isn’t quite right, don’t worry — that’s where negotiation comes in. You should give the LLM frank and clear feedback and tweak the output until it’s just right. (Don’t worry, it doesn’t have any feelings to be hurt!)

When you negotiate, tell the LLM if it made any mistakes, and what you did and didn’t like in the output. Tell it to ‘Add a bit at the end about …’ or ‘Stop using the word “delve” all the time!’
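For readers who want to picture the negotiation as data, the sketch below models a chat as a list of turns and adds your feedback as a new turn. The `role` and `content` keys follow a convention used by several chat tools, but treat them, the helper name `add_feedback`, and the wording of the revision request as assumptions made for this example rather than any platform's actual interface.

```python
def add_feedback(conversation, feedback_points):
    """Return a new conversation with the feedback added as one user turn.

    Hypothetical helper: it only builds the message and does not
    contact any LLM.
    """
    bullet_lines = "\n".join(f"- {point}" for point in feedback_points)
    message = f"Please revise your last response:\n{bullet_lines}"
    # Build a new list so the original conversation is left unchanged.
    return conversation + [{"role": "user", "content": message}]


conversation = [
    {"role": "user", "content": "Draft an email to parents about an upcoming school event."},
    {"role": "assistant", "content": "(the model's first draft would appear here)"},
]

conversation = add_feedback(
    conversation,
    [
        "Stop using the word 'delve' all the time!",
        "Make the opening sentence shorter.",
    ],
)

for turn in conversation:
    print(f"{turn['role']}: {turn['content']}\n")
```

Keeping the earlier turns in the list mirrors what happens in a chat window: the model sees its first draft alongside your feedback, so it can revise rather than start again.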

How to get the tone of the document just right

Another excellent tip is to use descriptors for the desired tone of the document in your negotiations with the LLM, such as, ‘Make that output slightly more casual.’

In this way, you can guide the LLM to be:

- Approachable: the language will be warm and friendly, making the content welcoming and easy to understand

- Casual: expect laid-back, informal language that feels more like a chat than a formal document

- Concise: the response will be brief and straight to the point, cutting out any fluff and focusing on the essentials

- Conversational: the tone will be natural and relaxed, as if you’re having a friendly conversation

- Educational: the language will be clear and instructive, with step-by-step explanations and helpful details

- Formal: the response will be polished and professional, using structured language and avoiding slang

- Professional: the tone will be business-like and precise, with industry-specific terms and a focus on clarity
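As a small illustration, the tone descriptors above can be turned into ready-made feedback sentences. This is only a sketch: the names `TONES` and `tone_request`, and the `strength` parameter, are invented for this example.

```python
# The seven tone descriptors from the list above.
TONES = {
    "approachable", "casual", "concise", "conversational",
    "educational", "formal", "professional",
}


def tone_request(tone, strength="slightly"):
    """Build a feedback sentence asking the LLM to shift its tone."""
    tone = tone.strip().lower()
    if tone not in TONES:
        raise ValueError(f"Unknown tone: {tone!r}")
    return f"Make that output {strength} more {tone}."


print(tone_request("casual"))
print(tone_request("Concise", strength="much"))
```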

Remember: LLMs have no idea what their output says or means; they work like very powerful autocomplete tools, similar to those in text messaging apps. It’s up to you, the human, to make sure they are on the right track.

Don’t forget the human edit

Even after you’ve refined the LLM’s response, it’s important to do a final human edit. This is your chance to make sure everything’s perfect, checking for accuracy, clarity, and anything the LLM might have missed. LLMs are great tools, but they don’t catch everything, so your final touch ensures the content is just right.

At a certain point, it’s also simpler and less time-consuming to alter individual words in the output yourself, or to use your unique expertise to massage the language for just the right tone and clarity, than to go back to the LLM for a further iteration.

Ready to dive in?

Now it’s time to put the OCEAN process into action! Log in to your preferred LLM platform, take a simple prompt you’ve used before, and see how the process improves the output. Then share your findings with your colleagues. This hands-on approach will help you see the difference the OCEAN method can make!

Sign up for a free account at one of these platforms:

- ChatGPT (chat.openai.com)

- Gemini (gemini.google.com)

By embracing the OCEAN prompting process, you can quickly and easily make LLMs a valuable part of your teaching toolkit. The process helps you get the most out of these powerful tools, while keeping things ethical, fair, and effective.

If you’re excited about using AI in your classroom preparation, and want to build more confidence in integrating it responsibly, we’ve got great news for you. You can sign up for our totally free online course on edX called ‘Teach Teens Computing: Understanding AI for Educators’ (helloworld.cc/ai-for-educators). In this course, you’ll learn all about the OCEAN process and how to better integrate generative AI into your teaching practice. It’s a fantastic way to ensure you’re using these technologies responsibly and ethically while making the most of what they have to offer. Join us and take your AI skills to the next level!

A version of this article also appears in Hello World issue 25.
