Since we released the first Raspberry Pi camera module back in 2013, users have been clamouring for better access to the internals of the camera system, and even to be able to attach camera sensors of their own to the Raspberry Pi board. Today we’re releasing our first version of a new open source camera stack which makes these wishes a reality.

(Note: in what follows, you may wish to refer to the glossary at the end of this post.)

We’ve had the building blocks for connecting other sensors and providing lower-level access to the image processing for a while, but Linux has been missing a convenient way for applications to take advantage of this. In late 2018 a group of Linux developers started a project called libcamera to address that. We’ve been working with them since then, and we’re pleased now to announce a camera stack that operates within this new framework.

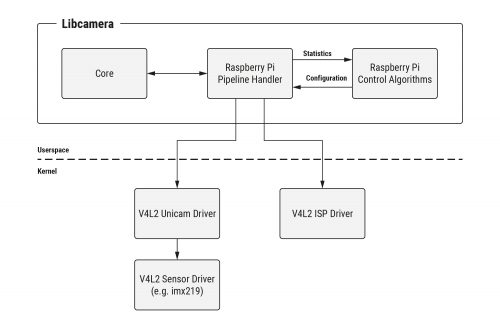

Here’s how our work fits into the libcamera project.

We’ve supplied a Pipeline Handler that glues together our drivers and control algorithms, and presents them to libcamera with the API it expects.

Here’s a little more on what this has entailed.

V4L2 drivers

V4L2 (Video for Linux 2) is the Linux kernel driver framework for devices that manipulate images and video. It provides a standardised mechanism for passing video buffers to, and/or receiving them from, different hardware devices. Whilst it has proved somewhat awkward as a means of driving entire complex camera systems, it can nonetheless provide the basis of the hardware drivers that libcamera needs to use.

Consequently, we’ve upgraded both the version 1 (Omnivision OV5647) and version 2 (Sony IMX219) camera drivers so that they feature a variety of modes and resolutions, operating in the standard V4L2 manner. Support for the new Raspberry Pi High Quality Camera (using the Sony IMX477) will be following shortly. The Broadcom Unicam driver – also V4L2‑based – has been enhanced too, signalling the start of each camera frame to the camera stack.

Finally, dumping raw camera frames (in Bayer format) into memory is of limited value, so the V4L2 Broadcom ISP driver provides all the controls needed to turn raw images into beautiful pictures!

Configuration and control algorithms

Of course, being able to configure Broadcom’s ISP doesn’t help you to know what parameters to supply. For this reason, Raspberry Pi has developed from scratch its own suite of ISP control algorithms (sometimes referred to generically as 3A Algorithms), and these are made available to our users as well. Some of the most well known control algorithms include:

- AEC/AGC (Auto Exposure Control/Auto Gain Control): this monitors image statistics into order to drive the camera exposure to an appropriate level.

- AWB (Auto White Balance): this corrects for the ambient light that is illuminating a scene, and makes objects that appear grey to our eyes come out actually grey in the final image.

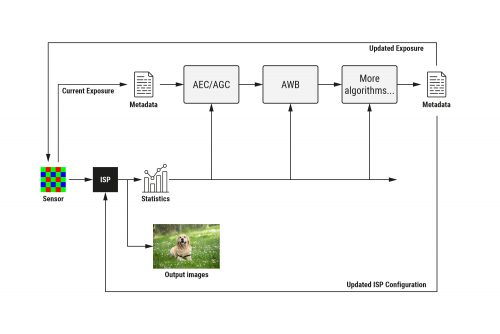

But there are many others too, such as ALSC (Auto Lens Shading Correction, which corrects vignetting and colour variation across an image), and control for noise, sharpness, contrast, and all other aspects of image processing. Here’s how they work together.

The control algorithms all receive statistics information from the ISP, and cooperate in filling in metadata for each image passing through the pipeline. At the end, the metadata is used to update control parameters in both the image sensor and the ISP.

Previously these functions were proprietary and closed source, and ran on the Broadcom GPU. Now, the GPU just shovels pixels through the ISP hardware block and notifies us when it’s done; practically all the configuration is computed and supplied from open source Raspberry Pi code on the ARM processor. A shim layer still exists on the GPU, and turns Raspberry Pi’s own image processing configuration into the proprietary functions of the Broadcom SoC.

To help you configure Raspberry Pi’s control algorithms correctly for a new camera, we include a Camera Tuning Tool. Or if you’d rather do your own thing, it’s easy to modify the supplied algorithms, or indeed to replace them entirely with your own.

Why libcamera?

Whilst ISP vendors are in some cases contributing open source V4L2 drivers, the reality is that all ISPs are very different. Advertising these differences through kernel APIs is fine – but it creates an almighty headache for anyone trying to write a portable camera application. Fortunately, this is exactly the problem that libcamera solves.

We provide all the pieces for Raspberry Pi-based libcamera systems to work simply “out of the box”. libcamera remains a work in progress, but we look forward to continuing to help this effort, and to contributing an open and accessible development platform that is available to everyone.

Summing it all up

So far as we know, there are no similar camera systems where large parts, including at least the control (3A) algorithms and possibly driver code, are not closed and proprietary. Indeed, for anyone wishing to customise a camera system – perhaps with their own choice of sensor – or to develop their own algorithms, there would seem to be very few options – unless perhaps you happen to be an extremely large corporation.

In this respect, the new Raspberry Pi Open Source Camera System is providing something distinctly novel. For some users and applications, we expect its accessible and non-secretive nature may even prove quite game-changing.

What about existing camera applications?

The new open source camera system does not replace any existing camera functionality, and for the foreseeable future the two will continue to co-exist. In due course we expect to provide additional libcamera-based versions of raspistill, raspivid and PiCamera – so stay tuned!

Where next?

If you want to learn more about the libcamera project, please visit https://libcamera.org.

To try libcamera for yourself with a Raspberry Pi, please follow the instructions in our online documentation, where you’ll also find the full Raspberry Pi Camera Algorithm and Tuning Guide.

If you’d like to know more, and can’t find an answer in our documentation, please go to the Camera Board forum. We’ll be sure to keep our eyes open there to pick up any of your questions.

Acknowledgements

Thanks to Naushir Patuck and Dave Stevenson for doing all the really tricky bits (lots of V4L2-wrangling).

Thanks also to the libcamera team (Laurent Pinchart, Kieran Bingham, Jacopo Mondi and Niklas Söderlund) for all their help in making this project possible.

Glossary

3A, 3A Algorithms: refers to AEC/AGC (Auto Exposure Control/Auto Gain Control), AWB (Auto White Balance) and AF (Auto Focus) algorithms, but may implicitly cover other ISP control algorithms. Note that Raspberry Pi does not implement AF (Auto Focus), as none of our supported camera modules requires it

AEC: Auto Exposure Control

AF: Auto Focus

AGC: Auto Gain Control

ALSC: Auto Lens Shading Correction, which corrects vignetting and colour variations across an image. These are normally caused by the type of lens being used and can vary in different lighting conditions

AWB: Auto White Balance

Bayer: an image format where each pixel has only one colour component (one of R, G or B), creating a sort of “colour mosaic”. All the missing colour values must subsequently be interpolated. This is a raw image format meaning that no noise, sharpness, gamma, or any other processing has yet been applied to the image

CSI-2: Camera Serial Interface (version) 2. This is the interface format between a camera sensor and Raspberry Pi

GPU: Graphics Processing Unit. But in this case it refers specifically to the multimedia coprocessor on the Broadcom SoC. This multimedia processor is proprietary and closed source, and cannot directly be programmed by Raspberry Pi users

ISP: Image Signal Processor. A hardware block that turns raw (Bayer) camera images into full colour images (either RGB or YUV)

Raw: see Bayer

SoC: System on Chip. The Broadcom processor at the heart of all Raspberry Pis

Unicam: the CSI-2 receiver on the Broadcom SoC on the Raspberry Pi. Unicam receives pixels being streamed out by the image sensor

V4L2: Video for Linux 2. The Linux kernel driver framework for devices that process video images. This includes image sensors, CSI-2 receivers, and ISPs

Website: LINK